I have an HA Proxmox cluster with three nodes.

I have replication between two of the nodes. Each of those two nodes has a ZFS pool that is used for replication, and I have replication rules set up between those two ZFS servers for HA.

The third node (called virtual) is older and does not have any ZFS disk, so I don't use it as a replication target.

Today one of the ZFS nodes died, and one of the containers (CT) somehow ended up on the third, non-ZFS node. I don't know how.

Because this third node has no ZFS pool (and no replicated volumes), the container is in a broken state: the disk it references does not exist there. Now I cannot migrate this CT to the correct remaining node.

When I try to migrate the CT to the correct node I get this error:

Replication Log:

    2022-03-21 17:53:01 105-0: start replication job
    2022-03-21 17:53:01 105-0: guest => CT 105, running => 0
    2022-03-21 17:53:01 105-0: volumes => rpool:subvol-105-disk-0
    2022-03-21 17:53:01 105-0: create snapshot '__replicate_105-0_1647881581__' on rpool:subvol-105-disk-0
    2022-03-21 17:53:01 105-0: end replication job with error: zfs error: For the delegated permission list, run: zfs allow|unallow

Obviously the third node doesn't have the rpool storage, so I don't know why Proxmox decided to move that CT there. How can I start that container on the remaining node? The appropriate disk exists on the server that has the ZFS pool, but I can't just migrate the CT to it.

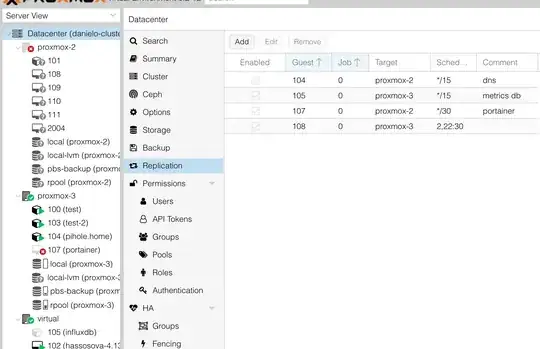

Here is a screenshot of my current cluster state and the replication tasks. As you can see, replication only runs between nodes proxmox-2 and proxmox-3, which are the ones with ZFS storage. The container I'm talking about is the one with ID 105.