Besides whatever metadata the RAID controller uses, are there any differences between these two arrays (in terms of data layout, performance, reliability)?

Yes. RAID5 uses a single, rotating parity while RAID6 uses two. You may be visualizing dedicated parity disks, but they're in fact rotating.

RAID5 should be rather straight-forward:

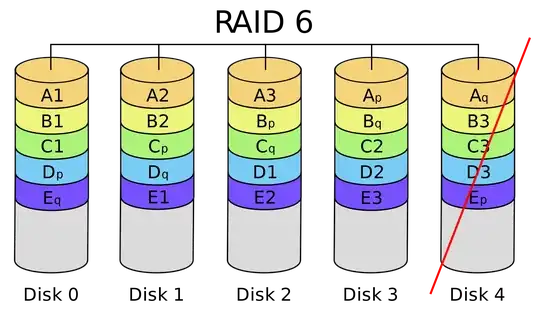

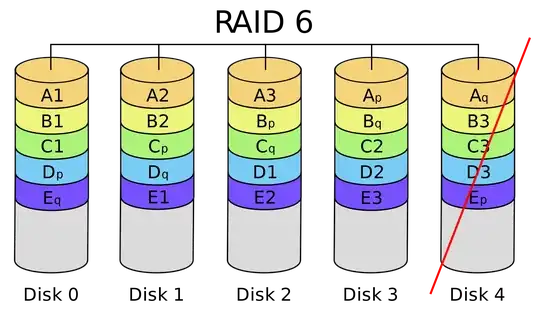

With RAID6, imagine the last disk missing:

diagrams courtesy of Wikipedia [*1]

The layouts obviously differ, and a degraded RAID6 requires significant extra fetching and recalculation to compensate for the missing disk in contrast to an intact RAID5 array.

For instance, instead of reading B3 directly, any three chunks out of [B1, B2, Bp, Bq] need to be read to reconstruct B3 - note that this data reconstruction is only temporary and needs to be done each time B3 is accessed. Also note that this 'read amplification' for random reads increases with the number of disks. For longer, sequential reads, the other chunks would have to be read anyway.

The degraded RAID6 array is expected to perform much worse, depending on the workload and the RAID controller implementation and caching. RAID implementations tend to be optimized for normal operation and stability, so a degraded array may perform below expectations, even with a large cache.

Could I convert a RAID6 array with one disk missing to a RAID5 of one less expected disk with minimal "reshaping"/"rewriting"?

Yes, in theory, since all data can be reconstructed. In practice that depends on the capabilities of the RAID controller at hand. Since it requires a special migration algorithm, implementation is not too likely. Personally, I don't think I've ever seen that option but then again I haven't been looking for it either.

[*1] You should note that there are different ways to map the data in RAID arrays. The above diagrams show the simplest, not optimized schemes. Other schemes would optimize specific workloads and a RAID5 scheme for sequential read access could be

A1-A2-A3-Ap

B2-B3-Bp-B1

C3-Cp-C1-C2

Dp-D1-D2-D3