We have just installed a cluster of 6 Proxmox servers, using 3 nodes as Ceph storage, and 3 nodes as compute nodes.

We are experiencing strange and critical issues with the performances and stability of our cluster.

VMs and Proxmox web access tends to hang for no obvious reason, from a few seconds to a few minutes - when accessing via SSH, RDP or VNC console directly.

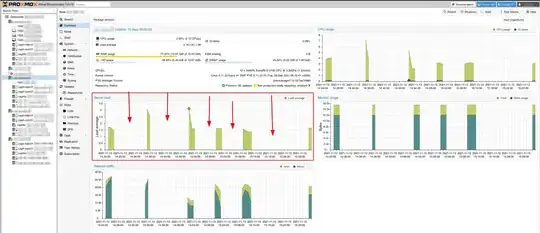

Even the Proxmox hosts seem to be out of reach, as can be seen in this monitoring capture. This also creates Proxmox cluster issues with some servers falling out of sync.

For instance, when testing ping between host nodes, it would work perfectly a few pings, hang, carry on (without any pingback time increase - still <1ms), hang again, etc.

We had some performance issues initially, but those have been fixed by adjusting the NICs' MTU to 9000 (+1300% read/write improvement). Now we need to get all this stable, because right now it's not production ready.

Hardware configuration

We have a similar network architecture to the one described in Ceph's official doc, with a 1 Gbps public network, and a 10 Gbps cluster network.

Those are connected to two physical network cards for each of the 6 servers.

Storage server nodes:

- CPU: Xeon E-2136 (6 cores, 12 threads), 3.3 GHz, Turbo 4.5 GHz

- RAM: 16 GB

- Storage:

- 2x RAID 1 256 GB NVMe, LVM

- system root logical volume: 15 GB (~55% free)

- swap: 7.4 GB

- WAL for OSD2: 80 GB

- 4 TB SATA SSD (OSD1)

- 12 TB SATA HDD (OSD2)

- 2x RAID 1 256 GB NVMe, LVM

- Network Interface Controller:

- Intel Corporation I350 Gigabit: connected to public 1 Gbps network

- Intel Corporation 82599 10 Gigabit: connected to 10 Gbps cluster (internal) network

Compute server nodes:

- CPU: Xeon E-2136 (6 cores, 12 threads), 3.3 GHz, Turbo 4.5 GHz

- RAM: 64 GB

- Storage:

- 2x RAID 1 256 GB SATA SSD

- system root logical volume: 15 GB (~65% free)

- 2x RAID 1 256 GB SATA SSD

Software: (on all 6 nodes)

- Proxmox 7.0-13, installed on top of Debian 11

- Ceph v16.2.6, installed with Proxmox GUI

- Ceph Monitor on each storage node

- Ceph manager on storage node 1 + 3

Ceph configuration

ceph.conf of the cluster:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 192.168.0.100/30

fsid = 97637047-5283-4ae7-96f2-7009a4cfbcb1

mon_allow_pool_delete = true

mon_host = 1.2.3.100 1.2.3.101 1.2.3.102

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 1.2.3.100/30

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mds]

keyring = /var/lib/ceph/mds/ceph-$id/keyring

[mds.asrv-pxdn-402]

host = asrv-pxdn-402

mds standby for name = pve

[mds.asrv-pxdn-403]

host = asrv-pxdn-403

mds_standby_for_name = pve

[mon.asrv-pxdn-401]

public_addr = 1.2.3.100

[mon.asrv-pxdn-402]

public_addr = 1.2.3.101

[mon.asrv-pxdn-403]

public_addr = 1.2.3.102

Questions:

- Is our architecture correct?

- Should the Ceph Monitors and Managers be accessed through the public network? (Which is what Proxmox's default configuration gave us)

- Does anyone know where these disturbances/instabilities come from and how to fix those?

[edit]

- Is it correct to use a default pool size of 3, when you have 3 storage nodes? I was initially temped by using 2, but couldn't find similar examples and decided to use the default config.

Noticed issues

- We noticed that arping somehow is returning pings from two MAC addresses (public NIC and private NIC), which doesn't make any sens since these are seperate NICs, linked by a separate switch. This is maybe part of the network issue.

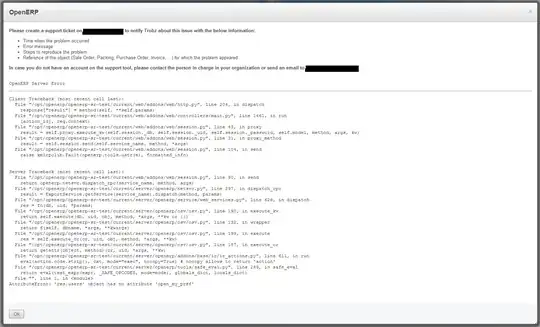

- During a backup task on one of the VMs (backup to a Proxmox Backup Server, physically remote), somehow it seems to affect the cluster. The VM gets stuck in backup/locked mode, even though the backup seems to have finished properly (visible and accessible on the backup server).

- Since the first backup issue, Ceph has been trying to rebuild itself, but hasn't managed to do so. It is in a degraded state, indicating that it lacks an MDS daemon. However, I double check and there are working MDS daemons on storage node 2 & 3. It was working on rebuilding itself until it got stuck in this state.

Here's the status:

root@storage-node-2:~# ceph -s

cluster:

id: 97637047-5283-4ae7-96f2-7009a4cfbcb1

health: HEALTH_WARN

insufficient standby MDS daemons available

Slow OSD heartbeats on back (longest 10055.902ms)

Slow OSD heartbeats on front (longest 10360.184ms)

Degraded data redundancy: 141397/1524759 objects degraded (9.273%), 156 pgs degraded, 288 pgs undersized

services:

mon: 3 daemons, quorum asrv-pxdn-402,asrv-pxdn-401,asrv-pxdn-403 (age 4m)

mgr: asrv-pxdn-401(active, since 16m)

mds: 1/1 daemons up

osd: 6 osds: 4 up (since 22h), 4 in (since 21h)

data:

volumes: 1/1 healthy

pools: 5 pools, 480 pgs

objects: 691.68k objects, 2.6 TiB

usage: 5.2 TiB used, 24 TiB / 29 TiB avail

pgs: 141397/1524759 objects degraded (9.273%)

192 active+clean

156 active+undersized+degraded

132 active+undersized

[edit 2]

root@storage-node-2:~# ceph osd tree ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF -1 43.65834 root default -3 14.55278 host asrv-pxdn-401 0 hdd 10.91409 osd.0 up 1.00000 1.00000 3 ssd 3.63869 osd.3 up 1.00000 1.00000 -5 14.55278 host asrv-pxdn-402 1 hdd 10.91409 osd.1 up 1.00000 1.00000 4 ssd 3.63869 osd.4 up 1.00000 1.00000 -7 14.55278 host asrv-pxdn-403 2 hdd 10.91409 osd.2 down 0 1.00000 5 ssd 3.63869 osd.5 down 0 1.00000