(Originally posted on DBA.StackExchange.com but closed, hopefully more relevant here.)

Alexander and the Terrible, Horrible, No Good, Very Bad...backups.

The Setup:

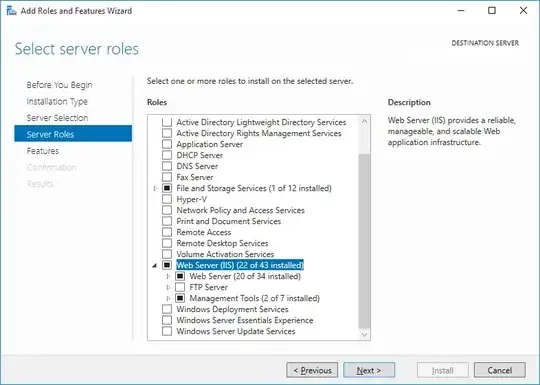

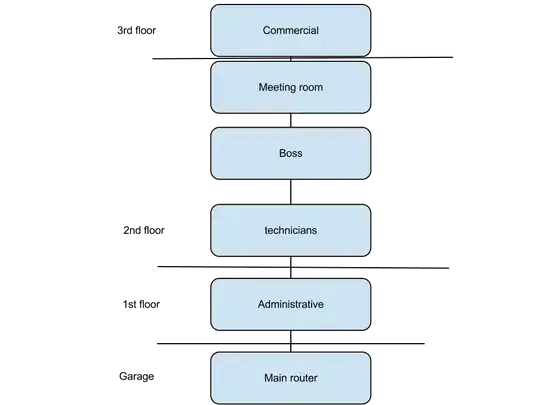

I have an on-premise SQL Server 2016 Standard Edition instance running on a virtual machine from VMWare.

@@Version:

Microsoft SQL Server 2016 (SP2-CU17) (KB5001092) - 13.0.5888.11 (X64) Mar 19 2021 19:41:38 Copyright (c) Microsoft Corporation Standard Edition (64-bit) on Windows Server 2016 Datacenter 10.0 (Build 14393: ) (Hypervisor)

The server itself is currently allocated 8 virtual processors, has 32 GB of memory, and all the disks are NVMes which get around 1 GB/sec of I/O. The databases themselves live on the G: drive, and the backups are separately stored on the P: drive. The total size across all of the databases is about 500 GB (before being compressed into the backup files themselves).

The maintenance plan runs once a night (around 10:30 PM) to do a full backup on every database on the server. Nothing else out of the ordinary is running on the server, nor is anything else running at that time in particular. The Power Plan off the server is set to "Balanced" (and "Turn off hard disk after" is set to 0 minutes aka never turn it off).

What Happened:

For the last year or so, the total runtime for the maintenance plan job took about 15 minutes total to complete. Since last week, it has skyrocketed to taking about 40x as long, about 15 hours to complete.

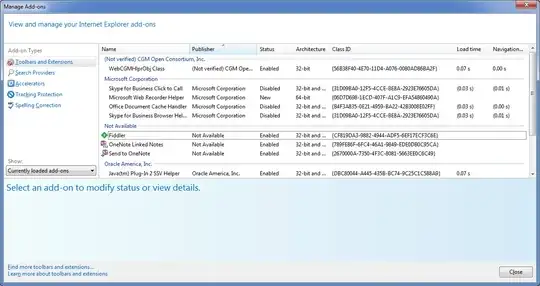

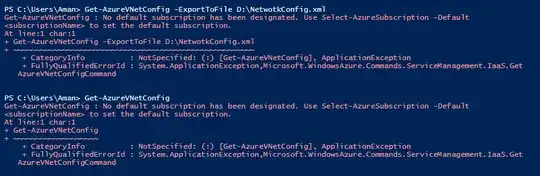

The only thing I'm aware of changing on the same day the maintenance plan slowed down was the following Windows updates were installed on the machine prior to the maintenance plan running:

We also have another similarly provisioned SQL Server instance on another VM that underwent the same Windows updates, and then subsequently experienced slower backups as well after. Thinking the Windows updates were directly the cause, we rolled them back completely, and the backups maintenance plan still runs extremely slow anyway. Weirdly, restoring the backups for a given database happens very quickly, and uses almost the full 1 GB/sec of I/O on the NVMes.

Things I've Tried:

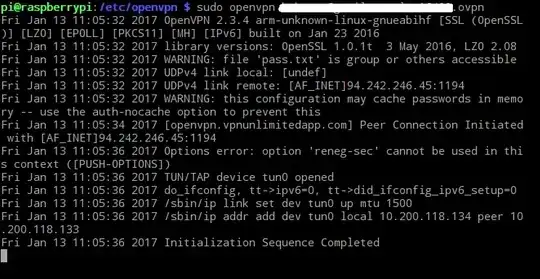

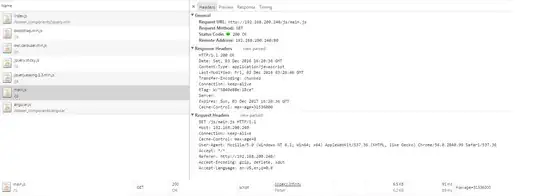

When using Adam Mechanic's sp_whoisactive, I've identified that the Last Wait Types of the backup processes are always indicative of a disk performance issue. I always see BACKUPBUFFER and BACKUPIO wait types, in addition to ASYNC_IO_COMPLETION:

When looking at the Resource Monitor on the server itself, during the backups, the Disk I/O section shows that the total I/O being utilized is only about 14 MB/sec (the most I've ever seen since this issue occurred is 30 MB/sec):

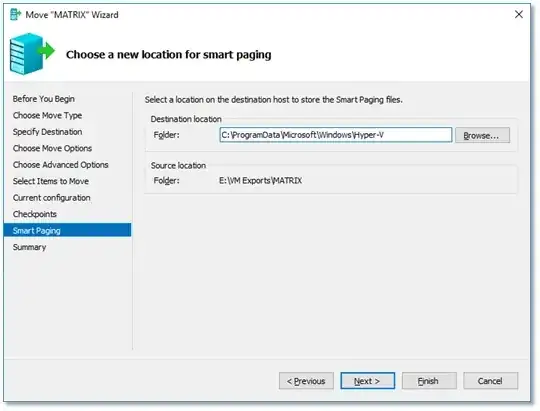

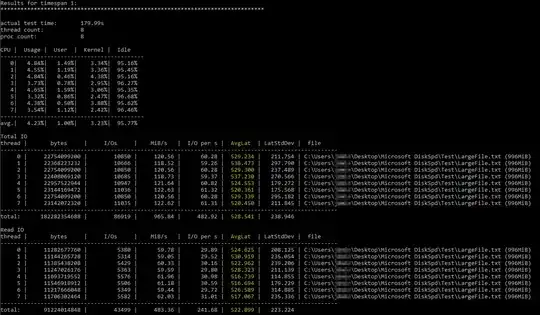

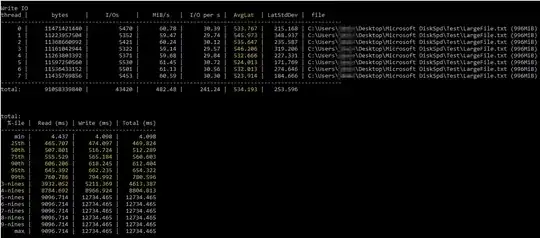

After stumbling on this helpful Brent Ozar article on using DiskSpd, I tried running it myself under similar parameters (only lowering the number of threads to 8 since I have 8 virtual processors on the server and setting the writes to 50%). This is the exact command diskspd.exe -b2M -d60 -o32 -h -L -t8 -W -w50 "C:\Users\...\Desktop\Microsoft DiskSpd\Test\LargeFile.txt". I used a text file I manually generated that's just under 1 GB big. I believe the I/O it measured seems OK, but the disk latencies were showing some ludicrous numbers:

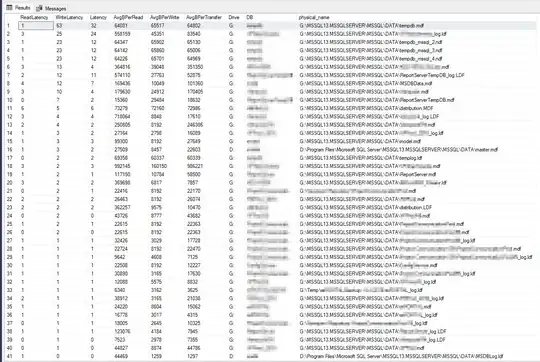

The DiskSpd results seem literally unbelievable. After further reading, I stumbled on a query from Paul Randall that returns disk latency metrics per database. These were the results:

The worst Write Latency was 63 milliseconds and the worst Read Latency was 6 milliseconds, so that seems to be a big variance from DiskSpd, and doesn't seem terrible enough to be the root cause of my issue. Cross-checking things further, I ran a few PerfMon counters on the server itself, per this Microsoft article, and these were the results:

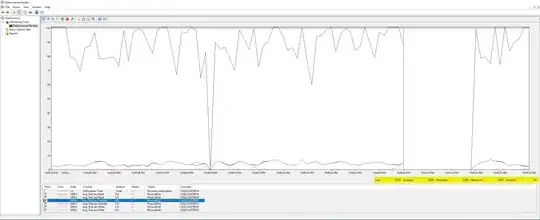

Nothing extraordinary here, the max value of all the counters I measured was 0.007 (which I believe is milliseconds?). Finally, I had my Infrastructure team check the disk latency metrics that VMWare was logging during the backups job and these were the results:

Seems like at worst, there was a spike of latency of about 200 milliseconds around midnight, and the highest I/O was 600 KB/sec (which I don't really understand since the Resource Monitor is showing that the backups are at least using around 14 MB/sec of I/O).

Other Things I've Tried:

I just tried restoring one of the larger databases (it's about 250 GB) and it only took about 8 minutes total to restore. Then I tried running DBCC CHECKDB on it and that took a total of 16 minutes to run (not sure if this is normal) but Resource Monitor showed similar I/O problems (the most I/O it ever utilized was 100 MB/s), with nothing else running:

Here is sp_whoisactive results when I first ran DBCC CHECKDB and then after it was 5% complete, notice the Estimated Time Remaining increased about 5 minutes even after it was already 5% done.

I'm guessing this is normal with it just being an estimate, and 16 minutes doesn't seem too bad for a 250 GB database (though I'm not sure if that's normal) but again the I/O was only maxing out at about 10% of the drive's capabilities, with nothing else running on the server or the SQL instance.

These are the results of DBCC CHECKDB, no errors reported.

I also have been experiencing weird slowness issues with the SHRINK command. I just tried to SHRINK the database which had 5% space to release (about 14 GB). It only took about 1 minute for it to complete 90% of the SHRINK:

About 5 minutes later, and it's still stuck at the same percent complete, and my Transaction Log Backups (that usually finish in 1-2 seconds) have been under contention for about 30 seconds:

15 minutes later and the SHRINK just finishes, while the Transaction Log Backups are still under contention for about 6 minutes now, and only 50% complete. I believe they immediately finished right after that since the SHRINK finished. The whole time the Resource Monitor showed the I/O sucking still:

Then I got an error with the SHRINK command when it finished:

I retried SHRINK again and it resulted in the same exact outcome as above.

Then I tried manually scripting a T-SQL backup to a file on the P: drive and that ran slow just like the maintenance plan backup job:

I ended up cancelling it after about 3 minutes, and it immediately rolled back.

Summary:

Coincidentally, the backups maintenance plan job got about 40x slower (from 15 minutes to 15 hours) every night, right after Windows updates were installed. Rolling back those Windows updates didn't fix the issue. SQL Server Wait Types, Resource Monitor, and Microsoft DiskSpd indicate a disk problem (I/O in particular), but all other measurements from Paul Randall's query, PerfMon, and VMWare Logs don't report any issues with the disks. Restoring the backups for a particular database are quick and use almost the full 1 GB/sec I/O. I'm scratching my head...