Disclaimer: repost from stackoverflow: https://stackoverflow.com/questions/67917278/site2site-wireguard-with-docker-routing-problems

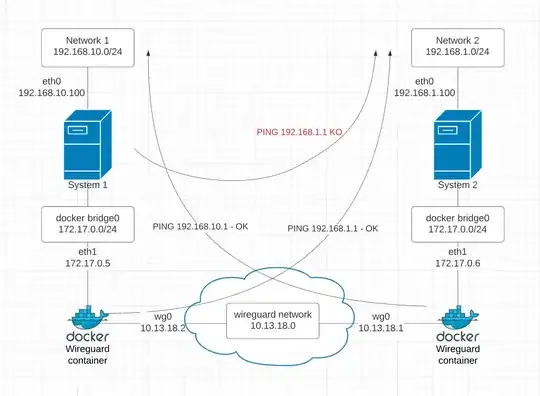

I am trying to have two containers, running on two RPI, act as a site-to-site VPN between Network 1 and Network 2.

With the setup below, I am able to ping from within the container each other network:

- from docker container 1 I can ping an address 192.168.1.1

- from docker container 2 I can ping the address 192.168.10.1

But if I try to ping 192.168.1.1 from the System1 host (192.168.10.100) I have errors (see below image to visualize what I am trying to do).

I understand I have to add a static route on system1 host (192.168.10.100) to direct the traffic for 192.168.1.0/24 through the wireguard container (172.17.0.5), thus I run:

$i p route add 192.168.1.0/24 via 172.17.0.5

$ ip route

default via 192.168.10.1 dev eth0 proto dhcp src 192.168.10.100 metric 100

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

172.18.0.0/16 dev br-e19a4f1b7646 proto kernel scope link src 172.18.0.1 linkdown

172.19.0.0/16 dev br-19684dacea29 proto kernel scope link src 172.19.0.1

172.20.0.0/16 dev br-446863cf7cef proto kernel scope link src 172.20.0.1

172.21.0.0/16 dev br-6800ed9b4dd6 proto kernel scope link src 172.21.0.1 linkdown

172.22.0.0/16 dev br-8f8f439a7a28 proto kernel scope link src 172.22.0.1 linkdown

192.168.1.0/24 via 172.17.0.5 dev docker0

192.168.10.0/24 dev eth0 proto kernel scope link src 192.168.10.100

192.168.10.1 dev eth0 proto dhcp scope link src 192.168.10.100 metric 100

but the ping to 192.168.1.1 still fails.

by running tcpdump on the container 2 I see that some packets are indeed reaching the container :

root@936de7c0d7eb:/# tcpdump -n -i any

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

10:11:19.885845 IP [publicIPsystem1].56200 > 172.17.0.6.56100: UDP, length 128

10:11:30.440764 IP 172.17.0.6.56100 > [publicIPsystem1].56200: UDP, length 32

10:11:35.480625 ARP, Request who-has 172.17.0.1 tell 172.17.0.6, length 28

10:11:35.480755 ARP, Reply 172.17.0.1 is-at 02:42:24:e5:ac:38, length 28

so I guess it is not a routing problem on system 1.

Can anyone tell me how to diagnose this further?

EDIT 1:

I have done the following test:

- run 'tcpdump -ni any' on container 2

- sent a ping from System 1 (from the host system) 'ping -c 1 192.168.1.1 .

On container 2 tcpdump records the following:

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

15:04:47.495066 IP [publicIPsystem1].56200 > 172.17.0.3.56100: UDP, length 128

15:04:58.120761 IP 172.17.0.3.56100 > [publicIPsystem1].56200: UDP, length 32

- sent a ping from container (within the container) 'ping -c 1 192.168.1.1 .

On container 2 tcpdump records the following:

# tcpdump -ni any

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

15:05:48.120717 IP [publicIPsystem1].56200 > 172.17.0.3.56100: UDP, length 128

15:05:48.120871 IP 10.13.18.2 > 192.168.1.1: ICMP echo request, id 747, seq 1, length 64

15:05:48.120963 IP 172.17.0.3 > 192.168.1.1: ICMP echo request, id 747, seq 1, length 64

15:05:48.121955 IP 192.168.1.1 > 172.17.0.3: ICMP echo reply, id 747, seq 1, length 64

15:05:48.122054 IP 192.168.1.1 > 10.13.18.2: ICMP echo reply, id 747, seq 1, length 64

15:05:48.122246 IP 172.17.0.3.56100 > [publicIPsystem1].56200: UDP, length 128

15:05:53.160617 ARP, Request who-has 172.17.0.1 tell 172.17.0.3, length 28

15:05:53.160636 ARP, Request who-has 172.17.0.3 tell 172.17.0.1, length 28

15:05:53.160745 ARP, Reply 172.17.0.3 is-at 02:42:ac:11:00:03, length 28

15:05:53.160738 ARP, Reply 172.17.0.1 is-at 02:42:24:e5:ac:38, length 28

15:05:58.672032 IP [publicIPsystem1].56200 > 172.17.0.3.56100: UDP, length 32

so, It seems that packets are treated differently from container 2 depending on something that I am currently missing.. could it be an iptables problem?

| Site 1 | Site 2 | |

|---|---|---|

| Network 1 IP range | 192.168.10.0/24 | 192.168.1.0/24 |

| host system address | 192.168.10.100 | 192.168.1.100 |

| bridge docker0 range | 172.17.0.0/16 | 172.17.0.0/16 |

| container address | 172.17.0.5 | 172.17.0.6 |

System 1 - wg0.conf

[Interface]

Address = 10.13.18.2

PrivateKey = *privatekey*

ListenPort = 56200

PostUp = iptables -A FORWARD -i %i -j ACCEPT; iptables -A FORWARD -o %i -j ACCEPT; iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

PostDown = iptables -D FORWARD -i %i -j ACCEPT; iptables -D FORWARD -o %i -j ACCEPT; iptables -t nat -D POSTROUTING -o eth0 -j MASQUERADE

[Peer]

PublicKey = *publickey*

Endpoint = *system2address*:56100

AllowedIPs = 10.13.18.1/32 , 192.168.1.0/24

System 2 - wg0.conf

[Interface]

Address = 10.13.18.1

ListenPort = 56100

PrivateKey = *privatekey*

PostUp = iptables -A FORWARD -i %i -j ACCEPT; iptables -A FORWARD -o %i -j ACCEPT; iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

PostDown = iptables -D FORWARD -i %i -j ACCEPT; iptables -D FORWARD -o %i -j ACCEPT; iptables -t nat -D POSTROUTING -o eth0 -j MASQUERADE

[Peer]

# peer_casaleuven

PublicKey = *publickey*

AllowedIPs = 10.13.18.2/32 , 192.168.10.0/24

Endpoint = *system1address*:56200