I have a ZooKeeper ensemble of 3 instances running in Kubernetes. The ensemble operates mostly correctly and successfully serves the Solr cluster it backs. One thing does seem incorrect, however, and as a result it is breaking some health checks in another part of my application (Sitecore).

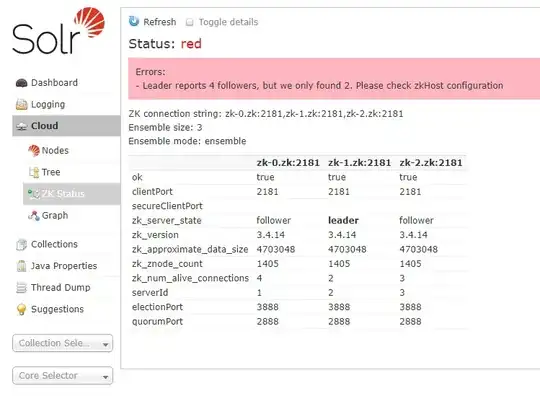

For some reason, both the Solr -> Cloud -> ZK Status page and the mntr command on the leader itself report sporadically changing, incorrect numbers of followers:

    root@zk-1:/zookeeper-3.4.14# echo mntr | nc localhost 2181
    zk_version 3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT
    zk_avg_latency 2
    zk_max_latency 4
    zk_min_latency 1
    zk_packets_received 1132
    zk_packets_sent 1131
    zk_num_alive_connections 5
    zk_outstanding_requests 0
    zk_server_state leader
    zk_znode_count 1405
    zk_watch_count 0
    zk_ephemerals_count 57
    zk_approximate_data_size 4703048
    zk_open_file_descriptor_count 42
    zk_max_file_descriptor_count 1048576
    zk_fsync_threshold_exceed_count 0
    zk_followers 5
    zk_synced_followers 2
    zk_pending_syncs 0
    zk_last_proposal_size 92
    zk_max_proposal_size 55791
    zk_min_proposal_size 32

Note that the reported number of followers (zk_followers) is not consistent: in this sample the GUI reports 4 but the command line reports 5. The number jumps up and down every time I refresh the GUI or re-run the mntr command, usually between 3 and 6. With a 3-node ensemble the correct value should be 2, which is what zk_synced_followers shows.
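To make the fluctuation easier to capture than by re-running the command by hand, here is a minimal Python sketch that sends the same `mntr` four-letter word over a plain socket and records `zk_followers` next to `zk_synced_followers`. The function names (`parse_mntr`, `query_mntr`, `sample_followers`) are my own for illustration, and the host and port defaults simply mirror the `nc localhost 2181` call above.

```python
import socket
import time


def parse_mntr(text):
    """Turn raw mntr output into a dict; each line is 'key<whitespace>value'."""
    stats = {}
    for line in text.splitlines():
        parts = line.strip().split(None, 1)
        if len(parts) == 2:
            stats[parts[0]] = parts[1].strip()
    return stats


def query_mntr(host="localhost", port=2181, timeout=5.0):
    """Send 'mntr' to a ZooKeeper server and return the parsed reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"mntr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return parse_mntr(b"".join(chunks).decode("utf-8", "replace"))


def follower_counts(stats):
    """Return (zk_followers, zk_synced_followers) as ints, or None if absent."""
    def as_int(key):
        value = stats.get(key)
        return int(value) if value is not None else None
    return as_int("zk_followers"), as_int("zk_synced_followers")


def sample_followers(samples=10, delay=1.0, host="localhost", port=2181):
    """Poll the server several times and collect the two follower counters."""
    results = []
    for _ in range(samples):
        results.append(follower_counts(query_mntr(host, port)))
        time.sleep(delay)
    return results
```

Calling `sample_followers()` on the leader pod would return a list of `(zk_followers, zk_synced_followers)` pairs, which should show whether only the first value drifts while the second stays at 2. The follower counters are only reported by the leader, so on a follower both values come back as `None`.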

I can't work out what these extra reported followers could be: nothing in the output other than zk_followers shows anything besides the two real followers, and the logs don't report anything untoward.

zoo.cfg:

    root@zk-1:/zookeeper-3.4.14# cat /conf/zoo.cfg
    clientPort=2181
    dataDir=/store/data
    dataLogDir=/store/datalog
    tickTime=2000
    initLimit=5
    syncLimit=2
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=0
    maxClientCnxns=60
    server.1=zk-0.zk:2888:3888
    server.2=0.0.0.0:2888:3888
    server.3=zk-2.zk:2888:3888

zooservers.txt:

    root@zk-1:/zookeeper-3.4.14# cat /conf/zooservers.txt
    server.1=zk-0.zk:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zk-2.zk:2888:3888
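For reference, the follower count I expect follows directly from the `server.N` entries: one of the listed servers is the leader, so the leader should see the number of entries minus one. A small Python sketch of that arithmetic, assuming no observers are configured and no `server.N` lines are commented out (the helper names `ensemble_members` and `expected_followers` are made up for this example):

```python
import re

# Matches entries such as server.1=zk-0.zk:2888:3888, whether they are
# one per line (zoo.cfg) or space-separated on one line (zooservers.txt).
SERVER_ENTRY = re.compile(r"server\.(\d+)=([^\s:]+):(\d+):(\d+)")


def ensemble_members(config_text):
    """Map each server id to the host it is configured with."""
    return {int(m.group(1)): m.group(2) for m in SERVER_ENTRY.finditer(config_text)}


def expected_followers(config_text):
    """Every configured server except the leader should be a follower."""
    return max(len(ensemble_members(config_text)) - 1, 0)


config = "server.1=zk-0.zk:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zk-2.zk:2888:3888"
print(ensemble_members(config))
print(expected_followers(config))
```

For the configuration above this gives three members and therefore two expected followers, which is the number I would expect zk_followers to report.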

As noted, this doesn't seem to cause any real problems for the ensemble or the Solr cluster, except that it fails some container-start health checks, which causes inconsistency in the application and could potentially bring down parts of it.