Troubleshooting Pod-to-Pod Communication Across Nodes

Prerequisite:

I use VirtualBox to set up 2 VMs. The VirtualBox version is 6.1.16 r140961 (Qt5.6.2). The OS is CentOS 7 (CentOS-7-x86_64-Minimal-2003.iso).

One VM is the Kubernetes master node, named k8s-master1. The other VM is the Kubernetes worker node, named k8s-node1.

Each VM has 2 network interfaces attached, NAT + Host-Only. NAT is used for internet access. Host-Only is used for connections among the VMs.

The network information on k8s-master1.

[root@k8s-master1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:d1:41:4b brd ff:ff:ff:ff:ff:ff

inet 192.168.56.101/24 brd 192.168.56.255 scope global noprefixroute enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::62ae:c676:da76:cbff/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:8a:2d:63 brd ff:ff:ff:ff:ff:ff

inet 10.0.3.15/24 brd 10.0.3.255 scope global noprefixroute dynamic enp0s8

valid_lft 80380sec preferred_lft 80380sec

inet6 fe80::952e:9af8:a1cb:8a07/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:04:6e:c7:dc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 06:cd:b1:24:62:fc brd ff:ff:ff:ff:ff:ff

inet 10.244.0.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::4cd:b1ff:fe24:62fc/64 scope link

valid_lft forever preferred_lft forever

6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 06:75:06:d0:17:ee brd ff:ff:ff:ff:ff:ff

inet 10.244.0.1/24 brd 10.244.0.255 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::475:6ff:fed0:17ee/64 scope link

valid_lft forever preferred_lft forever

8: veth221fb276@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 62:b9:61:95:0f:73 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::60b9:61ff:fe95:f73/64 scope link

valid_lft forever preferred_lft forever

The network information on k8s-node1.

[root@k8s-node1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:ba:34:4f brd ff:ff:ff:ff:ff:ff

inet 192.168.56.102/24 brd 192.168.56.255 scope global noprefixroute enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::660d:ec2:cb1c:49de/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:48:6d:8b brd ff:ff:ff:ff:ff:ff

inet 10.0.3.15/24 brd 10.0.3.255 scope global noprefixroute dynamic enp0s8

valid_lft 80039sec preferred_lft 80039sec

inet6 fe80::62af:7770:3b6d:6576/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e4:65:f4:28 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 8e:d3:79:63:40:22 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::8cd3:79ff:fe63:4022/64 scope link

valid_lft forever preferred_lft forever

6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 8e:22:8d:e5:29:24 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.1/24 brd 10.244.1.255 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::8c22:8dff:fee5:2924/64 scope link

valid_lft forever preferred_lft forever

8: veth5314dbaf@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 82:58:1c:3a:a1:a3 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::8058:1cff:fe3a:a1a3/64 scope link

valid_lft forever preferred_lft forever

10: calic849a8cefe4@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

11: cali82f9786c604@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

12: caliaa01fae8214@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

13: cali88097e17cd0@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 5

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

15: cali788346fba46@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

16: caliafdfba3871a@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 6

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

18: cali329803e4ee5@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 7

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

I made a table to summarize the network information.

| k8s-master1 | k8s-node1 |
| ----------------------------------------- | ------------------------------------------ |
| NAT 10.0.3.15/24 enp0s8 | NAT 10.0.3.15/24 enp0s8 |
| Host-Only 192.168.56.101/24 enp0s3 | Host-Only 192.168.56.102/24 enp0s3 |
| docker0 172.17.0.1/16 | docker0 172.17.0.1/16 |
| flannel.1 10.244.0.0/32 | flannel.1 10.244.1.0/32 |
| cni0 10.244.0.1/24 | cni0 10.244.1.1/24 |
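To cross-check the summary against the raw `ip addr` output, here is a minimal Python sketch that lists the interfaces carrying the same address on both VMs. The addresses are copied from the `ip addr` output above; `MASTER`, `NODE`, and `shared_addresses` are names chosen only for illustration.

```python
# Addresses copied from the `ip addr` output of both VMs.
# The dictionary and function names are illustrative only.
MASTER = {
    "enp0s3": "192.168.56.101/24",  # Host-Only
    "enp0s8": "10.0.3.15/24",       # NAT
    "docker0": "172.17.0.1/16",
    "flannel.1": "10.244.0.0/32",
    "cni0": "10.244.0.1/24",
}
NODE = {
    "enp0s3": "192.168.56.102/24",  # Host-Only
    "enp0s8": "10.0.3.15/24",       # NAT
    "docker0": "172.17.0.1/16",
    "flannel.1": "10.244.1.0/32",
    "cni0": "10.244.1.1/24",
}


def shared_addresses(a, b):
    """Return the interfaces whose address is identical on both hosts."""
    return [iface for iface in a if a[iface] == b.get(iface)]


print(shared_addresses(MASTER, NODE))  # ['enp0s8', 'docker0']
```

Only the NAT interface and docker0 have identical addresses on both VMs. The Host-Only, flannel.1, and cni0 addresses are unique per node.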

Problem Description

Test case 1:

Expose the Kubernetes Service nginx-svc as a NodePort. The Pod nginx is running on k8s-node1.

View the NodePort of nginx-svc:

[root@k8s-master1 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-svc NodePort 10.103.236.60 <none> 80:30309/TCP 9h

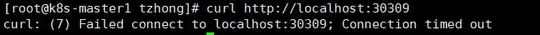

Run the command curl http://localhost:30309 on k8s-master1. It eventually returns Connection timed out.

Run the command curl http://localhost:30309 on k8s-node1. It returns a response.

Test case 2:

I created another Pod nginx on k8s-master1. There is already a Pod nginx on k8s-node1. A call from the Pod nginx on k8s-master1 to the Pod nginx on k8s-node1 also returns Connection timed out. The problem is very likely that pods on different nodes cannot communicate in a Kubernetes cluster using flannel.

Test case 3:

If the 2 nginx pods are on the same node, whether k8s-master1 or k8s-node1, the connection is OK. Each pod can get a response from the other pod.

Test case 4:

I sent several ping requests from k8s-master1 to k8s-node1 using different IP addresses.

Ping k8s-node1's Host-Only IP address: Connection Success

ping 192.168.56.102

Ping k8s-node1's flannel.1 IP address: Connection Failed

ping 10.244.1.0

Ping k8s-node1's cni0 IP address: Connection Failed

ping 10.244.1.1

Test case 5:

I set up a new k8s cluster using a bridge network on the same laptop. Test cases 1, 2, 3, and 4 cannot be reproduced there. There is no problem with pod-to-pod communication across nodes.

Guess

Firewall. I disabled the firewall on all VMs, and also the firewall on the laptop (Win 10), including any antivirus software. The connection problem is still there.

I compared the iptables rules of the k8s cluster attached to NAT & Host-Only with those of the k8s cluster attached to the bridge network. I did not find any big difference.

yum install -y net-tools

iptables -L

Solution

# Install the route command (provided by net-tools).

[root@k8s-master1 tzhong]# yum install -y net-tools

# View the routing table after the k8s cluster was installed through kubeadm.

[root@k8s-master1 tzhong]# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default gateway 0.0.0.0 UG 100 0 0 enp0s3

10.0.2.0 0.0.0.0 255.255.255.0 U 100 0 0 enp0s3

10.244.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.56.0 0.0.0.0 255.255.255.0 U 101 0 0 enp0s8

Focus on the routing rule for 10.244.1.0 via flannel.1. This rule means that all requests from k8s-master1 whose destination is in 10.244.1.0/24 are forwarded through flannel.1.
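How the kernel arrives at that rule can be sketched as a longest-prefix match over the table above. This is a simplified model only: it ignores metrics, gateways, and policy routing, writes the `default` entry as 0.0.0.0/0, and uses 10.244.1.5 and 10.244.0.7 as hypothetical pod addresses. `ROUTES` and `select_interface` are illustrative names.

```python
import ipaddress

# Routes copied from the `route` output on k8s-master1 as (destination, interface).
# "default" is written as 0.0.0.0/0; metrics and gateways are ignored in this sketch.
ROUTES = [
    ("0.0.0.0/0", "enp0s3"),
    ("10.0.2.0/24", "enp0s3"),
    ("10.244.0.0/24", "cni0"),
    ("10.244.1.0/24", "flannel.1"),
    ("172.17.0.0/16", "docker0"),
    ("192.168.56.0/24", "enp0s8"),
]


def select_interface(destination, routes=ROUTES):
    """Pick the outgoing interface by longest-prefix match."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(net), iface)
        for net, iface in routes
        if addr in ipaddress.ip_network(net)
    ]
    # The most specific (longest) matching prefix wins.
    _network, iface = max(candidates, key=lambda item: item[0].prefixlen)
    return iface


print(select_interface("10.244.1.5"))  # flannel.1 (hypothetical pod on k8s-node1)
print(select_interface("10.244.0.7"))  # cni0 (hypothetical local pod)
```

Under this model, traffic to a pod on k8s-node1 leaves through flannel.1, while traffic to a local pod stays on cni0.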

But it seems that flannel.1 has some problem, so I just gave it a try: I added new routing rules so that all requests from k8s-master1 whose destination is in 10.244.1.0/24 are forwarded through enp0s8.

# Run the commands below on k8s-master1 to add the routing rules. 10.244.1.0 is the IP address of flannel.1 on k8s-node1.

route add -net 10.244.1.0 netmask 255.255.255.0 enp0s8

route add -net 10.244.1.0 netmask 255.255.255.0 gw 10.244.1.0

[root@k8s-master1 ~]# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default gateway 0.0.0.0 UG 100 0 0 enp0s3

10.0.2.0 0.0.0.0 255.255.255.0 U 100 0 0 enp0s3

10.244.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 enp0s8

10.244.1.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s8

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.56.0 0.0.0.0 255.255.255.0 U 101 0 0 enp0s8

Two additional routing rules have been added:

10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 enp0s8

10.244.1.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s8

Do the same on k8s-node1.

# Run the commands below on k8s-node1 to add the routing rules. 10.244.0.0 is the IP address of flannel.1 on k8s-master1.

route add -net 10.244.0.0 netmask 255.255.255.0 enp0s8

route add -net 10.244.0.0 netmask 255.255.255.0 gw 10.244.0.0
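The two pairs of commands differ only in the peer's pod subnet, so they can be generated from one small helper. The following is a minimal Python sketch that only builds the command strings and does not run them. `route_add_commands` is an illustrative name, and using the subnet's network address as the gateway is an assumption that holds here because flannel.1 carries the .0 address on each node.

```python
import ipaddress


def route_add_commands(peer_pod_subnet, interface="enp0s8"):
    """Build the two `route add` commands for reaching a peer's pod subnet."""
    net = ipaddress.ip_network(peer_pod_subnet)
    base = f"route add -net {net.network_address} netmask {net.netmask}"
    return [
        # Direct route through the given interface.
        f"{base} {interface}",
        # Route via the peer's flannel.1 address, which in this setup
        # equals the network address of the peer's pod subnet.
        f"{base} gw {net.network_address}",
    ]


# On k8s-master1 the peer subnet is 10.244.1.0/24 (k8s-node1).
for cmd in route_add_commands("10.244.1.0/24"):
    print(cmd)
```

Calling `route_add_commands("10.244.0.0/24")` yields the pair used on k8s-node1.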

I ran test cases 1, 2, 3, 4, and 5 again. All passed. I am confused: why? I have a couple of questions.

Questions:

Why is the connection over flannel.1 blocked? Is there any way to investigate this?

Is that a limitation of the Host-Only network interface with flannel? If I change to another CNI plugin, will the connection be OK?

After adding the routing rules using enp0s8, why does the connection become OK?