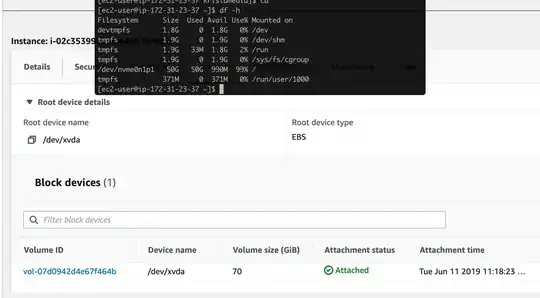

The EBS volume attached to my EC2 instance was nearly full, so I tried to resize it from 50 GB to 70 GB. The modification took a long time. I didn't check how many minutes or hours it took, but a few days later the status showed as completed. I then connected over SSH and ran df -h, but the size is still 50 GB. Why?

mount returns:

[root@ip-172-31-23-37 ec2-user]# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

devtmpfs on /dev type devtmpfs (rw,nosuid,size=1878544k,nr_inodes=469636,mode=755)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,mode=755)

tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)

/dev/nvme0n1p1 on / type xfs (rw,noatime,attr2,inode64,noquota)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=25,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=13932)

debugfs on /sys/kernel/debug type debugfs (rw,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

mqueue on /dev/mqueue type mqueue (rw,relatime)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw,relatime)

tmpfs on /run/user/1000 type tmpfs (rw,nosuid,nodev,relatime,size=379360k,mode=700,uid=1000,gid=1000)

I tried xfs_growfs -d /dev/nvme0n1p1, which returns:

[root@ip-172-31-23-37 ec2-user]# xfs_growfs -d /dev/nvme0n1p1

meta-data=/dev/nvme0n1p1 isize=512 agcount=26, agsize=524159 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1 spinodes=0

data = bsize=4096 blocks=13106683, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data size unchanged, skipping
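
As a sanity check on the output above, the blocks and bsize values from the data section can be multiplied to get the size the filesystem currently occupies. This is only a sketch of the arithmetic using those two numbers; the variable names are illustrative and not part of any tool.

```shell
# Values copied from the "data" line of the xfs_growfs output above.
blocks=13106683   # number of filesystem data blocks
bsize=4096        # block size in bytes

# Current filesystem size in bytes, and in MiB (integer division, rounded down).
fs_bytes=$(( blocks * bsize ))
fs_mib=$(( fs_bytes / 1024 / 1024 ))

echo "filesystem size: ${fs_bytes} bytes"   # 53684973568
echo "filesystem size: ${fs_mib} MiB"       # 51197
```

That is just under 50 GiB (51200 MiB), which matches what df -h reports: the filesystem still has its original size.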

Without the -d argument:

[root@ip-172-31-23-37 kristacrm-new]# xfs_growfs /dev/nvme0n1p1

meta-data=/dev/nvme0n1p1 isize=512 agcount=26, agsize=524159 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1 spinodes=0

data = bsize=4096 blocks=13106683, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

I also tried xfs_resize, but the command was not found.

I also tried rebooting my EC2 instance, but the size is still 50 GB.