In general, no, unless you meet one of two very specific constraints. Either:

- The device needs to be exposed read-only (this MUST be at the device level, not the filesystem level) to all the VMs, and MUST NOT be written to from anywhere at runtime.

or:

- The volume must be formatted using a cluster-aware filesystem, and all VMs must be part of the cluster (and the host system too if it needs access to the data).

In general, filesystems that are not cluster-aware are designed to assume they have exclusive access to their backing storage, namely that it’s contents will not change unless they do something to change them. This obviously can cause caching issues if you violate this constraint, but it’s actually far worse than that, because it extends to the filesystem’s internal structures, not just the file data. This means that you can quite easily completely destroy a filesystem by mounting it on multiple nodes at the same time.

Cluster-aware filesystems are the traditional solution to this, they use either network-based locking or a special form of synchronization on the shared storage itself to ensure consistency. On Linux, your options are pretty much OCFS2 and GFS2 (I recommend OCFS2 over GFS2 for this type of thing based on personal experience, but YMMV). However, they need a lot more from all the nodes in the cluster to be able to keep things in sync. As a general rule, they have significant performance limitations on many workloads due to the locking and cache invalidation requirements they enforce, they tend to involve a lot of disk and network traffic, and they may not be feature-complete compared to traditional single-node filesystems.

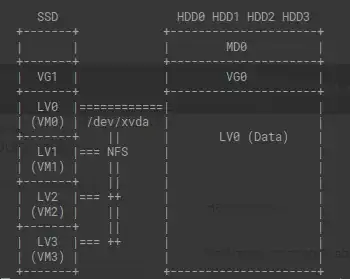

I would like to point out that NFS over a local network bridge (the ‘easy’ option to do what you want) is actually rather efficient. Unless you use a rather strange setup or insist on each VM being on it’s own VLAN, the NFS traffic will never even touch your NIC, which means it all happens in-memory, and thus has very little in the way of efficiency issues (especially if you are using paravirtualized networking for the VMs).

In theory, if you set up 9P, you could probably get better performance than NFS, but the effort involved is probably not worth it (the performance difference is not likely to be much).

Additionally to all of this, there is a third option, but it’s overkill for use on a single machine. You could set up a distributed filesystem like GlusterFS or Ceph. This is actually probably the best option if your data is not inherently colocated with your VMs (that is, you may be running VMs on nodes other than the ones the data is on), as while it’s not as efficient as NFS or 9P, it will give you much more flexibility in terms of infrastructure.