I have servers with many NVMe disks. I am testing disk performance with fio using the following:

```
fio --name=asdf --rw=randwrite --direct=1 --ioengine=libaio --bs=16k --numjobs=8 --size=10G --runtime=60 --group_reporting
```
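If you repeat this test across many layouts, it can help to have fio emit JSON and pull the numbers out programmatically instead of reading the text report. The sketch below assumes fio's JSON layout of a top-level `jobs` list whose entries carry `write.iops` and `write.bw` (the latter in KiB/s, as far as I know); with `--group_reporting` there should be one entry per group. `run_fio` and `summarize_writes` are hypothetical helper names, and the wrapper only adds the standard `--directory` and `--output-format=json` options to the command above.

```python
import json
import subprocess


def run_fio(target_dir: str) -> str:
    """Run the randwrite test above in target_dir and return fio's JSON report."""
    cmd = [
        "fio", "--name=asdf", "--rw=randwrite", "--direct=1",
        "--ioengine=libaio", "--bs=16k", "--numjobs=8", "--size=10G",
        "--runtime=60", "--group_reporting",
        f"--directory={target_dir}",  # where fio lays out its test files
        "--output-format=json",
    ]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def summarize_writes(fio_json: str) -> dict:
    """Extract write IOPS and bandwidth (MiB/s) from a fio JSON report."""
    data = json.loads(fio_json)
    write = data["jobs"][0]["write"]  # one entry per group with --group_reporting
    return {"iops": write["iops"], "bw_mib_s": write["bw"] / 1024}  # bw is KiB/s
```

For example, `summarize_writes(run_fio("/mnt/test"))` (mount point hypothetical) returns a small dict that is easy to tabulate across the single-disk, RAID10 and RAID10 + LUKS runs.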

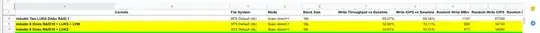

For a single disk, LUKS doesn't impact performance very much.

I tried mdadm with 6 disks in RAID10 plus an XFS file system. It performed well.

But when I create a LUKS container on top of the mdadm device, I get terrible performance.

To recap:

- 6-disk mdadm RAID10 + XFS = 116% of normal performance, i.e. 16% better write throughput and IOPS compared to a single disk + XFS
- 6-disk mdadm RAID10 + LUKS + XFS = 33% of normal performance, i.e. 67% worse write throughput and IOPS compared to a single disk + XFS
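For context on the 116% figure: mdadm's default near=2 layout (shown in the mdadm output in Edit 1) stores every block twice, so each logical write costs two disk writes, and six disks can at best absorb about three times the writes of one disk. A back-of-the-envelope sketch under that idealized assumption, which ignores CPU, PCIe and queue-depth limits; the function names are made up for illustration:

```python
def ideal_write_scaling(raid_disks: int, copies: int) -> float:
    """Idealized RAID10 write speed-up over one disk.

    Every logical write is stored `copies` times, so N disks can absorb at
    most N / copies times the writes of a single disk.
    """
    return raid_disks / copies


def share_of_ideal(measured_ratio: float, raid_disks: int, copies: int) -> float:
    """Express a measured speed-up (1.16 means 116%) as a fraction of the ideal."""
    return measured_ratio / ideal_write_scaling(raid_disks, copies)


for label, ratio in [("RAID10 + XFS", 1.16), ("RAID10 + LUKS + XFS", 0.33)]:
    print(f"{label}: {share_of_ideal(ratio, 6, 2):.0%} of the idealized 3x")
```

Under this model even the unencrypted array reaches well under half of the theoretical write scaling, so both numbers are worth reading against that ceiling rather than only against each other.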

In all other scenarios I have not observed such a performance difference between LUKS and non-LUKS. That includes LVM spanning, striping, and mirroring. In other words, mdadm RAID10 with 6 disks (I understand this to be spread across three 2-disk mirrors), with a LUKS container and an XFS or ext4 file system, performs worse in every regard when compared to:

- A single disk, with or without LUKS

- 2 LUKS disks mirrored by LVM (2 LUKS containers)

- 2 LUKS disks spanned by LVM (2 LUKS containers)

I want one LUKS container on top of mdadm RAID10. That is the easiest configuration to understand, and it is recommended by many people on ServerFault, reddit, etc. I cannot see how it would be better to encrypt the disks with LUKS first and then join them to the array, although I have not tested this. It seems most people recommend the order MDADM => LUKS => LVM => file system.

A lot of the advice I've seen online is about aligning the stripe size of the RAID array with something else (LUKS? the file system?), but the configuration options they recommend are no longer available. For instance, on Ubuntu 18.04 there is no stripe_cache_size for me to set.
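One likely reason stripe_cache_size is missing: md exposes it only for RAID4/5/6 arrays, which use a stripe cache for parity, so a RAID10 array has no such knob. The alignment that does apply is telling the file system the stripe geometry. A sketch, assuming the 512K chunk and near=2 layout from the mdadm output in Edit 1, that derives the `mkfs.xfs -d su=...,sw=...` values and the full-stripe size you could compare against `/sys/block/<dev>/queue/optimal_io_size` for both md0 and the LUKS device. mkfs.xfs usually reads this geometry from the device by itself; comparing the sunit/swidth that `xfs_info` reports with and without LUKS is a cheap way to check whether it still does through dm-crypt.

```python
def xfs_stripe_options(chunk_kib: int, raid_disks: int, near_copies: int) -> str:
    """Suggest mkfs.xfs stripe options for an md RAID10 array with a "near" layout.

    su (stripe unit)  = the md chunk size
    sw (stripe width) = distinct chunks per stripe row = raid_disks / near_copies
    Only handles the simple case where raid_disks is a multiple of near_copies.
    """
    if raid_disks % near_copies:
        raise ValueError("raid_disks must be a multiple of near_copies in this sketch")
    sw = raid_disks // near_copies
    return f"-d su={chunk_kib}k,sw={sw}"


def optimal_io_bytes(chunk_kib: int, raid_disks: int, near_copies: int) -> int:
    """Full-stripe size in bytes, for comparison with queue/optimal_io_size."""
    return chunk_kib * 1024 * (raid_disks // near_copies)


print(xfs_stripe_options(512, 6, 2))  # -d su=512k,sw=3
print(optimal_io_bytes(512, 6, 2))    # 1572864
```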

The only thing that made a difference for me were the instructions on this page. I do have the same CPU, a variant of the AMD EPYC.

Is there something fundamentally wrong with MDADM + LUKS + file system (XFS) on Ubuntu 18.04 with 6 NVMe drives? If so, I would like to understand the problem. If not, what accounts for the huge performance gap between non-LUKS and LUKS? I've checked CPU and memory while the tests are running, and neither is saturated at all.
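One caveat on "CPU is not saturated": on a 64-thread machine, a single core pinned at 100% shows up as only about 1.6% of total CPU, so an aggregate figure cannot rule out a single-threaded bottleneck. `mpstat -P ALL 1` (sysstat) or pressing `1` in `top` shows per-CPU usage directly; the sketch below does the same from two `/proc/stat` snapshots. It assumes the proc(5) field order (user nice system idle iowait irq softirq steal) and counts iowait as idle, which is a common convention rather than the only one.

```python
import time


def parse_proc_stat(text: str) -> dict:
    """Map each per-CPU line ("cpu0", "cpu1", ...) to (busy_ticks, total_ticks)."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip non-CPU lines and the aggregate "cpu" line.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        ticks = [int(x) for x in fields[1:9]]  # user nice system idle iowait irq softirq steal
        idle = ticks[3] + (ticks[4] if len(ticks) > 4 else 0)  # idle + iowait
        total = sum(ticks)
        result[fields[0]] = (total - idle, total)
    return result


def busy_percent(before: dict, after: dict) -> dict:
    """Per-CPU busy percentage between two parse_proc_stat() snapshots."""
    usage = {}
    for cpu, (busy_after, total_after) in after.items():
        busy_before, total_before = before[cpu]
        elapsed = total_after - total_before
        usage[cpu] = 100.0 * (busy_after - busy_before) / elapsed if elapsed else 0.0
    return usage


def sample_per_cpu(interval_s: float = 1.0) -> dict:
    """Take two /proc/stat snapshots interval_s apart (Linux only)."""
    with open("/proc/stat") as f:
        before = parse_proc_stat(f.read())
    time.sleep(interval_s)
    with open("/proc/stat") as f:
        after = parse_proc_stat(f.read())
    return busy_percent(before, after)
```

While fio is running, `sorted(sample_per_cpu().items(), key=lambda kv: kv[1], reverse=True)[:5]` lists the five busiest CPUs; one of them near 100% while the rest are mostly idle would point at a serialized step somewhere in the stack.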

Side curiosity:

MDADM + LUKS + XFS outperforms MDADM + XFS with a 75/25 read/write mix. Does that make any sense? I would expect LUKS to always be a bit worse than no LUKS, especially with libaio and direct=1.

Edit 1

@Michael Hampton

```
processor       : 0
vendor_id       : AuthenticAMD
cpu family      : 23
model           : 49
model name      : AMD EPYC 7452 32-Core Processor
stepping        : 0
microcode       : 0x8301034
cpu MHz         : 1499.977
cache size      : 512 KB
physical id     : 0
siblings        : 64
core id         : 0
cpu cores       : 32
apicid          : 0
initial apicid  : 0
fpu             : yes
fpu_exception   : yes
cpuid level     : 16
wp              : yes
flags           : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate sme ssbd ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif umip rdpid overflow_recov succor smca
bugs            : sysret_ss_attrs spectre_v1 spectre_v2 spec_store_bypass
bogomips        : 4699.84
TLB size        : 3072 4K pages
clflush size    : 64
cache_alignment : 64
address sizes   : 43 bits physical, 48 bits virtual
power management: ts ttp tm hwpstate cpb eff_freq_ro [13] [14]
```

...and so on, up to processor 63.

What hardware? Well, nvme list:

```
sudo nvme list
Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
/dev/nvme0n1     BTLJ0086052F2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme1n1     BTLJ007503YS2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme2n1     BTLJ008609DJ2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme3n1     BTLJ008609KE2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme4n1     BTLJ00860AB92P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme5n1     BTLJ007302142P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme6n1     BTLJ008609VC2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
/dev/nvme7n1     BTLJ0072065K2P0BGN   INTEL SSDPE2KX020T8                      1         2.00 TB / 2.00 TB          512 B + 0 B      VDV10152
```

What Linux distro? Ubuntu 18.04

What kernel? uname -r gives 4.15.0-121-generic

@anx

numactl --hardware gives:

```
available: 1 nodes (0)
node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63
node 0 size: 1019928 MB
node 0 free: 1015402 MB
node distances:
node   0
  0:  10
```

cryptsetup benchmark gives:

```
# Tests are approximate using memory only (no storage IO).
PBKDF2-sha1      1288176 iterations per second for 256-bit key
PBKDF2-sha256    1466539 iterations per second for 256-bit key
PBKDF2-sha512    1246820 iterations per second for 256-bit key
PBKDF2-ripemd160  916587 iterations per second for 256-bit key
PBKDF2-whirlpool  698119 iterations per second for 256-bit key
argon2i       6 iterations, 1048576 memory, 4 parallel threads (CPUs) for 256-bit key (requested 2000 ms time)
argon2id      6 iterations, 1048576 memory, 4 parallel threads (CPUs) for 256-bit key (requested 2000 ms time)
#     Algorithm |       Key |      Encryption |      Decryption
        aes-cbc        128b      1011.5 MiB/s      3428.1 MiB/s
    serpent-cbc        128b        90.2 MiB/s       581.3 MiB/s
    twofish-cbc        128b       174.3 MiB/s       340.6 MiB/s
        aes-cbc        256b       777.0 MiB/s      2861.3 MiB/s
    serpent-cbc        256b        93.6 MiB/s       581.9 MiB/s
    twofish-cbc        256b       179.1 MiB/s       340.6 MiB/s
        aes-xts        256b      1630.3 MiB/s      1641.3 MiB/s
    serpent-xts        256b       579.2 MiB/s       571.9 MiB/s
    twofish-xts        256b       336.2 MiB/s       335.8 MiB/s
        aes-xts        512b      1438.0 MiB/s      1438.3 MiB/s
    serpent-xts        512b       583.3 MiB/s       571.6 MiB/s
    twofish-xts        512b       336.9 MiB/s       335.7 MiB/s
```

Disk nameplate IO? I'm not sure what you mean, but I'm guessing you mean the disk hardware:

INTEL SSDPE2KX020T8 - which is rated at 2000 MB/s for random write
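Datasheets usually quote decimal MB/s, while fio's BW= field is in MiB/s (with MB/s in parentheses), and at a fixed block size bandwidth and IOPS are the same measurement. A small conversion sketch for the 16 KiB blocks used in these tests; treating the rated 2000 MB/s as reachable with 16k random writes is an assumption, not something the rating itself guarantees.

```python
KIB = 1024


def iops_from_mib_s(bw_mib_s: float, bs_kib: float) -> float:
    """IOPS implied by a bandwidth in MiB/s (fio's BW= field uses binary units)."""
    return bw_mib_s * KIB / bs_kib


def iops_from_mb_s(bw_mb_s: float, bs_kib: float) -> float:
    """IOPS implied by a bandwidth in decimal MB/s (typical datasheet units)."""
    return bw_mb_s * 1_000_000 / (bs_kib * KIB)


# Sanity check against fio's own numbers in Edit 2: BW=4314MiB/s at bs=16k, IOPS=276k.
print(round(iops_from_mib_s(4314, 16)))  # 276096
# The 2000 MB/s rating expressed as 16 KiB writes per second, per disk.
print(round(iops_from_mb_s(2000, 16)))   # 122070
```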

@shodanshok

My RAID array is rebuilding. It was doing something odd: when I rebooted, it went from /dev/md0 to /dev/md127 and lost the first device.

So I overwrote the first 1G of each of the 6 disks with dd and then recreated the array:

```
mdadm --create --verbose /dev/md0 --level=10 --raid-devices=6 /dev/nvme[0-5]n1
mdadm: layout defaults to n2
mdadm: layout defaults to n2
mdadm: chunk size defaults to 512K
mdadm: size set to 1953382400K
mdadm: automatically enabling write-intent bitmap on large array
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
```

Now mdadm -D /dev/md0 reports:

```
/dev/md0:
           Version : 1.2
     Creation Time : Tue Oct 20 07:27:19 2020
        Raid Level : raid10
        Array Size : 5860147200 (5588.67 GiB 6000.79 GB)
     Used Dev Size : 1953382400 (1862.89 GiB 2000.26 GB)
      Raid Devices : 6
     Total Devices : 6
       Persistence : Superblock is persistent

     Intent Bitmap : Internal

       Update Time : Tue Oct 20 07:27:50 2020
             State : clean, resyncing
    Active Devices : 6
   Working Devices : 6
    Failed Devices : 0
     Spare Devices : 0

            Layout : near=2
        Chunk Size : 512K

Consistency Policy : bitmap

     Resync Status : 0% complete

              Name : large20q3-co-120:0  (local to host large20q3-co-120)
              UUID : 6d422227:dbfac37a:484c8c59:7ce5cf6e
            Events : 6

    Number   Major   Minor   RaidDevice State
       0     259        1        0      active sync set-A   /dev/nvme0n1
       1     259        0        1      active sync set-B   /dev/nvme1n1
       2     259        3        2      active sync set-A   /dev/nvme2n1
       3     259        5        3      active sync set-B   /dev/nvme3n1
       4     259        7        4      active sync set-A   /dev/nvme4n1
       5     259        9        5      active sync set-B   /dev/nvme5n1
```
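The set-A/set-B column is consistent with how the near=2 layout places data on six devices: each 512K chunk is written to two neighbouring devices, so the array behaves like three mirrored pairs (0,1), (2,3), (4,5) striped in 512K chunks, which matches the "three 2-disk mirrors" understanding in the question. A simplified model of that placement, assuming six devices and near=2; it ignores the offset at which md starts data on each member and the odd-device case where copies wrap to the next row, and the function names are hypothetical:

```python
def near_layout(chunk: int, raid_disks: int, near_copies: int = 2):
    """Return (device_indices, row) holding the copies of logical chunk `chunk`.

    Simplified model of md RAID10's "near" layout: a chunk's copies sit on
    consecutive devices in the same row, and chunks fill a row left to right.
    Assumes raid_disks is a multiple of near_copies, so copies never wrap.
    """
    if raid_disks % near_copies:
        raise ValueError("sketch assumes raid_disks % near_copies == 0")
    first = chunk * near_copies
    devices = [(first + i) % raid_disks for i in range(near_copies)]
    return devices, first // raid_disks


def chunk_for_offset(offset_bytes: int, chunk_kib: int = 512) -> int:
    """Logical chunk number that a byte offset in /dev/md0 falls into."""
    return offset_bytes // (chunk_kib * 1024)


def usable_kib(raid_disks: int, dev_size_kib: int, near_copies: int = 2) -> int:
    """Array size when every block is stored near_copies times."""
    return raid_disks * dev_size_kib // near_copies


for c in range(4):
    print(c, near_layout(c, 6))
print(usable_kib(6, 1953382400))  # matches "Array Size : 5860147200" above
```

One consequence under this model: a 16k write that does not cross a 512K chunk boundary lands on exactly one mirrored pair, so small random writes only spread across the array statistically.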

@Mike Andrews

Rebuild completed.

Edit 2

After the rebuild, I created a LUKS container and an XFS file system on the container.

Then I ran fio without specifying an ioengine (fio fell back to psync, as the output shows) and increased numjobs to 128:

```
fio --name=randwrite --rw=randwrite --direct=1 --bs=16k --numjobs=128 --size=10G --runtime=60 --group_reporting
```

```
randw: (g=0): rw=randwrite, bs=(R) 16.0KiB-16.0KiB, (W) 16.0KiB-16.0KiB, (T) 16.0KiB-16.0KiB, ioengine=psync, iodepth=1
...
fio-3.1
Starting 128 processes
randw: Laying out IO file (1 file / 10240MiB)
Jobs: 128 (f=128): [w(128)][100.0%][r=0KiB/s,w=1432MiB/s][r=0,w=91.6k IOPS][eta 00m:00s]
randw: (groupid=0, jobs=128): err= 0: pid=17759: Wed Oct 21 04:02:36 2020
  write: IOPS=103k, BW=1615MiB/s (1693MB/s)(94.9GiB/60148msec)
    clat (usec): min=96, max=6186.3k, avg=1231.81, stdev=10343.03
     lat (usec): min=97, max=6186.3k, avg=1232.92, stdev=10343.03
    clat percentiles (usec):
     | 1.00th=[ 898], 5.00th=[ 930], 10.00th=[ 955], 20.00th=[ 971],
     | 30.00th=[ 996], 40.00th=[ 1012], 50.00th=[ 1020], 60.00th=[ 1037],
     | 70.00th=[ 1057], 80.00th=[ 1090], 90.00th=[ 1827], 95.00th=[ 2024],
     | 99.00th=[ 2147], 99.50th=[ 2245], 99.90th=[ 9634], 99.95th=[ 16188],
     | 99.99th=[274727]
   bw ( KiB/s): min= 32, max=16738, per=0.80%, avg=13266.43, stdev=3544.46, samples=15038
   iops : min= 2, max= 1046, avg=828.56, stdev=221.45, samples=15038
  lat (usec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.02%, 1000=34.71%
  lat (msec) : 2=59.09%, 4=6.03%, 10=0.05%, 20=0.05%, 50=0.01%
  lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%
  lat (msec) : 2000=0.01%, >=2000=0.01%
  cpu : usr=0.31%, sys=2.33%, ctx=6292644, majf=0, minf=1308
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwt: total=0,6216684,0, short=0,0,0, dropped=0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
  WRITE: bw=1615MiB/s (1693MB/s), 1615MiB/s-1615MiB/s (1693MB/s-1693MB/s), io=94.9GiB (102GB), run=60148-60148msec

Disk stats (read/write):
  dm-0: ios=3/6532991, merge=0/0, ticks=0/7302772, in_queue=7333424, util=98.56%, aggrios=3/6836535, aggrmerge=0/0, aggrticks=0/0, aggrin_queue=0, aggrutil=0.00%
  md0: ios=3/6836535, merge=0/0, ticks=0/0, in_queue=0, util=0.00%, aggrios=0/2127924, aggrmerge=0/51503, aggrticks=0/102167, aggrin_queue=21846, aggrutil=32.64%
  nvme0n1: ios=0/2131196, merge=0/51420, ticks=0/110120, in_queue=25668, util=29.16%
  nvme3n1: ios=0/2127405, merge=0/51396, ticks=0/96844, in_queue=19064, util=22.12%
  nvme2n1: ios=1/2127405, merge=0/51396, ticks=0/102132, in_queue=22128, util=25.15%
  nvme5n1: ios=2/2125172, merge=0/51693, ticks=0/92864, in_queue=17464, util=20.39%
  nvme1n1: ios=0/2131196, merge=0/51420, ticks=0/116220, in_queue=28492, util=32.64%
  nvme4n1: ios=0/2125172, merge=0/51693, ticks=0/94824, in_queue=18264, util=20.72%
```
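Putting the cryptsetup benchmark from Edit 1 next to this result gives a rough estimate of the encryption work involved; note that 1615 MiB/s here is within about 1% of the aes-xts 256b encryption figure of 1630.3 MiB/s. The sketch assumes the container uses aes-xts with a 256-bit key (`cryptsetup luksDump` on the array shows the actual cipher and key size) and that the benchmark figure approximates what one core can encrypt. Under those assumptions, 103k writes/s of 16 KiB need roughly one core's worth of AES. That fits, but does not prove, a stage that is serialized on a single CPU; a per-CPU view such as `mpstat -P ALL 1` during the run could confirm or rule it out.

```python
BS_KIB = 16                 # fio --bs=16k
AES_XTS_256_MIB_S = 1630.3  # cryptsetup benchmark, aes-xts 256b, encryption column
LUKS_WRITE_IOPS = 103_000   # fio result above for md0 + LUKS + XFS


def cipher_seconds_per_io(bs_kib: float, cipher_mib_s: float) -> float:
    """Time for one core to encrypt one block at the benchmarked cipher rate."""
    return (bs_kib / 1024) / cipher_mib_s


def cores_spent_encrypting(iops: float, bs_kib: float, cipher_mib_s: float) -> float:
    """Equivalent number of fully busy cores needed just for encryption."""
    return iops * cipher_seconds_per_io(bs_kib, cipher_mib_s)


print(f"{cipher_seconds_per_io(BS_KIB, AES_XTS_256_MIB_S) * 1e6:.1f} us per 16 KiB write")
print(f"{cores_spent_encrypting(LUKS_WRITE_IOPS, BS_KIB, AES_XTS_256_MIB_S):.2f} cores")
```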

Then I unmounted the file system, removed the LUKS container, and ran mkfs.xfs -f /dev/md0. It seemed to hang, but eventually completed. Then I ran the same test again:

```
Jobs: 128 (f=128): [w(128)][100.0%][r=0KiB/s,w=2473MiB/s][r=0,w=158k IOPS][eta 00m:00s]
randw: (groupid=0, jobs=128): err= 0: pid=13910: Wed Oct 21 07:48:59 2020
  write: IOPS=276k, BW=4314MiB/s (4523MB/s)(253GiB/60003msec)
    clat (usec): min=23, max=853750, avg=460.62, stdev=2832.50
     lat (usec): min=24, max=853751, avg=461.24, stdev=2832.50
    clat percentiles (usec):
     | 1.00th=[ 42], 5.00th=[ 48], 10.00th=[ 53], 20.00th=[ 61],
     | 30.00th=[ 68], 40.00th=[ 77], 50.00th=[ 88], 60.00th=[ 102],
     | 70.00th=[ 131], 80.00th=[ 693], 90.00th=[ 1762], 95.00th=[ 2180],
     | 99.00th=[ 2671], 99.50th=[ 2868], 99.90th=[ 4817], 99.95th=[ 6980],
     | 99.99th=[21890]
   bw ( KiB/s): min= 1094, max=48449, per=0.78%, avg=34643.43, stdev=7669.85, samples=15360
   iops : min= 68, max= 3028, avg=2164.78, stdev=479.37, samples=15360
  lat (usec) : 50=7.27%, 100=51.59%, 250=16.09%, 500=3.16%, 750=2.39%
  lat (usec) : 1000=2.11%
  lat (msec) : 2=10.08%, 4=7.16%, 10=0.12%, 20=0.03%, 50=0.01%
  lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%
  cpu : usr=0.66%, sys=10.31%, ctx=17040235, majf=0, minf=1605
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwt: total=0,16565027,0, short=0,0,0, dropped=0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
  WRITE: bw=4314MiB/s (4523MB/s), 4314MiB/s-4314MiB/s (4523MB/s-4523MB/s), io=253GiB (271GB), run=60003-60003msec

Disk stats (read/write):
  md0: ios=1/16941906, merge=0/0, ticks=0/0, in_queue=0, util=0.00%, aggrios=0/5682739, aggrmerge=0/2473, aggrticks=0/1218564, aggrin_queue=1186133, aggrutil=74.38%
  nvme0n1: ios=0/5685248, merge=0/2539, ticks=0/853448, in_queue=796840, util=66.08%
  nvme3n1: ios=0/5681945, merge=0/2474, ticks=0/1807992, in_queue=1812712, util=74.38%
  nvme2n1: ios=1/5681946, merge=0/2476, ticks=0/772512, in_queue=718264, util=63.36%
  nvme5n1: ios=0/5681023, merge=0/2406, ticks=0/1339628, in_queue=1300048, util=70.97%
  nvme1n1: ios=0/5685248, merge=0/2539, ticks=0/1361944, in_queue=1329024, util=70.38%
  nvme4n1: ios=0/5681029, merge=0/2406, ticks=0/1175864, in_queue=1159912, util=66.80%
```
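Both Edit 2 runs used 128 synchronous jobs at iodepth=1, so at most 128 writes are in flight at any moment. Little's law (throughput = in-flight I/Os / mean latency) then predicts each run's IOPS from its average latency alone. A quick check with the numbers from the two outputs: both predictions land within about 1% of what fio reported, which suggests the LUKS run loses throughput because each write takes longer (about 1.23 ms versus 0.46 ms on average), not because fewer writes are in flight. With concurrency fixed at 128, any per-write latency added by a layer in the stack translates directly into lost IOPS in this test.

```python
def littles_law_iops(outstanding_ios: int, avg_latency_us: float) -> float:
    """Throughput implied by Little's law: in-flight I/Os divided by mean latency."""
    return outstanding_ios / (avg_latency_us / 1e6)


OUTSTANDING = 128 * 1  # numjobs=128, psync engine at iodepth=1

runs = {
    # name: (mean "lat" in usec from fio, IOPS reported by fio)
    "md0 + LUKS + XFS": (1232.92, 103_000),
    "md0 + XFS": (461.24, 276_000),
}

for name, (lat_us, reported) in runs.items():
    predicted = littles_law_iops(OUTSTANDING, lat_us)
    print(f"{name}: Little's law gives {predicted:,.0f} IOPS, fio reported ~{reported:,}")

print(f"LUKS / plain bandwidth: {1615 / 4314:.1%}")  # BW in MiB/s from the two runs
```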