Preliminary note

I'd like to second @PossiblyUsefulProbablyNot's definitely useful answer.

tldr; The real answer is probably "more RAM"

Especially this point.

Caveat

Not so much of an admin per sé.

More of a software engineering perspective, maybe.

No alternative to measurement

What we know

So, the machine is

- going to run an (Enterprise?) Java-based backend-application of sorts

- publicly (within some sizeable context, anyway) expose an HTTP API handling client requests

- presumably with some form of Database attached

- is otherwise described as not very much I/O-bound

- does not rely on the availability, latency or throughput of 3rd party services

Not all that vague a picture, the OP is painting. But at the same time far from adequate enough data to give an answer pertaining to the OPs individual situation.

Sure, 32 cores at 2/3 the clock speed is likely to perform better than 1/4 of the cores at comparatively small a speed advantage. Sure, heat generated doesn't scale well with clock speeds above the 4GHz threshold. And sure, if I'd have to blindly have to put my eggs in one basket, I'd pick the 32 cores any day of the week.

What we don't know

Way too much, still.

However, beyond these simple truths, I'd be very skeptical of an hypothetical attempt at a more concrete and objective answer. Iff it is at possible (and you have ample reason to remain convinced about ops per unit time being a valid concern), get your hands on the hardware you intend to run the system on, measure and test it, end-to-end.

An informed decision involves relevant and believable data.

OP wrote:

RAM is not important

In the vast majority of cases, memory is the bottleneck.

Granted, the OP is primarily asking about CPU cores vs. clock speed and thus memory appears on the fringes of being off-topic.

I don't think it is, though. To me, it appears much more likely the question if based on a false premise. Now, don't get me wrong, @OP, your question is on-topic, well phrased and your concern obviously real. I am simply not convinced that the answer to which CPU would perform "better" in your use-case is at all relevant (to you).

Why memory matters (to the CPU)

Main memory is excruciatingly slow.

Historically, as compared to the hard drive, we tend to think of RAM as "the fast type of storage". In the context of that comparison, it still holds true. However, over the course of the recent decades, processor speeds have consistently grown at significantly more rapid a rate than has the performance of DRAM. This development over time has led to what is commonly known as the "Processor-Memory-Gap".

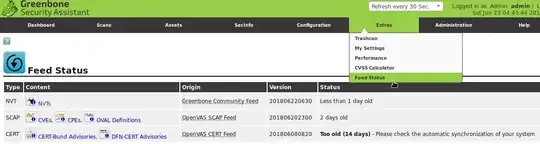

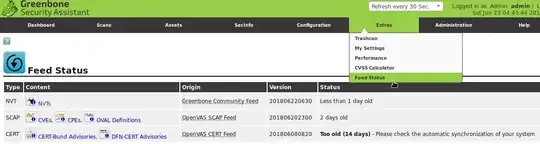

The Gap between Processor and Memory Speeds

(source: Carlos Carvalho, Departamento de Informática, Universidade do Minho)

Fetching a cache line from main memory into a CPU register occupies roughly ~100 clock cycles of time. During this time, your operating system will report one of the two hardware threads in one of the 4 (?) cores of your x86 architecture as busy.

As far as the availability of this hardware thread is concerned, your OS ain't lying, it is busy waiting. However, the processing unit itself, disregarding the cache line that is crawling towards it, is de facto idle.

No instructions / operations / calculations performed during this time.

+----------+---------------+---------------------------------------------------------------------------------------------------+

| Type of | size of | Latency due to fetching a cache line |

| mem / op | cache +--------+--------+------------+--------------------------------------------------------------------+

| | (register) | clock | real | normalized | now I feel it |

| | | cycles | time | | |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

| tick | 16KB | 1 | 0.25ns | 1s | Dinner is already served. Sit down, enjoy. |

| | *the* 64 Bits | | | | |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

| L1 | 64KB | 4 | 1ns | 4s | Preparations are done, food's cooking. |

| | | | | | Want a cold one to bridge the gap? |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

| L2 | 2048KB | 11 | ~3ns | 12s | Would you be so kind as to help me dice the broccoli? |

| | | | | | If you want a beer, you will have to go to the corner store. |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

| L3 | 8192KB | 39 | ~10ns | 40s | The car is in the shop, you'll have to get groceries by bike. |

| | | | | | Also, food ain't gonna cook itself, buddy. |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

| DRAM | ~20GB | 107 | ~30ns | 2min | First year of college. First day of the holiday weekend. |

| | | | | | Snow storm. The roommate's are with their families. |

| | | | | | You have a piece of toast, two cigarettes and 3 days ahead of you. |

+----------+---------------+--------+--------+------------+--------------------------------------------------------------------+

Latency figures of the Core-i7-9XX series chips (source: Scott Meyers, 2010)

Bottom line

If proper measurement is not an option, rather than debating cores vs. clock speed, the safest investment for excess hardware budget is in CPU cache size.

So, if memory is regularly keeping individual hardware threads idle, surely more ~cow bell~ cores is the solution?

In theory, if software was ready, multi/hyper-threading could be fast

Suppose you are looking at you tax returns (e.g.) of the last few years, say 8 years of data in total. You are holding 12 monthly values (columns) per year (row).

Now, a byte can hold 256 individual values (as its 8 individual binary digits, may assume 2 states each, which results in 8^2 = 256 permutations of distinct state. Regardless of the currency, 256 feels a little on the low end to be able to represent the upper boundary of salary figures. Further, for the sake of argument, let's assume the smallest denomination ("cents") to not matter (everybody earns whole integer values of the main denomination). Lastly suppose the employer is aware of the salary gap between upper management and the regular workforce and hence keeps those selected few in an entirely different accounting system altogether.

So, in this simplified scenario, let's assume that twice the aforementioned amount of memory space, i.e. 2 byte (or a "halfword"), when used in unsigned form, i.e. representing the range from [0, 2^16 = 65536), suffices to express all employee's monthly salary values.

So in the language / RDBS / OS of your choice, you are now holding a matrix (some 2-dimensional data structure, a "list of lists") with values of uniform data size (2-byte / 16 Bit).

In, say C++, that would be a std::vector<std::vector<uint16_t>>. I am guessing you'd use a vector of vector of short in Java as well.

Now, here's the prize question:

Say you want to adjust the values for those 8 years for inflation (or some other arbitrary reason to write to the address space). We are looking at a uniform distribution of 16 Bit values. You will need to visit every value in the matrix once, read it, modify it, and then write it to the address space.

Does it matter how you go about traversing the data?

The answer is: yes, very much so.

If you iterate over the rows first (the inner data structure), you will get near perfect scalability in a concurrent execution environment. Here, an extra thread and hence half the data in one and the other half in the other will run you job twice as fast. 4 threads? 4 times the performance gain.

If however you choose to do the columns first, two threads will run your task significantly slower. You will need approx 10 parallel threads of execution to only to mitigate (!) the negative effect that the choice of major traversal direction just had. And as long as your code ran in a single thread of execution, you couldn't have measured a difference.

+------+------+------+------+------+------+------+

| Year | Jan | Feb | Mar | Apr | ... | Dec |

+------+------+------+------+------+------+------+

| 2019 | 8500 | 9000 | 9000 | 9000 | 9000 | 9000 | <--- contiguous in memory

+------+------+------+------+------+------+------+

| 2018 | 8500 | 8500 | 8500 | 8500 | 8500 | 8500 | <--- 12 * 16Bit (2Byte)

+------+------+------+------+------+------+------+

| 2017 | 8500 | 8500 | 8500 | 8500 | 8500 | 8500 | <--- 3 * (4 * 16Bit = 64Bit (8Byte)

+------+------+------+------+------+------+------+

| ... | 8500 | 7500 | 7500 | 7500 | 7500 | 7500 | <--- 3 cache lines

+------+------+------+------+------+------+------+

| 2011 | 7500 | 7200 | 7200 | 7200 | 7200 | 7200 | <--- 3 lines, likely from the same

+------+------+------+------+------+------+------+ virtual memory page, described by

the same page block.

The OP wrote:

a) a CPU with 32 cores and clock speed 2.5 Ghz

or

b) a CPU with 8 cores but clock speed of 3.8 Ghz

All else being equal:

--> Consider cache size, memory size, the hardware's speculative pre-fetching capabilities and running software that can actually leverage parallelisation all more important than clock speed.

--> Even without reliance on 3rd party distributed systems, make sure you truly aren't I/O bound under production conditions. If you must have the hardware in-house and can't let AWS / GCloud / Azure / Heroku / Whatever-XaaS-IsHipNow deal with that pain, spend on the SSDs you put your DB on. While you do not want to have the database live on the same physical machine as does your application, make sure the network distance (measure latency here too) is as short as possible.

--> The choice of a renowned, vetted, top-of-the-line, "Enterprise-level" HTTP Server Library that is beyond the shadow of any doubt built for concurrency, does not alone suffice. Make sure any 3rd party libraries you run in your routes are. Make sure your in-house code is as well.

VMs or cloud solutions are not an option in this case

This I get.

Various valid reasons exist.

it has to be a physical machine [...]

[...] CPU with 32 cores and clock speed 2.5 Ghz

But this not so much.

Neither AWS nor Azure invented distributed systems, micro-clustering or load balancing.

It's more painful to setup on bare metal hardware and without MegaCorp-style resources, but you can run a distributed mesh of K8 clusters right in your own living room. And tooling for recurring health checks and automatic provisioning on peak load exists for self-hosted projects too.

OP wrote:

RAM is not important

Here's a ~hypothetical~ reproducible scenario:

Enable zram as your swapspace, because, RAM is cheap and not important and all that. Now run a steady, memory-intensive task that doesn't result in frequent paging exactly. When you have reached the point of serious LRU inversion, your fan will get loud and your CPU cores hot - because it is busy dealing with memory management (moving crap in and out of swap).

OP wrote:

RAM is not important

In case I haven't expressed myself clearly enough:

I think you should reconsider this opinion.

TL;DR?

32 cores.

More is better.