Security is like an onion - it's all about layers, stinky ogre-like layers.

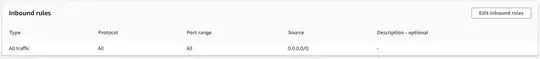

By allowing SSH connections from everywhere you've removed one layer of protection and are now depending solely on the SSH key. Key-based authentication is thought to be secure at this time, but a flaw could be discovered in the future that weakens or removes that layer too.

And when there are no more layers, you have nothing left.

A quick layer to add is fail2ban or similar. These daemons monitor your auth.log file and, as SSH connections fail, add the offending source IPs to an iptables chain for a while. This reduces the number of times a client can attempt connections per hour or day. I end up blacklisting bad sources indefinitely - but hosts that have to leave SSH listening promiscuously might still get 3000 failed root login attempts a day. Most are from China, with Eastern Europe and Russia close behind.
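To make the idea concrete, here is a minimal Python sketch of the counting step such a daemon performs. It is not fail2ban's actual implementation: the regular expression assumes the usual OpenSSH "Failed password" / "Invalid user" log lines, `ips_to_ban` and `max_retry` are names made up for this illustration, and the time window and ban expiry that a real tool applies are left out.

```python
import re
from collections import Counter

# Assumed log format: typical OpenSSH failure lines as they appear in auth.log.
# IPv4 only, to keep the sketch short.
FAILED = re.compile(
    r"sshd\[\d+\]: (?:Failed password|Invalid user) .* from (\d{1,3}(?:\.\d{1,3}){3})"
)


def ips_to_ban(log_lines, max_retry=5):
    """Return the source IPs with at least max_retry failed SSH attempts."""
    failures = Counter()
    for line in log_lines:
        match = FAILED.search(line)
        if match:
            failures[match.group(1)] += 1
    return sorted(ip for ip, count in failures.items() if count >= max_retry)


if __name__ == "__main__":
    # Hypothetical path; on some distributions sshd logs elsewhere.
    with open("/var/log/auth.log", errors="replace") as handle:
        for ip in ips_to_ban(handle):
            print(ip)
```

The addresses it prints are the ones a real daemon would push into its firewall chain and remove again once the ban time expires.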

If you have static source IPs, then allowing only those in your Security Group policy is good: the rest of the world can't connect at all. The downside is what happens when you can't come from an authorised IP for some reason, say because your ISP hands out dynamic addresses or your usual link is down.
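As a rough sketch of what restricting the Security Group looks like in code, the Python below builds the `IpPermissions` structure that boto3's `authorize_security_group_ingress` call takes, allowing TCP port 22 only from the sources you list. The helper names, the description text, and the example addresses and group ID are assumptions for illustration; it handles IPv4 only and needs the third-party `boto3` package plus AWS credentials to actually apply anything.

```python
import ipaddress


def ssh_ingress_rules(allowed_sources, port=22):
    """Build an IpPermissions list allowing TCP `port` only from the given IPv4 sources."""
    ranges = []
    for source in allowed_sources:
        # ip_network() rejects malformed input; a bare address becomes a /32.
        network = ipaddress.ip_network(source)
        if network.version != 4:
            raise ValueError("this sketch handles IPv4 sources only")
        ranges.append({"CidrIp": str(network), "Description": "SSH from trusted address"})
    return [{"IpProtocol": "tcp", "FromPort": port, "ToPort": port, "IpRanges": ranges}]


def apply_rules(group_id, allowed_sources):
    """Add the rules to an existing security group (requires boto3 and credentials)."""
    import boto3  # imported here so the builder above works without boto3 installed

    ec2 = boto3.client("ec2")
    ec2.authorize_security_group_ingress(
        GroupId=group_id,
        IpPermissions=ssh_ingress_rules(allowed_sources),
    )
```

Calling `apply_rules("sg-0123456789abcdef0", ["203.0.113.4", "198.51.100.0/24"])` with your own group ID and addresses would add the rules. Remember to remove any existing rule that allows port 22 from 0.0.0.0/0, otherwise the restriction has no effect.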

A reasonable solution is to run a VPN server on your instance, listening to all source IPs, and then once the tunnel is up, connect over the tunnel via SSH. Sure, it's not perfect protection, but it's one more layer in your shield of ablative armour... OpenVPN is a good candidate.

You can also leverage AWS's "Client VPN" solution, which is a managed OpenVPN service providing access to your VPC. I have no personal experience of this, sorry.

Another (admittedly thin) layer is to move SSH to a different port. This doesn't really do much other than reduce the script-kiddie probes that default to port 22/tcp. Anyone trying hard will scan all ports and find your SSH server on 2222/tcp or 31337/tcp or whatever.

If possible, you can investigate offering SSH over IPv6 only. Again, this merely limits the exposure without adding any real security. The number of unsolicited SSH connections on IPv6 is currently way lower than on IPv4, but still non-zero.