Boot Drive is a WD 1TB SSD running Ubuntu 18.04 LTS...

I have 2 arrays running off 1 LSI card: 16 x 8TB IronWolf inside a RaidMachine (Raid 6) [my main data], and 11 x 10TB Exos inside a SuperMicro (Raid 5) [backups].

Everything was fine, and has been fine for months. Did a minor BIOS update (Asus MB running Ryzen 1700 with OPCACHE disabled, has been rock solid). Rebooted, and the Raid6 UUID was missing. So I commented out the fstab entry that made the raid6 mount required at boot, and got into my system. Weird things are showing for the Raid6. Maybe someone has an idea what happened? And if so, how to maybe fix it? I have 60TB of data; about 50TB has been backed up onto the raid5 array, which is working fine.

My stats are as follows... (edited for clarity)

Checking the raid gives me the following... and as you will notice... it says raid0 not raid6...

/dev/md126:

Version : 1.2

Raid Level : raid0

Total Devices : 1

Persistence : Superblock is persistent

State : inactive

Working Devices : 1

Name : tbserver:0 (local to host tbserver)

UUID : 3d6402fe:8204e7c2:48b87456:8dc2cc39

Events : 650489

Number Major Minor RaidDevice

- 8 32 - /dev/sdc

When I query the only working drive... I get the following...

Personalities : [linear] [multipath] [raid6] [raid5] [raid10]

md126 : inactive sdc[16](S)

7813894488 blocks super 1.2

unused devices: <none>

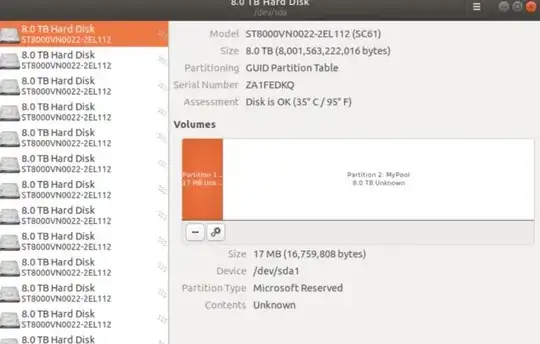

There are supposed to be 16 drives listed here. Weird. If I go into DISKS I see all 16 drives BUT here is what is weird...

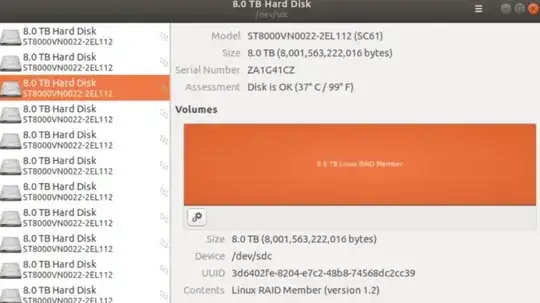

and the 1 working drive shows this...

Background... both arrays were created with mdadm. I was NOT using any kind of POOL or LVM. All the drives are the SAME size and type for each raid.

Something I noticed right off the bat was... the 15 missing drives are showing a Microsoft partition. How? This machine runs a barebones Ubuntu installation.

My FSTAB

#raid6 2019-10-24

UUID=6db81514-82d6-4e60-8185-94a56ca487fe /raid6 ext4 errors=remount-ro,acl 0 1

This worked before. The weird thing is... that UUID does NOT exist anymore and when I get details on the sdc drive... the only one that shows... it has a different UUID... maybe that's the individual drives UUID?
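A rough sketch of how the two UUID styles in this post differ. The value in fstab has the 8-4-4-4-12 shape used for a filesystem UUID (here, the ext4 filesystem that sat on top of the array), while mdadm prints its array and device UUIDs as four colon-separated groups of 8 hex digits. A filesystem UUID like that can only be found once the array is assembled and the filesystem on it is readable. The function name `classify_uuid` is made up for illustration.

```python
import re

# Filesystem-style UUID as written in fstab: 8-4-4-4-12 hex digits.
FS_UUID = re.compile(
    r"^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$", re.I
)
# mdadm-style UUID: four groups of 8 hex digits separated by colons.
MD_UUID = re.compile(r"^[0-9a-f]{8}(:[0-9a-f]{8}){3}$", re.I)


def classify_uuid(text):
    """Return 'filesystem', 'md', or 'unknown' based only on the UUID's shape."""
    value = text.strip()
    if FS_UUID.match(value):
        return "filesystem"
    if MD_UUID.match(value):
        return "md"
    return "unknown"


# The fstab entry from this post, and the UUID mdadm reports for md126.
print(classify_uuid("6db81514-82d6-4e60-8185-94a56ca487fe"))
print(classify_uuid("3d6402fe:8204e7c2:48b87456:8dc2cc39"))
```

This only looks at the format of the string; it does not query any device.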

I'm not an expert by any means. I can do quite a few things and have 40 years of computer background in programming, tinkering, etc... but I wanted to ask before I start tinkering with this... and maybe wrecking it?!?!!

Has anyone seen this happen before? Did my doing anything in the BIOS of this Asus machine cause the BIOS to write to any drives!??!?! And if so, why didn't it ruin the Raid5 array?! Beats me what happened... here is the error log I had...

In case the image is hard to read...

Jun 27 15:02:35 tbserver udisksd[1573]: Error reading sysfs attr `/sys/devices/virtual/block/md126/md/degraded': Failed to open file “/sys/devices/virtual/block/md126/md/degraded”: No such file or directory (g-file-error-quark, 4)

Jun 27 15:02:35 tbserver udisksd[1573]: Error reading sysfs attr `/sys/devices/virtual/block/md126/md/sync_action': Failed to open file “/sys/devices/virtual/block/md126/md/sync_action”: No such file or directory (g-file-error-quark, 4)

Jun 27 15:02:35 tbserver udisksd[1573]: Error reading sysfs attr `/sys/devices/virtual/block/md126/md/sync_completed': Failed to open file “/sys/devices/virtual/block/md126/md/sync_completed”: No such file or directory (g-file-error-quark, 4)

Any suggestions? Has anyone seen anything weird like this happen out of the blue? How could 15 drives get a 2nd partition? No Microsoft OS or anything has ever been booted up on this machine.

The only thing I did after the BIOS flash (before it could boot up even once) was to disable the OPCACHE for stability (old Ryzens need this). And I did turn CSM to AUTO after I tried booting a couple of times (in case I didn't have it disabled, but that wasn't it). Could turning on the CSM write anything to the disks?!??!?

I don't think anything I could do in the BIOS would ever write a bunch of data to a drive and create new partitions!??!!?

Many thanks for your time! David Perry Perry Computer Services

EDIT: I'm assuming the array is trashed. If anyone has seen this before I would be very interested to know how it happened or any info on this would be appreciated.

EDIT - update to a question asked by shodanshok.. MORE INFO - Before I had Linux installed, I did tinker with Microsoft Storage Spaces but found them garbage (slow). I fresh installed Linux on the system, and created the arrays with MDADM in Sept 2019 approx and things have been running awesome ever since up until today... so, I don't know what happened... why the storage spaces showed up "all of a sudden" when I haven't seen them in ages. All the drives in DISKS looked like sdc in the pictures above... there never was any partition1 and 2 prior to the raid failing... so, very strange...

david@tbserver:~$ sudo mdadm -E /dev/sda

/dev/sda:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

david@tbserver:~$ sudo mdadm -E /dev/sdc

/dev/sdc:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x1

Array UUID : 3d6402fe:8204e7c2:48b87456:8dc2cc39

Name : tbserver:0 (local to host tbserver)

Creation Time : Thu Oct 24 18:44:30 2019

Raid Level : raid6

Raid Devices : 16

Avail Dev Size : 15627788976 (7451.91 GiB 8001.43 GB)

Array Size : 109394518016 (104326.74 GiB 112019.99 GB)

Used Dev Size : 15627788288 (7451.91 GiB 8001.43 GB)

Data Offset : 264192 sectors

Super Offset : 8 sectors

Unused Space : before=264112 sectors, after=688 sectors

State : clean

Device UUID : bfe3008d:55deda2b:cc833698:bfac79c3

Internal Bitmap : 8 sectors from superblock

Update Time : Sat Jun 27 11:08:53 2020

Bad Block Log : 512 entries available at offset 40 sectors

Checksum : 631761e6 - correct

Events : 650489

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 1

Array State : AAAAAAAAAAAAAAAA ('A' == active, '.' == missing, 'R' == replacing)

david@tbserver:~$ sudo mdadm -D /dev/sdc

mdadm: /dev/sdc does not appear to be an md device
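As a sanity check on the superblock that survived on sdc, here is a small sketch that recomputes the sizes from the numbers in the `mdadm -E` output. It assumes 512-byte sectors, that mdadm reports Array Size in KiB, and that sdc has the same total sector count as the identical-model drive sda (15628053168 sectors, from gdisk). The helper name `raid6_array_kib` is hypothetical.

```python
SECTOR_BYTES = 512  # logical sector size, assumed from the gdisk output

RAID_DEVICES = 16
USED_DEV_SECTORS = 15627788288   # "Used Dev Size"
AVAIL_DEV_SECTORS = 15627788976  # "Avail Dev Size"
DATA_OFFSET_SECTORS = 264192     # "Data Offset"
DISK_SECTORS = 15628053168       # whole-disk size, taken from gdisk on sda


def raid6_array_kib(devices, used_sectors):
    """Usable RAID 6 capacity in KiB: two devices' worth goes to parity."""
    data_devices = devices - 2
    return data_devices * used_sectors * SECTOR_BYTES // 1024


array_kib = raid6_array_kib(RAID_DEVICES, USED_DEV_SECTORS)
print(array_kib)  # compare with "Array Size : 109394518016"

# Space left on the disk after the data offset should equal Avail Dev Size.
print(DISK_SECTORS - DATA_OFFSET_SECTORS == AVAIL_DEV_SECTORS)

# /proc/mdstat counts 1 KiB blocks; compare with "7813894488 blocks".
print(AVAIL_DEV_SECTORS * SECTOR_BYTES // 1024)
```

All three figures agree with the output above, so the metadata on sdc at least looks internally consistent for a 16-drive RAID 6.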

And for extra info - I added this...

david@tbserver:~$ sudo gdisk /dev/sda

GPT fdisk (gdisk) version 1.0.3

Partition table scan:

MBR: protective

BSD: not present

APM: not present

GPT: present

Found valid GPT with protective MBR; using GPT.

Command (? for help): p

Disk /dev/sda: 15628053168 sectors, 7.3 TiB

Model: ST8000VN0022-2EL

Sector size (logical/physical): 512/4096 bytes

Disk identifier (GUID): 42015991-F493-11E9-A163-04D4C45783C8

Partition table holds up to 128 entries

Main partition table begins at sector 2 and ends at sector 33

First usable sector is 34, last usable sector is 15628053134

Partitions will be aligned on 8-sector boundaries

Total free space is 655 sectors (327.5 KiB)

Number Start (sector) End (sector) Size Code Name

1 34 32767 16.0 MiB 0C01 Microsoft reserved ...

2 32768 15628052479 7.3 TiB 4202 MyPool
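One thing the numbers above do show is where the two sets of metadata sit on the disk. The following is only a sketch using the sector values printed by gdisk and `mdadm -E`; it assumes sdc's md layout applies to the other 15 drives and that the backup GPT occupies the last 33 sectors of the disk (everything after the last usable sector). It says nothing about what rewrote the GPT, or whether the array was recoverable.

```python
def overlaps(a_start, a_end, b_start, b_end):
    """True if the inclusive sector ranges [a_start, a_end] and [b_start, b_end] intersect."""
    return a_start <= b_end and b_start <= a_end


DISK_SECTORS = 15628053168

# From gdisk: "Main partition table begins at sector 2 and ends at sector 33".
GPT_PRIMARY_ENTRIES = (2, 33)
# Assumption: backup GPT is everything after "last usable sector" 15628053134.
GPT_BACKUP = (DISK_SECTORS - 33, DISK_SECTORS - 1)

# From mdadm -E on sdc: "Super Offset : 8 sectors".
MD_SUPERBLOCK_SECTOR = 8
# "Data Offset : 264192 sectors" plus "Used Dev Size : 15627788288" sectors.
MD_DATA = (264192, 264192 + 15627788288 - 1)

print("superblock inside primary GPT table:",
      overlaps(MD_SUPERBLOCK_SECTOR, MD_SUPERBLOCK_SECTOR, *GPT_PRIMARY_ENTRIES))
print("md data overlaps primary GPT table:", overlaps(*MD_DATA, *GPT_PRIMARY_ENTRIES))
print("md data overlaps backup GPT:", overlaps(*MD_DATA, *GPT_BACKUP))
```

With these figures the md 1.2 superblock at sector 8 falls inside the range the primary GPT partition table occupies, while the md data area does not touch either GPT copy. That would be consistent with `mdadm -E /dev/sda` finding only an MBR and no md superblock.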

UPDATE: 2020-06-27 - 9:05pm EST - What we think happened was, some corruption happened and when Ubuntu tried automatically repairing the array it found some OLD Microsoft raid partition data and somehow made them the active partitions or something. Which clobbered the entire REAL MDADM raid. The weird thing is... why 15 drives out of 16? Also, why would it do that "on its own" without asking confirmation!??!

We are zero wiping these drives right now. I'll pull my backup from June 12, 2020 - I lost about 10TB of data. I am using R-Studio to recover the deleted data off another machine to help reduce the impact of the 10TB lost. From now on I'll be backing up before any Ubuntu software updates or any BIOS updates. And even though we zero wiped the disks when I first bought them, to test for bad sectors and to "touch" the entire surface, I didn't zero wipe them again when installing Linux fresh after testing and fiddling around with Microsoft Storage Spaces, which had decent READ performance but was horrific at writing. Also, I figured MDADM is more robust... I have a feeling this is just a fluke that happened, and now that I am zeroing out these disks, this probably will never happen again. How else could those partitions "just appear" after 9 months of running Linux perfectly?!?!? It had to be some "Recovery" software built into Linux to repair the array, and it made the wrong decision because it saw some OLD structures on the drive and was confused...

It shouldn't take long... it's writing at 3.5GB/s - 207MB/s per drive approx.
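For a ballpark on the wipe time, a quick back-of-the-envelope sketch. It assumes all 16 drives are written in parallel, a constant 207 MB/s per drive, and the 8001.43 GB per-drive size from `mdadm -E`. Hard drives usually slow down toward the end of the disk, so treat the result as a lower bound. The function name `wipe_hours` is just for illustration.

```python
def wipe_hours(drive_bytes, bytes_per_second):
    """Hours to overwrite one drive once, assuming a constant write rate."""
    return drive_bytes / bytes_per_second / 3600


DRIVES = 16
DRIVE_BYTES = 8001.43e9   # 8001.43 GB per drive, from mdadm -E
PER_DRIVE_RATE = 207e6    # 207 MB/s per drive, approximate

# Combined rate across all drives, in GB/s.
aggregate_gb_s = DRIVES * PER_DRIVE_RATE / 1e9
print(f"aggregate: {aggregate_gb_s:.2f} GB/s")

# Drives are wiped in parallel, so the pass takes about as long as one drive.
print(f"one pass:  {wipe_hours(DRIVE_BYTES, PER_DRIVE_RATE):.1f} hours")
```

That works out to roughly 3.3 GB/s combined and a bit under 11 hours for a full pass under these assumptions.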

If anyone could shed some light on this I would LOVE to hear if what happened to me has happened to anyone else and if my theory on what happened is accurate.

I think in the future, as a lesson to everyone, if you play with other raids or partitions, make sure to zero wipe the OLD raid data off the drives.. it's the only explanation I can think of for what happened... I have a feeling this wouldn't have even been an issue if Linux didn't find that old Microsoft Storage spaces partition data on the drive. To be honest, I'm shocked it was still there.. I would assume that's due to the obvious thing that MDADM doesn't wipe out whatever is put there previously. My fault for taking a shortcut and not zero wiping the drives. In the future, I'll always zero wipe the entire array before re-creating any new arrays.
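To make the "old structures left on the drive" point concrete, here is a minimal, read-only sketch that looks for leftover signatures in a disk image: the MBR boot signature, the GPT header at the second sector, the backup GPT header in the last sector, and the md 1.2 superblock magic at 8 sectors in. It assumes 512-byte logical sectors (as gdisk reports here). The function names are hypothetical, and the demo runs on a small in-memory image rather than a real device.

```python
import io
import struct

SECTOR = 512                 # logical sector size, assumed
GPT_SIGNATURE = b"EFI PART"  # start of a GPT header
MD_MAGIC = 0xA92B4EFC        # "Magic : a92b4efc" in mdadm -E
MD_V12_OFFSET = 8 * SECTOR   # "Super Offset : 8 sectors"


def read_at(f, offset, length):
    """Read `length` bytes at absolute `offset` from a seekable binary file."""
    f.seek(offset)
    return f.read(length)


def find_signatures(f, size):
    """Return the names of known signatures found in an image of `size` bytes."""
    found = []
    if read_at(f, 510, 2) == b"\x55\xaa":
        found.append("mbr")
    if read_at(f, SECTOR, 8) == GPT_SIGNATURE:
        found.append("gpt-primary")
    # The backup GPT header lives in the very last sector of the disk.
    if size > 2 * SECTOR and read_at(f, size - SECTOR, 8) == GPT_SIGNATURE:
        found.append("gpt-backup")
    raw = read_at(f, MD_V12_OFFSET, 4)
    if len(raw) == 4 and struct.unpack("<I", raw)[0] == MD_MAGIC:
        found.append("md-1.2")
    return found


# Synthetic 64 KiB image carrying old GPT leftovers at both ends.
image = bytearray(64 * 1024)
image[510:512] = b"\x55\xaa"
image[SECTOR:SECTOR + 8] = GPT_SIGNATURE
image[-SECTOR:-SECTOR + 8] = GPT_SIGNATURE
print(find_signatures(io.BytesIO(bytes(image)), len(image)))

# A zero-wiped image reports nothing.
blank = bytes(64 * 1024)
print(find_signatures(io.BytesIO(blank), len(blank)))
```

The point of checking the last sector is that GPT keeps a second copy at the end of the disk, so clearing or overwriting only the start of a drive can leave old partition data behind.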

UPDATE: 2020-07-05... We are pretty sure we found out WHY the initial problem happened (the initial corruption anyways, NOT the recovery disaster). After the BIOS update, yes I know, everything was reset. I did turn off OPCACHE as expected for an old Ryzen 1700 running in Linux. It's a known bug. What happened after I rebuilt the raid was that I could copy files for hours with no problems, and then poof... rsync showed no activity... no error messages, nothing... I bumped up the SOC voltages just a BIT... and have been able to copy terabytes of data for DAYS without a single problem. So, I find it quite troubling that the "default" settings on this Asus board are not stable. The settings I changed are...

VDDCR SOC Voltage to OFFSET MODE + 0.02500

VDDCR CPU Load Line Calibration to Level 2

VDDCR SOC Load Line Calibration to Level 2

VDDCR SOC Power Phase Control to Extreme

The system is rock solid again. I have also been talking to a friend who has a 3950X running in a B350 motherboard and has had to change the same settings on his to get it to pass Prime95 in Linux.

I don't notice any extra heat coming from the system based on those voltage adjustments. I am still running an air-cooled Ryzen Wraith Prism fan, which is overkill for a 1700, but it's a beautiful and quiet fan. My memory is only Crucial DOCP at 2666MHz on the QVL.

My data recovery finally finished a couple of days ago but I thought I should share my solution to the corruption anyways. I am still concerned about leaving old raid data on the drives in the future. As mentioned above, we zero wiped the entire array so I doubt this will ever happen again.

Kudos to my long time friend Doug Gale for helping me make my system rock solid again. Without knowing about those voltage adjustments, I would have had to rebuild the system and who knows, maybe the problem would have surfaced again... I'm also using Crucial Gold Rated PSUs in case anyone is curious... :) with CyberPower Dual 1500VA Backups.