I am using systemd to run Celery, a Python distributed task scheduler, on an Ubuntu 18.04 LTS server.

I mostly have to schedule long-running tasks (each taking several minutes to execute), which, depending on the data, may end up using a large amount of RAM. I want to put some sort of safety measure in place to keep memory usage under control.

I have read here that you can limit how much RAM a systemd service uses with the `MemoryHigh` and `MemoryMax` options.
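To confirm which limits systemd actually applied to a unit, one option is to query them with `systemctl show`. This is a minimal sketch, not part of the setup described here: the unit name `celery.service` is a hypothetical placeholder, and the code targets the Python 3.6 that ships with Ubuntu 18.04 (hence `stdout=subprocess.PIPE` and `universal_newlines=True` instead of the 3.7+ `capture_output`/`text` arguments).

```python
import subprocess
from typing import Dict

# Hypothetical unit name; replace it with the name of the actual Celery unit.
UNIT = "celery.service"


def parse_show_output(text: str) -> Dict[str, str]:
    """Parse `systemctl show` output (one KEY=VALUE pair per line) into a dict."""
    props: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip blank or malformed lines
            props[key] = value
    return props


def memory_limits(unit: str = UNIT) -> Dict[str, str]:
    """Ask systemd for the MemoryHigh and MemoryMax properties of `unit`."""
    result = subprocess.run(
        ["systemctl", "show", "-p", "MemoryHigh", "-p", "MemoryMax", unit],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return parse_show_output(result.stdout)
```

On the server, `print(memory_limits())` would print the two properties as systemd reports them (a byte count, or `infinity` when no limit is set).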

I have set these options in my Celery service unit and used htop to watch what happens to the service when it reaches the given limits.

The service stops executing and is put in the "D" state (uninterruptible sleep), but it stays there and the allocated memory is not freed.
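The "D" that htop shows is the same one-letter state the kernel reports in `/proc/<pid>/status`, where D means uninterruptible sleep (usually waiting on I/O). A minimal sketch for checking it without htop, assuming a Linux `/proc` filesystem; the helper names are hypothetical:

```python
from typing import Optional


def parse_state(status_text: str) -> Optional[str]:
    """Return the one-letter state code from the contents of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("State:"):
            # The line looks like "State:\tD (disk sleep)".
            parts = line.split(":", 1)[1].split()
            return parts[0] if parts else None
    return None


def process_state(pid: int) -> Optional[str]:
    """Read the current state of `pid` from procfs."""
    with open(f"/proc/{pid}/status") as f:
        return parse_state(f.read())
```

Polling `process_state(pid)` for the Celery worker's PID should return `"D"` while it is stuck as described.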

Is it possible for systemd to kill the memory-eating process?

UPDATE

As suggested in the comments below, I have run some diagnostics; here are some further details about the problem.

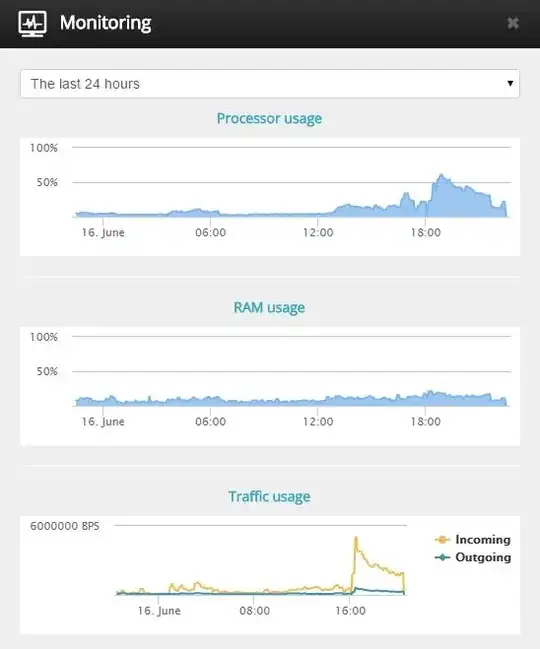

The Linux server runs on a development virtual machine hosted by Virtual Box, running just the resource consuming task.

The MemoryMax setting is set as percentage (35%).

In the picture below you can see the process status in htop
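systemd interprets a percentage value for `MemoryMax` relative to the physical memory of the machine, which inside a VirtualBox guest is the RAM assigned to the VM. The sketch below approximates the resulting byte limit for the 35% setting; the helper names are hypothetical, and systemd's own rounding may differ slightly.

```python
import os


def percent_of_physical(percent: float, total_bytes: int) -> int:
    """Approximate byte value of a percentage-style limit such as MemoryMax=35%."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return int(total_bytes * percent / 100)


def physical_memory_bytes() -> int:
    """Total physical memory as seen by this (guest) kernel: page size * page count."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
```

For example, `percent_of_physical(35, physical_memory_bytes())` gives roughly the point at which the unit hits its hard limit.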

As suggested in the answer to this question, this is the content of the suspended process's stack:

```
[<0>] __lock_page_or_retry+0x19b/0x2e0
[<0>] do_swap_page+0x5b4/0x960
[<0>] __handle_mm_fault+0x7a3/0x1290
[<0>] handle_mm_fault+0xb1/0x210
[<0>] __do_page_fault+0x281/0x4b0
[<0>] do_page_fault+0x2e/0xe0
[<0>] page_fault+0x45/0x50
[<0>] 0xffffffffffffffff
```
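Reduced to function names and read bottom to top, the trace goes page_fault → do_page_fault → __do_page_fault → handle_mm_fault → __handle_mm_fault → do_swap_page → __lock_page_or_retry, which suggests the task is waiting for a swapped-out page to be read back in. A minimal sketch that extracts those names, assuming the trace is read from `/proc/<pid>/stack` (normally readable only by root); the helper names are hypothetical:

```python
import re
from typing import List

# Matches frames like "[<0>] do_swap_page+0x5b4/0x960" and captures the symbol.
# The terminating "[<0>] 0xffffffffffffffff" line has no "+offset/size" and is skipped.
_FRAME = re.compile(r"^\[<[0-9a-f]+>\]\s+([A-Za-z0-9_.]+)\+0x[0-9a-f]+/0x[0-9a-f]+")


def stack_symbols(stack_text: str) -> List[str]:
    """Return the kernel function names in a /proc/<pid>/stack dump, top frame first."""
    symbols = []
    for line in stack_text.splitlines():
        match = _FRAME.match(line.strip())
        if match:
            symbols.append(match.group(1))
    return symbols


def read_stack(pid: int) -> str:
    """Read the kernel stack of `pid` (usually requires root)."""
    with open(f"/proc/{pid}/stack") as f:
        return f.read()
```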