Been running a couple of fio tests on a new server with the following setup:

- 1x Samsung PM981a 512GB M.2 NVMe drive.

- Proxmox installed with ZFS on root.

- 1x VM with 30GB space created and Debian 10 installed.

- 6x Intel P4510 2TB U.2 NVMe drives connected to 6x dedicated PCIe 4.0 x4 lanes with OCuLink.

- Directly attached to the single VM.

- Configured as RAID10 in the VM (3x mirrors striped).

- Motherboard / CPU / memory: ASUS KRPA-U16 / EPYC 7302P / 8x32GB DDR4-3200

The disks are rated up to 3,200 MB/s sequential reads. From a theoretical point of view that should give a max bandwidth of 19.2 GB/s.

Running fio with numjobs=1 on the ZFS RAID I'm getting results in the range ~2,000 - 3,000 MB/s (the disks are capable of the full 3,200 MB/s when testing without ZFS or any other overhead, for example, while running Crystal Disk Mark in Windows installed directly on one of the disks):

fio --name=Test --size=100G --bs=1M --iodepth=8 --numjobs=1 --rw=read --filename=fio.test

=>

Run status group 0 (all jobs):

READ: bw=2939MiB/s (3082MB/s), 2939MiB/s-2939MiB/s (3082MB/s-3082MB/s), io=100GiB (107GB), run=34840-34840msec

Seems reasonable everything considered. Might also be CPU limited as one of the cores will be sitting on 100% load (with some of that spent on ZFS processes).

When I increase numjobs to 8-10 things get a bit weird though:

fio --name=Test --size=100G --bs=1M --iodepth=8 --numjobs=10 --rw=read --filename=fio.test

=>

Run status group 0 (all jobs):

READ: bw=35.5GiB/s (38.1GB/s), 3631MiB/s-3631MiB/s (3808MB/s-3808MB/s), io=1000GiB (1074GB), run=28198-28199msec

38.1 GB/s - well above the theoretical maximum bandwidth.

What exactly is the explanation here?

Additions for comments:

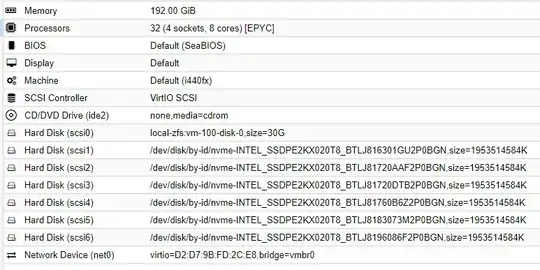

VM configuration:

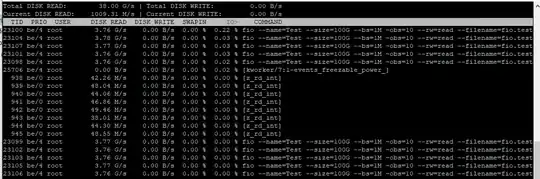

iotop during test: