You've got a couple of options...

Longer session duration

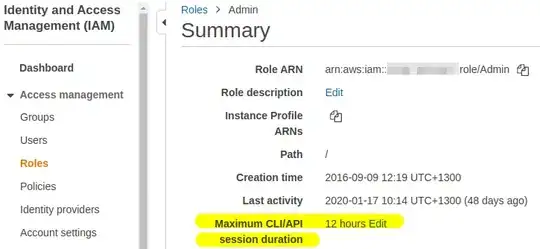

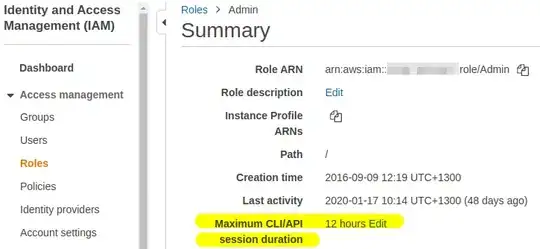

You can set the maximum session duration to as much as 12 hours; that may be enough for your long-running tasks.

I'm not sure how you're obtaining your temporary credentials, but you may have to request a 12-hour session duration there as well, because some tools request tokens valid for only 1 hour by default.

Also check out the get-credentials script, which may simplify your workflow. I don't know exactly what you're doing now, but writing temporary credentials to ~/.aws/credentials is usually not best practice.
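Assuming you get your temporary credentials by assuming an IAM role yourself, here's a minimal boto3 sketch of requesting the full 12 hours and printing the result as shell `export` lines instead of writing it to ~/.aws/credentials. The function name, role ARN and session name are placeholders; `DurationSeconds` can't exceed the role's maximum session duration, and the MFA parameters are only needed if the role's trust policy requires MFA.

```python
from typing import Optional

TWELVE_HOURS = 12 * 60 * 60  # 43200 s, the highest maximum session duration a role can have


def assume_role_exports(
    sts_client,
    role_arn: str,
    session_name: str,
    duration_seconds: int = TWELVE_HOURS,
    mfa_serial: Optional[str] = None,
    mfa_code: Optional[str] = None,
) -> str:
    """Assume `role_arn` and return shell export lines for the temporary credentials.

    Hypothetical helper: `sts_client` is a boto3 STS client (or anything with the
    same assume_role method). STS rejects the call if `duration_seconds` exceeds
    the role's maximum session duration.
    """
    params = {
        "RoleArn": role_arn,
        "RoleSessionName": session_name,
        "DurationSeconds": duration_seconds,
    }
    if mfa_serial:
        # Only needed when the role's trust policy requires MFA.
        params["SerialNumber"] = mfa_serial
        params["TokenCode"] = mfa_code
    creds = sts_client.assume_role(**params)["Credentials"]
    return "\n".join(
        [
            f'export AWS_ACCESS_KEY_ID="{creds["AccessKeyId"]}"',
            f'export AWS_SECRET_ACCESS_KEY="{creds["SecretAccessKey"]}"',
            f'export AWS_SESSION_TOKEN="{creds["SessionToken"]}"',
        ]
    )
```

You'd call it as `assume_role_exports(boto3.client("sts"), "arn:aws:iam::123456789012:role/MyUploadRole", "long-upload")` (placeholder ARN) and paste or `eval` the printed lines in your shell, so nothing long-lived lands on disk.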

Copy to EC2 first

If you can’t increase the max duration setting, you may be able to work around the limitation by:

1. first copying the data to an EC2 instance, e.g. using rsync;
2. then uploading it from EC2 to S3, taking advantage of the EC2 instance role, whose credentials are renewed automatically.

Besides, copying from EC2 to S3 may be faster.
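On the EC2 side you shouldn't need to handle credentials at all: boto3's default credential chain picks up the instance role from the instance metadata service and refreshes it before it expires (`aws s3 cp` behaves the same way). A minimal sketch, assuming boto3 is installed on the instance, no other credentials are configured there, and the role allows writing to the target bucket; the function name, bucket and key are placeholders.

```python
def upload_from_ec2(path: str, bucket: str, key: str, s3_client=None) -> None:
    """Upload `path` to s3://bucket/key with whatever credentials boto3 finds.

    Hypothetical helper. On an EC2 instance with an instance role and nothing else
    configured, boto3 uses the role's credentials and refreshes them automatically.
    """
    if s3_client is None:
        import boto3  # imported lazily so the function can be exercised with a stub

        s3_client = boto3.client("s3")
    # upload_file switches to a multipart upload for large files on its own.
    s3_client.upload_file(path, bucket, key)
```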

Use EC2 credentials locally

You can also "steal" the EC2 role credentials and use them locally. Check out this get-instance-credentials script.

    [ec2-user@ip-... ~] ./get-instance-credentials
    export AWS_ACCESS_KEY_ID="ASIA5G7...R3KG5"
    export AWS_SECRET_ACCESS_KEY="bzkNi/9YV...FDzzd0"
    export AWS_SESSION_TOKEN="IQoJb3JpZ2luX2VjEKf....PUtXw=="

These creds are usually good for 6 hours.

Now copy and paste those lines to your local non-EC2 machine.

    user@server ~ $ export AWS_ACCESS_KEY_ID="ASIA5G7...R3KG5"
    user@server ~ $ export AWS_SECRET_ACCESS_KEY="bzkNi/9YV...FDzzd0"
    user@server ~ $ export AWS_SESSION_TOKEN="IQoJb3JpZ2luX2VjEKf....PUtXw=="

And verify that the credentials work:

    user@server ~ $ aws sts get-caller-identity
    {
        "UserId": "AROAIA...DNG:i-abcde123456",
        "Account": "987654321098",
        "Arn": "arn:aws:sts::987654321098:assumed-role/EC2-Role/i-abcde123456"
    }

As you can see, your local non-EC2 server now has the same privileges as the EC2 instance you retrieved the credentials from, at least until those credentials expire.
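If you'd rather not depend on the get-instance-credentials script, here's a rough Python sketch of the same idea (not that script's actual code): on the instance, ask the instance metadata service (IMDSv2) for the attached role's name and its current credentials, then print them as export lines. It only works on an EC2 instance with a role attached; the function names are made up for illustration.

```python
import json
import urllib.request

IMDS = "http://169.254.169.254/latest"


def _imds_get(path: str, token: str) -> str:
    """GET a metadata path, sending the IMDSv2 session token."""
    req = urllib.request.Request(f"{IMDS}/{path}", headers={"X-aws-ec2-metadata-token": token})
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read().decode()


def fetch_instance_credentials() -> dict:
    """Return the instance role's current credentials (parsed JSON from IMDS)."""
    # IMDSv2: obtain a session token with a PUT first, then send it with every GET.
    token_req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "21600"},
    )
    with urllib.request.urlopen(token_req, timeout=2) as resp:
        token = resp.read().decode()
    # Listing this path returns the name of the role attached to the instance.
    role = _imds_get("meta-data/iam/security-credentials/", token).strip()
    return json.loads(_imds_get(f"meta-data/iam/security-credentials/{role}", token))


def to_exports(creds: dict) -> str:
    """Format IMDS credentials (AccessKeyId, SecretAccessKey, Token) as export lines."""
    return "\n".join(
        [
            f'export AWS_ACCESS_KEY_ID="{creds["AccessKeyId"]}"',
            f'export AWS_SECRET_ACCESS_KEY="{creds["SecretAccessKey"]}"',
            f'export AWS_SESSION_TOKEN="{creds["Token"]}"',
        ]
    )
```

On the instance you'd run `print(to_exports(fetch_instance_credentials()))`. The same JSON also has an `Expiration` field, which tells you when to fetch a fresh set.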

Use aws CLI to multipart-upload the file

You can split your large file into smaller chunks (see the split man page) and upload them with the aws s3api multipart-upload sub-commands: aws s3api create-multipart-upload, upload-part and complete-multipart-upload. You can refresh the credentials between parts and retry any failed parts if your credentials expire halfway through.
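Before splitting, it helps to pick a part size that respects S3's multipart limits: every part except the last must be at least 5 MiB, and an upload can have at most 10,000 parts. Here's a small sketch that computes the (part number, offset, length) ranges you'd feed to `aws s3api upload-part` one by one; the function name and the 100 MiB default are arbitrary choices, not anything the CLI requires.

```python
MIN_PART = 5 * 1024 * 1024  # S3 minimum for every part except the last
MAX_PARTS = 10_000          # S3 maximum number of parts per upload


def plan_parts(file_size: int, part_size: int = 100 * 1024 * 1024):
    """Return (part_number, offset, length) tuples covering `file_size` bytes.

    The part size is raised to MIN_PART if it is smaller, and grown further if
    the file would otherwise need more than MAX_PARTS parts.
    """
    part_size = max(part_size, MIN_PART)
    if file_size > part_size * MAX_PARTS:
        part_size = -(-file_size // MAX_PARTS)  # ceiling division
    parts = []
    offset = 0
    number = 1  # S3 part numbers start at 1
    while offset < file_size:
        length = min(part_size, file_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts
```

With a fixed part size you could equally split the file with `split -b` using that size; each resulting chunk then maps to one part number in order.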

Create a custom script

You can use the brilliant boto3 Python AWS SDK library to build your own file uploader. It should be fairly simple to write a little multipart uploader that requests new credentials whenever they expire, including prompting for an MFA code.
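Here's a minimal sketch of such an uploader. It assumes you pass in a `make_s3_client()` function (hypothetical) that obtains fresh credentials however you normally do, e.g. by calling STS with a new MFA code, and returns a new boto3 S3 client. When a part fails with an expired-credentials error, the sketch builds a new client and retries that part; parts already uploaded are kept under the same upload ID. The set of error codes treated as "expired" is my assumption, so adjust it to what you actually see.

```python
from typing import Callable

# Error codes treated as "credentials expired" (an assumption; adjust as needed).
EXPIRED_CODES = {"ExpiredToken", "ExpiredTokenException", "RequestExpired"}


def _is_expired(exc: Exception) -> bool:
    # botocore's ClientError exposes the service error code in exc.response["Error"]["Code"].
    code = getattr(exc, "response", {}).get("Error", {}).get("Code")
    return code in EXPIRED_CODES


def multipart_upload(
    path: str,
    bucket: str,
    key: str,
    make_s3_client: Callable[[], object],
    part_size: int = 100 * 1024 * 1024,  # real S3 needs >= 5 MiB except for the last part
    max_refreshes: int = 5,
) -> dict:
    """Upload `path` part by part, re-creating the client when credentials expire."""
    s3 = make_s3_client()
    upload_id = s3.create_multipart_upload(Bucket=bucket, Key=key)["UploadId"]
    parts = []
    refreshes = 0
    with open(path, "rb") as f:
        part_number = 1
        while True:
            data = f.read(part_size)
            if not data and part_number > 1:
                break  # the previous part was the last one
            while True:
                try:
                    resp = s3.upload_part(
                        Bucket=bucket, Key=key, UploadId=upload_id,
                        PartNumber=part_number, Body=data,
                    )
                    break
                except Exception as exc:
                    if not _is_expired(exc) or refreshes >= max_refreshes:
                        raise
                    refreshes += 1
                    s3 = make_s3_client()  # fresh credentials, e.g. after asking for MFA
            parts.append({"PartNumber": part_number, "ETag": resp["ETag"]})
            if len(data) < part_size:
                break  # short read: this was the last part
            part_number += 1
    return s3.complete_multipart_upload(
        Bucket=bucket, Key=key, UploadId=upload_id,
        MultipartUpload={"Parts": parts},
    )
```

If the upload fails for good, the already-uploaded parts stay in S3 (and are billed) until you either finish the upload or call `abort_multipart_upload` for that upload ID.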

As you can see, you've got many options.

Hope that helps :)