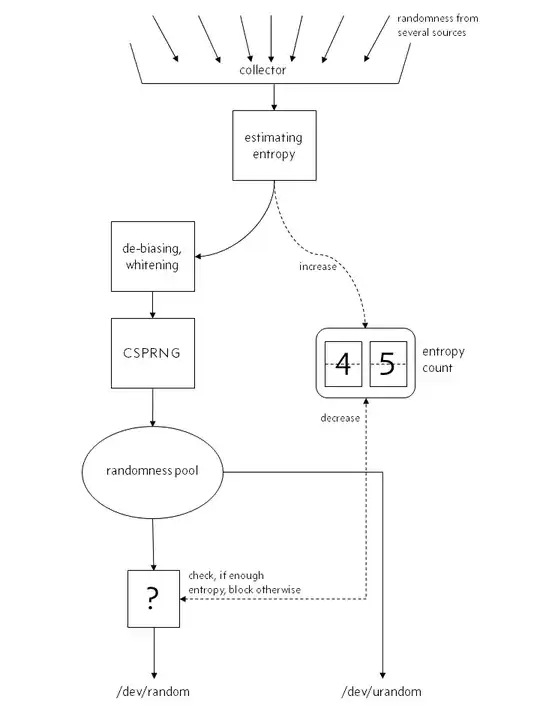

- Modern Linux systems, especially headless virtual machines, often have shallow /dev/random entropy pools, which can cause software to block or fail to run (e.g., Tripwire in FIPS mode).

- While many agree /dev/urandom is preferred, many packages simply default to /dev/random

- Pointing individual software packages at /dev/urandom may be possible, but it creates a maintenance burden (e.g., will you remember to redo the customizations after the package is updated?) or may require a recompile (and do you have the source?).
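To make "shallow" concrete, here is a minimal sketch of how the pool depth could be checked. It assumes a Linux kernel that exposes its entropy estimate at /proc/sys/kernel/random/entropy_avail; the function names and the 200-bit threshold are my own illustrative choices, not kernel constants, and newer kernels may report a value that is less informative than it was historically.

```python
from pathlib import Path

# Kernel's running estimate of available entropy, in bits (Linux-specific).
ENTROPY_AVAIL = Path("/proc/sys/kernel/random/entropy_avail")


def read_entropy_avail(path=ENTROPY_AVAIL):
    """Return the integer entropy estimate stored in the given file."""
    return int(Path(path).read_text().strip())


def pool_is_shallow(bits, threshold=200):
    """Return True if the estimate is below the threshold.

    The default threshold is an arbitrary, illustrative cutoff.
    """
    return bits < threshold


if __name__ == "__main__":
    bits = read_entropy_avail()
    print(f"entropy_avail: {bits} bits, shallow: {pool_is_shallow(bits)}")
```

Running this periodically on a headless VM, before and after starting rngd or haveged, would at least show whether the daemon changes the kernel's estimate. It says nothing about the quality of the randomness being fed in, which is the actual question here.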

So my question is: lacking a legitimate (hardware) source of entropy, is it reasonable to routinely augment /dev/random using something like rng-tools (fed from /dev/urandom) or haveged?

Is it more dangerous to have programs depending on and/or waiting on /dev/random's shallow entropy pool, or to have (arguably) less robust randomness introduced purely by software?

And when I say "routinely", I mean "all my servers, starting when booted, running all the time, just to be safe."

I'm not questioning whether /dev/urandom is sufficiently strong - as per the cites above, almost everybody agrees it's fine (well, not everybody, but still). I want to be certain that using a daemon like rngd or haveged to work randomness back into /dev/random - even if it is fed from /dev/urandom, as rngd would be in most cases - doesn't introduce weaknesses. (I'm not comfortable with just mknod'ing the problem away, for maintenance and transparency reasons.)

(While this question could be considered a dupe of "Is it safe to use rng-tools on a virtual machine?", that answer seems to run counter to the many reputable voices saying /dev/urandom is sufficient. So this is, in a sense, seeking clarification as to where the vulnerability would be introduced (if you agree with that answer) or whether one is in fact introduced (if you don't).)

(related reading - Potter and Wood's BlackHat presentation "Managing and Understanding Entropy Usage" is what got me thinking about this)