Should I change all of my online passwords due to the heartbleed bug?

Edit:

I found a list of vulnerable sites on GitHub and checked all my critical sites. Everything I was really concerned with was not vulnerable according to the list.

Short answer: Yes, all passwords.

Long answer: At first sight, only the private key of the certificate needs to be changed. But for several reasons, all passwords are affected. Here's why:

Reason 1: Chained attack

Therefore you have to at least check every single password to see whether it is affected.

Good thing about this attack vector: You'll be aware of the issue.

Reason 2: Access to database

Since you, as the user, cannot know how securely the password was stored in the database, you have to assume that the attacker has the password (and username). This is a problem if you reused the password for other services.

Bad thing: You don't know whether you are affected, because logging in to other services still works (for you and for the attacker).

Only solution: Change all passwords.
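To illustrate why the storage question in Reason 2 matters, here is a minimal sketch of one way a service might store passwords (salted PBKDF2). It is an assumption for illustration only: the function names and the iteration count are hypothetical, and as a user you cannot tell whether a given site does anything like this.

```python
import hashlib
import hmac
import os

# Hypothetical iteration count, chosen for illustration only.
ITERATIONS = 200_000


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted PBKDF2-HMAC-SHA256 hash of the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash and compare it in constant time."""
    return hmac.compare_digest(hash_password(password, salt), expected)


# What the database row would hold: a random salt and the derived hash,
# never the password itself.
salt = os.urandom(16)
stored = hash_password("correct horse", salt)

print(verify_password("correct horse", salt, stored))
print(verify_password("wrong guess", salt, stored))
```

If the site stored the password in plain text instead, a leaked database row could be replayed directly against every other service where the same password was reused. With a salted hash the attacker has to guess first, which buys time but does not make a reused password safe.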

Reason 3: Not only the certificate's secret keys are leaked

I looked at some of the data dumps from vulnerable sites, and it was ... bad. I saw emails, passwords, password hints.

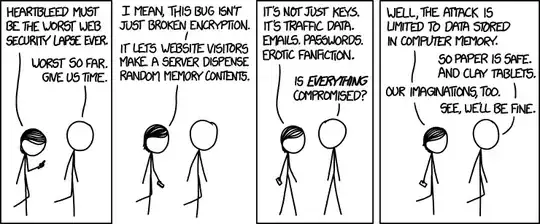

As posted by XKCD #1353:

So the attacker could already have your password in plain text even without access to the database and without chained attack.

Notes

The second problem, described by @Iszy, still remains: Wait to change your password until the service has fixed the Heartbleed issue. This is a critical chicken-and-egg issue, because you can only reliably change all your passwords once all the services you use have been updated.

No. You do not have to change all your passwords due to heartbleed. You have to change all your passwords because everybody seems to have become a huge herd of panicking sheep; changing your passwords will give you a warm feeling of doing something useful while you are running to your ultimate destination, which may well be off the nearest cliff. Such is the fate of sheep.

While you metaphorically plummet to your death, you will have time to reflect on the stupendous ability of human brains to cease to function properly right at the time when they are most needed.

Edit: OK, just to be done with it, here are some considerations expressed in a more boring way.

The heartbleed bug is serious. Nobody disputes that (well, some people have, but there always are people like that). That other answer explains at length how the bug can be leveraged to seriously compromise the security of the target server. In effect, this means that though the bug is a read-only buffer overrun, that may be sufficient to bring its consequences on par with those of a write buffer overrun (the classical kind of buffer overflow).
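For readers who want to see what "read-only buffer overrun" means here, the following is a toy model, not OpenSSL code. Process memory is simulated as one flat byte string, and all names (`MEMORY`, `heartbeat_vulnerable`, `heartbeat_patched`) are hypothetical.

```python
# Toy model of a Heartbleed-style over-read. This is NOT OpenSSL code:
# process memory is simulated as one flat bytes object, and every name
# below is hypothetical.

PAYLOAD = b"bird"                        # what the client actually sent
SECRET = b"user=alice&password=hunter2"  # unrelated data sitting next to it
MEMORY = PAYLOAD + SECRET


def heartbeat_vulnerable(claimed_len: int) -> bytes:
    """Echo back claimed_len bytes, trusting the length the client claims."""
    return MEMORY[:claimed_len]


def heartbeat_patched(claimed_len: int) -> bytes:
    """Silently drop requests whose claimed length exceeds the real payload."""
    if claimed_len > len(PAYLOAD):
        return b""
    return MEMORY[:claimed_len]


honest = heartbeat_vulnerable(len(PAYLOAD))  # well-behaved client
leaked = heartbeat_vulnerable(len(MEMORY))   # client lies about the length

print(honest)
print(leaked)
print(heartbeat_patched(len(MEMORY)))
```

Nothing in the simulated memory is modified at any point. The damage comes entirely from what the over-long read returns, which is how a read-only bug can still hand over keys or credentials.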

However, three distinct fallacies are at work in the whole heartbleed "debate" (mmh, "debate" may not be the right word; "panicking mob" would be closer to the truth). These are the following:

Fallacy 1: this bug is exceptional. This one is flatly wrong. Buffer overflows are relatively uncommon in well-managed and seasoned code, but any significant piece of C code must still have a few overflows lurking in it. In the case of a Web server, overflows and other vulnerabilities (e.g. use-after-free) in OpenSSL, but also in Apache (and its modules!) and the kernel, are relevant. Such things happen regularly, say several times per year. Not once per decade, as sensationalist articles claim.

This fallacy is dangerous because it comforts sloppy administrators in the idea that they can get away with not applying security patches on a regular basis; they believe that they can just be "reactive", doing something only when it hits the news.

Fallacy 2: we were all hacked two years ago. This one hinges on a suspension of quantification: if something is theoretically possible, just assume that it has happened, however improbable it was. This is often justified by righteous claims of "not wanting any compromise about security", which is just contrary to both best practices and reality. Every security feature has a cost which must be balanced with the expected risks and consequences. In this case, some people claim that since the bug has been there for two years, it is ob-vi-ous that it has been exploited by <insert-name-here> for two years.

It would be preposterous to claim that heartbleed is the last bug ever in the whole code stack of a server. Experience shows that there always is at least one more bug, somewhere. The consequence is that if attackers are "allowed" to be aware of bugs years before everybody else, then they are still in control of your server. If you must reset keys or passwords because of the heartbleed bug, then, by the same logic, you must do it again. And again. This is not tenable in practice. At some point, one must assume that a server may be not-attacked despite having potential vulnerabilities. The alternatives are either to use only code which has been proven to be bug-free (fat chance on that; NASA spends $20,000 per line of code to make it as free of bugs as possible, and they still have bugs), or not to operate a Web server at all (the only code without bugs is the code which does not exist).

Fallacy 3: resetting a password cleanses the server. Let's assume that you strongly believe that attackers did exploit the heartbleed bug, and obtained all your deepest secrets. What will you do about that? You reset passwords and private keys, and... that's all? Such a course of action makes sense only if the private key and the users' passwords are the only private things in the server. What kind of server is that, then? Why would passwords be needed to access a server which contains only public data?

As the other answer says, the attacker may have leveraged the bug in order to "gain access to the database". At which point the whole database contents are in his possession, not just hashed passwords; he may also have altered parts of it, and planted hostile code (e.g. backdoors to be able to come back). There is no end to the mischief that such an attacker may enact. Merely resetting passwords and changing certificate keys is like bringing a mop to deal with an excess of moisture on the floor of a Titanic cabin; it misses the point. If you really believe that a compromise may have occurred, then there is only one thing to do: nuke it from orbit. If you compromise, by just resetting the passwords, then you are demonstrating that you do not seriously believe in your own justification; you reset passwords for Public Relations, not because of an actually suspected attack.

So there you have it: ineffective countermeasures to a fantasized attack stemming from an overhyped bug (serious, but still overhyped), which comforts poor sysadmins in their bad practices. Mr Sheep, please meet Mrs Cliff. I am sure you will get along well.

Changing passwords on a site that is/was vulnerable to Heartbleed is only effective after:

All of the above being beyond your control as an end-user, it's best to just wait for confirmation from the site owner that they've fully mitigated the vulnerability.

That said, following best practices such as using complex and unique passwords for all websites would still help to lessen the impact of a password compromise. This is something you should be doing regardless of what the latest and greatest vulnerability news says. It would also help simplify your own response to issues such as this, since you will only need to change your passwords on affected sites and not all others also.
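As a rough sketch of what "complex and unique passwords for all websites" can look like in practice, the snippet below generates one random password per site with Python's standard `secrets` module. The site names, the password length, and the `vault` dictionary are illustrative assumptions, and a real password manager does considerably more than this.

```python
import secrets
import string

# Characters to draw from; the exact alphabet is an assumption and some
# sites restrict which symbols they accept.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


# Hypothetical sites; each one gets its own independent password.
sites = ["mail.example", "bank.example", "forum.example"]
vault = {site: generate_password() for site in sites}

# If only forum.example turns out to be affected (and has been patched),
# only that single entry needs to be replaced.
vault["forum.example"] = generate_password()

for site, password in vault.items():
    print(site, len(password))
```

Because no password is shared between entries, a leak at one site says nothing about the others, which is what keeps the response limited to the affected sites.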

Yes, you should always change all of your passwords.

You never know when someone might have compromised your password. And really, without any certainty that the worst hasn't happened today, the only sensible action is to take any and all measures possible to protect yourself.

The same goes for tomorrow.