Why is HTTP still commonly used instead of HTTPS, which I believe is much more secure?

-

Example: [Why aren't application downloads routinely done over HTTPS?](http://security.stackexchange.com/q/18853) – Gilles 'SO- stop being evil' Aug 30 '12 at 20:12

-

Not directly related, but an interesting take by Troy Hunt: https://www.troyhunt.com/i-wanna-go-fast-https-massive-speed-advantage/ Basically, browsers only support HTTP/2 over TLS, so using HTTPS can improve speed (if the client and server use HTTP/2). – user276648 Jul 25 '16 at 11:07

-

IMO, browsers should not accept an address without a scheme at all. If you want to find info about a domain name or an IP address, this is a pain in the ass with Chrome and other browsers that don't have a separate search field. – Oskar Skog Apr 19 '17 at 16:45

-

What does "default" mean here? Isn't it a) the configuration of a browser and b) the configuration of a web server? I think most browsers and most web servers use HTTPS by default. – Effie Sep 08 '21 at 12:36

10 Answers

SSL/TLS has a slight overhead. When Google switched Gmail to HTTPS (from an optional feature to the default setting), they found that CPU overhead was about +1% and network overhead about +2%; see this text for details. However, this is for Gmail, which consists of private, dynamic, non-shared data, and is hosted on Google's systems, which are accessible from everywhere with very low latency. The main effects of HTTPS, as compared to HTTP, are:

Connection initiation requires some extra network roundtrips. Since such connections are "kept alive" and reused whenever possible, this extra latency is negligible when a given site is used with repeated interactions (as is typical with Gmail); systems which serve mostly static contents may find the network overhead to be non-negligible.
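To put rough numbers on those extra round trips, here is a back-of-the-envelope model. The round-trip counts are the commonly quoted figures (TCP handshake 1 RTT, full TLS 1.2 handshake 2 RTTs, resumed TLS 1.2 or full TLS 1.3 handshake 1 RTT); the function name and the 100 ms RTT are illustrative assumptions, and DNS lookups, OCSP checks and TCP slow start are ignored.

```python
# Back-of-the-envelope time-to-first-byte for a *fresh* connection.
# Round-trip counts are the commonly quoted figures; real numbers vary
# (TCP Fast Open, TLS False Start, TLS 1.3 0-RTT, OCSP lookups, ...).
TLS_HANDSHAKE_RTTS = {
    "none": 0,            # plain HTTP: no TLS handshake
    "tls1.2-full": 2,     # full TLS 1.2 handshake
    "tls1.2-resumed": 1,  # abbreviated handshake using session resumption
    "tls1.3-full": 1,     # full TLS 1.3 handshake
}


def time_to_first_byte_ms(rtt_ms: float, tls: str = "none") -> float:
    """Estimated delay before the first response byte arrives."""
    tcp_rtts = 1   # TCP three-way handshake
    http_rtts = 1  # HTTP request out, first byte of the response back
    return (tcp_rtts + TLS_HANDSHAKE_RTTS[tls] + http_rtts) * rtt_ms


if __name__ == "__main__":
    for mode in TLS_HANDSHAKE_RTTS:
        print(f"{mode:15} {time_to_first_byte_ms(100, mode):6.0f} ms")
```

With a 100 ms round trip, plain HTTP gets its first byte after about 200 ms and a fresh full TLS 1.2 connection after about 400 ms. On a kept-alive connection that difference is paid only once, which is why it matters more for a site serving a few static files than for Gmail.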

Proxy servers cannot cache pages served with HTTPS (since they do not even see those pages). There again, there is nothing static to cache with Gmail, but this is a very specific context. ISPs are extremely fond of caching since network bandwidth is their lifeforce.

HTTPS is HTTP within SSL/TLS. During the TLS handshake, the server shows its certificate, which must designate the intended server name -- and this occurs before the HTTP request itself is sent to the server. This prevents virtual hosting, unless a TLS extension known as Server Name Indication is used; this requires support from the client. In particular, Internet Explorer does not support Server Name Indication on Windows XP (IE 7.0 and later support it, but only on Vista and Win7). Given the current market share of desktop systems using WinXP, one cannot assume that "everybody" supports Server Name Indication. Instead, HTTPS servers must use one IP per server name; the current status of IPv6 deployment and IPv4 address shortage make this a problem.
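The virtual hosting problem boils down to which certificate the server can pick before it has seen the HTTP `Host` header. The sketch below is a toy model, not real server code: the hostnames and certificate labels are made up, and a real server would do this inside its TLS library (Python, for instance, exposes a hook as `ssl.SSLContext.sni_callback`).

```python
from typing import Optional

# Hypothetical certificate store for one IP address hosting several sites.
CERTS_BY_HOSTNAME = {
    "www.example.com": "cert-for-www.example.com",
    "shop.example.net": "cert-for-shop.example.net",
}
DEFAULT_CERT = "cert-for-www.example.com"


def select_certificate(sni_hostname: Optional[str]) -> str:
    """Pick the certificate to present during the TLS handshake.

    The HTTP Host header is not available yet (it is sent encrypted, after
    the handshake), so the only hint is the SNI value in the ClientHello.
    A client without SNI sends nothing, and the server can only guess.
    """
    if sni_hostname is None:
        return DEFAULT_CERT
    return CERTS_BY_HOSTNAME.get(sni_hostname.lower(), DEFAULT_CERT)
```

A client that sends no SNI (IE on Windows XP, in the example above) always gets the default certificate, which fails name validation for every other site on that IP; hence one IP address per HTTPS site.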

HTTPS is "more secure" than HTTP in the following sense: the data is authenticated as coming from a named server, and the transfer is confidential with regard to whoever may eavesdrop on the line. This is a security model which does not make sense in many situations: for instance, when you look at a video from Youtube, you do not really care about whether the video really comes from youtube.com or from some hacker who (courteously) sends you the video you wish to see; and that video is public data anyway, so confidentiality is of low relevance here. Also, authentication is only done relative to the server's certificate, which comes from a Certification Authority that the client browser knows of. Certificates are not free, since the point of certificates is that they involve physical identification of the certificate owner by the CA (I am not saying that commercial CAs price their certificates fairly; but even the fairest of CA, operated by the Buddha himself, would still have to charge a fee for a certificate). Commercial CAs would just love HTTPS to be "the default". Moreover, it is not clear whether the PKI model embodied by X.509 certificates is really what is needed "by default" for the Internet at large (in particular when it comes to relationships between certificates and the DNS -- some argue that a server certificate should be issued by the registrar when the domain is created).
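"Authenticated as coming from a named server" means, among other things, that the browser compares the name it asked for with the names in the certificate (after checking the chain up to a known CA, which is not shown here). Below is a simplified sketch of that name comparison, assuming only exact names and a single leftmost `*` wildcard label; real implementations follow RFC 6125 and handle more cases (IP addresses, internationalized names). The function name is hypothetical.

```python
def hostname_matches(pattern: str, hostname: str) -> bool:
    """Simplified check of one certificate name against the requested host.

    Supports an exact match, or a single leftmost wildcard label such as
    "*.example.com", which matches exactly one extra label.
    """
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if not pattern.startswith("*."):
        return pattern == hostname
    suffix = pattern[1:]  # e.g. ".example.com"
    if not hostname.endswith(suffix):
        return False
    label = hostname[: -len(suffix)]  # the part the wildcard must cover
    return bool(label) and "." not in label
```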

In many enterprise networks, HTTPS means that the data cannot be seen by eavesdroppers, and that category includes all kinds of content filters and antivirus software. Making HTTPS the default would make many system administrators very unhappy.

All of these are reasons why HTTPS is not necessarily a good idea as default protocol for the Web. However, they are not the reason why HTTPS is not, currently, the default protocol for the Web; HTTPS is not the default simply because HTTP was there first.

-

+1 for the point that static content takes a hit. Consider a site with multiple `<img>` tags. Since each image will have a separate connection, the computational overhead of, say, a 2048-bit or 4096-bit encrypted connection can become fairly significant on mobile platforms, where increased CPU usage quickly drains the battery; users might avoid your site because, for one reason or another, they think it drains their battery. This is of course one merit of hosting non-confidential static content on a separate server (without SSL). – jamesbtate Jun 06 '11 at 01:17

-

+1 @puddingfox: Interesting point regarding the CPU overhead to mobile devices. – blunders Jun 06 '11 at 02:02

-

@puddingfox: I used to work as a PM for mobile development, and as I see it, if security is required then the current favorite is to use a platform-specific native app, which uses an encrypted, compressed, data-only REST/SOAP API to talk to the web service. The SSL setup uses e.g. 2048-bit keys (remember, after setup you switch to e.g. 128-bit AES), but **CPU load for encryption is not a real problem**. The real problems with in-browser apps/SSL are network latency and buggy JavaScript support in mobile browsers. That's why native apps are preferred. – Jun 06 '11 at 07:19

-

"slight" overhead? I think not. Latency is the killer for web based applications. This is made worse by the history of problems on MSIE's ssl implementation (now mostly resolved). There are also additional costs related to SSL (buying a cert is just the start). – symcbean Jun 06 '11 at 09:28

-

@puddingfox: a browser will open only a few SSL connections to a given site (e.g. 3 or 4), using HTTP keep-alive to send several successive HTTP requests within each. Moreover, only the very first one needs an asymmetric key exchange (the one where a 2048-bit-or-so key is involved); the other connections will use the SSL/TLS "resume session" feature (faster handshake, fewer messages, symmetric crypto only). Finally, most SSL/TLS servers use an RSA key, and the client side of RSA is cheap (the server incurs most of the cost in RSA). – Thomas Pornin Jun 06 '11 at 10:58

-

`This is a security model which does not make sense in many situations... you do not really care...`- that's highly subjective. I do care. – nicodemus13 Jan 08 '14 at 20:49

-

Good answer. However, there is a reason why someone sufficiently paranoid might want to view youtube videos over https: viewing them over https would mean that an eavesdropper would not easily be able to compile a record of which videos you watch. – Warren Dew Apr 13 '14 at 06:20

-

Regarding the assertion that `you do not really care about whether the video really comes from youtube.com`, this is wrong. If I'm watching a video of Bruce Schneier talking about cryptography and I might make decisions based on his opinion, then I want to know that the message has not been altered in transit. – dotancohen Nov 04 '14 at 13:59

-

@dotancohen: that's integrity, which is a separate issue and can be solved without using encryption. A digital signature of the video stream is sufficient to establish that the video is unaltered from its source. Unfortunately, digitally signing cacheable, unencrypted content isn't widely deployed, as there is no widely deployed infrastructure for it, AFAICT. – Lie Ryan Feb 10 '15 at 04:00

-

@LieRyan: Thanks, I see what you mean. There is an additional obstacle: video streams are cacheable, unencrypted _streaming_ contents, so a SHA1 hash of the contents is not possible to provide. Perhaps IPSec could be the answer. – dotancohen Feb 10 '15 at 06:59

-

@dotancohen: details vary, but what most video streaming technologies essentially do is chop the video into small chunks. Those chunks can be individually signed. The cache already needs to understand how the stream is chunked to be able to cache streams anyway, so half of the work is already done. There are also some streaming systems that use HTTP Range requests for chunking, which are already widely understood by standard HTTP caches and proxies. – Lie Ryan Feb 10 '15 at 07:59

-

There are also many other highly cacheable contents that can benefit from a digital signature adding integrity and authentication without necessarily needing confidentiality, and which don't have the problem of needing to be a seekable stream like videos. It would be really good if there were a standardized mechanism in HTTP to add digital signatures which browsers can check to ensure integrity, and which allows signed content to be cached by intermediate caches. This would be an option in between HTTPS and HTTP. – Lie Ryan Feb 10 '15 at 08:02
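One way to get that integrity-without-confidentiality option is to authenticate a small manifest and let ordinary caches serve the bulky chunks. The sketch below swaps the per-chunk signatures described above for per-chunk SHA-256 digests listed in a manifest, and assumes the manifest itself reaches the client authentically (signed, or fetched over HTTPS); that part is not shown. The function names are hypothetical; a similar idea underlies the W3C Subresource Integrity `integrity` attribute.

```python
import hashlib


def build_manifest(chunks: list[bytes]) -> list[str]:
    """Publisher side: SHA-256 digest of every chunk of the stream, in order."""
    return [hashlib.sha256(chunk).hexdigest() for chunk in chunks]


def verify_chunk(index: int, chunk: bytes, manifest: list[str]) -> bool:
    """Client side: check one chunk, fetched from any cache, against the
    (assumed authentic) manifest. No encryption is involved."""
    return hashlib.sha256(chunk).hexdigest() == manifest[index]
```

Because each chunk is checked independently, a player can seek to any chunk and verify it without downloading the whole stream.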


-

"Given the current market share of desktop systems using WinXP" Windows XP has since become unsupported, and we can assume the client is under a zero day attack that keeps the client from being able to maintain any sort of confidentiality. So how could this be rewritten? – Damian Yerrick Feb 19 '15 at 00:34

-

"even the fairest of CA, operated by the Buddha himself, would still have to charge a fee for a certificate" StartSSL doesn't charge a fee for an individual TLS certificate. Nor will Let's Encrypt. – Damian Yerrick Feb 19 '15 at 00:37

-

@tepples, what matters to website operators is not so much whether Windows XP is supported by MS or not. Instead, they care about how many users won't be able to use their website if they turn on SNI (e.g., the market share of Windows XP). This has evolved over time. Many sites are willing to shut out Win XP users who are using IE, but not all -- and there are some market segments where a non-trivial fraction of users are using IE on Win XP. So, this is not a black-and-white issue -- site operators have to make a judgement call -- and Thomas's remarks remain helpful and largely valid. – D.W. Feb 19 '15 at 00:56

-

_Inertia_ is a much stronger force than anything else in the computing industry. I battle against it daily. – Thomas Pornin Jan 26 '16 at 22:01

-

[What about accuracy?](http://goo.gl/Inw9GL) You go to Youtube for vids but it gives you contents from Facebook instead. You go to Facebook and it shows you Google instead. You go to Google and it shows you something that is not-safe-for-life. Or stuff that could put you in jail. Like... a virus download or zero-day exploit which uses your computer to share pirated stuff, or broadcast anti-state speeches (a grave or capital offense in more than several countries), or export crypto, or release state secrets, or *anything* for that matter. **Everyone needs HTTPS**, they just don't know that yet. – Pacerier Jan 26 '16 at 22:23

-

@Thomas, The conclusion *"HTTPS is not the default simply because HTTP was there first"* isn't convincing at all. So many things have changed *since then*, and defaulting to HTTPS has no compatibility problem. I contend **the reason is speed and speed alone**. Either that, or [state-monitoring](https://goo.gl/h5eDpz). If we presume (as your conclusion claims) that speed and latency weren't **the reason**, then surely Chrome could fetch the HTTPS page first and fall back on HTTP when the connection times out? (SNI is a non-issue too, as Chrome has it.) – Pacerier Jan 26 '16 at 22:23

-

@Pacerier falling back is where it would fail completely. Think about MitM attacks. MitM may just prevent access via port 443, and when your smart chrome falls back to plaintext, they get your data. – Display Name Apr 19 '17 at 05:25
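The downgrade problem fits in a few lines. In this toy model (the function and its parameters are hypothetical), `https_reachable` is exactly the input an active attacker controls, for example by dropping traffic to port 443, so any automatic fallback lets the attacker choose plain HTTP.

```python
def choose_scheme(https_reachable: bool, fallback_allowed: bool) -> str:
    """Scheme a client ends up using for an address typed without a scheme."""
    if https_reachable:
        return "https"
    if fallback_allowed:
        # Silent downgrade: the attacker can now read and modify everything.
        return "http"
    raise ConnectionError("HTTPS unavailable and fallback to HTTP disabled")
```

For hosts under an HSTS policy, browsers do not allow this fallback at all; the connection simply fails.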

-

TLS solves 3 different problems: 1) authentication, i.e. the server really is youtube.com, which is done during the handshake; 2) encryption; 3) integrity checking: each message gets a MAC (message authentication code, roughly a symmetric signature). A TLS cipher suite has 3 parts, one for each. You can have a suite that does only one, or only two, of those things. Saying that a YouTube video does not require encryption means that everyone will be able to map your videos to your IP. You may not want that. Further, YouTube sends you not only videos but also plenty of other sensitive, account-specific information. – Effie Sep 08 '21 at 12:45
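To make the "parts" of a cipher suite concrete: TLS 1.2-style IANA names actually spell out four components (key exchange, authentication, bulk cipher, and hash/MAC), while TLS 1.3 names such as `TLS_AES_128_GCM_SHA256` only list cipher and hash. This is a minimal string-splitting sketch, assuming TLS 1.2-style names; the function name is hypothetical and it does not check that the suite actually exists.

```python
def parse_tls12_suite(name: str) -> dict:
    """Split a TLS 1.2-style IANA cipher suite name into its components.

    TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 ->
      key exchange ECDHE, authentication RSA, cipher AES_128_GCM, hash SHA256
    (the hash feeds the PRF for AEAD ciphers, or the record MAC otherwise).
    """
    prefix = "TLS_"
    if not name.startswith(prefix) or "_WITH_" not in name:
        raise ValueError(f"not a TLS 1.2-style suite name: {name}")
    kx_auth, cipher_hash = name[len(prefix):].split("_WITH_", 1)
    cipher, _, hash_alg = cipher_hash.rpartition("_")
    kx, _, auth = kx_auth.partition("_")
    # In names like TLS_RSA_WITH_..., RSA does both key exchange and authentication.
    return {"key_exchange": kx, "authentication": auth or kx,
            "cipher": cipher, "hash": hash_alg}
```

`TLS_RSA_WITH_NULL_SHA256` is an example of a suite with authentication and integrity but no encryption; it is defined in the TLS 1.2 specification, but browsers do not offer it.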

While there are great answers already given, I believe that one aspect is overlooked so far.

Here it is: Plain HTTP is the default protocol for the web because the majority of information on the web doesn't need security.

I don't mean to belittle the question, or the security concerns of some web sites/applications. But we can at times forget how much web traffic:

- contains only completely public information

- or has little or no value

- or where having more visitors is seen as increasing the value of the site (news media, network effect sites)

A few quick examples, I'm sure you can quickly make more in your mind:

- Almost all company websites, sometimes called "brochure-ware sites", listing public information about a company.

- Almost all of the news media, blogs, TV stations, etc that have chosen advertisement support as their primary monetization strategy.

- Services which may offer logins and additional personalization, but who also give away their content for free to anyone browsing anonymously (e.g., YouTube).

-

I agree, there's no reason to leave anything overlooked. One thing I've wondered, and relates to your point on public information. Are URLs viewed during HTTPS transactions to one or more websites from a single IP distinguishable? For example, say the following are HTTPS URLs to two websites by one IP over 5 mins: "A.com/1", "A.com/2", "A.com/3", "B.com/1", "B.com/2"; would monitoring of packets reveal nothing, reveal only the IP had visited "A.com" and "B.com", reveal a complete list of all HTTPS URLs visited, only reveal IP's of "A.com" and "B.com", or something else? – blunders Jun 06 '11 at 00:15

-

@blunders: Comments aren't the best places to ask new questions. Have a look at the following link, or open a new question. http://security.stackexchange.com/questions/2914/can-my-company-see-what-https-sites-i-went-to – Jun 06 '11 at 10:36

-

+1 @Jesper Mortensen: Thanks, agree. Reviewed the other question, and posted this question: [Are URLs viewed during HTTPS transactions to one or more websites from a single IP distinguishable?](http://security.stackexchange.com/questions/4388/are-urls-viewed-during-https-transactions-to-one-or-more-websites-from-a-single-i) – blunders Jun 06 '11 at 12:23
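As a rough illustration of part of the answer: the server's host name is sent unencrypted in the SNI extension of the TLS ClientHello, while URL paths like `/1` and `/2` are only sent after the handshake, encrypted. The sketch below uses Python's `ssl.MemoryBIO` to produce a ClientHello without any network access; the host name is a placeholder and the function name is hypothetical. It ignores other leaks (DNS queries, server IPs, traffic sizes and timing), and newer extensions such as Encrypted Client Hello aim to hide the SNI value.

```python
import ssl


def client_hello_bytes(hostname: str) -> bytes:
    """Return the first TLS record a client would send, without network I/O."""
    ctx = ssl.create_default_context()
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=hostname)
    try:
        tls.do_handshake()  # writes the ClientHello, then waits for the server
    except ssl.SSLWantReadError:
        pass  # expected: no server reply will ever arrive in this sketch
    return outgoing.read()


if __name__ == "__main__":
    hello = client_hello_bytes("a.example.com")
    # The host name appears in clear text; no URL path has been sent yet.
    print(b"a.example.com" in hello)
```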

-

A telephone number on a "brochure-ware site" might be completely public information. That doesn't mean being able to spoof a telephone number on that website isn't a security risk. – Christian Sep 09 '14 at 15:49

-

@JesperMortensen, You are confusing "doesn't need security" with "doesn't need privacy". Yes, the data is public, that doesn't mean that we can avoid HTTPS (the mitm can simply return a bogus misleading page). – Pacerier Feb 16 '15 at 09:55

-

@JesperMortensen, Regarding *"Almost all company websites, sometimes called brochure-ware sites, listing public information about a company"*: without HTTPS, a hacker can hijack that page and insert whatever he wants into it. Is that acceptable? – Pacerier Mar 28 '15 at 23:55

-

@JesperMortensen, Ok I realized this third comment is a few months late, but this is important: **HTTPS is not about security alone.** It's about accuracy too. You stated that web doesn't need security, but does the web need accuracy? (Just imagine [how much mayhem](http://security.stackexchange.com/questions/4369/why-is-https-not-the-default-protocol#comment200128_4376) there would be when we visit `a.com` but get contents from `b.com` and vice-versa. You go to `youtube.com` expecting to see some videos but it redirects you to `bing.com`.) – Pacerier Jan 26 '16 at 21:52

-

What you are describing is called authentication, which is a security goal. When you talk to a.com you want to make sure that you are indeed talking to a.com. HTTPS does have an authentication mechanism, where the server provides a certificate showing that it is indeed a.com (how well this works is another issue). Every website requires privacy. Even if the information is public, the fact that you, user X, requested this information is not. Although I don't believe you can hide that you connected to a particular server (by IP), you still don't want to broadcast the requested URL to anyone. – Effie Sep 08 '21 at 12:53

-

Actually, you are right: when you request the DNS record for a.com, you want to make sure that this DNS record really comes from a.com; otherwise you will be directed somewhere else. I think in this case HTTPS should refuse to connect, because this somewhere else cannot prove that it is a.com. But DNS security is necessary too. – Effie Sep 08 '21 at 13:14

- It puts significantly more CPU load on the server, especially for static content.

- It's harder to debug with packet captures

- It doesn't support name-based virtual servers
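On the debugging point: captures can still be decrypted if the client logs its TLS session secrets. A minimal sketch with Python's `ssl` module, assuming Python 3.8+ (which added `SSLContext.keylog_filename`); the log file path and function name are arbitrary. Wireshark can read this NSS key log format, and some browsers honor the `SSLKEYLOGFILE` environment variable for the same purpose.

```python
import os
import ssl
import tempfile


def debug_context(keylog_path: str) -> ssl.SSLContext:
    """Client context that appends TLS session secrets to `keylog_path`.

    The file uses the NSS key log format, which Wireshark can load to
    decrypt captured traffic. Never enable this in production: anyone
    holding the file can decrypt the logged sessions.
    """
    ctx = ssl.create_default_context()
    ctx.keylog_filename = keylog_path  # Python 3.8+, OpenSSL 1.1.1+
    return ctx


if __name__ == "__main__":
    path = os.path.join(tempfile.gettempdir(), "tls-keys.log")
    ctx = debug_context(path)
    # Connections made with ctx (e.g. via ctx.wrap_socket) now log their secrets.
    print("logging TLS secrets to", ctx.keylog_filename)
```

This only helps with endpoints you control; it does nothing for a middlebox that merely observes the traffic.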

-

+2 @D.W.: Guess it makes sense that it'd be hard to have a universal method for sharing application specific implementations; meaning you couldn't just make a copy of the keys and the configs to render the decryption built into the debugger. And yes, I'd seen that listed in @Thomas answer, which was posted after @Mike Scott; left it as is, since I'd wondered if @Mike Scott had another reason. Cheers! – blunders Jun 06 '11 at 18:47

-

@D.W. Windows XP isn't supported either. Its end of support in April 2014 breaks the last major barrier to SNI deployment. – Damian Yerrick Feb 19 '15 at 00:40

-

@tepples, my comment was written 4 years ago. Obviously, the situation with Windows XP has evolved over time. (And, it's not relevant whether Windows XP is supported or not. What's relevant is how many users use Windows XP -- i.e., how many users won't be able to use your website if you turn on SNI. As I wrote in my comment, see Thomas Pornin's answer.) – D.W. Feb 19 '15 at 00:51

-

@D.W. I was pointing out that your comment had since become outdated. I apologize for not being more explicit about this. But even if you had a lot of XP users, what's the point in making a secure connection to a machine that is itself likely to be compromised with a zero-day? – Damian Yerrick Feb 19 '15 at 00:57

-

@tepples, it is not that simple. What is the value of that user to the site owner? The answer will depend on the site. They don't lose that user, and might be able to monetize that user (e.g., that user might want to buy something from them, so they might lose a sale if they block such users). I'm largely sympathetic to your argument, but again, what I'm saying is this is not so cut-and-dry as you are trying to make it out to be. If you want to persuade others, it's important to understand the considerations that drive their decisions. – D.W. Feb 19 '15 at 00:59

-

@tepples Just because XP is at end of support doesn't mean it isn't widely used. That's a non-starter of an answer; XP users still need to buy stuff, so they still need SSL, which means SNI isn't a good solution. – Alice Feb 19 '15 at 02:47

-

@D.W. I have opened [another question about these considerations](http://security.stackexchange.com/questions/82066/can-serving-https-to-internet-explorer-on-windows-xp-be-made-secure). – Damian Yerrick Feb 20 '15 at 00:02

HTTP was always the default. Initially HTTPS was not needed for anything; it was pretty much an addition tacked on as it became obvious that security was needed in some circumstances.

Even now, there are so many web sites which do not need https that it is still not a convincing argument to replace http entirely.

With ever more effective mechanisms for running TLS secured connections, the CPU overhead is becoming much less of an issue.


No one has pointed out a clear problem that arises from using http as default, rather than https.

Hardly anyone bothers to write the full uri when requesting a resource that needs to be encrypted and/or signed for various purposes.

Take Gmail as an example: when users visit gmail.com, they are in fact using the default protocol, HTTP, rather than HTTPS. At this point security has failed in scenarios where the adversary is intercepting the traffic. Why? Because it's possible to strip the HTTPS links out of that HTTP response and point users to HTTP instead.

If https was in fact the default protocol, your sessions to websites would have been protected.
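The core of such an attack is small. This toy function (hypothetical, regex-based, for illustration only) shows what an intercepting proxy does to a page that arrived over plain HTTP: it rewrites `https://` links and form targets so the victim's next request also goes over HTTP, where it can be read and modified. Real tools also handle redirects and other details.

```python
import re


def strip_https_links(html: str) -> str:
    """Rewrite https:// in href/action/src attributes to http://."""
    return re.sub(
        r'(href|action|src)=(["\'])https://',  # attribute, quote, scheme
        r"\1=\2http://",                       # keep attribute and quote
        html,
        flags=re.IGNORECASE,
    )
```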

To the question of why HTTP is chosen over HTTPS, the various answers above apply. The world is just not ready for widespread use of encryption yet.

-

You're describing the "SSL stripping" attack. Browsers have since implemented HTTP Strict Transport Security (HSTS) as a countermeasure, including HSTS preload lists and HTTPS Everywhere (essentially a third-party HSTS preload list). – Damian Yerrick Feb 19 '15 at 00:43
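For reference, an HSTS policy is just a response header received over HTTPS, e.g. `Strict-Transport-Security: max-age=31536000; includeSubDomains; preload`. Below is a simplified parser sketch (the function name is hypothetical); it skips some of RFC 6797's rules, such as rejecting duplicate directives.

```python
def parse_hsts(header_value: str) -> dict:
    """Parse a Strict-Transport-Security header value (simplified)."""
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            # Seconds the browser must remember to use HTTPS only.
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy
```

A `max-age` of 0 tells the browser to forget the policy. The `preload` token is not defined by RFC 6797; it is a convention for opting into the browser preload lists.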

-

@tepples HSTS is worse than useless, as it can also be stripped while providing a false sense of security to server owners. – Alice Feb 19 '15 at 02:48


-

@Dogeatcatworld, The question is asking **why** do browsers change the user's request (typing in the url) from `web.com` to `http://web.com` instead of `https://web.com`? – Pacerier Mar 28 '15 at 23:58

-

@Pacerier The first time a user follows a link using the `https:` scheme to a site using HSTS that isn't in the preload list, an HTTP proxy rewriting all links can rewrite the link to instead use the `http:` scheme. That's why the preload list exists, but no preload list is exhaustive. So long as the user stays behind stripping proxies, visits only sites not in the browser's preload list, never manually keys in the `https:` scheme, and never notices the lack of a lock icon in the right place, the user is unaware of any attack. – Damian Yerrick May 01 '15 at 15:52
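The preload list closes the first-visit gap only for hosts it actually covers. A minimal lookup sketch, assuming a hypothetical in-memory list that maps each entry to its `includeSubDomains` flag (real browsers ship a much larger, compiled list):

```python
# Hypothetical preload entries: host -> include_subdomains flag.
PRELOAD = {"example.com": True, "login.example.org": False}


def must_use_https(host: str, preload: dict) -> bool:
    """True if the browser should use https:// before sending any request,
    based only on a built-in preload list (no previously seen headers)."""
    labels = host.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in preload:
            # An exact match always counts; a parent domain only covers
            # this host if its entry sets includeSubDomains.
            if i == 0 or preload[candidate]:
                return True
    return False
```

Any host for which this returns `False` (and which the browser has never seen an HSTS header from) is exposed to the stripping scenario described above.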

In addition to the reasons others have given already:

Additional work required to set up HTTPS on the server

The server administrator needs to configure certificates for each domain. This involves interacting with a certificate authority to prove you are the genuine owner of the domain and obtain certificate renewals. This might mean manually generating certificate signing requests and purchasing renewals, or setting up an automated process to do so (such as certbot using Let's Encrypt). In either case, it's more work than not using HTTPS.
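The automation mentioned above mostly comes down to checking expiry dates regularly and renewing in time. A minimal sketch using Python's `ssl.cert_time_to_seconds`, which parses the `notAfter` format returned by `SSLSocket.getpeercert()`; the function name is hypothetical and the 30-day window is an assumption (a window commonly used by ACME clients such as certbot for 90-day Let's Encrypt certificates).

```python
import ssl


def needs_renewal(not_after: str, now: float, renew_before_days: int = 30) -> bool:
    """Decide whether a certificate should be renewed.

    `not_after` looks like "Jan 15 00:00:00 2030 GMT"; `now` is a Unix
    timestamp (e.g. time.time()).
    """
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch
    return expires - now <= renew_before_days * 86400
```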

Additional IP addresses required

This is not really an issue since SNI (Server Name Indication) support became widespread in browsers and SSL client libraries.

Traditionally, however, it was necessary to use a different IP address for each distinct site using SSL on a particular server and port. This interfered with the ability to do name-based hosting (virtual hosting), a widely used practice allowing many different domains to be hosted from the same IP address. With HTTPS, regular name-based hosting doesn't work because the server would need to know which hostname's certificate to present in the SSL/TLS handshake before the HTTP request, containing the hostname, can be decrypted.

Server Name Indication (SNI), which effectively implements name-based hosting at the SSL/TLS layer, removes this limitation.

Slow pace of change

HTTPS was a modification to an existing protocol, HTTP, which was already very much entrenched before many people were starting to think about security. Once a technology has become established and as ubiquitous as HTTP was, it can take a very long time for the world to move to its successor, even if the reasons for changing are compelling.

-

Hi fjw - this is a very old question, with good answers, and an accepted answer. Your answer doesn't bring anything new - I'd encourage you to contribute by answering new questions. – Rory Alsop Sep 09 '14 at 13:29

-

Do you really qualify this as a low-quality answer? I'm really sorry, I came upon this as I was researching something and felt that the existing answers failed to address what I thought were some important points. I'd strongly disagree that this is a "low quality answer". – thomasrutter Sep 09 '14 at 14:32

-

It was flagged to me by community members, and i agree with them. My comment above explains my point. – Rory Alsop Sep 09 '14 at 14:46

Thomas has already written an excellent answer, but I thought I'd offer a couple more reasons why HTTPS is not more widely used...

Not needed. As Jesper's answer insightfully points out "the majority of information on the web doesn't need security". However, with the growing amount of tracking taking place by search engines, ad companies, country-level internet filters and other "Big Brother" programs (eg. NSA); it is raising the need for greater privacy measures.

Speed. It often feels slow because of the extra round trips and extra requests for certificate revocation lists (OCSP etc.). Thankfully SPDY (created by Google, and now supported in all major browsers), and some interesting work from CloudFlare are helping shift this.

Price of certificates. Most certificate authorities charge exorbitant amounts of money (hundreds of dollars) for a certificate. Thankfully there are free options, but these don't get as much publicity (not sure why?).

Price of IP addresses. Until IPv6 becomes widespread, websites will face the rising scarcity (and thus cost) of IPv4 addresses. SNI is making it possible to use multiple certificates on a single IP address, but with no SNI support in Windows XP or IE 6, most sites still need a dedicated IP address to provide SSL.

Increase in server CPU usage. This is a common belief, but according to Google "SSL/TLS is not computationally expensive any more".

-

A week after you posted this answer, Windows XP itself became unsupported. What does this change? – Damian Yerrick Feb 19 '15 at 00:44

-

@tepples nothing, as those people were not automagically upgraded to Windows 7, to my knowledge. – Alice Feb 19 '15 at 02:49

-

[StartSSL has now gotten some publicity](http://arstechnica.com/security/2016/09/firefox-ready-to-block-certificate-authority-that-threatened-web-security/) but not the way you presumably wanted :-) – dave_thompson_085 Jan 18 '17 at 17:20

-

I have to say that I vehemently disagree with the statement that TLS is not computationally expensive. It assumes that sites are all served from powerful hardware. This is simply not true when the web server happens to be running on an embedded device where power use is a prime consideration. – Jon Trauntvein Mar 31 '17 at 22:41

The real answer is that SSL certificates in their current form are comically hard to use. They are so unusable that it threatens the security of certificates, as people take shortcuts to just get stuff done. I say this as somebody who routinely deals with 2-way SSL (PKI certs), the TLS stack incompatibilities that are created by the complexity of the spec, and the crazy number of combinations of configurations (cipher limits, options, language specific library bugs, etc) that are called "TLS".

See the rise of LetsEncrypt as evidence that this is true.

Caddy is a reverse proxy project that uses LetsEncrypt. It can renew certificates while the server runs, and people use really short expirations because renewals are automated.


When HTTP was invented by academics around 1990 to share public information, security was not a consideration. HTTPS was a 1995 afterthought intended to secure e-commerce. Adoption by other websites was slow, with HTTPS perceived not necessary where there was 'nothing to hide'.

However as of 2021:

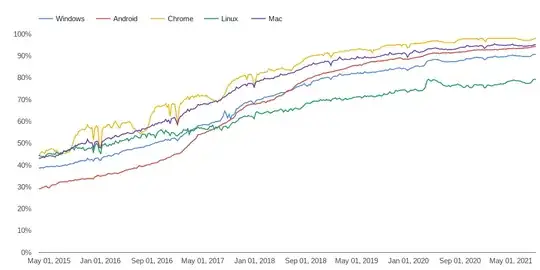

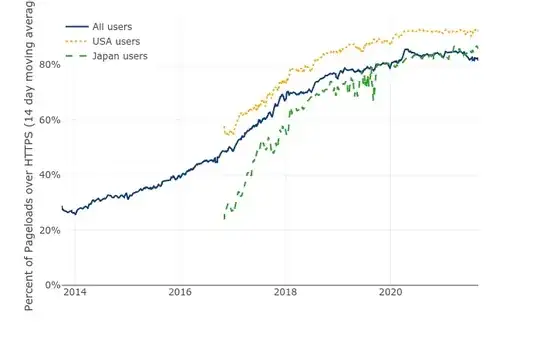

- An estimated 90% of web traffic is HTTPS (graphs below).

- Many popular websites force HTTPS via a preload list shipped with browsers.

- Browsers warn HTTP connections are insecure (example domain).

- When connecting to an unknown domain, some browsers such as Chrome attempt HTTPS before fallback to HTTP (other browsers will presumably follow).

How did this change happen? The global surveillance revelations of 2013 destroyed the 'nothing to hide' argument. The IETF published Pervasive Monitoring Is an Attack. This empowered browsers to announce plans to deprecate HTTP. Websites were given deadlines to prepare, or suffer security warnings.

Simultaneously, nonprofit Let's Encrypt pioneered free certificates from 2015, simplifying setup and reducing expense. Today Let's Encrypt issues "more currently valid certificates than all other browser-trusted CAs combined".

HTTPS performance costs had long been solved by 2013, though they persisted as a myth.

| Browser | Announced plans to deprecate HTTP | HTTP labelled insecure |

|---|---|---|

| Mozilla Firefox | April 2015 | October 2019 |

| Google Chrome | September 2016 | July 2018 |

Graphs showing HTTPS adoption:

-

I'm not sure that this answers the question. This is simply stating that it is now the de facto default. But why, when this question was asked, was it not despite the obvious benefits? – schroeder Sep 03 '21 at 08:41

The simplest explanation and the most reasonable I've found among my colleagues is that it's always been done with HTTP, why change it now.

If it's not broke, don't fix it.

-

iOS 9 breaks it. No http for non-browser apps by default. And no outdated https implementations allowed by default. – gnasher729 Apr 20 '16 at 14:34