I realize this is an old question, but I just ran across it and thought I'd post my perspective for the sake of the next guy.

The best answer is caelyx's response: architect the network so only the proxy can resolve external DNS hostnames. As he points out, retrofitting this into a production environment is difficult.

A compromise is to block all outbound udp/53 except traffic that originates from your internal DNS server and/or is destined for your external DNS server. This stops applications that simply throw data over udp/53 at an arbitrary external server. It does not reduce the risk of DNS tunneling, because your internal and external DNS servers will happily forward any RFC-compliant traffic.
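
To make that rule concrete, here is a minimal Python sketch of the egress decision. The resolver addresses are hypothetical (taken from the documentation ranges), and the function only models the policy; in practice you'd express the same logic in your firewall's own rule syntax. The `strict` flag covers the "and" reading of "and/or": by default either condition is enough.

```python
import ipaddress

# Hypothetical addresses from the documentation ranges; substitute your own.
INTERNAL_RESOLVER = ipaddress.ip_address("192.0.2.10")
EXTERNAL_RESOLVER = ipaddress.ip_address("203.0.113.53")


def permit_outbound(src: str, dst: str, proto: str, dport: int,
                    strict: bool = False) -> bool:
    """Decide whether an outbound packet may leave the perimeter.

    Only udp/53 is restricted; every other flow is handed to whatever
    the rest of the rule set would do (modeled here as "allow").
    """
    if proto != "udp" or dport != 53:
        return True
    from_internal = ipaddress.ip_address(src) == INTERNAL_RESOLVER
    to_external = ipaddress.ip_address(dst) == EXTERNAL_RESOLVER
    # "and/or": by default either condition suffices; strict mode
    # requires the internal resolver to talk only to the external one.
    if strict:
        return from_internal and to_external
    return from_internal or to_external
```

A workstation sending udp/53 straight to some arbitrary server on the internet is dropped, while the internal resolver's own lookups still get out.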

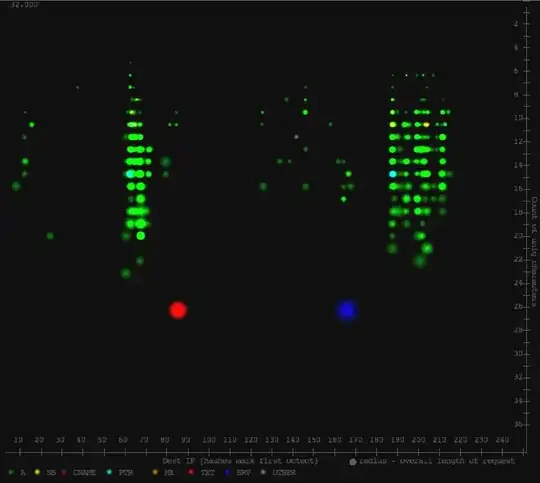

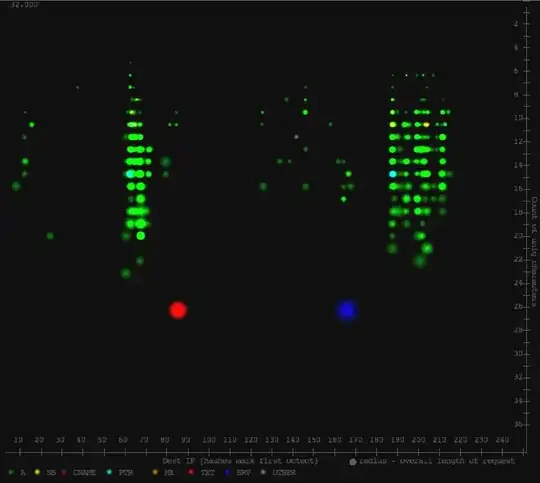

I researched this in 2009 and posted my findings at armatum.com. The first post characterized "routine" DNS traffic and compared it with typical DNS tunnel implementations. The second phase was to build an algorithm to detect the unusual behavior; it started as a clustering algorithm and ended up as a simple visualization of key characteristics.

(Click through to the post and watch the video to see the app working in real time.) The key characteristics ended up being the length of the queried hostname, the request type, and the count of unique characters in the requested hostname. Most RFC-compliant DNS tunnel implementations push these values as high as possible to maximize upstream bandwidth, so they end up significantly higher than in typical DNS traffic.
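
For anyone who wants to experiment, the three characteristics can be pulled out of a query in a few lines of Python. This is a minimal sketch, not the code from the armatum.com posts; `extract_features` and `QueryFeatures` are hypothetical names, and collecting the queries (from resolver logs or a packet capture) is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryFeatures:
    hostname_length: int  # characters in the queried name
    qtype: str            # request type, e.g. "A", "TXT", "NULL"
    unique_chars: int     # distinct characters in the name (dots included)


def extract_features(hostname: str, qtype: str) -> QueryFeatures:
    # DNS names are case-insensitive, and a trailing dot only marks the
    # name as fully qualified, so normalize both before measuring.
    name = hostname.rstrip(".").lower()
    return QueryFeatures(
        hostname_length=len(name),
        qtype=qtype.upper(),
        unique_chars=len(set(name)),
    )


if __name__ == "__main__":
    # A routine lookup versus a made-up, tunnel-style name whose first
    # label carries encoded payload data.
    typical = extract_features("www.example.com.", "A")
    tunnel = extract_features(
        "dGhpcyBpcyBhIHRlc3Qgb2YgZG5zIHR1bm5lbGluZw.t.example.com", "TXT"
    )
    print(typical)
    print(tunnel)
```

Plotting these values per query over time is what makes the tunnel-style names jump out; the example names here are invented for illustration.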

As I noted in the update to the second post, if I were to redo that work today I'd incorporate the research published since then and also use the count of subdomains per domain as a key characteristic. That's a clever technique.
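
Counting subdomains per domain is just as easy. The sketch below assumes a naive definition of "domain" (the last two labels), which miscounts names under multi-label public suffixes such as co.uk; a real tool should consult the Public Suffix List. Because a tunnel encodes its data in the labels, it tends to produce a steady stream of never-repeated names under a single domain, so its count grows much faster than a normal site's.

```python
from collections import defaultdict


def base_domain(hostname: str) -> str:
    # Naive approximation: treat the last two labels as the registered
    # domain. Wrong for suffixes like "co.uk"; see the Public Suffix List.
    labels = hostname.rstrip(".").lower().split(".")
    return ".".join(labels[-2:])


def subdomains_per_domain(hostnames):
    """Map each base domain to the number of distinct names queried under it."""
    seen = defaultdict(set)
    for name in hostnames:
        name = name.rstrip(".").lower()
        seen[base_domain(name)].add(name)
    return {domain: len(names) for domain, names in seen.items()}
```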

I built this as the tool I wanted at my enterprise network perimeter, based on my years serving in that role. As far as I know, no enterprise-class commercial software or hardware provides this depth of insight. The most common offerings are "application-level firewalls" that enforce RFC compliance, something you can usually achieve with the simpler split DNS architecture described above.

I have also seen TXT response records banned outright at the perimeter of a large (450k-host) enterprise with no significant loss of utility. Your mail gateways need TXT records for SPF, but the other common uses rarely have a place in the enterprise. I don't advocate this approach, because it "breaks the internet," but in dire circumstances I can't argue with its practicality.
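
If you do go that route, the rule amounts to something like the following Python sketch. The mail gateway addresses are hypothetical, and it assumes the enforcement point (say, your internal resolver) can see which internal client a response is headed for; a perimeter device that only ever sees the resolver's address couldn't make this distinction.

```python
import ipaddress

# Hypothetical mail gateway addresses; substitute your own.
MAIL_GATEWAYS = {
    ipaddress.ip_address("192.0.2.25"),
    ipaddress.ip_address("192.0.2.26"),
}


def permit_response(client: str, answer_types) -> bool:
    """Return False for responses carrying TXT records, except to mail gateways.

    `answer_types` is the list of record types in the response's answer
    section, e.g. ["A"] or ["CNAME", "TXT"].
    """
    if "TXT" not in {t.upper() for t in answer_types}:
        return True
    # Mail gateways keep TXT so SPF checks continue to work.
    return ipaddress.ip_address(client) in MAIL_GATEWAYS
```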

And finally, yes: I've seen this technique used live, though it is unusual (I'm referring to enterprises here, not pay-per-use public access points).