How do I assess this situation, & what exactly am I addressing?

I construct & architect security operations for a living. I have been working on Application Security Reports for a decade now & am quite sure about what should be included & where it should go. Presentation matters.

I've given this a fair amount of thought. Looking at all the answers, I feel the focus should be on the parts they miss, together with the most definitive answer & the knowledge behind it.

To start off: please do not confuse an Assessment with an Audit. An Audit has Audit Trails; an Assessment has the nitty-gritty Technical Details. The Original Post says an Audit was done on the applications, which it couldn't have been. Technically, those were Assessments.

I have picked up several methodologies, including the one followed at CERN, ref: http://pwntoken.github.io/enterprise-web-application-security-program/. To my astonishment, the technical details in a Security Assessment are most often more helpful to the Developers & IT Operations than to the Business Stakeholders. When you Audit an Application, or a set of Applications, on a Public Interface, you bring the results to the application stakeholder.

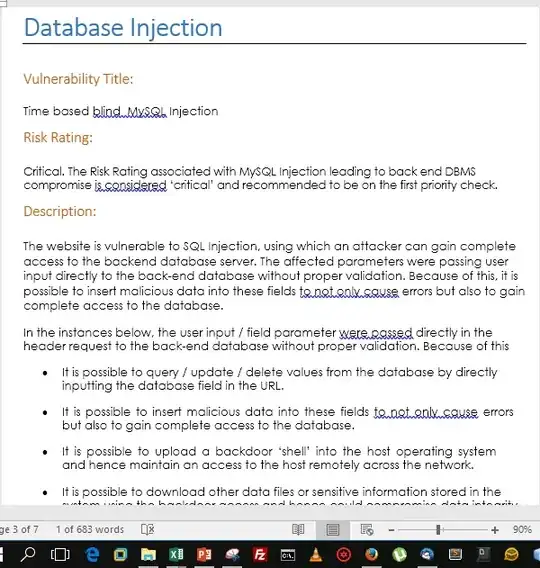

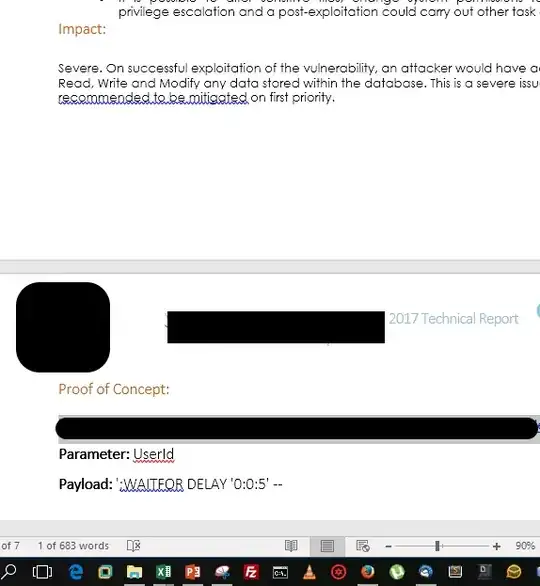

Coming to the points of my sample Application Report, here is how it looks (I apologize for the scribbles; those details were essential to the report but had to be redacted as per NDA norms):

Let's go through these components as key pointers:

- First one is Vulnerability Classification: e.g. XSS could be written as Code Injection. For Shell Injection, Interpreter Injection is a more accurate term. Similarly, for SQL Injections, rather than MS-SQLi or MySQLi, the Classification should be Database Injection.

- The Next is Vulnerability Title: For Database Injection, an accurate one-liner works best, for example:

UNION BASED MySQL Injection Leads to Command Level Compromise.

- Next is Risk Rating: In my opinion, you could go by WASC or anything similar, but I preferred our own custom rating circuit. Look to OWASP, WASC or others if you have been told to stick to a particular methodology. NIST would be one if you're dealing mostly with network security.

- Description: This should be as detailed as possible. Sometimes no classification fits because the threat is Business Logic in nature. For those, it's necessary to understand the context well & to explain why the attack scenario is put the way it is.

- Impact: Again, I would say, state this as hard pointers in bullets. That is healthy & hygienic for business stakeholders as well.

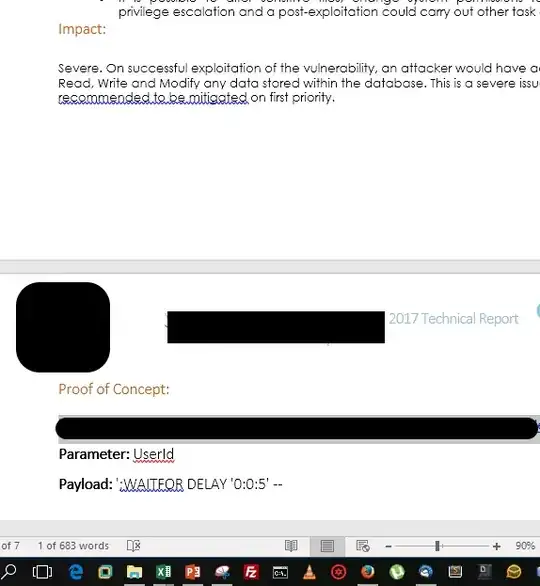

- Proof Of Concept: I think this is pretty self-explanatory, but include details, including screenshots. Also list the parameters which are affected & note down the endpoint if it's an API that is affected, along with its POST parameters, if any.

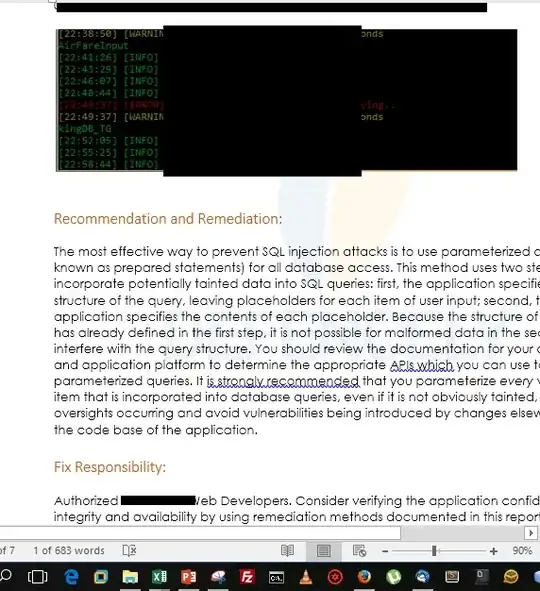

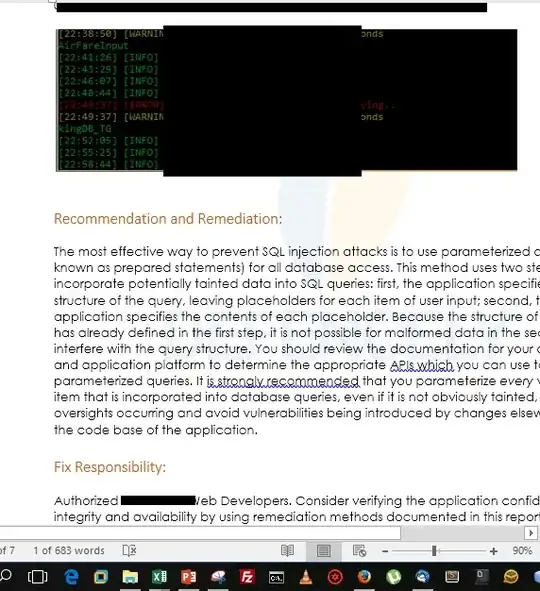

- Recommendation & Remediation: I think this is self-explanatory as well. Keep a generic template for the OWASP Top 10, SANS Top 25 & WASC threat classes. For the rest, use manually written, context-based recommendations, as these help your IT Operations.

- Fix Responsibility: Who's fixing. In your case, it's you!
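
If it helps to keep every finding in the same shape, the components above can be captured in a small record & rendered to text. This is only a minimal sketch in Python, not the template from my actual reports: the `Finding` class, the `RATINGS` scale, the endpoint, the parameter names & the team name are all hypothetical placeholders for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical in-house rating scale; swap in OWASP, WASC or NIST
# terms if you have been told to stick to a particular methodology.
RATINGS = ("Low", "Medium", "High", "Critical")


@dataclass
class Finding:
    classification: str             # e.g. "Database Injection"
    title: str                      # accurate one-liner
    risk_rating: str                # one of RATINGS
    description: str                # as detailed as possible
    impact: List[str]               # hard pointers, rendered as bullets
    proof_of_concept: str           # steps, plus references to screenshots
    affected_endpoint: str          # API endpoint, if any
    affected_parameters: List[str]  # e.g. POST parameters
    remediation: str                # template or context-based text
    fix_responsibility: str         # who is fixing it

    def __post_init__(self) -> None:
        # Reject ratings outside the agreed scale early.
        if self.risk_rating not in RATINGS:
            raise ValueError(f"unknown risk rating: {self.risk_rating!r}")

    def render(self) -> str:
        """Render the finding as plain text, one component per section."""
        lines = [
            f"Vulnerability Classification: {self.classification}",
            f"Vulnerability Title: {self.title}",
            f"Risk Rating: {self.risk_rating}",
            f"Description: {self.description}",
            "Impact:",
            *[f"  - {point}" for point in self.impact],
            f"Proof Of Concept: {self.proof_of_concept}",
            f"Affected Endpoint: {self.affected_endpoint}",
            "Affected Parameters:",
            *[f"  - {name}" for name in self.affected_parameters],
            f"Recommendation & Remediation: {self.remediation}",
            f"Fix Responsibility: {self.fix_responsibility}",
        ]
        return "\n".join(lines)


# Example values are made up for illustration only.
example = Finding(
    classification="Database Injection",
    title="UNION BASED MySQL Injection Leads to Command Level Compromise",
    risk_rating="Critical",
    description="The search parameter is concatenated into a SQL query.",
    impact=["Full read access to the database", "Command execution on the host"],
    proof_of_concept="See attached request/response screenshots.",
    affected_endpoint="POST /api/search",
    affected_parameters=["q"],
    remediation="Use parameterized queries; restrict database privileges.",
    fix_responsibility="Application development team",
)

print(example.render())
```

Keeping Impact & the affected parameters as lists means they always come out as bullets, which is the part business stakeholders tend to read first.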

Hope this will help.