The Problem

Just like a regular program, a kernel is susceptible to executing malicious code. Whether the cause is a vulnerability in the operating system itself or, more commonly, in a third-party driver (which has access to kernel space), the end result is the same: unwanted code can be executed in the kernel.

Of course, it is unrealistic to expect that malicious code will never be executed in the kernel. Vulnerabilities are everywhere and always will be. In an effort to address this, Microsoft added a new technology to its stack of Virtualization-Based Security (VBS) mechanisms. This technology, known as Kernel Data Protection (KDP), adds a new kernel boundary by leveraging virtualization so that there are sections of memory to which even the kernel itself does not have access. As we will find out later, as far as Static KDP is concerned, this does not prevent code execution, nor does it really intend to.

Microsoft has two problems it wishes to solve. The first is software-based exploits coming in via the CPU. The second is exploits coming in via hardware, such as I/O devices (through device drivers) and network-connected devices.

The Solution

The solution is to combine virtualization-based security technologies with hardware-based features and a new set of APIs, "KDP", so that some sections of kernel memory can be marked not just as read-only, but as totally unreadable/unwritable from a lower privilege level.

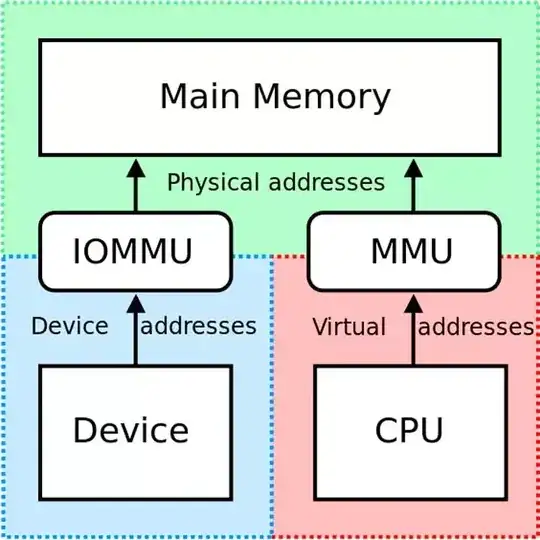

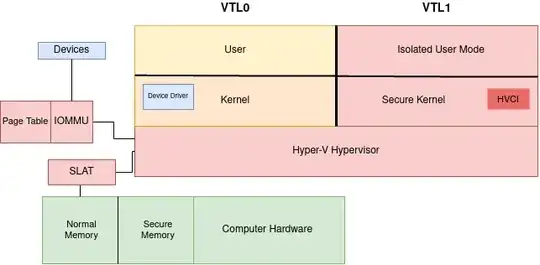

There are two primary technologies used in conjunction with the KDP API to solve this problem. The first is Second Level Address Translation (SLAT) which tries to prevent exploits (read/write primitives) coming in from the CPU. The second technology is an Input Output Memory Management Unit (IOMMU) which tries to prevent exploits (read/write primitives) coming in via vulnerable device drivers.

The Implementation

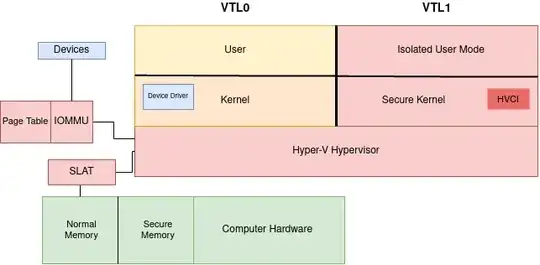

Implementing this technology starts with the Hyper-V hypervisor. Hyper-V makes use of two technologies, SLAT and an IOMMU, in order to effectively "wall off" portions of memory which can then only be accessed by very high-privileged code (code in VTL1). As there is quite a lot of technology involved, it helps to view this in a diagram and then look at each individual piece to see how it fits into the requirements for a functioning KDP API.

In a classic computer model, the kernel communicates directly with the hardware. However, in modern versions of Windows this is not the case. The kernel instead communicates with the Hyper-V hypervisor, which sits between the hardware and the kernel. This software-based hypervisor is ultimately what allows all of these other virtualization-based security technologies to exist and operate.

In this model, if the kernel wants to access a portion of memory, it does not reach the hardware directly. Instead, the access goes through the hypervisor, which uses the SLAT to resolve and retrieve that memory.

You'll notice that the memory is divided into two regions, "normal memory" and "secure memory". A technology like SLAT is what allows this to function in the way that you'd expect. By marking a certain region of memory as "secure memory" you can place things in that "secure memory" bucket, which then makes them inaccessible from "normal memory" and only accessible via a call to the hypervisor from "VTL1". This is a key function of Kernel Data Protection.

We'll start by understanding how the Hyper-V hypervisor allows Windows to create the concept of Virtual Secure Mode (VSM), a key technology that makes KDP work.

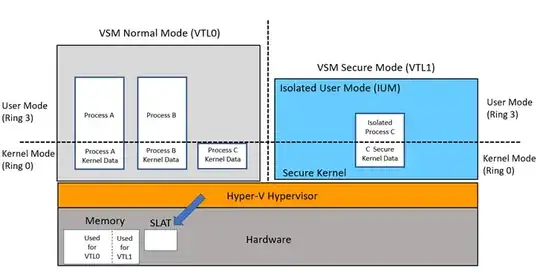

Virtual Secure Mode

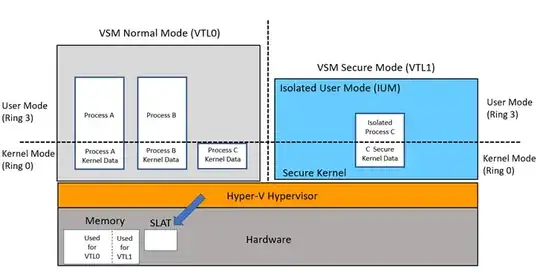

Windows 10 introduced the concept of VSM by leveraging the Hyper-V hypervisor and SLAT. VSM creates a set of modes known as Virtual Trust Levels (VTLs). This software-level architecture creates a new security boundary within Windows to prevent processes in one VTL from accessing the memory of processes in another VTL. The overall goal of this additional isolation is the mitigation (but not prevention) of data-corruption kernel exploits. An additional goal is the protection of assets such as password hashes and Kerberos keys.

As depicted in the diagram above, there are two VTLs: VTL0 and VTL1. These can also be referred to as "VSM Normal Mode" and "VSM Secure Mode", but for our purposes we will refer to them as VTL0 and VTL1.

VTL0 is the "normal computer". This is the boundary within which the user is operating on a daily basis (web browsers, word processing, etc).

VTL1 is the new isolation boundary created by the hypervisor. Just like VTL0, VTL1 can be thought of as "another computer". That is to say that, just like VTL0, it can execute code in both a user-space context and a kernel-space context.

At boot time, the hypervisor uses the SLAT in order to assign memory to each VTL. This continues dynamically as the system runs, such that the Secure Memory (VTL1) is protected from Normal Memory (VTL0) and likewise code in VTL1 is protected from code in VTL0. Because VTLs are hierarchical, any code running in VTL1 is considered more privileged than code running in VTL0. As such, VTL1 code has extremely high levels of access and is in fact the only VTL which can make the hypercalls that change these memory protections.
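As a rough illustration of the per-VTL memory view described above, the sketch below models each physical page as carrying a separate permission set for VTL0 and VTL1. The class and method names, the page numbers, and the permission strings are all hypothetical; the real bookkeeping lives in the hypervisor's SLAT tables, not in a dictionary.

```python
from enum import IntEnum


class VTL(IntEnum):
    """Virtual Trust Levels; a higher value means more privilege."""
    VTL0 = 0  # normal mode: NT kernel, drivers, user applications
    VTL1 = 1  # secure mode: Secure Kernel and isolated user mode


class SlatModel:
    """Toy stand-in for the hypervisor's per-VTL view of physical pages."""

    def __init__(self):
        # page frame number -> {VTL: permissions as a subset of "rwx"}
        self._pages = {}

    def assign(self, pfn, vtl0_perms, vtl1_perms="rwx"):
        """Record what each VTL may do with the page."""
        self._pages[pfn] = {VTL.VTL0: vtl0_perms, VTL.VTL1: vtl1_perms}

    def allowed(self, vtl, pfn, access):
        """Return True if code running in `vtl` may perform `access` on `pfn`."""
        return access in self._pages[pfn][vtl]


slat = SlatModel()
slat.assign(0x100, vtl0_perms="rwx")  # normal memory
slat.assign(0x200, vtl0_perms="")     # secure memory, invisible to VTL0
```

In this model `slat.allowed(VTL.VTL0, 0x200, "r")` is false while the same query for `VTL.VTL1` is true, which is the asymmetry the hierarchy is meant to provide.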

Second Level Address Translation

With VSM explained, we need to understand how SLAT works. As we already covered, at boot time the hypervisor uses SLAT in order to assign both VTLs their own section of memory (noted as "Normal Memory" and "Secure Memory"). This memory mapping is managed dynamically as the system runs.

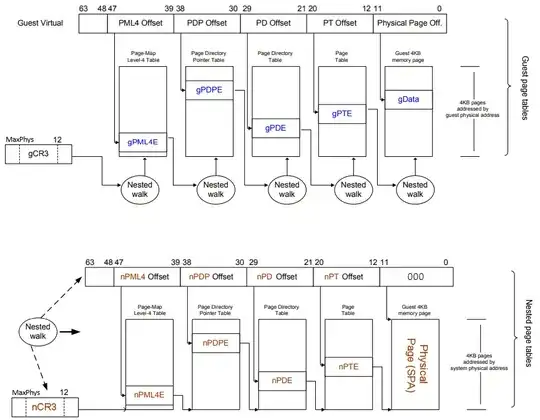

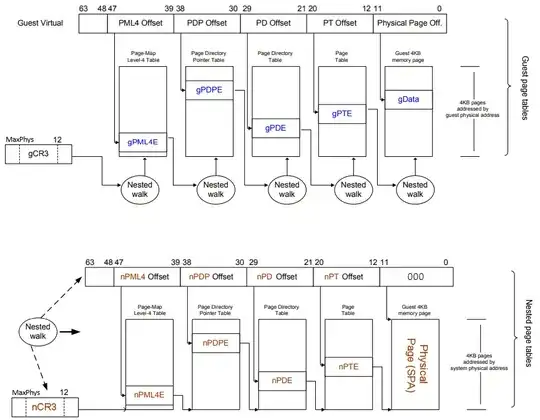

When SLAT is enabled, the processor translates an initial virtual address, called the Guest Virtual Address (GVA), into an intermediate physical address, called the Guest Physical Address (GPA). This translation is still managed by the page tables addressed by the CR3 control register, which is managed by the NT kernel. The final result of the translation gives the processor a GPA, with the access protection specified in the Guest Page Table (GPT). Only software operating in kernel mode can interact with page tables, which means that a rootkit running in the kernel could modify the protection of the intermediate physical pages.

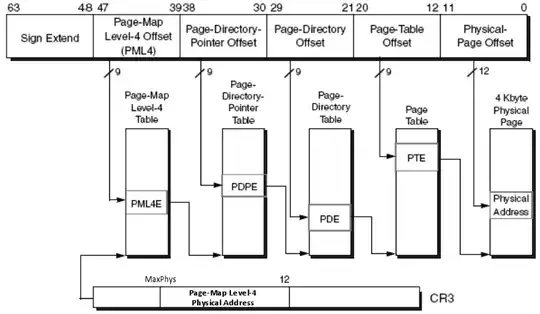

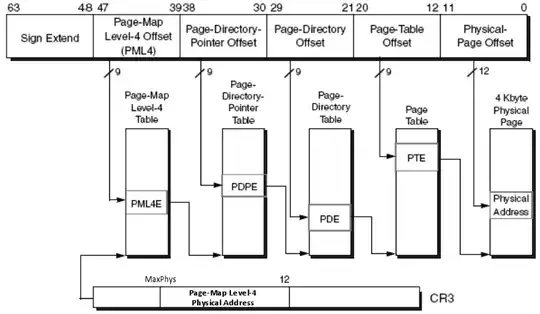

The hypervisor helps the CPU to translate the GPA using the Nested Page Table (NPT). On a non-SLAT system, when a virtual address is not present in the Translation Lookaside Buffer (TLB), the processor needs to consult all the page tables in the hierarchy in order to reconstruct the final physical address. The virtual address is split into four parts, each of which is an index into one page table of the hierarchy. The physical address of the initial Page-Map-Level-4 (PML4) table is specified by the CR3 register, which is why the processor is always able to start the translation and obtain the physical address of the next table in the hierarchy. It is important to know that in each page table entry of the hierarchy, the NT kernel specifies a page protection through a set of attributes. The final physical address is accessible only if the combination of the protections specified in each page table entry allows it.
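To make the four-part split concrete, here is a minimal sketch assuming standard x86-64 four-level paging with 4 KiB pages (four 9-bit indexes plus a 12-bit page offset). Only PML4 is named in the text above; the other table names (`pdpt`, `pd`, `pt`), the `Entry` type, and the helper functions are illustrative choices of mine. The second helper models write access only: a write is permitted when every level of the walk permits it.

```python
from dataclasses import dataclass

INDEX_BITS = 9     # each table holds 512 entries
OFFSET_BITS = 12   # 4 KiB pages
INDEX_MASK = (1 << INDEX_BITS) - 1


def split_virtual_address(va):
    """Return the four table indexes and the page offset of a 48-bit address."""
    return {
        "pml4": (va >> 39) & INDEX_MASK,
        "pdpt": (va >> 30) & INDEX_MASK,
        "pd": (va >> 21) & INDEX_MASK,
        "pt": (va >> 12) & INDEX_MASK,
        "offset": va & ((1 << OFFSET_BITS) - 1),
    }


@dataclass(frozen=True)
class Entry:
    """Only the attribute bits this example cares about."""
    present: bool
    writable: bool


def effectively_writable(entries):
    """A write succeeds only if every level of the walk permits it."""
    return all(e.present and e.writable for e in entries)


# Build an address from known indexes so the split is easy to check by eye.
va = (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 5
parts = split_virtual_address(va)
```

Here `parts` comes back with the indexes 1, 2, 3 and 4 and an offset of 5. Clearing the writable bit in any single `Entry` of a four-entry walk is enough to make `effectively_writable` return false.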

On a SLAT-enabled system, the intermediate physical address specified in the guest's CR3 register needs to be translated to a real System Physical Address (SPA). In this case, the hypervisor sets the nCR3 field of the active Virtual Machine Control Block (VMCB), which represents the currently executing VM, to the physical address of the NPT. The NPTs are built in a similar way to standard page tables, so the processor needs to scan the entire hierarchy to find the correct physical address. In the figure, the prefixes "n" and "g" indicate the NPT and GPT respectively: the NPT is managed by the hypervisor and the GPT is managed by the NT kernel.

As shown above, the final translation of a GVA to an SPA goes through two translation stages: GVA to GPA, configured by the NT kernel, and GPA to SPA, configured by the hypervisor.

An NPT entry specifies multiple access protection attributes. This allows the hypervisor to further protect the SPA (the NPT can only be accessed by the hypervisor itself). When the processor attempts to read, write or execute an address to which the NPTs disallow access, an NPT violation is raised and a VM exit is generated.
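The two-stage translation and the NPT check can be sketched as follows. This is a deliberately flattened model: each stage is a single dictionary lookup rather than a multi-level walk, and every name here (`Mapping`, `translate`, the two exception types, the addresses) is hypothetical. It is meant only to show why changing the guest page tables is not enough once the nested tables disagree.

```python
from dataclasses import dataclass

PAGE_SIZE = 0x1000


class GuestPageFault(Exception):
    """Raised when the guest page tables (NT kernel) deny the access."""


class NptViolation(Exception):
    """Stands in for the VM exit raised when the NPT denies the access."""


@dataclass(frozen=True)
class Mapping:
    frame: int   # base address of the target page
    perms: str   # subset of "rwx"


def translate(gva, access, guest_pt, nested_pt):
    """Translate GVA -> GPA -> SPA, checking permissions at both stages."""
    offset = gva % PAGE_SIZE
    guest = guest_pt[gva - offset]      # stage 1: managed by the NT kernel
    if access not in guest.perms:
        raise GuestPageFault(hex(gva))
    nested = nested_pt[guest.frame]     # stage 2: managed by the hypervisor
    if access not in nested.perms:
        raise NptViolation(hex(guest.frame + offset))
    return nested.frame + offset


# A rootkit in VTL0 has already made the guest mapping writable...
guest_pt = {0x4000: Mapping(frame=0x9000, perms="rw")}
# ...but the hypervisor's nested mapping is still read-only.
nested_pt = {0x9000: Mapping(frame=0x77000, perms="r")}
```

With these tables, a read of `0x4010` resolves to `0x77010`, while a write to the same address raises `NptViolation` even though the guest mapping allows it.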

Input Output Memory Management Unit

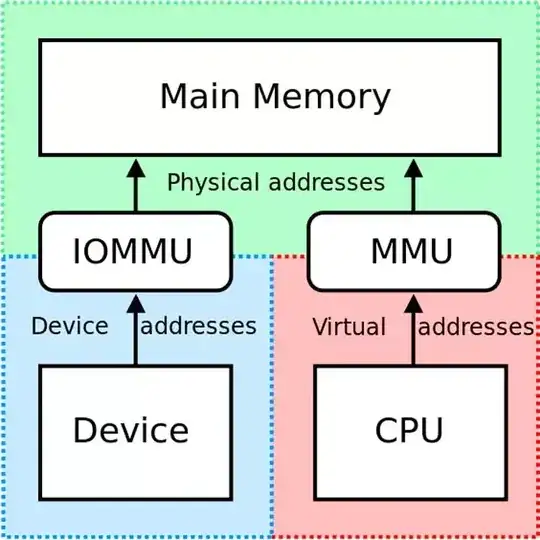

However, SLAT only solves the CPU problem; it doesn't solve the hardware one, namely device-based attacks such as Direct Memory Access (DMA) attacks. To solve this, Windows leverages an IOMMU, which is a type of Memory Management Unit (MMU). The IOMMU connects a DMA-capable I/O bus to the main memory. Its purpose is to map device-visible virtual addresses to physical addresses in memory via a page table, such that when a device wants to access memory it has to go through the IOMMU in order to do so. This page table stores memory addresses as well as the access permissions associated with those memory addresses.

Device drivers sit in VTL0, but they need to program connected I/O devices to tell them which regions of memory to access. The problem with this model is that a device driver can tell a device to access memory in VTL1. By putting the IOMMU between the device and main memory, the hypervisor can limit the memory which the device can access.

Since the hypervisor is responsible for programming the IOMMU, and the hypervisor can only be managed from VTL1, this prevents a situation where an attacker can use vulnerable driver code to perform DMA attacks and access memory to which it should not have access.
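A minimal sketch of that idea, under the assumption that the IOMMU behaves like a per-device page table with permissions. The `Iommu` class, the device name `nic0`, and the addresses are invented for illustration and do not correspond to any real programming interface.

```python
PAGE_SIZE = 0x1000


class DmaBlocked(Exception):
    """Raised when a device touches memory the IOMMU has not granted it."""


class Iommu:
    """Toy IOMMU: a per-device page table with access permissions."""

    def __init__(self):
        # (device_id, device-visible page) -> (physical page, perms)
        self._table = {}

    def map(self, device_id, device_page, phys_page, perms):
        """Programmed only by the hypervisor in this model."""
        self._table[(device_id, device_page)] = (phys_page, perms)

    def dma(self, device_id, device_addr, access):
        """Translate a device access, or refuse it."""
        offset = device_addr % PAGE_SIZE
        entry = self._table.get((device_id, device_addr - offset))
        if entry is None or access not in entry[1]:
            raise DmaBlocked(f"device {device_id}: {access} at {device_addr:#x}")
        return entry[0] + offset


iommu = Iommu()
iommu.map("nic0", device_page=0x0000, phys_page=0x5000, perms="rw")
```

A driver can still ask `nic0` to read some other address, but unless the hypervisor has mapped that page for the device, `iommu.dma` raises `DmaBlocked` instead of returning a physical address.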

Static KDP Implementation

SLAT is the main protection principle which KDP uses to function. Dynamic and static KDP implementations are similar and are both managed by the Secure Kernel (VTL1). The Secure Kernel is the only entity which is able to send the ModifyVtlProtectionMask hypercall to the hypervisor. This call modifies the SLAT access protection for a physical page that is mapped in the lower VTL0.

For Static KDP, the NT kernel (VTL0) verifies that the driver is not a session driver and is not mapped with large pages. If either of these conditions is true, or if the section is a discardable section, then Static KDP cannot be applied. If the entity which called the MmProtectDriverSection API did not request that the target image remain unloadable, the NT kernel performs the first call into the Secure Kernel, which pins the normal address range (NAR) associated with the driver. This pinning operation prevents the address space of the driver from being reused, which makes the driver impossible to unload.
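The checks in this paragraph reduce to two small decisions, sketched below. The `SectionInfo` type and both function names are hypothetical; they only restate the conditions listed above and are not the kernel's actual data structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SectionInfo:
    """Hypothetical summary of the facts the NT kernel checks."""
    is_session_driver: bool = False
    uses_large_pages: bool = False
    is_discardable: bool = False


def static_kdp_allowed(section):
    """Static KDP is refused if any disqualifying condition holds."""
    return not (
        section.is_session_driver
        or section.uses_large_pages
        or section.is_discardable
    )


def must_pin_address_range(allow_unload):
    """The NAR is pinned unless the caller asked for an unloadable image."""
    return not allow_unload
```

For example, `static_kdp_allowed(SectionInfo(uses_large_pages=True))` is false, and `must_pin_address_range(False)` is true, meaning the driver stays loaded for good.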

The NT kernel then brings all the pages that belong to the section into memory and makes them private. Those pages are then marked as read-only in the leaf Page Table Entry (PTE) structures. At this stage, the NT kernel can finally call the Secure Kernel to protect the underlying physical pages through the SLAT. The Secure Kernel applies this protection in two stages:

- Register all the physical pages that belong to the section and mark them as "owned by VTL0" by adding the proper normal table addresses in the database and updating the underlying secure PFNs which belong to VTL1. This allows the Secure Kernel to track the physical pages, which still belong to the NT kernel.

- Apply the read-only protection to the VTL0 SLAT table.

The target image's section is now protected and no entity in VTL0 will be able to write to any of the pages belonging to the section.
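The two stages can be pictured with the toy model below. It is an assumption-heavy sketch: `SecureKernelModel`, its two dictionaries, and the page frame numbers are invented, and the real Secure Kernel database and SLAT updates are considerably more involved.

```python
class SecureKernelModel:
    """Toy model of the two stages the Secure Kernel applies."""

    def __init__(self):
        self.tracked = {}     # pfn -> owner, the "database" of registered pages
        self.vtl0_slat = {}   # pfn -> permissions VTL0 has through the SLAT

    def protect_section(self, pfns):
        # Stage 1: register the pages; they still belong to the NT kernel.
        for pfn in pfns:
            self.tracked[pfn] = "VTL0"
        # Stage 2: make the VTL0 SLAT view of each page read-only.
        for pfn in pfns:
            self.vtl0_slat[pfn] = "r"

    def vtl0_can(self, pfn, access):
        # Pages never protected keep the default full access in this model.
        return access in self.vtl0_slat.get(pfn, "rwx")


sk = SecureKernelModel()
sk.protect_section([0x300, 0x301])
```

After the call, VTL0 can still read page `0x300` but can no longer write to it, while an unrelated page such as `0x999` is unaffected.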

Although Microsoft seems to market this as protecting against code execution, it actually doesn't do that at all. As its name suggests, it is "Data Protection": all this feature does is protect data belonging to one kernel from being accessed by the other (NT->Secure). The entire purpose is that if you manage to get code execution in the NT kernel, you cannot read data that has been defined to be stored in the VTL1 memory space, execute code in VTL1, or perform any form of memory corruption on data in the VTL1 memory space. This doesn't prevent kernel exploits. You can still run them in VTL0. [1]

One thing this does a good job of is protecting Hypervisor-Protected Code Integrity (HVCI) from being disabled. In the past there have been attacks where someone finds a vulnerable piece of driver software and then uses that vulnerability to turn off HVCI. However, this is an overall goal of VBS, not just of KDP specifically. KDP is just one set of APIs to help achieve this goal.

Usefulness in Mitigation

As far as mitigation goes, Static KDP isn't that useful. In fact, the whole technology stack it is part of is geared more towards isolation of data than towards preventing kernel code execution.

The point you made, that surely an attacker can just use a write primitive elsewhere, is entirely correct. This doesn't stop code execution and it doesn't totally stop read/write primitives. It only prevents those kinds of attacks on areas of memory which have Static KDP applied (like HVCI, for example). Unfortunately, it is quite hard to know which areas of memory those are by default, given that Windows is closed source.

I don't think it is fair to call Static KDP an equivalent of Linux Kernel Runtime Guard (LKRG). In fact, LKRG is more similar to Hypervisor-Protected Code Integrity. Your assertion that KDP effectively enforces write protection on a given area of memory is true, though.

Threat Model

Microsoft's main threat model for this was noted as "protecting keys and hashes", as well as protecting HVCI, from being read or overwritten from a lower privilege level.

Microsoft has noted a few times that it plans to use this technology more going forward in order to better protect against driver-based vulnerabilities. However, it has not specifically called out future plans or designs, or how it aims to do that, only that it will use this technology to do it.

1 - This technology, specifically Static KDP, seems somewhat like security theater. There is little benefit to using it. It does not stop code execution, nor does it prevent or mitigate vulnerable driver code. All it does is allow the OS to say: "Kerberos keys need more security, place them in VTL1 so if VTL0 gets pwned they don't get the keys". Although the name advertises itself as "Kernel Data Protection", the Microsoft blog advertises this feature as somewhat preventing vulnerable code execution even though it does not...

Terms used

- SLAT: Second Level Address Translation

- IOMMU: Input Output Memory Management Unit

- VBS: Virtualization-Based Security

- KDP: Kernel Data Protection

- HVCI: Hypervisor-Protected Code Integrity

- IUM: Isolated User Mode

- VTL: Virtual Trust Level

- VSM: Virtual Secure Mode

- NT Kernel: VTL0 Kernel

- Secure Kernel: VTL1 Kernel

- Normal Memory: VTL0 Memory

- Secure Memory: VTL1 Memory

- PTE: Page Table Entry

- GVA: Guest Virtual Address

- GPA: Guest Physical Address

- TLB: Translation Lookaside Buffer

- NPT: Nested Page Table

- SPA: System Physical Address

- VMCB: Virtual Machine Control Block

- DMA: Direct Memory Access

Further Reading + Sources

- https://docs.microsoft.com/en-us/windows-hardware/design/device-experiences/oem-kernel-dma-protection

- https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/tlfs/vsm

- https://docs.microsoft.com/en-us/windows/win32/procthread/isolated-user-mode--ium--processes

- https://docs.microsoft.com/en-us/windows-hardware/design/device-experiences/oem-vbs

- https://www.microsoft.com/security/blog/2020/07/08/introducing-kernel-data-protection-a-new-platform-security-technology-for-preventing-data-corruption/

- https://channel9.msdn.com/Blogs/Seth-Juarez/Windows-10-Virtual-Secure-Mode-with-David-Hepkin

- https://channel9.msdn.com/Blogs/Seth-Juarez/Isolated-User-Mode-in-Windows-10-with-Dave-Probert

- https://docs.microsoft.com/en-us/windows-hardware/drivers/bringup/device-guard-and-credential-guard