Postman and same origin policy aren't obstacles. To understand this, I need to explain why, as a developer, you virtually never trust the client/front end.

Front and back end trust

If someone controls a computer, they control what it sends the server. That's literal: every last byte of it, every last header or request, every last POST field in a form or GET parameter in a URI, every web socket and connection, every last timing (within broad timing limits that aren't an issue here).

They can make that computer send the back end literally, anything whatsoever they want, on demand. Any GET. Any POST. Any header field. Anything. They can include any header. Any origin. Any cookie information they choose and know. Literally, anything. Common exceptions for most use-cases are perhaps physical "black box" encryption cards/keys, and the client's IP address, both of which are trickier barriers if checked during a session - and even the IP can usually be spoofed in various ways, especially if they don't care about a reply.

The upshot is that from a security perspective you can't trust anything a client sends. You can raise the bar quote a lot, enough for most everyday uses. Secure transport (TLS/HTTPS) to make it extremely difficult to modify or intercept or change the traffic if someone controls an intermediate computer it's routed through. A well implemented OS and browser, that stop scripts or malware outside that specific web page from interfering locally. Certificate checking one or both ends. Secured networks that authenticate what may attach.

But every last one of those is raising a bar, not an absolute defence. Every one of those has been broken before, gets broken now at times, will be broken in future. None is guaranteed bug and loophole free either. None can defend against a user, malware, or rootkitted remote access at the client end that deliberately, or ignorantly subverts the defences on the client PC, because such a user can typically change or bypass anything the OS or browser are programmed to do. None is a true perfect defence.

So if you have any software or web based system with a back-end and front-end, its a golden rule that you don't trust the data the client provides. You recheck it when received at the back.end. If the request is to access or send a web page or file, is that okay, should that session be allowed access to that file, is the filename a valid one? If the request is some data or a form to process, are all the fields reasonable and containing valid data, is that session allowed to make those changes.

You don't trust a thing that isn't under your own secured known control.

Server, you'll trust by and large (you manage the OS and security, or have trusted partners who do). But client and wider network no trust at all. And even for the server, you have security checks on it, be it malware and behaviour detection, access controls, or network scanning software, because you could be wrong about that, too.

So you validate at the client browser/app (front-end) for convenience of the client, because most clients are honest and many mistakes can quickly be detected in the browser or app.

But you validate at the server (back-end) to actually do your real checking if the request or data is valid and should be processed or rejected.

That said, your answer is...

You asked how its done. The software to do it can be done many ways - malware, deliberate user act, misconfigured client system/software, intercepting computer/proxy.

But however it's done, this is the basic process that exploits these issues within a client, makes any client packet fundamentally untrustworthy (including origin and referrer fields), and makes it impossible to trust them. It excludes external matters such as certificate misuse, which are outside the OP scope.

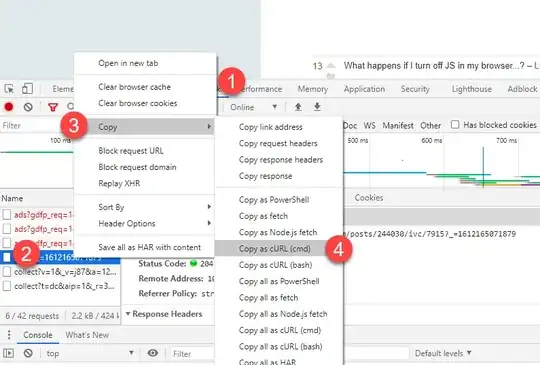

- Study what a genuine reply/packet/request/post looks like in the app.

- Modify the packet using built in browser tools, browser extensions, transparent proxies/proxy apps, or create a hand crafted request based on it, with the different headers needed.

(After all, the back-end doesn't actually know what the "real" values should be, or the "real" origin or referrer is, it only knows what the packet * says * they are, or the origin or referrer are. Which takes between 15 seconds and 2 minutes to modify to anything on earth I want it to be, or well under a millisecond if its done by software.)

- Modify or fake anything else needed, or craft custom versions, of any packets, and send those instead (wither prepared in advance or modified at the time)

- Done.