I think the advice you got not to send the ID token is good. In general, an ID token should never be forwarded to any entity other than the client that received it. Beyond breaking this cardinal rule, there's no cryptographic or other binding between the two tokens, which opens up a substitution attack vector. In other words, the micro-service receiving the two tokens has no way of knowing whether the ID token, which arrives by some non-standard means, was replaced by an attacker with their own, because nothing ties it to the access token found in the normal Authorization header of the same request.

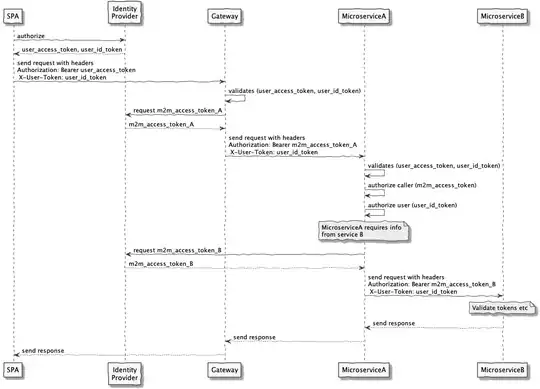

When working in a micro-services environment where you need to get user context info into the bowels of the mesh, you have three options:

- Share access tokens among the micro-services

- Embed other access tokens in the one initially issued to the client (or the phantom equivalent)

- Exchange one token for another

You'll use option one when multiple micro-services make up the same security context. If they belong to the same bounded security context, it's OK for them to share access tokens. In that case, they will have the same "audience", and the access token will be audienced to that set of micro-services. An example of a shared context might be a handful of "payments" micro-services that work together to perform payments functionality. These all come into contact with the same product data, user data, etc., so from a security modeling perspective they are effectively the same entity.
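As a rough sketch of what "audienced to that set of micro-services" means in practice, each service in the shared context could check the `aud` claim of the access token it receives. This assumes the token's signature and expiry have already been validated elsewhere; the function name, the claim values, and the audience name `payments` are illustrative assumptions.

```python
def audience_matches(claims: dict, my_audience: str) -> bool:
    """Return True if the token's `aud` claim includes this service's audience."""
    aud = claims.get("aud", [])
    # Per RFC 7519, `aud` may be a single string or a list of strings.
    if isinstance(aud, str):
        aud = [aud]
    return my_audience in aud


# Hypothetical claims of an already-validated access token for the payments context.
claims = {"sub": "user-123", "aud": "payments", "scope": "payment"}

print(audience_matches(claims, "payments"))  # True
print(audience_matches(claims, "accounts"))  # False
```

Every service in the payments context would be configured with the same audience value, which is why they can all accept the same token, while a service in another context would reject it.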

When a micro-service calls another in some other security context, it will use one of the other two approaches. Embedding is the easier of the two, but it requires a priori knowledge of the call from one context to another. In other words, when the token is issued at the authorization server, it must be known that the receiver of that token is going to call a service in a foreign domain (i.e., some other security context). With this knowledge, the authorization server can embed a token for that purpose in the one it issues to the originating micro-service. This is very typical even if it sounds outlandish at first. Think again of that payments service: it's easy to foresee that it will always call an accounts service, which may be in a different security context. For this reason, the authorization server may always issue a separate token scoped for accounts and embed it in another that is scoped to payments. It will issue this token within a token to any client that requests an access token with the "payment" scope.
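To make the token-within-a-token idea concrete, here is a minimal sketch of how the payments service might pull the embedded token out of the outer token's claims and use it for the call to accounts. The claim name `accounts_token`, the helper name, and the token value are assumptions for illustration; the real claim name depends on how your authorization server is configured.

```python
# Hypothetical claims of an already-validated outer token scoped to payments.
# "accounts_token" is an assumed claim name holding the embedded token.
outer_claims = {
    "sub": "user-123",
    "aud": "payments",
    "scope": "payment",
    "accounts_token": "embedded-token-for-accounts",
}


def downstream_headers(claims: dict, claim_name: str) -> dict:
    """Build the Authorization header for a call into the foreign context."""
    embedded = claims.get(claim_name)
    if embedded is None:
        raise ValueError(f"no embedded token found in claim {claim_name!r}")
    # Forward the embedded token, never the outer one: the outer token is
    # audienced to payments, not to accounts.
    return {"Authorization": f"Bearer {embedded}"}


headers = downstream_headers(outer_claims, "accounts_token")
print(headers)
```

The accounts service then sees an ordinary access token in the normal Authorization header and validates it like any other.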

The other choice is to exchange one token for another. This will be done when the calls from one service to another are not known at the moment of token issuance. This can be the case, for instance, when the number of hops is very large, or the route is contextual and user-dependent. When exchanging one token for another, it's important that the scope of access be controlled. You don't want to exchange a low-power token for a very powerful one, for instance. So, think about scope enlargement and try to forbid or greatly control it during an exchange. A good article on batch processing that discusses token exchange in more detail can be found on Nordic APIs.
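If your authorization server supports OAuth 2.0 Token Exchange (RFC 8693), the exchange request might be built roughly like this. The grant type and token type URNs and the parameter names come from that RFC; the function name, the scope names, and the client-side subset check are assumptions for illustration. The real protection against scope enlargement has to be enforced by the authorization server's policy, not by the caller.

```python
from urllib.parse import urlencode

# Values defined by RFC 8693.
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_exchange_request(subject_token, current_scopes, requested_scopes, audience):
    """Return a form-encoded body for a token exchange request.

    Refuses to ask for any scope the current token does not already carry.
    """
    extra = set(requested_scopes) - set(current_scopes)
    if extra:
        raise PermissionError(f"scope enlargement not allowed: {sorted(extra)}")
    return urlencode({
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": subject_token,
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,
        "scope": " ".join(sorted(requested_scopes)),
    })


# Hypothetical usage: narrow a token carrying two scopes down to one.
body = build_exchange_request(
    "incoming-access-token", ["read", "write"], ["read"], "accounts"
)
print(body)
```

The resulting body would be POSTed to the authorization server's token endpoint along with the calling service's own client authentication.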

(These three options are orthogonal to whether or not you use the phantom token or split token approaches. Either may be used in conjunction with these token propagation techniques. If you use one of them, which you probably should since you have a gateway in the mix, your front-end SPA won't be able to access the actual access token or any others that may be embedded in it.)