Is it normal to get 3000+ vulnerabilities when scanning a server with Nexpose? Or can I reduce the number of vulnerabilities to a decent count with some tweaks?

-

How many of those 3000 findings are "informational"? – schroeder Dec 30 '20 at 08:11

-

No informational.. everything is a vulnerability – Guru Dec 30 '20 at 11:59

-

So, I'm confused. Are they actually vulnerabilities? You have sort of asked, "the doctor said I have cancer and that it has spread all over my body. How can I change the report so it's not so bad?" If you have that many vulnerabilities, why would you want to alter the report? Confirm the vulnerabilities and fix them, instead? – schroeder Dec 30 '20 at 14:10

1 Answer

Is it normal?

That depends entirely on the server in question. If it's an ancient machine running ancient software, then I would not be surprised to find thousands upon thousands of vulnerabilities. If it were a completely new server set up according to best practices with barely any content on it - then yes, that would definitely be abnormal.

How can I reduce the number of vulnerabilities?

The painfully obvious solution would be to fix the vulnerabilities. You have bought a vulnerability scanner to show you vulnerabilities for a reason, after all.

Can this be tweaked somehow?

Yes. Vulnerability scanners generally have a sense of how "certain" they are that a finding is actually a vulnerability, as opposed to a "maybe could possibly be a vulnerability, perhaps". As certainty decreases, you are increasingly likely to run into findings that are False Positives - meaning the scanner reported something that is not actually a vulnerability. What you care about are so-called True Positives, meaning the scanner identified something that is actually a vulnerability.

You may now be tempted to turn down the sensitivity of the scanner to reduce the number of False Positives and leave yourself with a high density of True Positives. However, this will inevitably increase the number of False Negatives, meaning the scanner fails to report a vulnerability even though it is really there.
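To make that trade-off concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the `Finding` class, the `certainty` scale from 0.0 to 1.0, and the sample findings are assumptions, not Nexpose's data model or real scan output. The `is_real` flag stands for what you would only know after manually verifying each finding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    name: str
    certainty: float  # hypothetical scanner confidence, 0.0 to 1.0
    is_real: bool     # ground truth, known only after manual verification


# Invented sample data, purely for illustration.
FINDINGS = [
    Finding("SQL injection in login form", 0.95, True),
    Finding("Outdated package detected by version banner", 0.40, True),
    Finding("Missing CSRF token on API endpoint", 0.30, False),
    Finding("TLS 1.0 enabled", 0.90, True),
    Finding("Possible directory listing", 0.20, False),
]


def count_errors(findings, threshold):
    """Return (false_positives, false_negatives) for a certainty threshold.

    Findings at or above the threshold are reported; the rest are hidden.
    """
    reported = [f for f in findings if f.certainty >= threshold]
    hidden = [f for f in findings if f.certainty < threshold]
    false_positives = sum(1 for f in reported if not f.is_real)
    false_negatives = sum(1 for f in hidden if f.is_real)
    return false_positives, false_negatives


for threshold in (0.0, 0.5):
    fp, fn = count_errors(FINDINGS, threshold)
    print(f"threshold={threshold}: {fp} false positives, {fn} false negatives")
```

With this invented data, reporting everything yields two false positives and no false negatives, while a threshold of 0.5 removes both false positives but hides one real vulnerability. Real scanners will not split this cleanly, which is exactly why lowering the sensitivity is risky.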

How to proceed now?

Whatever path you choose, it will either result in a lot of work, or in a high risk for you.

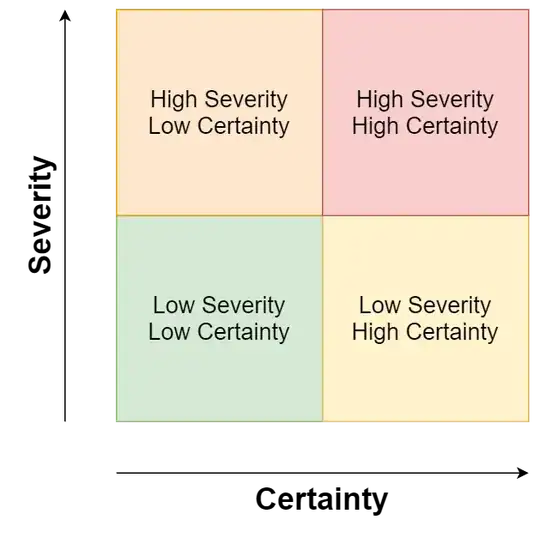

The best possible strategy is now to create a matrix of vulnerabilities, with one axis being the certainty of a vulnerability, ranging from "100% confirmed" to "possibly maybe", and the other axis being the severity of the vulnerability, ranging from "Your server is on fire" to "Could possibly be inconvenient". Here you see a little diagram of what that matrix could look like:

High Severity, High Certainty - These findings need your immediate attention, as they have the most potential to be exploited by attackers.

An example would be an SQL Injection in your web application, allowing attackers to read and modify all data, and perhaps even execute arbitrary code on your server.

High Severity, Low Certainty - These findings should be examined next, to determine whether each one actually constitutes a vulnerability or not.

An example could be outdated software on your server with publicly available exploits, detected by version number. This could be misleading, since maintainers may offer security backports, which fix the vulnerability but do not change the version number. If you are affected by the vulnerability, however, attackers may be able to execute arbitrary code on your server, depending on the vulnerability in question.

Low Severity, High Certainty - While it is very clear that these findings exist, they don't pose a substantial risk to your server, and thus should be patched "later down the line". Just make sure that it actually happens and that "in a year" will not be perpetually a year away.

An example of this could be configuration flaws for TLS (e.g. support for TLS 1.0), missing security headers, disclosure of version information, etc. While the scanner can confirm these with high certainty, their impact is generally low enough to not require immediate action.

Low Severity, Low Certainty - These findings can usually be ignored. It's not certain if they are actual vulnerabilities to begin with, and even if they were, their impact would be rather low anyway.

I'm struggling to think of a good example here, since they don't really matter that much anyway.
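The four quadrants above can be sketched as a small triage function. This is only an illustration under assumptions: it presumes you have exported each finding with a numeric severity (a CVSS-like score from 0 to 10) and a certainty between 0.0 and 1.0. The cutoffs, the `Quadrant` names, and the sample findings are hypothetical and do not reflect Nexpose's export format.

```python
from enum import Enum


class Quadrant(Enum):
    # Declared in the order they should be worked on.
    FIX_NOW = "high severity, high certainty"
    VERIFY = "high severity, low certainty"
    FIX_LATER = "low severity, high certainty"
    IGNORE = "low severity, low certainty"


def triage(severity, certainty, severity_cutoff=7.0, certainty_cutoff=0.7):
    """Place a finding in one of the four quadrants.

    Both cutoffs are arbitrary assumptions; pick values that suit you.
    """
    high_severity = severity >= severity_cutoff
    high_certainty = certainty >= certainty_cutoff
    if high_severity and high_certainty:
        return Quadrant.FIX_NOW
    if high_severity:
        return Quadrant.VERIFY
    if high_certainty:
        return Quadrant.FIX_LATER
    return Quadrant.IGNORE


# Invented sample findings: (name, severity, certainty).
findings = [
    ("TLS 1.0 supported", 3.7, 0.95),
    ("SQL injection in web application", 9.8, 0.95),
    ("Outdated software by version number", 9.0, 0.40),
]

# Sort so that the most urgent quadrant comes first.
order = list(Quadrant)
for name, severity, certainty in sorted(
    findings, key=lambda f: order.index(triage(f[1], f[2]))
):
    print(f"{triage(severity, certainty).name}: {name}")
```

Working through the sorted output top to bottom gives you the order described above: fix the confirmed severe findings, verify the uncertain severe ones, and schedule the rest.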

You could, of course, also go down the path where you reduce the sensitivity until you have only a handful of findings, then have your IT tell you that they won't fix them, and finally everybody has successfully wasted a lot of time and money and done absolutely nothing to improve security whatsoever. As you may be able to tell, this is not advisable - but it is nonetheless the route many companies choose to go down. These companies then find themselves in the news for massive data breaches.

What about "Informational" findings?

A lot of vulnerability scanners also include "Informational" findings, which are things like port scan results, a list of cipher suites supported by the server, etc. These are not vulnerabilities and don't have a direct impact on you. They're just information you may or may not be interested in.

Compare this to a log file, telling you when a server was started and stopped - it's good to have this information in case you need it, but it doesn't require any actions.

-

And after you have done everything @MechMK1 mentioned, be prepared for EOL systems for which no support or patches (in any form) have been provided for years, where you perhaps have some internally built software (from years ago) on an obsolete stack of Java, PHP, and who knows what, untouched for years. And then what do you do? 1/2 – cyzczy Jan 13 '21 at 20:45

-

Oh, so there's no simple upgrade path for a 10+ Linux systems? No, because there were so many changes (initd replaced by systemd / firewalld, configuration files changed for given services). Oh, and that nasty PHP 5.2? Yeah, let's move to PHP 7 (who cares if some functions USED by your old nasty app are deprecated in the 7 release). Oh man, and last but not least, the cherry on the cake, if you will: all the morons who will not understand any of that and instead will 'ping' you xx times a day to check on the status. 2/2 – cyzczy Jan 13 '21 at 20:45

-

+1 I would add that as you start investigating, you may be able to knock off a whole bunch at once; for example, I find that scanners complain about missing CSRF tokens on every. single. API. endpoint. even though an app has an `Authorization: bearer` token. Great, that's 400 pages of report that I can PageDown through and then ignore in the future. – Mike Ounsworth Feb 13 '21 at 03:12

-

But in general you're spot on, @Mech: scanners require a significant time investment to sort through the noise the first time they are run. _(Pet peeve: when a customer sends us an 800 page scan report demanding irately that we fix it, and the report is entirely 404's and TLS errors. Did ... did'ja bother to finish setting up the app before you scanned it???)_ – Mike Ounsworth Feb 13 '21 at 03:18

-

@MikeOunsworth and not just the scanners but the reports, as you point out. – schroeder Feb 13 '21 at 11:22