We want to find unknown, fixable vulnerabilities

The goal of penetration testing is to obtain actionable results: to have someone find vulnerabilities that the organization did not know it had, but could fix once it knows they exist.

Social engineering does not fit this purpose, because it reveals vulnerabilities that are neither new nor fixable. We already know that employees are vulnerable to spearphishing and other classes of social engineering attacks. A successful social engineering attack therefore just confirms what we already know and provides no new knowledge. We also generally consider that the likelihood of people falling for such attacks can be reduced, but not eliminated. So discovering that some social engineering vector works on your company does not necessarily mean that anything should or could be changed to prevent such attacks in the future.

You can (and possibly should) run some awareness campaigns, but you should expect that a good social engineering attack will sometimes succeed anyway, even if you have done everything that can reasonably be done. A user falling for an attack does not mean that the awareness campaigns were flawed or too limited, or that the particular person has 'failed a test' and needs to be penalized (if this seems contentious to you, that is a longer discussion for a separate question).

Detect and mitigate the consequences of social engineering

For an organization with mature security practices, the key part of the response to social engineering attacks is to assume that some employees will fall for the "social engineering" part of the attack. The work then goes into measures that detect such attacks and limit their consequences.

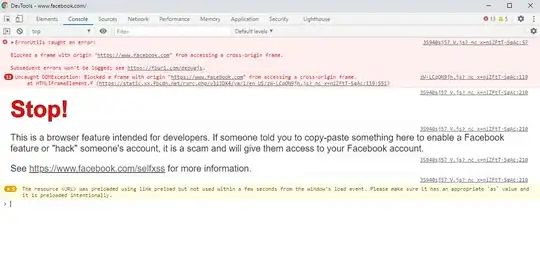

This may be an "assume breach" strategy (which is useful when trying to mitigate insider attacks), but it is not only that. It can and should also involve technical measures that assume the user-facing part of the attack may succeed, and still stop the attack. These include measures that prevent the attack from reaching the user (various controls to limit spoofed emails or websites) and measures that prevent the attack from succeeding even with the user's 'cooperation' (for example, 2FA systems that won't send the required credentials to a spoofed login page). They also include measures that mitigate the consequences of the attack, such as proper access controls so that compromising a random employee does not mean compromising everything, endpoint monitoring, etc.

You can test attacks and response without involving the users

You can run simulated "social engineering" attacks to test your response without any actual social engineering that harms the real users in your organization. To emphasize this: even simulated social engineering attacks do cause harm to the users they target and victimize. You can test your ability to detect and respond to spearphishing by targeting an "informed accomplice" in the organization who will intentionally click whatever needs to be clicked (we already know that this part succeeds often enough), provided the existing systems and controls allow the payload to reach them. Then see how your response works. There is no need to mass-target unwitting employees to gain the same benefit for the security audit of your systems.

You can also test your ability to mitigate the consequences of social engineering attacks by starting the penetration test from a foothold in your organization. Give the penetration testers remote access to a workstation and the credentials of an unprivileged user account, on the assumption that this is what a successful social engineering attack would yield. You don't need to disrupt a real user's workday to run this test.