From time to time, questions come up on this board concerning web applications that use client-side cryptography (or ‘in-browser’ cryptography), where the applications claim to be designed so that their operators have ‘zero-access’ to users’ private information. See the links to several related questions below. A common example of such an application is Protonmail, which aims to provide end-to-end encrypted email. Protonmail claims that ‘your data is encrypted in a way that makes it inaccessible to us’, and that ‘data is encrypted on the client side using an encryption key that we do not have access to’.

In discussions around this subject, the ‘browser crypto chicken-and-egg problem’ frequently comes up. The term was coined in 2011 by security researcher Thomas Ptacek. In essence, the problem is: if you can't trust the server with your secrets, then how can you trust the server to serve secure crypto code? Using Protonmail as an example, one could argue that a rogue Protonmail server admin (or an attacker who has gained access to Protonmail’s servers) could alter the client-side javascript code served by Protonmail’s server, so that the code captures the user’s private keys or plaintext information and sends these secrets back to the server (or somewhere else).

The question is: can the ‘browser crypto chicken-and-egg problem’ be solved using the following method?

The web application is designed as a single page web application. A static web page is served at the beginning of the user’s session, and this static page remains loaded in the user’s browser throughout the user’s session. Like Protonmail, all cryptography is done in-browser - the user’s plaintext secrets and private encryption key never leave the browser, and only ciphertext is sent to the server. However, unlike Protonmail, where a new page is dynamically generated by the server after each action by the user – users’ requests are sent from the static page to the server by way of client-side AJAX or XHR calls to the server, and the static page is updated with the server’s responses to these calls.

All supporting files depended upon by the static page (e.g. javascript files, css files, etc.) are referenced by the static page using subresource integrity.

The user’s private encryption key (or password from which the private key is derived) is stored by the user. The user enters their private key (or password) via an interface on the static page, which in-turn passes the key to the client-side scripting running in-browser. All in-browser cryptography is handled by the browser's native Web Crypto API.

To mitigate XSS attacks, all external content is sanitized in the client side scripting before being written to the static page; all external content is written to static page elements using the elements’ .innerText attribute (as opposed to .innerHTML), and a strict content security policy (CSP) is applied, prohibiting the use of inline scripting.

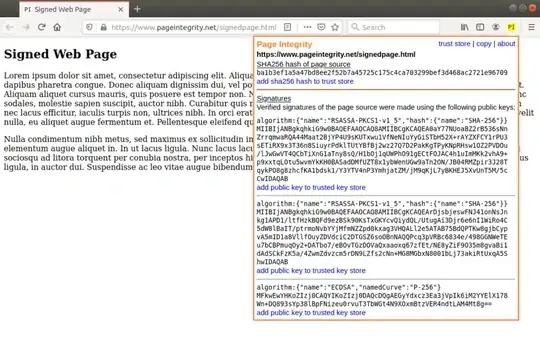

A trusted reviewer (TR) reviews the static page, and all supporting files. TR determines that the client-side code is ‘as advertised’, and at no point does the client-side code send the user’s secrets back to the server (or anywhere else), and at no point does the static page request a new page from the server, and that all of the above have been implemented correctly. TR then signs the static page with his private signing key and makes the signature public.

The user points his browser to the static page. Then, the user clicks the ‘save page as’ feature on his web browser, to save the static page (which is currently loaded in his browser) to his system. Using TR’s public key he verifies TR’s signature on the static page. If the signature is verified, then the user proceeds to use the service by way of the static page already loaded in his web browser.
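To make the SRI requirement concrete, here is a minimal Python sketch of the kind of check TR (or a curious user) could run against the saved static page. It computes an SRI value in the standard `<algorithm>-<base64 digest>` form and lists external scripts and stylesheets that carry no `integrity` attribute. The names `sri_value`, `IntegrityAudit`, and `find_unprotected_resources` are hypothetical helpers invented for this illustration; they are not part of any existing tool, and the sketch does not check that the pinned hashes are correct.

```python
import base64
import hashlib
from html.parser import HTMLParser
from typing import List, Optional


def sri_value(content: bytes, algorithm: str = "sha384") -> str:
    """Return an SRI integrity value of the form '<algorithm>-<base64 digest>'."""
    digest = hashlib.new(algorithm, content).digest()
    return algorithm + "-" + base64.b64encode(digest).decode("ascii")


class IntegrityAudit(HTMLParser):
    """Collect external scripts and stylesheets that lack an integrity attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[Optional[str]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and "src" in attributes:
            url = attributes["src"]
        elif tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower().split():
            url = attributes.get("href")
        else:
            return  # inline scripts and other tags are not covered by SRI
        if not attributes.get("integrity"):
            self.missing.append(url)


def find_unprotected_resources(html: str) -> List[Optional[str]]:
    """Return the URLs of external resources referenced without SRI."""
    audit = IntegrityAudit()
    audit.feed(html)
    audit.close()
    return audit.missing
```

An empty result only shows that every external script and stylesheet is pinned; whether the pinned code actually behaves ‘as advertised’ is still a matter for TR's review.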

To summarize: The static page, which has been reviewed and signed by TR, remains loaded in the user’s browser throughout the user’s session, and at no point is it replaced by a new page from the server. The user verifies the integrity of the static page (cryptographically, in a manner similar to the way that the integrity of downloadable files is often verified) at the beginning of his session by verifying TR’s signature of this page using TR’s public key. [It would be nice if browsers (or perhaps a browser extension) had a built-in method for performing this function, but until that day comes, the procedure of step 6 above will suffice]. The use of subresource integrity (SRI) in step 2 ensures that supporting files cannot be modified by the attacker, as doing so would either break the SRI check, or necessitate a change in the root document, which would cause the signature verification in step 6 to fail.
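The chain of trust in this summary can be illustrated with a small Python sketch. It is only a model under stated assumptions: the page layout, the file name `app.js`, and the helpers `build_page` and `page_digest` are hypothetical, and a plain SHA-256 digest stands in for the content that TR's signature would cover (the signature scheme itself is not modelled).

```python
import base64
import hashlib


def sri_value(content: bytes) -> str:
    """SRI value ('sha384-<base64 digest>') for a supporting file."""
    digest = hashlib.sha384(content).digest()
    return "sha384-" + base64.b64encode(digest).decode("ascii")


def build_page(script: bytes) -> bytes:
    """Hypothetical root document that pins app.js through its SRI value."""
    tag = '<script src="app.js" integrity="' + sri_value(script) + '"></script>'
    page = "<!doctype html><html><head>" + tag + "</head><body></body></html>"
    return page.encode("utf-8")


def page_digest(page: bytes) -> str:
    """Stand-in for what TR signs: any change to the page changes this digest."""
    return hashlib.sha256(page).hexdigest()


reviewed_script = b"console.log('reviewed');"
tampered_script = b"console.log('reviewed'); exfiltrate();"

reviewed_page = build_page(reviewed_script)
signed_digest = page_digest(reviewed_page)

# Case 1: the attacker swaps the script but leaves the page untouched.
# The browser recomputes the hash and compares it with the pinned value.
pinned = sri_value(reviewed_script)
sri_ok = sri_value(tampered_script) == pinned

# Case 2: the attacker also rewrites the page to pin the tampered script.
# The page no longer matches the content that TR reviewed and signed.
tampered_page = build_page(tampered_script)
digest_ok = page_digest(tampered_page) == signed_digest

print("SRI check passes:", sri_ok)
print("Signed content matches:", digest_ok)
```

In both cases the modification is detectable, which is the property the summary relies on; the sketch says nothing about attacks that do not require changing the page or its supporting files.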

For the sake of this question, assume TR is competent to perform the task at hand, and that the user has a reliable method (e.g. through a trusted third party or some out of band method) of verifying that TR’s public key is true and correct. Also, for the sake of this question, please set aside the possibility of side-channel attacks, such as browser vulnerabilities, a compromise of TR’s device or user’s device, a compromise of TR’s private key or user’s private key, etc.

Given the above, can you think of some way that a rogue server admin (or a hacker who has gained access to the server) would be able to steal the user’s secrets, and if so, how?

Related:

- Can protonmail access my passwords and hence my secrets?

- What’s wrong with in-browser cryptography in 2017?

- Problems with in Browser Crypto

- How To Prove That Client Side Javascript Is Secure?

- How to validate client side safety in a Zero Knowlegde model

- Why is there no web client for Signal?

- index.html integrity

- Javascript crypto in browser

- End to end encryption on top of HTTPS/TLS

Edit 2/27/2021

I've developed a small browser extension for Firefox, incorporating many of the ideas in this question and the following answers and responses, aimed at solving this problem. See my answer below for more info.