While conducting a software security assessment, if you have access to the source code of a compiled application (say C++), would you ever do any analysis on the compiled version, either with automated techniques or manually? Is fuzzing the only technique applicable in this situation, or are there other potential advantages to looking at the binary?

6 Answers

It depends on the situation - type of application, deployment model, especially your threat model, etc.

For example, an optimizing compiler can substantially transform delicate code and introduce subtle flaws. One such flaw is removing security checks that appear in the source (satisfying your code review) but not in the binary (failing the reality test).
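Here is a concrete version of the "check removed by the compiler" case. In C++, signed integer overflow is undefined behaviour, so an optimizer may legally fold a check written as `a + b < a` into `b < 0`. The overflow test then disappears from the binary, even though a reviewer sees it in the source.

The sketch below shows the unsafe idiom only as a comment. It then gives a version that tests the operands before adding, which leaves the optimizer nothing to assume away. The function name is illustrative, not from any library.

```cpp
#include <climits>

// UNSAFE idiom, shown only as a comment:
//     bool add_overflows(int a, int b) { return a + b < a; }
// Signed overflow is undefined behaviour, so the optimizer may assume
// `a + b` never wraps and rewrite the test as `b < 0`, silently
// dropping the overflow check from the compiled binary.

// Safer idiom: compare against the limits *before* adding, so no
// overflow ever happens and there is nothing to optimize away.
inline bool add_would_overflow(int a, int b) {
    if (b > 0) return a > INT_MAX - b;  // sum would exceed INT_MAX
    if (b < 0) return a < INT_MIN - b;  // sum would go below INT_MIN
    return false;                       // adding zero never overflows
}
```

GCC and Clang also provide `__builtin_add_overflow` for the same purpose. Whether a given compiler actually drops the unsafe check depends on the compiler, its version and the optimization level. That variability is exactly why inspecting the generated binary can be worthwhile.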

There are also code-level rootkits that would completely evade your code review but show up in the deployed binaries. You mentioned C++, but there are managed-code rootkits for .NET and Java too.
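One way to catch this kind of tampering is to rebuild the reviewed source with a trusted toolchain and compare the result with what is actually deployed. This assumes the project has reproducible builds.

A minimal sketch of the comparison step follows. `binaries_identical` is a hypothetical helper. In practice you would more likely compare cryptographic hashes (e.g. SHA-256) of the two files.

```cpp
#include <fstream>
#include <iterator>
#include <string>

// Returns true only if both files can be opened and contain exactly the
// same bytes. Assumes a reproducible build: rebuilding the reviewed source
// with the same toolchain and flags yields a bit-identical binary.
bool binaries_identical(const std::string& deployed_path,
                        const std::string& rebuilt_path) {
    std::ifstream a(deployed_path, std::ios::binary);
    std::ifstream b(rebuilt_path, std::ios::binary);
    if (!a || !b) return false;  // a missing file is treated as a mismatch

    std::istreambuf_iterator<char> ia(a), ib(b), end;
    // Walk both files in lockstep and stop at the first differing byte.
    while (ia != end && ib != end) {
        if (*ia != *ib) return false;
        ++ia;
        ++ib;
    }
    // Identical only if both files ended at the same point.
    return ia == end && ib == end;
}
```

A mismatch is not proof of a backdoor, since embedded timestamps or build paths are common benign causes. It does tell you that the deployed artifact is not what you reviewed.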

Additionally, the compiler itself may be compromised with a rootkit that inserts backdoors into your app. See the history of the original rootkit, described in Ken Thompson's "Reflections on Trusting Trust": the compiler inserted a backdoor password into the login program. It also re-inserted this backdoor logic into the compiler itself when recompiling from "clean" code. Again, the backdoor is missing from the source code but present in the binary.

That said, it is of course more difficult and time-consuming to reverse engineer the binary, and doing so would be pointless in most scenarios if you already have the source code.

I want to emphasize this point: if you have the source code, don't even bother with RE until you've cleaned up all the other vulnerabilities you've found via code review, pentesting, fuzzing, threat modeling, etc. And even then, only bother if it's a highly sensitive app or extremely visible.

The edge cases are hard enough to find, and rare enough, that your efforts can be better spent elsewhere.

On the other hand, note that some static analysis products specifically scan binaries (e.g. Veracode), so if you're using one of those it doesn't really matter...


@AviD Solid points; I totally agree on the rootkits in binaries/compilers.

Setting aside the valid points AviD makes: if you're a knowledgeable security professional, most of the vulnerabilities will likely be in your source code. A strong knowledge of secure programming and of how reverse engineering is done should give you the best way to fix or prevent the majority of holes in your source code. Plus, if there is an exploit in the compiler or binary, often there is nothing you as a developer can do to prevent it except switch to another compiler or language (which usually is not a viable option).

There are plenty of non-security reasons to look into the final binary too. You can do so with a debugger, a disassembler, or a profiler and emulator like Valgrind (which can verify various aspects of a compiled program).

Security and correctness of the program usually go hand in hand.

My workflow starts with linting the code (in my case with PCLINT). I then build the binaries and verify them with a fuzzer and with Memcheck (from Valgrind). That has given me very good results when it comes to robustness and reliability. Only PCLINT has access to the source code in this setup.


Binary and source code analysis give somewhat different points of view, so both should be applied. However, binary analysis can be intimidating for humans. Source-level analysis can be frustrating too, but analyzing application logic at the assembly level is rarely the best choice. On the other hand, in binary analysis you are dealing with what actually happens and cannot be otherwise. Where the source code lets you make assumptions, the binary gives you a definite state.

Either way, tests like fuzzing should be run against the compiled application to ensure at least some level of robustness. The code coverage achieved depends on the fuzzing methodology, the application's specifics and other factors. That is too much to explain here; it's the art of fuzzing. Even Microsoft develops fuzzing tools and applies them in its SDL. Here is a paper from Codenomicon that gives insight into the process: http://www.codenomicon.com/resources/whitepapers/codenomicon-wp-sdl-20100202.pdf
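Here is a rough illustration of fuzzing the compiled code: a minimal harness in the style of LLVM's libFuzzer, which repeatedly calls the entry point `LLVMFuzzerTestOneInput` with mutated inputs. The function under test, `parse_key`, is a hypothetical stand-in for the application's real input-handling code.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <string>

// Hypothetical code under test: returns the part of "key=value" before '='
// (or an empty string if there is no '='). Replace with the real parser.
std::string parse_key(const std::string& input) {
    const std::size_t eq = input.find('=');
    if (eq == std::string::npos) return {};
    return input.substr(0, eq);
}

// libFuzzer calls this once per generated input.
extern "C" int LLVMFuzzerTestOneInput(const std::uint8_t* data, std::size_t size) {
    // Copy the raw bytes into a std::string; embedded NULs are preserved.
    const std::string input(reinterpret_cast<const char*>(data), size);
    const std::string key = parse_key(input);
    // Property check: a key can never be longer than the input it came from.
    // abort() makes libFuzzer record the input that triggered the violation.
    if (key.size() > input.size()) std::abort();
    return 0;  // libFuzzer expects 0 for a normally processed input
}
```

When built with `clang++ -fsanitize=fuzzer,address`, libFuzzer provides `main()` and drives the harness. AddressSanitizer turns silent memory corruption into an immediate crash report. Because the harness is just a function, it can also be called directly from a unit test.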

Looking at the binary is always necessary, as many security features can only be assessed by analyzing the compiled binary. For example, the source code may hint at vulnerabilities in the application's logic. Only the compiled binary, however, tells us whether compile-time features such as ASLR support (position-independent code) and a non-executable stack are enabled or disabled. This information cannot be obtained just by looking at the source code. Fuzzing is generally a useful technique for brute-forcing vulnerabilities, but it can be time-consuming and resource-heavy. Relying on it alone to identify issues can sometimes lead to false or missed results. Hence a reverse engineering tool like radare2 or Hopper is also needed to analyze the binary.
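As a sketch of this kind of check, the function below reads two hardening properties straight from an ELF binary's headers:

- whether the binary is position-independent (`e_type == ET_DYN`), which lets the loader randomize its base address under ASLR;
- whether the `PT_GNU_STACK` program header marks the stack non-executable.

The sketch assumes a 64-bit little-endian ELF file (typical Linux x86-64) already loaded into memory. `inspect_elf64` and `HardeningInfo` are illustrative names. A shared library is also `ET_DYN`, so this check alone does not distinguish a PIE executable from a `.so`.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

struct HardeningInfo {
    bool pie = false;       // e_type == ET_DYN: base address can be randomized
    bool nx_stack = false;  // PT_GNU_STACK present without the PF_X flag
};

namespace {
constexpr std::uint16_t kEtDyn = 3;               // ET_DYN
constexpr std::uint32_t kPtGnuStack = 0x6474e551; // PT_GNU_STACK
constexpr std::uint32_t kPfX = 0x1;               // PF_X (executable)

// Reads an n-byte little-endian integer at offset `off` (caller checks bounds).
std::uint64_t read_le(const std::vector<std::uint8_t>& b, std::size_t off, int n) {
    std::uint64_t v = 0;
    for (int i = n - 1; i >= 0; --i) v = (v << 8) | b[off + i];
    return v;
}
}  // namespace

// Handles ELFCLASS64 / ELFDATA2LSB only; returns nullopt for anything else.
std::optional<HardeningInfo> inspect_elf64(const std::vector<std::uint8_t>& b) {
    // e_ident: magic, EI_CLASS == 2 (64-bit), EI_DATA == 1 (little-endian)
    if (b.size() < 64 || b[0] != 0x7f || b[1] != 'E' || b[2] != 'L' ||
        b[3] != 'F' || b[4] != 2 || b[5] != 1)
        return std::nullopt;

    HardeningInfo info;
    info.pie = read_le(b, 16, 2) == kEtDyn;        // e_type

    const std::uint64_t phoff = read_le(b, 32, 8);     // e_phoff
    const std::uint64_t phentsize = read_le(b, 54, 2); // e_phentsize
    const std::uint64_t phnum = read_le(b, 56, 2);     // e_phnum
    if (phnum > 0 && phoff > b.size()) return std::nullopt;

    // nx_stack starts false, so a binary with no PT_GNU_STACK header is
    // conservatively reported as having an executable stack.
    for (std::uint64_t i = 0; i < phnum; ++i) {
        const std::uint64_t off = phoff + i * phentsize;
        if (phentsize < 8 || off > b.size() || b.size() - off < 8)
            return std::nullopt;                   // truncated or malformed
        if (read_le(b, off, 4) == kPtGnuStack)     // p_type
            info.nx_stack = (read_le(b, off + 4, 4) & kPfX) == 0;  // p_flags
    }
    return info;
}
```

For day-to-day work, other tools report the same properties and more, such as stack canaries and RELRO:

- `readelf -l` (look at the `GNU_STACK` flags);
- the `checksec` script;
- radare2's `rabin2 -I`.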

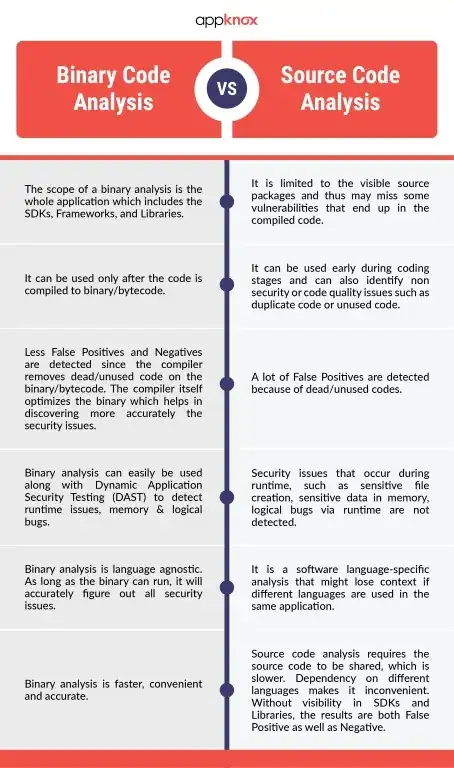

I've also found this resource comparing binary and source code analysis, which should help.

Disclaimer: I work at Appknox.


This is your company. Please disclose your relationship to any links/pics you have in your posts. – schroeder Nov 13 '19 at 17:28

There's a point that AviD alluded to but that should probably be emphasized: code review tends to be a far more time- and effort-efficient process than binary review. Particularly with C++, the binary is very difficult to understand even for the average security professional, let alone the average developer.

For languages that compile to bytecode, such as Java and .NET, this is less the case: unobfuscated bytecode can be decompiled back into something fairly human-readable.


In regards to Java/.NET, there are certain techniques that can confuse the crap out of decompilers. They either refuse to decompile or return only the raw bytecode, without reconstructing the original code. – Damian Nikodem Mar 04 '15 at 07:54