We store the URL instead of the image.

In addition, this will add information and privacy risks. Let me show with a visual demo.

If you try to upload any image to StackExchange, you will notice that the image gets hosted by imgur.com. The SE server fetches the images and uploads a copy of it to its private server.

I will use a popular and innocent meme for the experiment. Let's start with the following URL for our show: https://i.imgflip.com/2fv13j.jpg. Plase note that I have to use a deep link for this demo to work.

I want to attach it to this post using StackExchange upload tool. Exactly like the scenario in the question.

Here is our newly upload image!

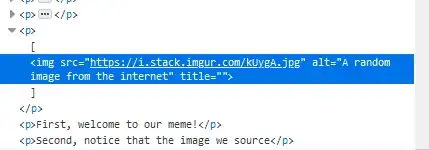

Let's go deeper and investigate futher. Notice that the image is now sourced from imgur.com rather than from imgflip.com. Please be patient with me if the two URLs have similar names. By opening Developer tools, you can see where the image is pointed

Privacy concerns

When you link just any http(s):// online resource, your browser will initiate a connection to that server, sending a lot of information. On a high-traffic website, the owner of the website gets a lot of information about IP addresses of people who visit this Security SE page, along with (if enabled) referral links and third party cookies. Considering that 3rd party cookies are enabled by default, this may leak the user identity if abused the right way.

By owning the image I want to upload to a post, StackExchange prevents imgflip.com from knowing who is displaying their picture.

And, as we are going to see in the second part, to change it in the future.

Risk of deception

Consider that no matter your effort to deploy a static "Front-page-ish" simple website, any URL to a remote resource is always interpreted by the server, on every request. While it may end with .jpg the server may likely be using a scripting language to interpret the request and, in the end, choose what content to serve.

Now that you have profiled your visitors, you have the power to choose what content to display for them, live. Consider the Uber-Greyball case as an example of live deception. Popular dating app Tinder uses a similar soft-ban or greylisting technology

Unknown to [...] authorities, some of the digital cars they saw in the app did not represent actual vehicles. And the Uber drivers they were able to hail also quickly canceled. That was because Uber had tagged [... Mr Police officer ...] — essentially Greyballing them as city officials — based on data collected from the app and in other ways.

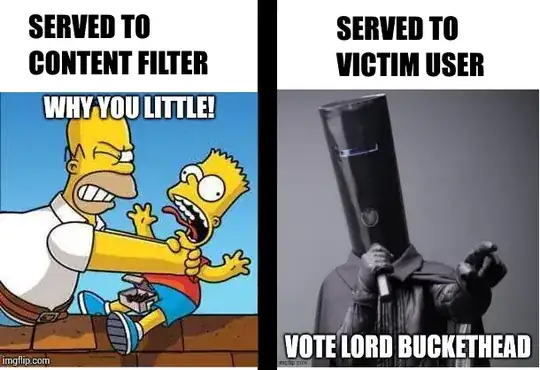

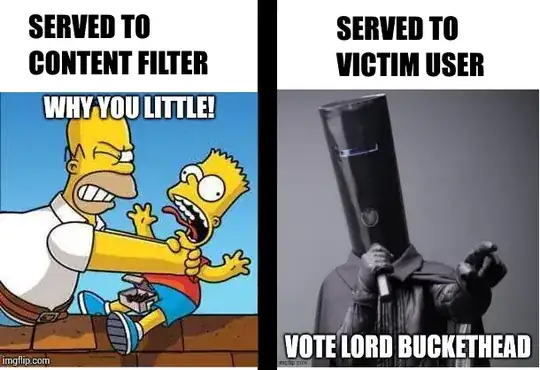

As an example, the server can implement such a logic: decide where to serve an innocuous meme or an undesirable content, e.g. political advertising, based on the user requesting (reminds some Cambridge Analytic-thing?). Neverthles, the URL never changes.

CA's professed advantage is having enough data points on every American to build extensive personality profiles, which its clients can leverage for "psychographic targeting" of ads

request for https://host.com/images/img1.png

if (request comes from any of

StackExchange moderator or

Automated content filter or

Government enforcer or

Anything you can imagine)

{

decide to serve innocuous content

}

else if (request comes from a user you want to decept)

{

decide to serve a targeted advertising or deceptive content somehow

}

Look at this picture to see what may happen with real time filtering. A the same URL, different users see different content. Thanks to Lord Buckethead for keeping myself politically neutral.

At the same URL, we are now able to serve content that is different with regards to who is requesting it.

For these reasons, you have to consider fetching the remote resource in order to take a permanent snapshot of it, with regards to bandwidth and disk space constraints.

I won't discuss here about 1) retaining EXIF tags and 2) re-encoding the image with your own codec to prevent further payload attacks