According to Google, you can add the SHA-1 certificate fingerprint and the package name in the Google Developers Console to restrict the usage of an API key to your Android app, so that developers can make sure their API keys are not being used by clients other than their own.

According to this and this, developers simply add the SHA-1 fingerprint and the package name to the headers of their HTTPS requests so that Google can verify that a request comes from the "authorized" app and consequently serve it.

But since the SHA-1 fingerprint and the package name are publicly known, anyone else can just as easily add them to the headers of their own HTTPS requests, bypass the restriction, and have their program accepted by Google as the "authorized" app!

Therefore, I do not understand the usefulness of this kind of restriction, which is based on information that is publicly known!
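To make the concern concrete, here is a minimal sketch of how any client could attach the same identity values to a request. The header names `X-Android-Package` and `X-Android-Cert` are the ones commonly reported for Android-restricted keys and are an assumption here, as is the fingerprint format (hex without colons); the URL, package name, and fingerprint are hypothetical placeholders, and the request is only built, never sent.

```python
from urllib.request import Request


def identity_headers(package_name: str, cert_sha1: str) -> dict:
    """Return the app-identity headers a client would attach.

    Assumption: the fingerprint is sent as hex without colons.
    """
    return {
        "X-Android-Package": package_name,
        "X-Android-Cert": cert_sha1.replace(":", ""),
    }


def build_request(url: str, package_name: str, cert_sha1: str) -> Request:
    """Build (but do not send) a request that claims to come from the app."""
    return Request(url, headers=identity_headers(package_name, cert_sha1))


# Hypothetical values: both are readable from any published APK.
headers = identity_headers(
    "com.example.stackexchange",
    "AA:BB:CC:DD:EE:FF:00:11:22:33:44:55:66:77:88:99:AA:BB:CC:DD",
)
request = build_request(
    "https://api.example.com/v1/resource?key=YOUR_API_KEY",
    "com.example.stackexchange",
    "AA:BB:CC:DD:EE:FF:00:11:22:33:44:55:66:77:88:99:AA:BB:CC:DD",
)
```

Nothing in this sketch requires access to the signing key, which is exactly why the values cannot act as a secret.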

- Being a client "secret", it will always be replayable/spoofable. Using the package sha1 would probably prevent other people from simply repackaging your app. Also it might be easier to spot rogue apps this way. – domenukk Jun 24 '19 at 16:41

- @domenukk the package sha1? So do you mean using the SHA-1 hash of the package name instead of the SHA-1 fingerprint of the certificate signing the app? i.e. if the package name were `com.example.stackexchange`, then `1d6b033289a9844b24f62c7c1cdf054726debb58` should be used!? – user9514066 Jun 25 '19 at 12:01

- No, I mean the fingerprint. It will change when the package gets repackaged by somebody else (since the certificate will be different). – domenukk Jun 25 '19 at 21:39

2 Answers

As domenukk stated in the comments, the Google API key restriction prevents other users from packaging your API key in their app. It is simply a way for Google to ensure that the API calls it receives come from an application that was packaged by the developer who added that key to their Google API account.
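As a rough illustration of why repackaging changes the fingerprint: the fingerprint is just the SHA-1 digest of the signing certificate's encoded bytes, so a different certificate yields a different value. The sketch below uses placeholder byte strings rather than real DER-encoded certificates, and the function name is hypothetical.

```python
import hashlib


def cert_fingerprint(cert_der: bytes) -> str:
    """Return the SHA-1 digest of the certificate bytes as colon-separated hex."""
    digest = hashlib.sha1(cert_der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


# Placeholder bytes standing in for two different signing certificates.
original = cert_fingerprint(b"developer signing certificate")
repackaged = cert_fingerprint(b"someone else's signing certificate")

# An app re-signed by another party no longer matches the registered value.
fingerprints_differ = original != repackaged
```

The check only helps against an app that reports its own, genuine fingerprint; a client that simply copies the registered value is not affected by it.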

However, as you rightly pointed out, it is easy to circumvent. For example, this answer on SO shows how to build a request using any Android Google API key. So the matter of circumventing the restriction is rather trivial.

The benefit lies in the cost it imposes on an attacker. A similar question was asked about HTTP referrers, so I will just quote the part relevant to your question (just replace "referrer header" with "SHA-1 fingerprint"):

So, just because a malicious agent could copy the referrer header, they may not have the time or inclination to do so. Think of a valid referrer header like a concert wristband. Can you forge them, or sneak around the guard checking wristbands? Sure you can, but it doesn't make the band useless.

It raises the cost for the attacker.

Secrets are Public

Therefore, I do not understand the usefulness of this kind of restriction, which is based on information that is publicly known!

Even if they had not used this approach and had instead provided you with a secret, you would still have to use it from inside your mobile app.

Any type of secret you ship inside your mobile app code to identify it as the authorised one to make requests to its own backend or third party ones MUST be considered public from the moment you upload it to the Google Play store.

You may now ask why?

That's because a plethora of open source and commercial tools exist that make the task easy enough for even non-developers to do it. The secret can be extracted statically from the binary, or at runtime with a Man in the Middle (MitM) attack and/or an instrumentation framework.
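As a minimal sketch of the static route, the snippet below treats an APK as the ZIP archive it is and searches every entry for byte sequences shaped like a Google API key. The pattern (`AIza` followed by 35 URL-safe characters) is the commonly cited shape and is an assumption here; the function name, the fake key, and the in-memory archive are hypothetical. A plain byte search like this misses keys stored as UTF-16, split across strings, or obfuscated.

```python
import io
import re
import zipfile

# Assumed shape of a Google API key: "AIza" plus 35 URL-safe characters.
KEY_PATTERN = re.compile(rb"AIza[0-9A-Za-z_\-]{35}")


def find_keys_in_apk(apk) -> set:
    """Scan every entry of an APK (a ZIP archive) for key-shaped byte strings."""
    found = set()
    with zipfile.ZipFile(apk) as archive:
        for name in archive.namelist():
            for match in KEY_PATTERN.findall(archive.read(name)):
                found.add(match.decode("ascii"))
    return found


# Build a tiny in-memory archive that stands in for a real APK.
FAKE_KEY = "AIza" + "0" * 35
demo_apk = io.BytesIO()
with zipfile.ZipFile(demo_apk, "w") as archive:
    archive.writestr("classes.dex", b"\x00junk" + FAKE_KEY.encode("ascii") + b"\x00more")
    archive.writestr("res/raw/notes.txt", b"nothing interesting here")
demo_apk.seek(0)

keys = find_keys_in_apk(demo_apk)
```

Dedicated tools go much further than this (decompilation, resource decoding, native library inspection), but even a few lines are enough for keys left in plain form.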

I wrote a series of articles around this topic:

How to Extract an API key from a Mobile App with Static Binary Analysis:

The range of open source tools available for reverse engineering is huge, and we really can't scratch the surface of this topic in this article, but instead we will focus in using the Mobile Security Framework(MobSF) to demonstrate how to reverse engineer the APK of our mobile app. MobSF is a collection of open source tools that present their results in an attractive dashboard, but the same tools used under the hood within MobSF and elsewhere can be used individually to achieve the same results.

During this article we will use the Android Hide Secrets research repository that is a dummy mobile app with API keys hidden using several different techniques.

Steal that Api Key with a Man in the Middle Attack:

In order to help to demonstrate how to steal an API key, I have built and released in Github the Currency Converter Demo app for Android, which uses the same JNI/NDK technique we used in the earlier Android Hide Secrets app to hide the API key.

So, in this article you will learn how to setup and run a MitM attack to intercept https traffic in a mobile device under your control, so that you can steal the API key. Finally, you will see at a high level how MitM attacks can be mitigated.

Can I Mitigate the Risk of Using Secrets in a Mobile App?

Yes, you can, but the success of the different mitigation techniques depends on the skill set of the attacker and the time they are willing to spend attacking your app.

Before I proceed with some of the possible mitigation techniques, I want to make clear that security must be approached with defence in depth. This means adding as many layers as possible, so that bypassing them takes more time and a higher skill set. Defence in depth is nothing new and has been used for centuries, as in medieval castles.

You may now ask: what are some of those techniques?

You can start by hiding your secrets in native C code to make them hard to reverse engineer from the binary, as I showed in the article linked above about stealing an API key, whose introduction says:

In order to help demonstrate how to steal an API key, I have built and released in Github the Currency Converter Demo app for Android, which uses the same JNI/NDK technique we used in the earlier Android Hide Secrets app to hide the API key.

So, while this makes the secret difficult to extract from the binary, it doesn't prevent it from being extracted at runtime with a MitM attack.

MitM attacks can be mitigated by using certificate pinning to protect your HTTPS channels, and I wrote the article Securing HTTPS with Certificate Pinning to show how it can be done:

In order to demonstrate how to use certificate pinning for protecting the https traffic between your mobile app and your API server, we will use the same Currency Converter Demo mobile app that I used in the previous article.

In this article we will learn what certificate pinning is, when to use it, how to implement it in an Android app, and how it can prevent a MitM attack.
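The core check behind pinning can be sketched in a few lines: hash the server's public key information and accept the connection only if the result is in a set of values shipped with the app. The sketch assumes the common pin format (base64 of the SHA-256 digest of the DER-encoded SubjectPublicKeyInfo) and uses placeholder bytes; extracting the real SubjectPublicKeyInfo from a certificate needs an ASN.1 or TLS library and is not shown, and on Android the comparison is normally done by the platform or the HTTP client rather than by hand. All names are hypothetical.

```python
import base64
import hashlib


def spki_pin(spki_der: bytes) -> str:
    """Return base64(SHA-256(SubjectPublicKeyInfo bytes)), the assumed pin format."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def is_pinned(server_spki_der: bytes, pinned: set) -> bool:
    """Accept the server only if its key hash is in the pin set."""
    return spki_pin(server_spki_der) in pinned


# Placeholder bytes standing in for real public key structures.
genuine_key = b"public key of the real API server"
proxy_key = b"public key generated by an intercepting proxy"

# Pins shipped with the app, including a backup for key rotation.
pins = {spki_pin(genuine_key), spki_pin(b"backup public key")}

accepts_genuine = is_pinned(genuine_key, pins)
accepts_proxy = is_pinned(proxy_key, pins)
```

A proxy that presents its own certificate fails this check even when its root certificate is trusted by the device, which is what stops the simple MitM setup.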

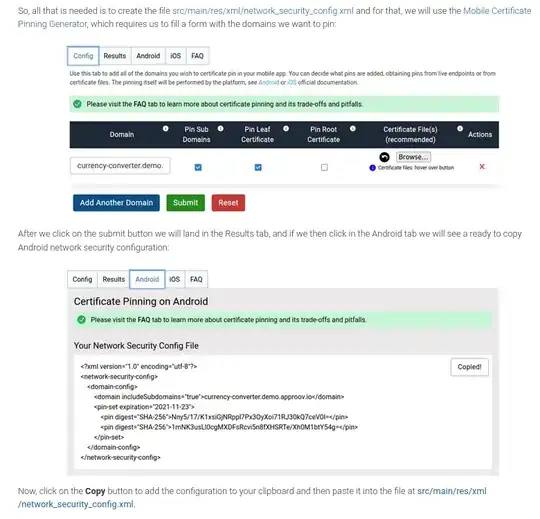

The article explains how easy it is to use the Mobile Certificate Pinning Generator, a free online tool, to get a proper pinning configuration to copy/paste into your Android manifest:

Don't forget to read the FAQ section to properly understand the benefits, pitfalls and trade-offs of using pinning:

Oh, I see you have immediately spotted the question about whether certificate pinning can be bypassed. The short answer is YES and, as you may have guessed, I also wrote the article How to Bypass Certificate Pinning with Frida on an Android App to show how to do it:

Today I will show how to use the Frida instrumentation framework to hook into the mobile app at runtime and instrument the code in order to perform a successful MitM attack even when the mobile app has implemented certificate pinning.

Bypassing certificate pinning is not too hard, just a little laborious, and allows an attacker to understand in detail how a mobile app communicates with its API, and then use that same knowledge to automate attacks or build other services around it.

I can see you are now going back to what you already said:

Therefore, I do not understand the usefulness of this kind of restriction, which is based on information that is publicly known!

But now you may be thinking more along the lines of: why bother if, at the end of the day, everything can be bypassed?

Well, it's not impossible to open the doors of your home or car, but you don't leave them open, do you?

Remember, it is all about defence in depth, with as many layers as you can afford.

Possible Better Solution

Hope is not lost yet: security is a never-ending cat-and-mouse game between security researchers and attackers.

I recommend that you read this answer I gave to the question How to secure an API REST for mobile app?, especially the sections Hardening and Shielding the Mobile App, Securing the API Server and A Possible Better Solution.

You will come to understand that you can use Android Device Check as one more layer of defence, but ultimately your best option is to use a Mobile App Attestation approach to guarantee the authenticity of your mobile app's requests to a backend.
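One way to picture the attestation idea is a short-lived signed token that the backend can verify without the app ever holding the signing secret. The sketch below is a generic illustration under that assumption, not the scheme of any particular attestation product: an HMAC-signed token stands in for whatever format a real service issues, and all names and values are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def sign_token(secret: bytes, claims: dict) -> str:
    """Issue a token; in this sketch only the attestation service would call this."""
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode("utf-8"))
    signature = base64.urlsafe_b64encode(hmac.new(secret, payload, hashlib.sha256).digest())
    return (payload + b"." + signature).decode("ascii")


def verify_token(secret: bytes, token: str, now: float = None) -> bool:
    """Backend check: the signature must match and the token must not be expired."""
    try:
        payload, signature = token.encode("ascii").split(b".")
        expected = base64.urlsafe_b64encode(hmac.new(secret, payload, hashlib.sha256).digest())
        if not hmac.compare_digest(expected, signature):
            return False
        claims = json.loads(base64.urlsafe_b64decode(payload))
    except ValueError:
        # Malformed token: wrong number of parts, bad base64, or bad JSON.
        return False
    current = time.time() if now is None else now
    return claims.get("exp", 0) > current


# Hypothetical secret shared by the attestation service and the backend only.
SERVICE_SECRET = b"kept on the servers, never shipped in the app"
token = sign_token(SERVICE_SECRET, {"app": "com.example.stackexchange", "exp": 2000})

valid_now = verify_token(SERVICE_SECRET, token, now=1000)
valid_later = verify_token(SERVICE_SECRET, token, now=3000)
```

The design point is that the app only carries a token that expires quickly, so extracting it from the binary or the traffic gives an attacker far less than a long-lived API key would.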

Do You Want To Go The Extra Mile?

In any response to a security question I always like to reference the excellent work from the OWASP foundation.

For APIs

The OWASP API Security Project seeks to provide value to software developers and security assessors by underscoring the potential risks in insecure APIs, and illustrating how these risks may be mitigated. In order to facilitate this goal, the OWASP API Security Project will create and maintain a Top 10 API Security Risks document, as well as a documentation portal for best practices when creating or assessing APIs.

For Mobile Apps

OWASP Mobile Security Project - Top 10 risks

The OWASP Mobile Security Project is a centralized resource intended to give developers and security teams the resources they need to build and maintain secure mobile applications. Through the project, our goal is to classify mobile security risks and provide developmental controls to reduce their impact or likelihood of exploitation.

OWASP - Mobile Security Testing Guide:

The Mobile Security Testing Guide (MSTG) is a comprehensive manual for mobile app security development, testing and reverse engineering.
