I'm trying to get my head around how PKCE works in a mobile app and there's something I don't quite understand.

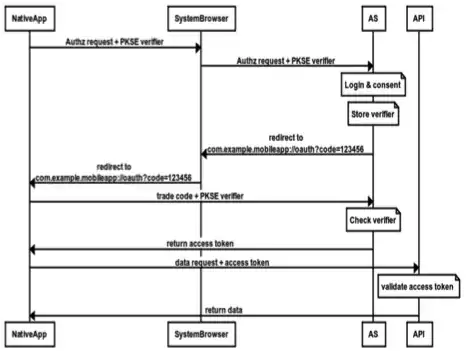

So from what I can gather, the client app creates a random, cryptographically secure string known as the code-verifier, which it then stores. From this, the app generates a code challenge. The code challenge is sent in the authorization request to the server, along with the method used to generate it (S256 or plain). The server stores this challenge alongside the authorization_code it issues for that request.
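For reference, the client-side step described above can be sketched in Python. This assumes the RFC 7636 rules: the verifier is 43 to 128 characters from the unreserved URL character set, and S256 means base64url(SHA-256(verifier)) with the `=` padding stripped. The function names are illustrative only.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """Random, high-entropy verifier (RFC 7636: 43-128 chars of [A-Za-z0-9-._~])."""
    # 32 random bytes -> 43 base64url characters (token_urlsafe strips the padding)
    return secrets.token_urlsafe(32)


def make_code_challenge(verifier: str, method: str = "S256") -> str:
    """Derive the code_challenge that goes into the authorization request."""
    if method == "plain":
        return verifier
    if method == "S256":
        digest = hashlib.sha256(verifier.encode("ascii")).digest()
        # base64url-encode the raw digest and strip the trailing '=' padding
        return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    raise ValueError(f"unsupported code_challenge_method: {method}")


verifier = make_code_verifier()           # kept on the device
challenge = make_code_challenge(verifier)  # sent as code_challenge, with code_challenge_method=S256
```

Only `challenge` and the method name leave the device at this point; the verifier itself is not sent until the token exchange.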

When the client then tries to exchange the code for an access token, it also sends the original code-verifier in the request. The server retrieves the challenge and method stored for that particular code, applies the same transformation (S256 or plain) to the code-verifier, and compares the result with the stored challenge. If they match, it returns an access token.
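The server-side check described above might look roughly like the following Python sketch. The in-memory `PENDING` store and the `issue_code` / `redeem_code` helpers are hypothetical; a real authorization server would persist this data and would also validate things like `redirect_uri` and code expiry, which are omitted here.

```python
import base64
import hashlib
import hmac
import secrets
from dataclasses import dataclass


@dataclass
class PendingCode:
    # What the hypothetical server remembers for each issued authorization code
    client_id: str
    code_challenge: str
    code_challenge_method: str


# Hypothetical in-memory store: authorization_code -> PendingCode
PENDING: dict[str, PendingCode] = {}


def _transform(verifier: str, method: str) -> str:
    """Apply the same transformation the client used to build the challenge."""
    if method == "plain":
        return verifier
    if method == "S256":
        digest = hashlib.sha256(verifier.encode("ascii")).digest()
        return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    raise ValueError(f"unsupported code_challenge_method: {method}")


def issue_code(client_id: str, code_challenge: str, method: str) -> str:
    """After the user authenticates: issue a code bound to the stored challenge."""
    code = secrets.token_urlsafe(16)
    PENDING[code] = PendingCode(client_id, code_challenge, method)
    return code


def redeem_code(client_id: str, code: str, code_verifier: str) -> bool:
    """Token endpoint check: True means an access token may be issued."""
    pending = PENDING.pop(code, None)  # codes are single-use, even on failure
    if pending is None or pending.client_id != client_id:
        return False
    expected = _transform(code_verifier, pending.code_challenge_method)
    # constant-time comparison of the recomputed challenge with the stored one
    return hmac.compare_digest(expected.encode("utf-8"),
                               pending.code_challenge.encode("utf-8"))
```

In this sketch the code is removed from the store before the verifier is checked, so a failed attempt also burns the code; that is a design choice here, not something the original post specifies.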

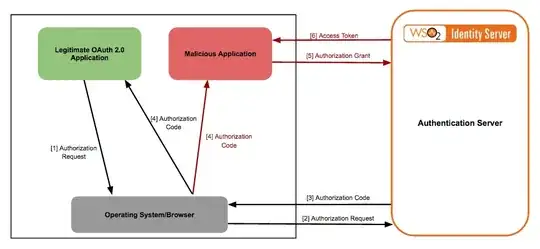

What I don't get is how this is supposed to replace a secret in a client app. Surely, if you wanted to spoof this, you would just take the client_id as normal and generate your own code-verifier and challenge, and you'd be in the same position as if PKCE weren't required in the first place. What is PKCE actually trying to solve here, if the original idea was that it is basically a 'dynamic secret'? My assumption is that it only helps if someone happens to be 'listening in' when the auth_code is returned, but if you're using SSL, is it needed at all? It's billed as the answer to the fact that you shouldn't store a secret in a public app, but since the client rather than a server generates it, it doesn't feel like it actually helps there.