This is an active area of research. I happen to have done some work in this area, so I'll share what I can about the basic idea (this work was with industry partners and I can't share the secret details :) ).

The tl;dr is that it's often possible to identify an encrypted traffic stream as carrying video, and it's often possible to estimate its resolution - but it's complicated, and not always accurate. There are a lot of people working on ways to do this more consistently and more accurately.

Video traffic has some specific characteristics that can distinguish it from other kinds of traffic. Here I refer specifically to video on demand - not live streaming video. Video on demand doesn't often have those priority tags mentioned in this answer. Also I refer specifically to adaptive video, meaning that the video is divided into segments (each about 2-10 seconds long), and each segment of video is encoded at multiple quality levels (quality level meaning: long-term video bitrate, codec, and resolution). As you play the video, the quality level at which the next segment is downloaded depends on what data rate the application thinks your network can support. (That's the DASH protocol referred to in this answer.)

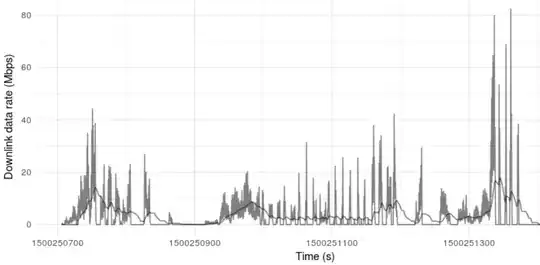

If your phone is playing a video, and you look at the (weighted moving average of) data rate of the traffic going to your phone over time, it might look something like this:

(this is captured from a YouTube session over Verizon. There's the moving average over 15 seconds and also short-term average.)
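
To make the "data rate over time" idea concrete, here is a minimal sketch of how such a curve could be computed from a packet trace. It assumes the trace is a time-sorted list of `(timestamp_seconds, size_bytes)` pairs, and it uses a plain trailing-window average rather than a weighted one. The function name and the input format are my own choices for illustration, not taken from any particular tool.

```python
from collections import deque


def windowed_rate(packets, window_s):
    """Trailing-window average data rate, sampled at each packet arrival.

    packets: iterable of (timestamp_seconds, size_bytes), sorted by time
             (an assumed input format).
    Returns a list of (timestamp_seconds, rate_bits_per_second).
    """
    window = deque()      # packets currently inside the trailing window
    total_bytes = 0       # sum of sizes of the packets in `window`
    rates = []
    for ts, size in packets:
        window.append((ts, size))
        total_bytes += size
        # Drop packets that have fallen out of the trailing window.
        while window and window[0][0] <= ts - window_s:
            _, old_size = window.popleft()
            total_bytes -= old_size
        rates.append((ts, total_bytes * 8 / window_s))
    return rates
```

Calling it twice on the same trace, for example `windowed_rate(packets, 15.0)` and `windowed_rate(packets, 1.0)`, would give a long-term curve and a short-term curve similar in spirit to the two in the plot.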

There are a few different parts to this session:

First, the video application (YouTube player) tries to fill the buffer up to the buffer capacity. During this time, it is pulling data at whatever rate the network can support. At this stage, it's basically indistinguishable from a large file download, unless you can infer that it's video traffic from the remote address (as mentioned in this answer).

Once the buffer is full, then you get "bursts" at sort-of-regular intervals. Suppose your buffer can hold 200 seconds of video. When the buffer has 200 seconds of video in it, the application stops downloading. Then after a segment of video has played back (say 5 seconds), there is room in the buffer again, so it'll download the next segment, then stop again. That's what causes this bursty pattern.
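
The fill-then-burst behavior can be reproduced with a toy simulation. This is only a sketch under strong assumptions: time advances in one-second ticks, the network can deliver one whole segment per tick, the video never ends, and the client requests a segment whenever it fits in the buffer. Real players are considerably more complicated, and the function name and parameters here are hypothetical.

```python
def simulate_player(ticks, buffer_cap_s=200, segment_s=5):
    """Toy model of a buffer-limited video client.

    Returns a string with one character per one-second tick:
    'D' if a segment was downloaded in that tick, '.' if the client was idle.
    Assumes (for illustration only) that a full segment downloads in one tick.
    """
    buffered_s = 0
    timeline = []
    for _ in range(ticks):
        # Download the next segment only if it fits in the buffer.
        if buffered_s + segment_s <= buffer_cap_s:
            buffered_s += segment_s
            timeline.append("D")
        else:
            timeline.append(".")
        # Playback drains one second of video per tick.
        if buffered_s > 0:
            buffered_s -= 1
    return "".join(timeline)


# Small buffer so the pattern shows up quickly.
print(simulate_player(16, buffer_cap_s=20, segment_s=5))  # DDDD.D....D....D
```

The run of `D`s at the start is the buffer-filling phase, and the evenly spaced `D`s afterwards are the bursts: one segment fetched each time a segment's worth of video has played back.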

This pattern is very characteristic of video - traffic from other applications doesn't have this pattern - so a network service provider can pretty easily pick out flows that carry video traffic. In some cases, you might not ever observe this pattern - for example, if the video is so short that the entire thing is loaded into the buffer at once and then the client stops downloading. Under those circumstances, it's very difficult to distinguish video traffic from a file download (unless you can figure it out by remote address).
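
As a rough illustration of how one might look for this pattern in a flow, the sketch below groups packets into bursts separated by idle gaps, then checks whether the bursts start at roughly regular intervals. The idle-gap threshold, the minimum burst count, and the regularity cutoff are all made-up values for illustration. I am not claiming that any service provider does it this way.

```python
from statistics import mean, pstdev


def find_bursts(packets, idle_gap_s=1.0):
    """Group time-sorted (timestamp_seconds, size_bytes) packets into bursts.

    A new burst starts whenever the gap since the previous packet exceeds
    idle_gap_s (an assumed threshold).
    Returns a list of (start_ts, end_ts, total_bytes).
    """
    bursts = []
    for ts, size in packets:
        if bursts and ts - bursts[-1][1] <= idle_gap_s:
            start, _, total = bursts[-1]
            bursts[-1] = (start, ts, total + size)
        else:
            bursts.append((ts, ts, size))
    return bursts


def looks_periodic(bursts, min_bursts=4, max_cv=0.5):
    """Heuristic: are burst start times spaced at sort-of-regular intervals?

    Uses the coefficient of variation (stddev / mean) of the intervals
    between burst starts. Both thresholds are hypothetical.
    """
    if len(bursts) < min_bursts:
        return False  # e.g. a short video loaded into the buffer all at once
    starts = [b[0] for b in bursts]
    gaps = [later - earlier for earlier, later in zip(starts, starts[1:])]
    return pstdev(gaps) / mean(gaps) <= max_cv
```

A flow with only one or two bursts returns `False` here, which matches the short-video case: there is simply not enough of the pattern to observe.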

Anyway, once you have identified the flow as carrying video traffic - either by the remote address (not always possible, since major video providers use content distribution networks that are not exclusive to video) or by its traffic pattern (possible if the video session is long, much more difficult if it is so short that the whole video is loaded into the buffer all at once)...

Now, as Hector said, you can try to guess the resolution from the bitrate by looking at the size (in bytes) of each "burst" of data:

> From the size per duration you could make a reasonable estimate of the resolution - especially if you keep a rolling average.
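
The naive version of that estimate might look like the sketch below. It assumes every burst is exactly one segment of a known, fixed duration, and it maps the rolling-average bitrate to a resolution using a bitrate ladder. The ladder values are invented purely for illustration and do not correspond to any real provider's encodings.

```python
from collections import deque

# (minimum bitrate in bits per second, label), highest first.
# These numbers are made up for illustration only.
HYPOTHETICAL_LADDER = [
    (4_000_000, "1080p"),
    (2_000_000, "720p"),
    (1_000_000, "480p"),
    (0, "360p or lower"),
]


def estimate_resolutions(burst_bytes, segment_s=5.0, window=3,
                         ladder=HYPOTHETICAL_LADDER):
    """Guess a resolution label after each burst from a rolling average.

    burst_bytes: sizes in bytes of successive bursts, each assumed to carry
                 exactly segment_s seconds of video (a strong assumption).
    window:      number of most recent bursts to average over.
    """
    recent = deque(maxlen=window)   # bitrates of the last `window` bursts
    labels = []
    for size in burst_bytes:
        recent.append(size * 8 / segment_s)
        avg_bps = sum(recent) / len(recent)
        # Pick the first (highest) ladder rung the average reaches.
        labels.append(next(label for floor, label in ladder if avg_bps >= floor))
    return labels


print(estimate_resolutions([3_125_000, 3_125_000, 625_000]))
# ['1080p', '1080p', '720p']
```

In this example the third burst on its own works out to 1 Mbit/s, but the rolling average still reports "720p" because it is dragged up by the two earlier bursts. Smoothing helps with noise, at the cost of reacting slowly to a real change.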

But, this can be difficult. Take the YouTube session in my example:

- Not all segments are the same duration - the duration of video requested at a time depends on several factors (the quality level, network status, what kind of device you are playing the video on, and others). So you can't necessarily look at a "burst" and say, "OK, this was X bytes representing 5 seconds of video, so I know the video data rate". Sometimes you can figure out the likely segment duration but other times it is tricky.

- For a given video quality level and segment duration, different segments will have different sizes (depending on things like how much motion takes place in that part of the video).

- Even for the same video resolution, the long-term data rate can vary - a 1080p video encoded with VP9 won't have the same long-term data rate as one encoded with H.264.

- The video quality level changes according to perceived network quality (which is visible to the network service provider) and buffer status (which is not). So you can look at long-term data rates over 30 seconds, but it's possible that the actual video quality level changed several times over that 30 seconds.

- During periods when the buffer is draining or filling as fast as possible (when you don't have those "bursts"), it's much harder to estimate what's going on in the video.

- To complicate things even further: sometimes a video flow will be "striped" across multiple lower-layer flows. Sometimes part of the video will be retrieved from one address, and then it will switch to retrieving the video from a different address.

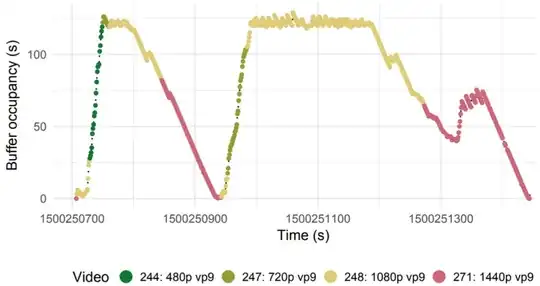

That graph of data rate I showed you just above? Here's what the video resolution was over that time interval:

Here, the color indicates the video resolution. So... you can sort of estimate what's going on just from the traffic patterns. But it's a difficult problem! There are other markers in the traffic that you can look at. I can't say definitively how any one service provider is doing it. But at least as far as the academic state-of-the-art goes, there isn't any way to do this with perfect accuracy, all of the time (unless you have the cooperation of the video providers...)

If you're interested in learning more about the techniques used for this kind of problem, there's a lot of academic literature out there - see for example "BUFFEST: Predicting Buffer Conditions and Real-time Requirements of HTTP(S) Adaptive Streaming Clients" as a starting point. (Not my paper - just one I happen to have read recently.)