There are two tactics for measuring and analyzing risk and uncertainty in cybersecurity.

The first is the set of foresight tools based on analytical techniques such as Multiple-Scenarios Generation, which relies on Quadrant Crunching. The better-known technique is the Cone of Plausibility. There may also be ways to incorporate time-series data, such as from SIEMs or log management/archival systems, along with forecasting or backcasting approaches.

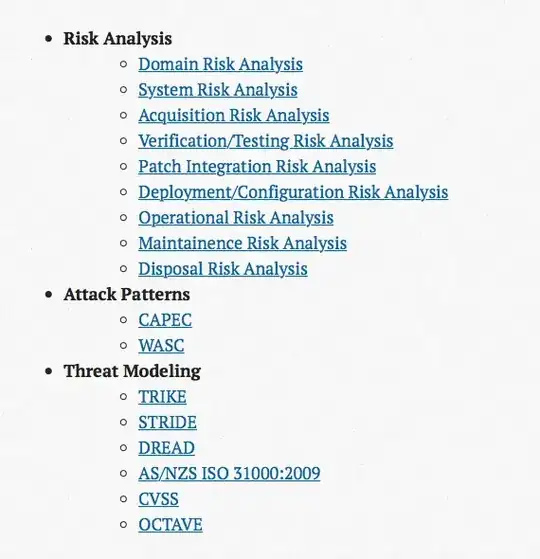
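To make the scenario-generation idea concrete, here is a minimal Python sketch of one common way Multiple-Scenarios Generation is run: pick a handful of key drivers, define the two opposite end states of each, pair the drivers, and treat every quadrant of each 2x2 matrix as a candidate scenario for the panel to discuss. The driver names and end states below are hypothetical placeholders, not taken from any real assessment.

```python
from itertools import combinations, product

# Hypothetical drivers, each with two opposite end states (illustrative only).
DRIVERS = {
    "adversary capability": ("commodity tooling", "custom tooling"),
    "attack surface": ("shrinking", "expanding"),
    "detection coverage": ("partial", "broad"),
}


def generate_scenarios(drivers):
    """Pair every two drivers and return the four quadrants of each 2x2 matrix."""
    scenarios = []
    for (name_a, ends_a), (name_b, ends_b) in combinations(drivers.items(), 2):
        for end_a, end_b in product(ends_a, ends_b):
            scenarios.append({name_a: end_a, name_b: end_b})
    return scenarios


scenarios = generate_scenarios(DRIVERS)
for scenario in scenarios:
    print(scenario)
```

Three drivers give three pairs and therefore twelve quadrants. The code only enumerates the combinations; deciding which quadrants are plausible and worth a full narrative is still the expert panel's job.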

If you use a foresight tool, you will need a panel of cybersecurity experts. This is not something you can buy off the shelf or outsource. The panelists will need to be domain experts for the business, and most will need to organize around an ontology such as UCO or VERIS, as well as understand the MITRE taxonomies (e.g., CAPEC, ATT&CK, CWE, CVE, MAEC, STIX, CybOX, CPE, CCE) in order to use them as a common language. Two models will also be useful as references and guides during this process: the Diamond Model of Intrusion Analysis (N.B., the Diamond Model is taught even in the CCNA Cyber Ops SECOPS 210-255 material from Cisco Systems) and the F3EAD (Find, Fix, Finish, Exploit, Analyze, Disseminate) process, which maps the work of security operations and incident response teams to the cross-functional needs of threat intelligence.

By using a time-series database (TSDB) such as RRD (Round Robin Database, an older yet still-relevant standard) or Graphite Whisper (more modern), you can perform data smoothing and forecasting operations. While I haven't seen these in cybersecurity products, the concepts can be put into practice with rrdtool (I am familiar with Ganglia), with Graphite visualization front ends (I am also familiar with Grafana), and with monitoring platforms (I am familiar with both Zabbix and Icinga 2, but obviously Nagios and others apply as well). As seen here -- https://blog.pkhamre.com/visualizing-logdata-with-logstash-statsd-and-graphite/ -- Graphite can take data from common log pushers and metrics aggregators such as Logstash and StatsD. However, this approach most likely only works when it targets an ongoing campaign from a single adversary under a non-dynamic attack paradigm (e.g., a DDoS that recurs every day/week/month), and it should account for outliers and other anomalies (I am familiar with Etsy Skyline and Twitter BreakoutDetection for this).
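As a rough illustration of what "smoothing and forecasting" means here, the following is a minimal pure-Python sketch of Holt's linear (double exponential) smoothing, a simpler relative of the Holt-Winters forecasting that rrdtool and Graphite offer. It does not call either tool; the function name, the `alpha`/`beta` values, and the daily event counts are all assumptions made for the example.

```python
def holt_forecast(series, alpha=0.5, beta=0.3, horizon=3):
    """Holt's linear smoothing: return (smoothed levels, forecast values)."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level = series[0]
    trend = series[1] - series[0]
    smoothed = [level]
    for value in series[1:]:
        prev_level = level
        # Blend the new observation with the previous one-step-ahead estimate.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Update the trend from the change in level.
        trend = beta * (level - prev_level) + (1 - beta) * trend
        smoothed.append(level)
    forecast = [level + step * trend for step in range(1, horizon + 1)]
    return smoothed, forecast


# Hypothetical daily counts of blocked requests during a recurring campaign.
daily_events = [120, 135, 128, 150, 162, 158, 175]
smoothed, forecast = holt_forecast(daily_events)
print("smoothed:", [round(x, 1) for x in smoothed])
print("next 3 days:", [round(x, 1) for x in forecast])
```

This sketch has no seasonal term and no outlier handling, so a weekly attack pattern or a one-off spike would need the fuller Holt-Winters model plus an anomaly-detection step in front of it.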

The second set of tools relies heavily on statistical techniques. At least three outcomes can be surveyed: 1) an LEF (Loss-Event Frequency) and LM (Loss Magnitude) are best calculated by canvassing a panel of domain-specific, internal-org cybersecurity experts for calibrated confidence intervals, which are then used to produce the probability of damage in dollar (or equivalent) amounts as an Exceedance-Probability (EP) curve against a series of scenarios; 2) another curve, based on the risk tolerance of the organization, can be compared against the EP curve to determine the effectiveness of controls, i.e., how effective a mitigation would be and how it fits with the lines of business; 3) Bayes' Rule can be used to formulate how a positive penetration test that produces one or more remotely exploitable vulnerabilities affects the probability of a major data breach. These outcomes are analyzed in the book How To Measure Anything in Cybersecurity Risk. For outcome 3, it is suggested that multiple methods of penetration testing be used in order to provide optimal coverage. There are many theories on how this should be done, but the best I've found is the work from -- http://www.sixdub.net -- and -- http://winterspite.com/security/phrasing/ -- although perhaps there is room for crowdsourced bug-hunting programs in addition to adversarial emulation and blue, red, and purple team activities. Again, by leveraging the Diamond Model (as sixdub describes) and the F3EAD process, better conclusions can be reached.
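A minimal Python sketch of outcomes 1 and 3 follows. It assumes each scenario is described by an annual probability of occurrence and a calibrated 90% confidence interval for the loss, modeled as a lognormal distribution (which is my understanding of the approach in the book), and it applies Bayes' Rule to a penetration-test result. Every number below is a made-up placeholder, not real loss data, and the function names are my own.

```python
import math
import random


def simulate_annual_losses(scenarios, trials=10_000, seed=42):
    """Monte Carlo: total simulated loss per year across all scenarios."""
    rng = random.Random(seed)
    totals = []
    for _ in range(trials):
        total = 0.0
        for prob, low, high in scenarios:
            if rng.random() < prob:  # did the loss event occur this year?
                # Lognormal parameters from a 90% CI (3.29 = 2 * 1.645).
                mu = (math.log(low) + math.log(high)) / 2
                sigma = (math.log(high) - math.log(low)) / 3.29
                total += rng.lognormvariate(mu, sigma)
        totals.append(total)
    return totals


def exceedance_probability(totals, threshold):
    """Fraction of simulated years whose loss exceeds the threshold."""
    return sum(1 for t in totals if t > threshold) / len(totals)


def bayes_posterior(prior, p_e_given_h, p_e_given_not_h):
    """P(H | E) from P(H), P(E | H), and P(E | not H)."""
    evidence = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / evidence


# Hypothetical scenarios: (annual probability, 90% CI low, 90% CI high) in dollars.
SCENARIOS = [(0.10, 50_000, 2_000_000), (0.05, 200_000, 10_000_000)]
totals = simulate_annual_losses(SCENARIOS)
for threshold in (0, 100_000, 1_000_000, 5_000_000):
    print(f"P(loss > ${threshold:,}) = {exceedance_probability(totals, threshold):.3f}")

# Hypothetical inputs: 1% prior chance of a major data breach; a positive pen
# test is assumed in 95% of would-be-breach cases and 30% of the others.
print("P(breach | positive test) =", round(bayes_posterior(0.01, 0.95, 0.30), 4))
```

Plotting `exceedance_probability` across a range of thresholds gives the EP curve. Outcome 2 would then overlay the organization's risk-tolerance curve on the same axes and rerun the simulation with the post-mitigation probabilities and intervals.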