Imagine I want to upload my sensitive personal information (photos, document scans, a list of passwords, email backups, credit card information, etc.) to Google Drive (or any other cloud service).

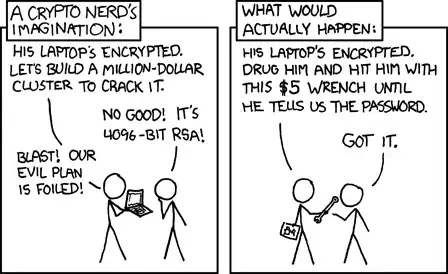

I want to make sure this data is as safe as possible: against attackers who somehow get their hands on it, against Google and its employees, and also in the future. That is, if I delete this data from Google, I want to be sure they won't be able to open it even if they keep a backup forever.

So instead of uploading all this data straight to the cloud, I will put everything I want to upload into one folder, then compress the entire folder with 7-Zip and password-protect the archive.

I will do this not once but several times: once the password-protected 7-Zip archive is ready, I will compress it again with 7-Zip using a completely different password. I will do this five times in total, so in the end my data is wrapped in five archives, each protected by a different, unrelated password. To get to my data I have to extract five times and provide five different passwords.

I will then take this five-times-password-protected archive and compress it once more with 7-Zip, using yet another (sixth) password, but this time I will also split the archive into smaller chunks.

Let's say I end up with 10 split archives, each of them 200 MB except the 10th, which is only 5 MB.
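
To make the split concrete, here is a minimal sketch of the arithmetic. The 1805 MB total is not a measured figure; it is just what nine 200 MB volumes plus one 5 MB volume add up to, and `split_sizes` is a hypothetical helper, not part of 7-Zip.

```python
def split_sizes(total_mb: int, volume_mb: int) -> list[int]:
    """Return the size of each volume when total_mb is cut into volume_mb pieces."""
    full_volumes, remainder = divmod(total_mb, volume_mb)
    sizes = [volume_mb] * full_volumes
    if remainder:
        # The last volume only holds whatever is left over.
        sizes.append(remainder)
    return sizes


# Assumed total: 9 * 200 MB + 5 MB = 1805 MB.
sizes = split_sizes(1805, 200)
print(len(sizes), sizes[-1])
```

So the volume I plan to keep separately holds well under 1% of the final archive.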

Assume all six passwords are at least 32 characters long, completely unrelated to each other, and contain lowercase and uppercase letters, numbers, and symbols.
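
To put a rough number on that assumption, here is a small sketch of the entropy of one such password. It assumes every character is picked uniformly at random from the 94 printable ASCII characters (excluding space); that is my assumption, and a password chosen by a human would carry less entropy. `password_entropy_bits` is a hypothetical helper name.

```python
import math


def password_entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy in bits of a password drawn uniformly at random from an alphabet."""
    return length * math.log2(alphabet_size)


# Assumed alphabet: 26 lowercase + 26 uppercase + 10 digits + 32 ASCII symbols.
ALPHABET_SIZE = 26 + 26 + 10 + 32

bits = password_entropy_bits(32, ALPHABET_SIZE)
print(f"{bits:.1f} bits per password")
```

Under these assumptions each password comes out at roughly 210 bits.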

Now I take the nine 200 MB archives, put them in one container, encrypt the container with VeraCrypt (using the three-level cascade encryption), and upload this container to my Google Drive.

I keep the 10th archive (the 5 MB one), also encrypted with VeraCrypt, on a completely different service, say Dropbox, using an account that is in no way connected or linked to my Google account.

- Have I created security theater, or have I really made it impossible for anyone to access and extract my data? After all, they have to get past one layer of VeraCrypt encryption, and even after that the archives are password-protected six times and one of them (the tenth) is stored somewhere else!

- If someone gets access to my Google Drive and downloads all nine archives, is there any way for them to extract the data without the tenth (5 MB) archive? Can the data be accessed at all with one of the split archives missing?

- Even if someone gets their hands on all 10 archives and somehow manages to bypass the VeraCrypt encryption, would it still be feasible to break the six remaining passwords?