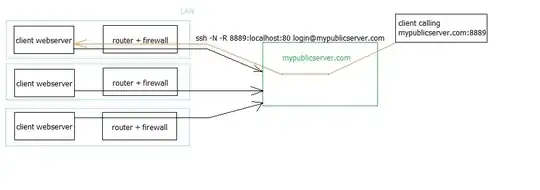

I'm developing a system that allows access to client devices behind a firewall, using port tunneling via SSH. Every client has a dedicated Linux-based server, with its port 80 tunneled to a public server. That way clients can have a dynamic IP address and a firewall enabled, and no port forwarding on the router is needed.

Every client has its own port, e.g.

- Client A -> port 8888

- Client B -> port 8889

- Client C -> port 8890

They can connect from anywhere to their own webserver simply by calling http://mypublicserver.com:[their_assigned_port_no]
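To make the setup concrete, here is a rough Python sketch of the mapping and the reverse tunnel each client's server would open. The `tunnel` account name and the `ASSIGNED_PORTS` table are placeholders for illustration, not my real configuration, and the public server would also need `GatewayPorts` enabled in `sshd_config` for the forwarded port to be reachable from outside.

```python
# Hypothetical mapping of clients to their assigned public ports.
ASSIGNED_PORTS = {"client_a": 8888, "client_b": 8889, "client_c": 8890}

PUBLIC_HOST = "mypublicserver.com"
TUNNEL_USER = "tunnel"  # assumed account name on the public server


def reverse_tunnel_command(client: str) -> list:
    """Build the ssh command a client's server would run to expose its port 80."""
    port = ASSIGNED_PORTS[client]
    return [
        "ssh",
        "-N",                          # no remote command, forwarding only
        "-R", f"{port}:localhost:80",  # public port -> client's local port 80
        f"{TUNNEL_USER}@{PUBLIC_HOST}",
    ]


def public_url(client: str) -> str:
    """URL the client opens from anywhere to reach their own webserver."""
    return f"http://{PUBLIC_HOST}:{ASSIGNED_PORTS[client]}"
```

For example, `reverse_tunnel_command("client_a")` gives the arguments for `ssh -N -R 8888:localhost:80 tunnel@mypublicserver.com`.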

I can see some security issues, though.

- An attacker can simply scan the ports of http://mypublicserver.com (about 65k of them) and find out how many clients are currently connected

- Having that, they can attack each client's login screen, DoS the machine, try to access phpMyAdmin, brute-force credentials, etc.

There is also a limitation, as I cannot assign more than ~60k clients. That isn't a problem at the moment, as I don't expect to have more than 10k of them. Still, guessing a port number is not hard at all. It is also prone to typos: somebody might try to log in with his own credentials to somebody else's machine because he typed 8989 instead of 8898.

As a defence, I thought of sending an additional string value (a hash or something similar) with the login page request. If it is missing or incorrect, return 404. That way you can't reach the login page unless you know the string value. The downside is that the page becomes almost impossible to reach without having it bookmarked: a short string would be easier to remember, but also easier to crack.
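A minimal sketch of that check, in Python just for illustration (my real login page may not look like this, and the function names are made up):

```python
import hmac
import secrets


def new_access_token() -> str:
    """Generate a long random URL-safe token (32 random bytes)."""
    return secrets.token_urlsafe(32)


def login_page_status(expected_token: str, supplied_token) -> int:
    """Return the HTTP status for a login page request.

    404 when the token is missing or wrong, so the page looks nonexistent.
    """
    if not supplied_token:
        return 404
    # Constant-time comparison avoids leaking how many characters matched.
    if hmac.compare_digest(expected_token.encode(), supplied_token.encode()):
        return 200
    return 404
```

A token this long is far too large to guess by scanning, but it is exactly the kind of string nobody will remember, which is the trade-off described above.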

Another way (and this is currently my favourite one) is to log in via the web interface of http://mypublicserver.com. You log in with a separate set of credentials, which redirects you to http://mypublicserver.com with an authentication key (stored in mypublicserver's database) sent as POST data. After a successful login the key is invalidated, and a new one is generated and sent to mypublicserver from the client's webserver.
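Roughly, the key handling I have in mind looks like the sketch below. It is simplified: a single in-memory store stands in for mypublicserver's database, and the rotation happens in one place instead of being sent over from the client's webserver. The class and method names are hypothetical.

```python
import hmac
import secrets


class OneTimeKeyStore:
    """In-memory stand-in for the key table in mypublicserver's database."""

    def __init__(self):
        self._keys = {}  # client id -> current authentication key

    def rotate(self, client_id: str) -> str:
        """Generate a fresh key for the client, replacing any previous one."""
        key = secrets.token_urlsafe(32)
        self._keys[client_id] = key
        return key

    def consume(self, client_id: str, supplied_key: str) -> bool:
        """Accept a key at most once; a valid key is invalidated and replaced."""
        expected = self._keys.get(client_id)
        if expected is None:
            return False
        if not hmac.compare_digest(expected.encode(), supplied_key.encode()):
            return False
        self.rotate(client_id)  # the old key can no longer be replayed
        return True
```

The point of the rotation is that a key captured from one redirect is useless for a second login.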

But this also means users need to remember two sets of login and password, probably leading to confusion ("Why do I need to log in twice?!")

I have already:

- changed default location of phpmyadmin

- introduced an account lock after more than 10 failed login attempts
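The lockout logic is essentially the following (a simplified Python sketch with made-up names; it keeps counters in memory and has no unlock timer):

```python
MAX_FAILED_ATTEMPTS = 10  # the account locks once this count is exceeded


class LoginGuard:
    """Track failed logins per account and report when an account is locked."""

    def __init__(self):
        self._failed = {}  # account name -> consecutive failed attempts

    def is_locked(self, account: str) -> bool:
        return self._failed.get(account, 0) > MAX_FAILED_ATTEMPTS

    def record_failure(self, account: str) -> None:
        self._failed[account] = self._failed.get(account, 0) + 1

    def record_success(self, account: str) -> None:
        # A successful login resets the counter for that account.
        self._failed.pop(account, None)
```

Note that this locks per account, so an attacker who knows a username can also use it to lock the real user out.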

What do you think of that? Did I miss something important? How can I secure that system?