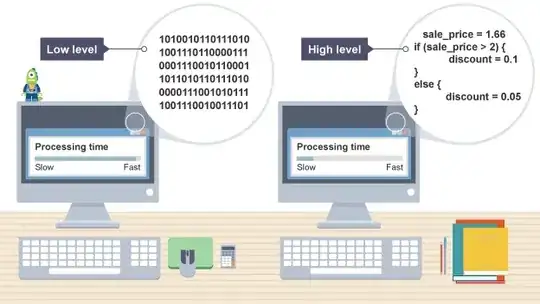

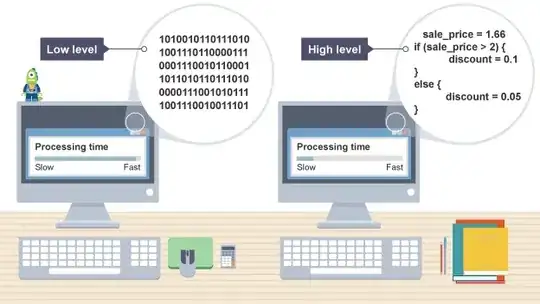

Do high-level programming languages have more vulnerabilities or security risks than low-level programming languages and if so, why?

Image source: http://a.files.bbci.co.uk/bam/live/content/znmb87h/large

It is possible to be insecure in any language, any time, and only developer attentiveness/awareness can fix this. SQL injection is still a thing. Higher-level languages generally have eval, and if you're dumb enough to eval user input you get what you get.
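To make the two examples in the paragraph above concrete, here is a minimal sketch in Python (chosen only as a representative high-level language), using the standard-library `sqlite3` and `ast` modules. The `users` table, its rows, and the helper function names are hypothetical and exist only for illustration. It shows that the language does not stop you from building SQL out of user input or from calling `eval`, and that the fix is a developer choice: bound query parameters and `ast.literal_eval`.

```python
import ast
import sqlite3

# Hypothetical in-memory table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice-secret"), ("bob", "bob-secret")],
)

def find_user_unsafe(name):
    # BAD: user input is pasted straight into the SQL text.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(name):
    # GOOD: the driver binds the value as data, never as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()

def parse_unsafe(text):
    # BAD: eval runs arbitrary Python code supplied by the user.
    return eval(text)

def parse_safe(text):
    # GOOD: literal_eval only accepts literals (numbers, strings, lists, ...)
    # and raises ValueError for anything else, such as function calls.
    return ast.literal_eval(text)

attack = "nobody' OR '1'='1"
print(find_user_unsafe(attack))  # the injected OR clause matches every row
print(find_user_safe(attack))    # no user is literally named that
```

The injected string turns the `WHERE` clause into `name = 'nobody' OR '1'='1'`, which is true for every row; with the placeholder, the whole string is compared as a single value.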

That being said, it's the other way around. Having garbage collection and bounds-checked arrays avoids entire classes of memory-management security risks, such as buffer overflows and use-after-free bugs. Higher-level languages also tend to be more concise, and less code means fewer places for bugs to hide. Anything that's tedious (and coding in lower-level languages certainly is that) is error-prone.
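As a small illustration of the buffer-overflow point: in C, writing `buf[8]` into an 8-byte array is undefined behavior and may silently overwrite adjacent memory. The sketch below (Python, with a hypothetical `safe_write` helper) shows the runtime's bounds check rejecting the same off-by-one write. Note that this protection comes from bounds checking rather than from garbage collection itself; garbage collection is what rules out use-after-free and double-free bugs.

```python
def safe_write(buffer, index, value):
    """Try to write value at index; report whether the runtime allowed it."""
    try:
        buffer[index] = value  # every index is checked against len(buffer)
        return True
    except IndexError:
        return False

buf = bytearray(8)               # an 8-byte "buffer"
print(safe_write(buf, 7, 0x41))  # last valid slot: allowed
print(safe_write(buf, 8, 0x41))  # one past the end: rejected, nothing overwritten
```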

Because lower-level languages lack the same expressiveness, one has to write a great deal of boilerplate code, not just at the beginning but throughout the codebase. Since it's not always easy to tell at a glance what that code is supposed to be doing (in business-logic terms), it is less self-documenting and needs more documentation in the form of comments or external documents. That introduces another source of error: if the documentation is not updated when the code it describes changes, you can write other code that is insecure because it relies on the obsolete assumptions in that documentation.

Lower-level languages like C have weak type systems (implicit conversions, unchecked casts, raw pointers), so there are fewer errors a compiler can catch. There have been a few interesting attempts like Nim and Rust to address this, but neither is super-popular yet.

Last, but certainly not least, the real difference between high-level and low-level languages is that high-level languages shift the burden from the programmer to the interpreter/compiler. Those interpreters/compilers are written and maintained by some of the brightest lights in the industry, are routinely audited for vulnerabilities, etc. Application code, on the other hand, is written by mortals. So shifting the burden from lots of application code written by average programmers to comparatively little code written by exceptional programmers should improve security: there's less code to audit, and it's written by more capable people. So which would you rather trust, the JVM or Joe Blow's hand-rolled buffer management in C?

All of this rather raises the question of why people still use low-level languages if they are harder to use and less safe. There are a number of reasons:

1) The golden hammer. 'We have a bunch of C programmers, and oddly enough all of our code is written in C'.

2) Performance. This is usually a red herring; it's almost never worth giving up safety and development speed for it, but in cases like games, where you need to eke every bit of performance out of the hardware, it can make sense.

3) Obscure platform. Along the same lines, it's a whole lot easier to write a C compiler than a Rust compiler or a JVM. If you have to implement the compiler first, starting with C looks a lot more appealing.

According to my extensive search on Google, the very first result gave me this answer.

In short: meh.

The study is from Veracode, and they observe that it's mostly a wash. They base it on vulnerability density (flaws relative to the amount of code), which might be a good way to measure it; I don't know. What I do know is that this produces a huge skew, because C/C++ will take 10x more lines of code to write a given application than some other languages.
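A quick worked example of that skew, as a sketch only: it assumes density is measured as flaws per 1,000 lines of code (Veracode's exact metric may differ), and the flaw and line counts are made-up numbers, not data from the study. The same application with the same 20 flaws looks ten times "safer" in C simply because it took ten times as many lines to write.

```python
def flaws_per_kloc(flaw_count, lines_of_code):
    """Vulnerability density: flaws per 1,000 lines of code (assumed metric)."""
    return flaw_count / (lines_of_code / 1000)

# Hypothetical numbers: one application, 20 flaws either way,
# written in C (10x the lines) and in a terser high-level language.
c_density = flaws_per_kloc(20, 100_000)     # 0.2 flaws per KLOC
terse_density = flaws_per_kloc(20, 10_000)  # 2.0 flaws per KLOC

print(terse_density / c_density)  # the identical flaw count differs 10x in density
```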

Also, what we see is probably not a true measure of how vulnerable a language is, but of how likely a vulnerability is to be found, which depends on the popularity or availability of the given application(s). The lowest purported number of vulnerabilities was found in C/C++, followed by iOS (presumably Objective-C?), then JavaScript. But once you take the density aspect into account, you might be looking at skewed numbers.

Here's the thing: vulnerabilities are just bugs. Languages do not write bugs; developers do, so it's on the developer to produce bug-free code. As such, the harder it is for the developer to produce bug-free code, the more likely there will be a vulnerability of some sort. So low-level languages are more likely to have vulnerabilities, regardless of whether they actually do.