There are many scientific publications that deal with cache attacks. Most recently, the CacheBleed attack was published, which exploits cache-bank conflicts on the Intel Sandy Bridge architecture. Most cache-timing attacks use a similar approach:

- The attacker fills the cache with some random data he controls.

- The attacker waits while his victim is doing some computation (e.g. encryption).

- The attacker continues execution and measures the time it takes to reload, set by set, the data he wrote to the cache in step 1. If the victim has accessed some cache sets, it will have evicted some of the attacker's lines, which the attacker observes as increased memory access latency for those lines.

By doing so, the attacker can find out which cache sets, or even which cache lines, were accessed by the victim.
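To make the three steps concrete, here is a minimal simulation sketch of how I understand them. It is not a real attack: it models a toy set-associative cache with LRU replacement, and a "slow load" is simply a simulated miss rather than a measured latency. The geometry (`LINE_SIZE`, `NUM_SETS`, `WAYS`), the class `ToyCache`, and the helper names are all my own assumptions for illustration.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed)
NUM_SETS = 8    # toy value; real caches have far more sets
WAYS = 4        # associativity (assumed)


class ToyCache:
    """Set-associative cache with LRU replacement. Tracks line numbers only, never data."""

    def __init__(self):
        self.sets = [OrderedDict() for _ in range(NUM_SETS)]

    def access(self, addr):
        """Touch addr; return True on a hit, False on a miss (a 'slow' load)."""
        line = addr // LINE_SIZE
        lines = self.sets[line % NUM_SETS]
        hit = line in lines
        if hit:
            lines.move_to_end(line)        # mark as most recently used
        else:
            if len(lines) >= WAYS:
                lines.popitem(last=False)  # evict the least recently used line
            lines[line] = True
        return hit


def attacker_addrs(set_index):
    """Hypothetical attacker buffer: WAYS addresses that all map to set_index."""
    base = 0x100000  # line-aligned and maps to set 0 in this toy geometry
    return [base + (way * NUM_SETS + set_index) * LINE_SIZE for way in range(WAYS)]


def prime_and_probe(victim_accesses):
    """Return the indices of the cache sets in which the attacker sees misses."""
    cache = ToyCache()
    for s in range(NUM_SETS):        # step 1: fill every set with attacker lines
        for addr in attacker_addrs(s):
            cache.access(addr)
    for addr in victim_accesses:     # step 2: the victim computes
        cache.access(addr)
    touched = []
    for s in range(NUM_SETS):        # step 3: reload and look for slow loads
        misses = sum(not cache.access(addr) for addr in attacker_addrs(s))
        if misses:
            touched.append(s)
    return touched


# Two victim accesses that fall into sets 3 and 5 of the toy cache.
print(prime_and_probe([0x2000 + 3 * LINE_SIZE, 0x2000 + 5 * LINE_SIZE]))
```

Note that `ToyCache` never stores any contents: the attacker only ever recovers set indices, which is exactly the gap my question is about.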

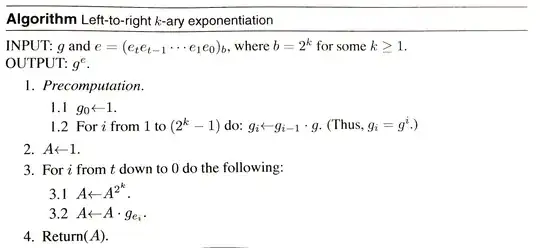

Most papers I've read automatically conclude that the attacker can then deduce the data (e.g. a private key) that was written to these cache locations. This is what I don't understand:

If I know that victim V accessed a certain cache position, how do I get his data? Many memory addresses map to the same cache position, and even if I had the full memory address, I doubt that the attacker could perform a DMA to read it.
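To illustrate what I mean by many addresses sharing one cache position, here is a small sketch. It assumes a simple cache indexed directly by address bits, with 64-byte lines and 64 sets (for example a 32 KiB, 8-way cache); hashed or sliced indexing, as used in some last-level caches, is ignored. The function names are hypothetical.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)
NUM_SETS = 64   # e.g. 32 KiB / (64 B * 8 ways); assumed geometry


def cache_set(addr):
    """Set index of addr for a cache indexed directly by address bits."""
    return (addr // LINE_SIZE) % NUM_SETS


def aliases(addr, count):
    """The next `count` addresses above addr that map to the same set."""
    stride = LINE_SIZE * NUM_SETS  # distance between addresses sharing a set
    return [addr + k * stride for k in range(1, count + 1)]


addr = 0x1040
print(cache_set(addr))                           # set index of addr
print([hex(a) for a in aliases(addr, 3)])        # other addresses in that set
print({cache_set(a) for a in aliases(addr, 3)})  # all map to the same index
```

So observing a set index narrows the address down only to one of these aliases, and says nothing about the bytes stored there.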

As a reference, you can take the recently published CacheBleed attack.