People have given great answers here that directly answer your question, but I'd like to give a complementary answer that explains in more depth why GPUs are so powerful for this and other applications.

As some have pointed out, GPUs are specially designed to be fast at mathematical operations, since drawing things onto your screen is all math (plotting vertex positions, matrix manipulations, mixing RGB values, reading texture space, etc.). However, this isn't really the main driving force behind the performance gain. The main driving force is parallelism. A high-end CPU might have 12 logical cores, whereas a high-end GPU would be packing something like 3072.

To keep it simple, the number of logical cores equals the total number of concurrent operations that can take place against a given dataset. Say, for example, I want to compare or sum the values of two arrays. Let's say the length of each array is 3072. On the CPU, I could create a new empty array of the same length, then spawn 12 threads that each iterate across the two input arrays at a step equal to the number of threads (12), concurrently writing the sum of each pair of values into the third, output array. This would take 256 iterations per thread (3072 / 12).
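As a rough illustration of the CPU approach just described, here is a minimal C++ sketch. The function name `strided_sum` and its parameters are made up for this example, and it assumes both inputs have the same length. Each worker thread `t` handles indices `t`, `t + num_threads`, `t + 2 * num_threads`, and so on.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical helper: element-wise sum of two equal-length arrays,
// split across num_threads worker threads using a strided access pattern.
std::vector<int> strided_sum(const std::vector<int>& a,
                             const std::vector<int>& b,
                             std::size_t num_threads) {
    assert(a.size() == b.size());  // assumption: inputs have equal length
    assert(num_threads > 0);

    const std::size_t n = a.size();
    std::vector<int> out(n);  // the third, output array

    std::vector<std::thread> workers;
    workers.reserve(num_threads);
    for (std::size_t t = 0; t < num_threads; ++t) {
        // Thread t starts at index t and steps by the thread count, so no
        // two threads ever write the same element and no locking is needed.
        workers.emplace_back([&a, &b, &out, n, t, num_threads] {
            for (std::size_t i = t; i < n; i += num_threads) {
                out[i] = a[i] + b[i];
            }
        });
    }

    // Wait for every worker to finish before handing back the result.
    for (auto& w : workers) {
        w.join();
    }
    return out;
}
```

Calling `strided_sum(a, b, 12)` on two 3072-element vectors gives each thread 3072 / 12 = 256 loop iterations. In real CPU code you would more likely give each thread a contiguous chunk, which tends to be kinder to the caches, but the stride version matches the description above.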

With the GPU, however, I could upload those same values from the CPU to the GPU, then write a kernel and spawn 3072 threads against it at the same time, completing the entire operation in a single iteration.

This is handy for working against any data that can, by its nature, support being "worked on" in a parallelizable fashion. What I'm trying to say is that this isn't limited to hacking/evil tools. This is why GPGPU is becoming more and more popular. Things like OpenCL, OpenMP and such have come about because people have realized that we programmers are bogging down our poor little CPUs with work when there is a massive power plant sitting in the PC, barely being used by contrast. It's not just for cracking software. For example, I once wrote an elaborate CUDA program that took the lotto history for the last 30 years and calculated prize/win probabilities for tickets with various combinations of all possible numbers and varying numbers of plays per ticket, because I thought that was a better idea than using these great skills to just get a job (this is for laughs, but sadly is also true).

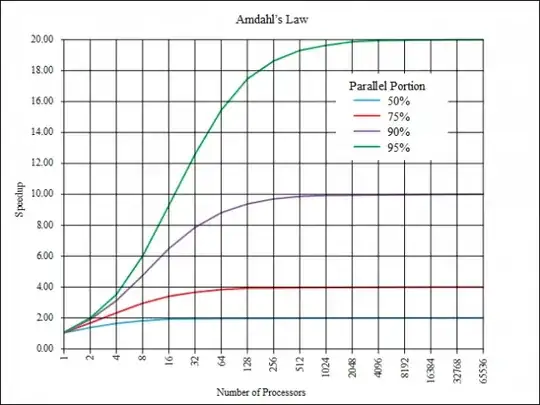

Although I don't necessarily endorse the people giving the presentation, this presentation gives a very simple but rather accurate illustration of why the GPU is so great for anything that can be parallelized, especially without any form of locking (which holds up other threads, greatly diminishing the positive effects of parallelism).