Suppose I have a very large encrypted .csv file (too large to fit in memory). Is there a way to decrypt N bytes of the ciphertext at a time? My goal is to process each row of the .csv in memory without ever writing to disk.


The question would be better asked on SE.Crypto. Besides that, the fact that you write to memory does not imply that you will never be writing to disk. Paging happens. – WoJ Feb 05 '16 at 19:44

2 Answers

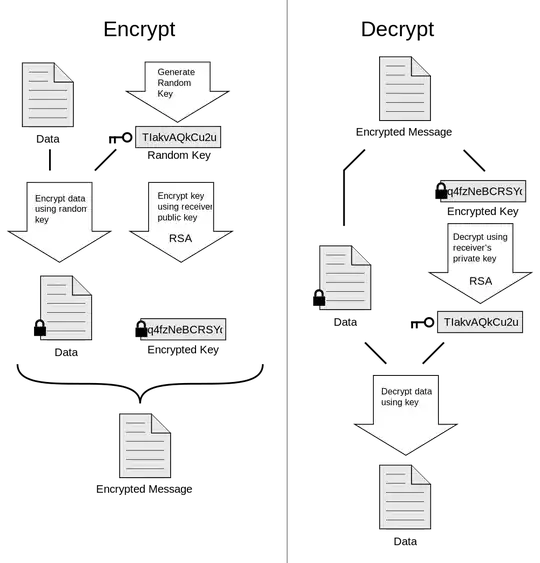

PGP uses both asymmetric and symmetric encryption.

The data is encrypted with a random key, using one of the following symmetric algorithms.

As per RFC 4880:

- IDEA

- TripleDES

- CAST5 (128 bit key, as per [RFC2144])

- Blowfish (128 bit key, 16 rounds)

- AES with 128-bit key

- AES with 192-bit key

- AES with 256-bit key

- Twofish with 256-bit key

And in RFC 5581:

- Camellia 128-bit key

- Camellia 192-bit key

- Camellia 256-bit key

This key, called the "session key", is then encrypted with the recipient's public key.

Wikipedia: PGP

According to RFC 4880, Section 13.9, OpenPGP uses a variation of CFB mode with these ciphers.

CFB decryption works like this: you start with the IV, the key, and the first ciphertext block. Each plaintext block is obtained by encrypting the previous ciphertext block (the IV, for the first block) with the key and XORing the result with the current ciphertext block, so the plaintext is recovered block by block as you move forward.
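In symbols, with $E_K$ the block cipher's encryption function under key $K$, $C_i$ the $i$-th ciphertext block and $P_i$ the $i$-th plaintext block, standard CFB decryption is as follows (OpenPGP's variant differs in details such as a random prefix at the start):

$$
C_0 = \mathrm{IV}, \qquad P_i = E_K(C_{i-1}) \oplus C_i \quad \text{for } i \ge 1
$$

Recovering $P_i$ needs only $K$, $C_{i-1}$ and $C_i$, so a decryptor has to keep just one previous ciphertext block in memory, no matter how long the message is.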

So in theory it is possible to decrypt the message piece by piece, but I haven't seen a tool that does this out of the box, probably due to a lack of use cases. One could, however, develop such a tool.
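A minimal sketch of the core of such a tool, assuming plain CFB mode with full 16-byte feedback: `cfb_decrypt_stream` accepts ciphertext in chunks of any size and yields plaintext as soon as whole blocks are available, carrying only the last ciphertext block and a partial-block buffer between chunks. `toy_block_encrypt` is a hypothetical, insecure stand-in for a real cipher such as AES (CFB only ever uses the encryption direction), and `cfb_encrypt` exists only to produce test data. This will not read real `.gpg` files: OpenPGP's CFB variant (RFC 4880, Section 13.9) adds a random prefix, and messages are also wrapped in packets and often compressed.

```python
import hashlib
from typing import Iterable, Iterator

BLOCK_SIZE = 16  # assumed block size in bytes (AES uses 16)


def toy_block_encrypt(key: bytes, block: bytes) -> bytes:
    """HYPOTHETICAL stand-in for a real block cipher's encrypt function.

    A keyed hash truncated to one block is enough to illustrate CFB,
    which never needs the decrypt direction. NOT secure.
    """
    return hashlib.sha256(key + block).digest()[:BLOCK_SIZE]


def cfb_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    """Plain CFB encryption, used here only to generate test data."""
    prev = iv
    out = bytearray()
    for i in range(0, len(plaintext), BLOCK_SIZE):
        p = plaintext[i:i + BLOCK_SIZE]
        keystream = toy_block_encrypt(key, prev)
        c = bytes(a ^ b for a, b in zip(p, keystream))
        out += c
        prev = c  # next block's feedback is this ciphertext block
    return bytes(out)


def cfb_decrypt_stream(key: bytes, iv: bytes,
                       chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Decrypt plain CFB ciphertext arriving in arbitrary-size chunks.

    Only the previous ciphertext block and fewer than BLOCK_SIZE
    leftover bytes are kept between chunks, so the input can be far
    larger than available memory.
    """
    prev = iv  # C_{i-1}; starts as the IV
    buf = b""  # ciphertext bytes that do not yet form a full block
    for chunk in chunks:
        buf += chunk
        n_full = len(buf) // BLOCK_SIZE * BLOCK_SIZE
        out = bytearray()
        for i in range(0, n_full, BLOCK_SIZE):
            c = buf[i:i + BLOCK_SIZE]
            keystream = toy_block_encrypt(key, prev)  # E_K(C_{i-1})
            out += bytes(a ^ b for a, b in zip(c, keystream))
            prev = c
        buf = buf[n_full:]
        if out:
            yield bytes(out)
    if buf:
        # Final partial block: XOR with a truncated keystream block.
        keystream = toy_block_encrypt(key, prev)
        yield bytes(a ^ b for a, b in zip(buf, keystream))
```

To feed it from a file without loading the whole thing, read fixed-size chunks, e.g. `cfb_decrypt_stream(key, iv, iter(lambda: f.read(65536), b""))` on a file opened in `"rb"` mode.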


Most GPG versions, including gpg 1.4.18 for Debian, and gpg4win, also support Camellia, a major Japanese/EU standard symmetric algorithm. – Anti-weakpasswords Feb 06 '16 at 17:01

> My goal is to process each row of the .csv in memory without ever writing to disk.

Yes, it is possible. On Linux, if you pipe the output of gpg into your program, gpg only writes to the pipe as much as your program consumes, plus a small extra amount to fill the pipe buffer. If your program is busy processing the CSV and isn't reading from the pipe, gpg's decryption and writing to the pipe are suspended until you read from it again. This isn't unique to gpg, though; it is simply how Unix/Linux pipes work.

This way, as long as your CSV parser can handle incremental parsing (CSV is easy to parse incrementally), you should be able to easily handle files much larger than the memory you have.
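A minimal Python sketch of that approach: `decrypted_rows` starts `gpg --decrypt` (which writes the plaintext to standard output by default) and hands its stdout to `csv.reader`, which pulls one line at a time, so each row can be processed and discarded before more is decrypted. It assumes gpg can obtain the private key or passphrase on its own (e.g. through gpg-agent) and that the CSV is UTF-8; the function names and the `cmd` parameter are illustrative, not part of any tool.

```python
import csv
import io
import subprocess
from typing import Iterator, List, Sequence, TextIO


def iter_csv_rows(stream: TextIO) -> Iterator[List[str]]:
    """Yield CSV rows one at a time; csv.reader reads lines lazily."""
    yield from csv.reader(stream)


def decrypted_rows(path: str,
                   cmd: Sequence[str] = ("gpg", "--decrypt")) -> Iterator[List[str]]:
    """Decrypt `path` with gpg and parse its output incrementally.

    gpg blocks on write once the pipe buffer is full, so only a small
    window of plaintext sits in memory while each row is processed.
    """
    proc = subprocess.Popen([*cmd, path], stdout=subprocess.PIPE)
    assert proc.stdout is not None
    # newline="" is what the csv module expects for its input streams.
    text = io.TextIOWrapper(proc.stdout, encoding="utf-8", newline="")
    try:
        yield from iter_csv_rows(text)
    finally:
        # Closing the pipe early makes gpg exit instead of blocking forever.
        text.close()
        proc.wait()
    if proc.returncode != 0:
        raise RuntimeError(f"{cmd[0]} exited with status {proc.returncode}")
```

The shell-only equivalent is `gpg --decrypt data.csv.gpg | python process.py`, with `process.py` looping over `iter_csv_rows(sys.stdin)`; both filenames are placeholders.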

PGP isn't designed for random access though; so if your parsing requires random access to the file, then PGP probably isn't the appropriate way to do this.
