16

2

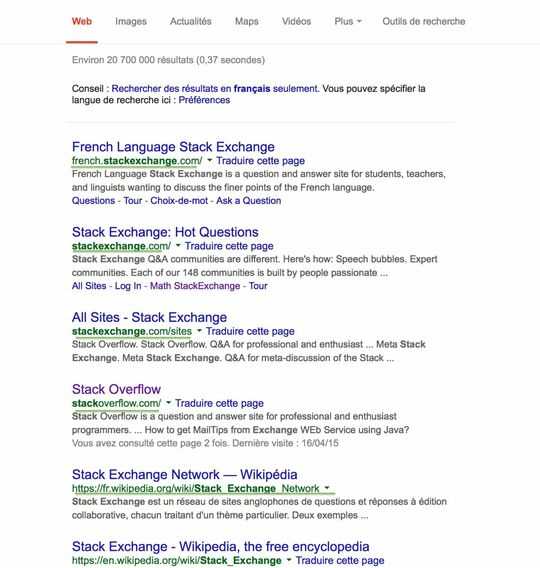

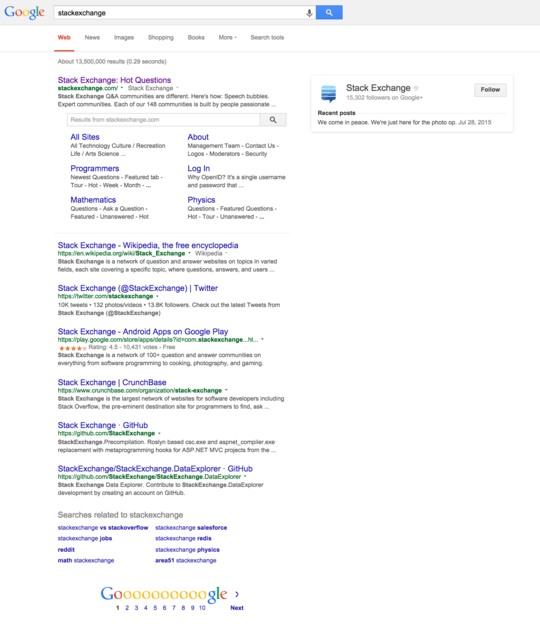

When you search something on google, within the results page, the user can see green links, for the first page of results.

In the shortest form possible, in bytes, using any language, display those links to stdout in the form of a list. Here is an example, for the first results of the stack exchange query :

Input :

you choose : the URL (www.google.com/search?q=stackexchange&ie=utf-8&oe=utf-8) or just stackexchange

Output :

french.stackexchange.com/, stackoverflow.com/, fr.wikipedia.org/wiki/Stack_Exchange_Network, en.wikipedia.org/wiki/Stack_Exchange,...

Rules :

You may use URL shorteners or other search tools/APIs as long as the results would be the same as searching https://www.google.com.

It's ok if your program has side effects like opening a web browser so the cryptic Google html/js pages can be read as they are rendered.

You may use browser plugins, userscripts...

If you can't use stdout, print it to the screen with, eg. a popup or javascript alert !

You don't need the ending / or the starting http(s)://

You should not show any other link

Shortest code wins !

Good luck !

EDIT : This golf ends the 07/08/15.

Since you are using

google.fr, do we have to use that as well? – Beta Decay – 2015-07-31T12:31:51.107You can use any google you want. I'm french, so I used .fr, but you could use .com or .anything :) Dosn't matter – WayToDoor – 2015-07-31T12:37:37.843

And shortened URLs such as

– Beta Decay – 2015-07-31T12:40:22.760gogle.deare fine as well?You may use URL shorteners or other search tools/APIs as long as the results would be the same as searching https://www.google.com, so yes

– WayToDoor – 2015-07-31T12:41:53.793I've made some minor formatting changes - feel free to reverse any that are not how you want. – trichoplax – 2015-07-31T12:49:26.030

6

In case you're tempted: remember you can't parse HTML with regex

– Luis Mendo – 2015-07-31T15:16:01.040I can't find an online Python interpreter that will let me use

urllibor anything web related. – mbomb007 – 2015-07-31T16:55:12.263@mbomb007 I'm pretty sure IdeOne supports urllib – Beta Decay – 2015-08-02T16:02:40.700

The method I found for using

urllibrequiredimport jsonas well. You seem to have done it without that. – mbomb007 – 2015-08-03T18:44:36.203can we use google.search API libraries? – cat – 2016-04-26T00:02:05.010

Yup :) (But, by the way, did you see the date of the challenge ? It's cool, but, a few months old :p) – WayToDoor – 2016-04-26T07:52:20.527