x86 16/32/64-bit machine code: 11 bytes, score= 3.66

This function returns the current mode (default operand-size) as an integer in AL. Call it from C with signature uint8_t modedetect(void);

NASM listing of machine code + source (showing how it decodes in 16-bit mode, since `BITS 16` tells NASM to assemble the source mnemonics for 16-bit mode):

```
addr     machine code   source
                            global modedetect
                        modedetect:
                        BITS 16

00000000 B040               mov al, 64
00000002 B90000             mov cx, 0    ; 3B in 16-bit. 5B in 32/64, consuming 2 more bytes as the immediate
00000005 FEC1               inc cl       ; always 2 bytes. The 2B encoding of inc cx would work, too.

                            ; want: 16-bit cl=1. 32-bit: cl=0
00000007 41                 inc cx       ; 64-bit: REX prefix
00000008 D2E8               shr al, cl   ; 64-bit: shr r8b, cl doesn't affect AL at all. 32-bit cl=1. 16-bit cl=2
0000000A C3                 ret
# end-of-function address is 0xB, length = 0xB = 11
```

Justification:

x86 machine code doesn't officially have version numbers, but I think this satisfies the intent of the question by having to produce specific numbers, rather than choosing what's most convenient (that only takes 7 bytes, see below).

The original x86 CPU, Intel's 8086, only supported 16-bit machine code. The 80386 introduced 32-bit machine code (usable in 32-bit protected mode, and later in compatibility mode under a 64-bit OS). AMD introduced 64-bit machine code, usable in long mode. These are versions of x86 machine language in the same sense that Python 2 and Python 3 are different language versions: they're mostly compatible, but with intentional changes. You can run 32- or 64-bit executables directly under a 64-bit OS kernel the same way you could run Python 2 and Python 3 programs.

How it works:

Start with al=64. Shift it right by 1 (32-bit mode) or 2 (16-bit mode). In 64-bit mode the shift hits r8b instead, so al stays 64.
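To double-check the per-mode comments in the listing, here is a minimal sketch in C (the language the function is meant to be called from) of a toy interpreter. It only understands the six opcodes this function uses and only models AL, RCX and R8B, so it illustrates the decode rather than being a real x86 emulator. The names `MODEDETECT` and `run_modedetect`, and the garbage register values on entry, are made up for the sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The 11 bytes of modedetect, copied from the listing. */
static const uint8_t MODEDETECT[11] = {
    0xB0, 0x40,             /* mov al, 64                          */
    0xB9, 0x00, 0x00,       /* mov cx, 0 (16-bit: a 3-byte insn)   */
    0xFE, 0xC1,             /* inc cl (immediate bytes in 32/64)   */
    0x41,                   /* inc cx/ecx, or REX.B in 64-bit mode */
    0xD2, 0xE8,             /* shr al, cl (shr r8b, cl with REX.B) */
    0xC3                    /* ret                                 */
};

/* Toy interpreter for ONLY the opcodes above; mode is 16, 32 or 64.
   Returns AL at the ret. */
static uint8_t run_modedetect(int mode)
{
    assert(mode == 16 || mode == 32 || mode == 64);
    uint64_t rcx = UINT64_MAX;       /* arbitrary garbage on entry */
    uint8_t al = 0xAA, r8b = 0xAA;   /* arbitrary garbage on entry */
    int rex_b = 0;                   /* REX.B seen on the current instruction */
    size_t ip = 0;

    for (;;) {
        uint8_t op = MODEDETECT[ip++];
        if (mode == 64 && (op & 0xF0) == 0x40) {   /* 0x40..0x4F: REX prefix */
            rex_b = op & 1;
            continue;                              /* applies to the next opcode */
        }
        switch (op) {
        case 0xB0:                                 /* mov al, imm8 */
            al = MODEDETECT[ip++];
            break;
        case 0xB9:                                 /* mov cx, imm16 / mov ecx, imm32 */
            if (mode == 16) {
                uint16_t imm = (uint16_t)(MODEDETECT[ip] | (MODEDETECT[ip + 1] << 8));
                rcx = (rcx & ~(uint64_t)0xFFFF) | imm;   /* 16-bit write keeps upper bits */
                ip += 2;
            } else {
                uint32_t imm = (uint32_t)MODEDETECT[ip]
                             | (uint32_t)MODEDETECT[ip + 1] << 8
                             | (uint32_t)MODEDETECT[ip + 2] << 16
                             | (uint32_t)MODEDETECT[ip + 3] << 24;
                rcx = imm;                               /* 32-bit write zero-extends */
                ip += 4;
            }
            break;
        case 0xFE:                                 /* FE C1 = inc cl */
            assert(MODEDETECT[ip] == 0xC1);
            ip++;
            rcx = (rcx & ~(uint64_t)0xFF) | (uint8_t)(rcx + 1);
            break;
        case 0x41:                                 /* inc cx / inc ecx (never reached in 64-bit) */
            if (mode == 16)
                rcx = (rcx & ~(uint64_t)0xFFFF) | (uint16_t)(rcx + 1);
            else
                rcx = (uint32_t)(rcx + 1);
            break;
        case 0xD2: {                               /* D2 E8 = shr r/m8, cl (/5 = shr) */
            assert(MODEDETECT[ip] == 0xE8);
            ip++;
            unsigned count = (unsigned)(rcx & 0x1F);   /* shift count masked to 5 bits */
            if (rex_b)
                r8b = (uint8_t)(r8b >> count);         /* REX.B: destination is r8b */
            else
                al = (uint8_t)(al >> count);
            break;
        }
        case 0xC3:                                 /* ret */
            return al;
        default:
            assert(!"opcode not modelled by this toy interpreter");
            return 0;
        }
        rex_b = 0;   /* a REX prefix only applies to one instruction */
    }
}
```

Calling `run_modedetect(16)`, `run_modedetect(32)` and `run_modedetect(64)` should give 16, 32 and 64: cl ends up as 2, 1 and 0 respectively, and in 64-bit mode the shift lands on r8b anyway.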

16/32 vs. 64-bit: The 1-byte inc/dec encodings are REX prefixes in 64-bit mode (http://wiki.osdev.org/X86-64_Instruction_Encoding#REX_prefix). REX.W doesn't affect some instructions at all (e.g. a jmp or jcc), but in this case, to get 16/32/64, I wanted to inc or dec ecx rather than eax. That also sets REX.B, which changes the destination register. Fortunately, we can make that work by setting things up so 64-bit mode doesn't need to shift al.
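The overlap between the two meanings of bytes 0x40..0x4F can be written out as a small C sketch (`Decoded4x` and `decode_4x` are hypothetical names for illustration). In 16/32-bit mode, bit 3 selects dec over inc and the low 3 bits select the register. In 64-bit mode the same byte is a REX prefix laid out as `0100WRXB`, so "dec" lines up with REX.W, and odd register numbers (ecx, ebx, ebp, edi) line up with REX.B.

```c
#include <assert.h>
#include <stdint.h>

/* How one byte in 0x40..0x4F decodes in each mode. */
typedef struct {
    int is_dec;      /* 16/32-bit: 0 = inc (0x40-0x47), 1 = dec (0x48-0x4F) */
    int reg;         /* 16/32-bit: register number, 0 = (e)ax, 1 = (e)cx, ... */
    int w, r, x, b;  /* 64-bit: the REX.W, REX.R, REX.X, REX.B bits */
} Decoded4x;

static Decoded4x decode_4x(uint8_t byte)
{
    assert((byte & 0xF0) == 0x40);   /* only the inc/dec (= REX) row */
    Decoded4x d;
    d.is_dec = (byte >> 3) & 1;      /* same bit that is REX.W in 64-bit mode */
    d.reg    = byte & 7;
    d.w      = (byte >> 3) & 1;
    d.r      = (byte >> 2) & 1;
    d.x      = (byte >> 1) & 1;
    d.b      = byte & 1;             /* low bit of the register number = REX.B */
    return d;
}
```

So `decode_4x(0x41)` reports inc ecx in 16/32-bit mode and REX.B in 64-bit mode, which is what redirects the following `shr al, cl` to `r8b`, while `decode_4x(0x48)` reports dec eax / REX.W.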

The instruction(s) that run only in 16-bit mode could include a ret, but I didn't find that necessary or helpful. (It would also make it impossible to inline as a code fragment, in case you wanted to do that.) It could also be a jmp within the function.

16-bit vs. 32/64: immediates are 16-bit instead of 32-bit. Changing modes can change the length of an instruction, so 32/64-bit modes decode the next two bytes as part of the immediate, rather than as a separate instruction. I kept things simple by using a 2-byte instruction here, instead of letting decode get out of sync so that 16-bit mode would decode from different instruction boundaries than 32/64.
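A sketch of the instruction boundaries each mode sees, again in C and again limited to the opcodes in this function (`insn_len` and `boundaries` are hypothetical helpers; a REX.W `mov r64, imm64` and every other opcode are deliberately not modelled). The only length difference is the `B9` immediate, and all three modes agree that an instruction starts at offset 7, which is what keeping the 16-bit-only instruction at exactly 2 bytes buys.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

static const uint8_t CODE[11] = {
    0xB0, 0x40, 0xB9, 0x00, 0x00, 0xFE, 0xC1, 0x41, 0xD2, 0xE8, 0xC3
};

/* Length of the instruction starting at code[i] (toy length decoder). */
static size_t insn_len(const uint8_t *code, size_t i, int mode)
{
    assert(mode == 16 || mode == 32 || mode == 64);
    size_t n = 0;
    if (mode == 64 && (code[i] & 0xF0) == 0x40)   /* REX prefix joins the next insn */
        n = 1;
    switch (code[i + n]) {
    case 0xB0:            return n + 2;                          /* mov r8, imm8       */
    case 0xB9:            return n + 1 + (mode == 16 ? 2 : 4);   /* mov r, imm16/imm32 */
    case 0xFE: case 0xD2: return n + 2;                          /* opcode + ModRM     */
    case 0x41:            return n + 1;                          /* inc cx/ecx         */
    case 0xC3:            return n + 1;                          /* ret                */
    default:
        assert(!"opcode not modelled");
        return 0;
    }
}

/* Store the start offset of each instruction in starts[]; return the count. */
static size_t boundaries(const uint8_t *code, size_t len, int mode, size_t *starts)
{
    size_t count = 0;
    for (size_t i = 0; i < len; i += insn_len(code, i, mode))
        starts[count++] = i;
    return count;
}
```

With the 11 bytes from the listing, this should give starts of 0, 2, 5, 7, 8, 10 in 16-bit mode, 0, 2, 7, 8, 10 in 32-bit mode, and 0, 2, 7, 10 in 64-bit mode (where `41 D2 E8` is a single instruction).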

Related: the operand-size prefix (0x66) changes the length of the immediate (unless it's a sign-extended 8-bit immediate), just like the difference between 16-bit and 32/64-bit modes. This makes instruction-length decoding difficult to do in parallel; Intel CPUs have LCP (length-changing prefix) decoding stalls.
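As a small illustration of why that prefix counts as "length-changing", here is a C sketch computing immediate sizes for two encodings of `add`: opcode `05` (`add ax/eax, imm16/imm32`), whose immediate follows the operand size, and `83 /0 ib`, whose sign-extended imm8 doesn't. The function names are hypothetical, and REX.W is not modelled.

```c
#include <assert.h>

/* Operand size in bits (REX.W not modelled). The 0x66 prefix flips
   16 <-> 32 in 16/32-bit modes; in 64-bit mode the default is 32 and
   0x66 selects 16. */
static int operand_size(int mode, int has_66)
{
    assert(mode == 16 || mode == 32 || mode == 64);
    int dflt = (mode == 16) ? 16 : 32;
    if (!has_66)
        return dflt;
    return (dflt == 16) ? 32 : 16;
}

/* Immediate bytes for "add (e)ax, imm" (opcode 05 iw/id): follows operand size. */
static int imm_len_add_eax(int mode, int has_66)
{
    return operand_size(mode, has_66) / 8;
}

/* Immediate bytes for "add r/m, imm8" (opcode 83 /0 ib): always one
   sign-extended byte, so 0x66 doesn't change the length. */
static int imm_len_add_imm8(int mode, int has_66)
{
    (void)mode;
    (void)has_66;
    return 1;
}

/* 0x66 is a length-changing prefix for an encoding if it changes the immediate size. */
static int is_lcp(int (*imm_len)(int, int), int mode)
{
    return imm_len(mode, 1) != imm_len(mode, 0);
}
```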

Most calling conventions (including the x86-32 and x86-64 System V psABIs) allow narrow return values to have garbage in the high bits of the register. They also allow clobbering CX/ECX/RCX (and R8 for 64-bit). IDK if that was common in 16-bit calling conventions, but this is code golf so I can always just say it's a custom calling convention anyway.
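In C terms, a caller of `uint8_t modedetect(void)` only looks at the low byte of the return register, which is the same thing the test program does with `movzx ebx, al`. A tiny sketch (the helper names are hypothetical, and the 0xDEADBE high bits are made-up garbage standing in for whatever the callee left behind):

```c
#include <assert.h>
#include <stdint.h>

/* Only AL is the return value; bits 8..31 of EAX may hold anything. */
static uint8_t u8_return_value(uint32_t eax)
{
    return (uint8_t)eax;            /* keep the low byte, ignore the rest */
}

/* The widening done by "movzx ebx, al" before using the value as 32-bit. */
static uint32_t movzx_from_al(uint32_t eax)
{
    return (uint32_t)(uint8_t)eax;  /* zero-extend AL */
}
```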

32-bit disassembly:

```
08048070 <modedetect>:
 8048070:  b0 40            mov    al,0x40
 8048072:  b9 00 00 fe c1   mov    ecx,0xc1fe0000   # fe c1 is the inc cl
 8048077:  41               inc    ecx              # cl=1
 8048078:  d2 e8            shr    al,cl
 804807a:  c3               ret
```
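The `mov ecx,0xc1fe0000` line is just little-endian decoding of the four bytes after `b9`. A minimal C sketch (`read_le32` is a hypothetical helper) shows why cl starts at 0 there and becomes 1 after `inc ecx`:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* x86 immediates are little-endian: the first byte is the least significant. */
static uint32_t read_le32(const uint8_t *p)
{
    assert(p != NULL);
    return (uint32_t)p[0]
         | (uint32_t)p[1] << 8
         | (uint32_t)p[2] << 16
         | (uint32_t)p[3] << 24;
}
```

Fed the bytes `00 00 fe c1`, it returns 0xc1fe0000, whose low byte (cl) is 0; adding 1 for `inc ecx` makes cl 1.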

64-bit disassembly (Try it online!):

```
0000000000400090 <modedetect>:
  400090:  b0 40            mov    al,0x40
  400092:  b9 00 00 fe c1   mov    ecx,0xc1fe0000
  400097:  41 d2 e8         shr    r8b,cl   # cl=0, and doesn't affect al anyway!
  40009a:  c3               ret
```
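Why `41 d2 e8` means `shr r8b,cl`: a C sketch that splits the ModRM byte (`ModRM`, `decode_modrm` and `REG8_REX` are hypothetical names). `E8` is mod=3 (register operand), reg=5 (the /5 = SHR extension of opcode `D2`) and rm=0; REX.B adds 8 to rm, turning AL into R8B.

```c
#include <assert.h>
#include <stdint.h>

/* The three fields of a ModRM byte. */
typedef struct {
    int mod;  /* 3 = register-direct operand */
    int reg;  /* for group opcodes like D2, the operation: /5 = shr */
    int rm;   /* register number, widened to 4 bits by REX.B */
} ModRM;

static ModRM decode_modrm(uint8_t modrm, int rex_b)
{
    assert(rex_b == 0 || rex_b == 1);
    ModRM m;
    m.mod = modrm >> 6;
    m.reg = (modrm >> 3) & 7;
    m.rm  = (modrm & 7) | (rex_b << 3);
    return m;
}

/* 8-bit register names when a REX prefix is present (4..7 are spl..dil, not ah..bh). */
static const char *const REG8_REX[16] = {
    "al",  "cl",  "dl",   "bl",   "spl",  "bpl",  "sil",  "dil",
    "r8b", "r9b", "r10b", "r11b", "r12b", "r13b", "r14b", "r15b"
};
```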

Related: my x86-32 / x86-64 polyglot machine-code Q&A on SO.

Another difference between 16-bit and 32/64 is that addressing modes are encoded differently. e.g. lea eax, [rax+2] (8D 40 02) decodes as lea ax, [bx+si+0x2] in 16-bit mode. This is obviously difficult to use for code-golf, especially since e/rbx and e/rsi are call-preserved in many calling conventions.
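A C sketch of that ModRM difference, covering only mod=01 (8-bit displacement) forms; the table and function names are hypothetical, and SIB forms (rm=100 in 32/64-bit addressing) are left out. The same `40` ModRM byte selects [bx+si] with 16-bit addressing but [eax] or [rax] with 32- or 64-bit addressing:

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Base(+index) selected by ModRM.rm when mod == 01 (a disp8 follows). */
static const char *const RM16[8] = { "bx+si", "bx+di", "bp+si", "bp+di", "si", "di", "bp", "bx" };
static const char *const RM32[8] = { "eax", "ecx", "edx", "ebx", NULL, "ebp", "esi", "edi" };
static const char *const RM64[8] = { "rax", "rcx", "rdx", "rbx", NULL, "rbp", "rsi", "rdi" };

/* Format the memory operand of a mod == 01 ModRM byte plus its disp8.
   Returns 0 on success, -1 for forms this sketch doesn't model. */
static int format_disp8_operand(uint8_t modrm, int8_t disp8, int addr_size,
                                char *out, size_t len)
{
    assert(addr_size == 16 || addr_size == 32 || addr_size == 64);
    if ((modrm >> 6) != 1)
        return -1;                       /* only mod == 01 is modelled */
    const char *const *table = addr_size == 16 ? RM16 : addr_size == 32 ? RM32 : RM64;
    const char *base = table[modrm & 7];
    if (base == NULL)
        return -1;                       /* rm == 100: SIB byte follows */
    snprintf(out, len, "[%s%+d]", base, disp8);
    return 0;
}
```

For `8D 40 02` this gives `[bx+si+2]`, `[eax+2]` and `[rax+2]` for 16-, 32- and 64-bit addressing.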

I also considered using the 10-byte mov r64, imm64, which is REX.W + the mov r32, imm32 opcode with an 8-byte immediate. But since I already had an 11-byte solution, this would be at best equal (10 bytes + 1 for ret).

Test code for 32 and 64-bit mode. (I haven't actually executed it in 16-bit mode, but the disassembly tells you how it will decode. I don't have a 16-bit emulator set up.)

```asm
; CPU p6   ; YASM directive to make the ALIGN padding tidier

global _start
_start:
    call  modedetect
    movzx ebx, al
    mov   eax, 1
    int   0x80          ; sys_exit(modedetect());

align 16
modedetect:
BITS 16
    mov al, 64
    mov cx, 0           ; 3B in 16-bit. 5B in 32/64, consuming 2 more bytes as the immediate
    inc cl              ; always 2 bytes. The 2B encoding of inc cx would work, too.
    ; want: 16-bit cl=1. 32-bit: cl=0
    inc cx              ; 64-bit: REX prefix
    shr al, cl          ; 64-bit: shr r8b, cl doesn't affect AL at all. 32-bit cl=1. 16-bit cl=2
    ret
```

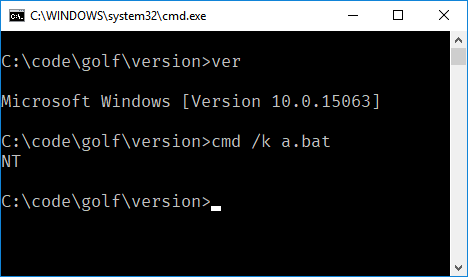

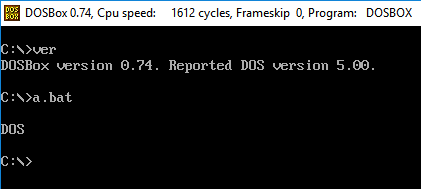

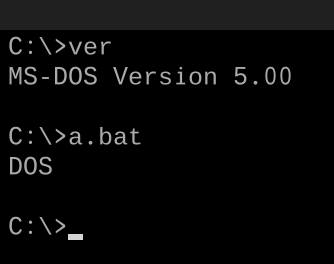

This Linux program exits with exit status = modedetect(), so run it as `./a.out; echo $?`. Assemble and link it into a static binary, e.g. (the `+` lines are the yasm/ld commands that `asm-link` runs):

```
$ asm-link -m32 x86-modedetect-polyglot.asm && ./x86-modedetect-polyglot; echo $?
+ yasm -felf32 -Worphan-labels -gdwarf2 x86-modedetect-polyglot.asm
+ ld -melf_i386 -o x86-modedetect-polyglot x86-modedetect-polyglot.o
32
$ asm-link -m64 x86-modedetect-polyglot.asm && ./x86-modedetect-polyglot; echo $?
+ yasm -felf64 -Worphan-labels -gdwarf2 x86-modedetect-polyglot.asm
+ ld -o x86-modedetect-polyglot x86-modedetect-polyglot.o
64
## maybe test 16-bit with BOCHS somehow if you really want to.
```

7 bytes (score=2.33) if I can number the versions 1, 2, 3

There are no official version numbers for different x86 modes. I just like writing asm answers. I think it would violate the question's intent if I just called the modes 1, 2, 3 or 0, 1, 2, because the point is to force you to generate an inconvenient number. But if that were allowed:

```
# 16-bit mode:
                    detect123:
00000020 B80300         mov ax,3
00000023 FEC8           dec al

00000025 48             dec ax
00000026 C3             ret
```

Which decodes in 32-bit mode as

```
08048080 <detect123>:
 8048080:  b8 03 00 fe c8   mov    eax,0xc8fe0003
 8048085:  48               dec    eax
 8048086:  c3               ret
```

and 64-bit as

```
00000000004000a0 <detect123>:
  4000a0:  b8 03 00 fe c8   mov    eax,0xc8fe0003
  4000a5:  48 c3            rex.W ret
```
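Hand-tracing detect123 in each mode, written as a C sketch (`detect123` here is a hypothetical model of the bytes `B8 03 00 FE C8 48 C3`, not a general emulator):

```c
#include <assert.h>
#include <stdint.h>

/* Returns AL at the ret for mode 16, 32 or 64. */
static uint8_t detect123(int mode)
{
    assert(mode == 16 || mode == 32 || mode == 64);
    if (mode == 16) {
        uint16_t ax = 0x0003;                                  /* mov ax, 3     */
        ax = (uint16_t)((ax & 0xFF00) | (uint8_t)(ax - 1));    /* dec al (FE C8) */
        ax = (uint16_t)(ax - 1);                               /* dec ax (48)   */
        return (uint8_t)ax;
    }
    uint32_t eax = 0xC8FE0003u;   /* mov eax, imm32 swallows FE C8 */
    if (mode == 32)
        eax -= 1;                 /* 48 = dec eax */
    /* 64-bit: 48 is a REX.W prefix on the ret, so eax is unchanged */
    return (uint8_t)eax;
}
```

This should give 1, 2 and 3 for 16-, 32- and 64-bit mode.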

Sandbox post. Related. – MD XF – 2017-08-16T04:27:36.660

Language version built-in allowed? – user202729 – 2017-08-16T04:30:47.950

@user202729 "Your program may not use a builtin macro or custom compiler flags to determine the language version." – MD XF – 2017-08-16T04:31:28.577

So you must use some function that behave differently in versions to determine version number?

`LogoVersion` in LOGO (11 byte) fail. --- Is usage of bugs in previous versions which is fixed in newer versions allowed? – user202729 – 2017-08-16T04:34:23.717

Can we output 3.0 for Python 3? – fireflame241 – 2017-08-16T04:34:33.640

@fireflame241 on Python 3.0, not on Python 3.1 or anything else. – MD XF – 2017-08-16T04:34:54.807

What is a language version number? Who determines it? – Post Rock Garf Hunter – 2017-08-16T04:34:58.403

@WheatWizard The author of the language determines this. It should be marked on some corresponding webpage or document. – MD XF – 2017-08-16T04:35:33.330

@user202729 Yes, you must use some functionality that differs between language versions. Usage of bugs in previous versions is allowed, but if they don't work in newer versions they don't count toward your score. – MD XF – 2017-08-16T04:36:09.340

I think that inverse-linear in the number of version does not welcome answers with high number of versions. – user202729 – 2017-08-16T05:23:27.387

@user202729 I agree. Versatile Integer Printer did it well - score was (number of languages)^3 / (byte count). – Mego – 2017-08-16T05:37:26.927

Related. – Dom Hastings – 2017-08-16T06:31:40.920

Related – Peter Taylor – 2017-08-16T07:14:42.307

What is the version for a language? Isn't we define a language as its interpreters / compilers here? Let we say, there is a version of gcc which has a bug that with certain C89 codes it produce an executable which behavior violate the C89 specification, and it was fixed on the next version of gcc. Should this count a valid solution if we write a piece of code base on this bug behavior to tell which gcc version is using? It targeting on different version of compiler, but NOT different version of language. – tsh – 2017-08-16T07:21:39.350

I don't get this. First you say "Your program's output should only be the version number.". Then you say "If you choose to print the full name or minor version numbers, e.g. C89 as opposed to C99, it must only print the name." So the first rule is not actually a requirement? – pipe – 2017-08-16T09:13:17.633

"Your program may not use a builtin, macro, or custom compiler flags to determine the language version." So... no way to complete this challenge in C++ ? In my knowledge, the only way to programmatically detect the C++ language version is by using the `__cplusplus` macro... – HatsuPointerKun – 2017-08-16T11:35:16.173

@HatsuPointerKun Try using differences between versions. – Erik the Outgolfer – 2017-08-16T14:02:13.887

This was a fun question, I really enjoyed reading the answers. Thanks for asking! – user6014 – 2017-08-22T16:09:34.817

"Your program may use whatever method you like to determine the version number." Then: "Your program may not use a builtin, macro, or custom compiler flags to determine the language version." Please don't condradict yourself in the spec. – Grimmy – 2019-05-21T16:36:20.840

@HatsuPointerKun That's to prevent "Python 2 --version and Python 3 --version, 0 bytes", which is no fun at all. – Khuldraeseth na'Barya – 2019-08-27T15:47:57.943

hmm... what's correct output if the program is written in x86 machine code? – 640KB – 2019-08-28T14:32:08.910