Analog vs. Digital

All electronics come in two forms: analog and digital. Either can do what we want, but each has its own advantages and disadvantages.

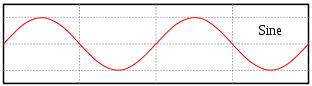

Analog

Analog electronics use a continuous signal: if you had a sheet of paper of infinite length, you could draw the signal without ever lifting your hand. As far as electronics are concerned, the signal represents the voltage or current at each point in time. Since the real world is analog, it was probably easier to make electronics analog at first. Analog is losing ground as the dominant form of signaling, since nearly everything can now be digitized. However, at the end of the day, what you get out is analog, because the world is analog.

The defining quality of an analog signal is that it's easy to amplify or attenuate, and filters can still make sense of it even when the signal looks like garbage. As an example, when tuning into a radio station, you first hear static, then static mixed with the station, and finally the station alone, as the tuner filters the proper frequency out of the "noisy" signal. The radio waves traveling through the air are also very low power, but the correct signal is filtered out before being amplified and making its way to the speakers. Another advantage is that when the signal degrades, it degrades gradually.
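The radio example can be imitated in a few lines. This is only a rough sketch, not how a real tuner is built: it assumes a weak "station" that is a pure sine wave buried in much louder random static, and it "tunes in" by correlating the received samples with a sine and cosine at the frequency of interest. The names `tone_strength`, `station`, and `received`, as well as all of the numbers, are made up for illustration.

```python
import math
import random

def tone_strength(samples, cycles):
    """Estimate the amplitude of a sine wave that completes `cycles` cycles over the samples."""
    n = len(samples)
    in_phase = sum(s * math.sin(2 * math.pi * cycles * i / n) for i, s in enumerate(samples))
    quadrature = sum(s * math.cos(2 * math.pi * cycles * i / n) for i, s in enumerate(samples))
    return 2 * math.hypot(in_phase, quadrature) / n

rng = random.Random(1)  # fixed seed so the run is repeatable
n = 2000

# Hypothetical weak station: amplitude 0.2, 50 cycles across the recording.
station = [0.2 * math.sin(2 * math.pi * 50 * i / n) for i in range(n)]

# What the antenna picks up: the station plus static five times larger.
received = [s + rng.uniform(-1.0, 1.0) for s in station]

on_station = tone_strength(received, 50)   # tuned to the station
off_station = tone_strength(received, 80)  # tuned to an empty frequency

print(f"tuned to station: {on_station:.3f}")
print(f"tuned elsewhere:  {off_station:.3f}")
```

Looking at `received` directly, the station is invisible under the static, yet the tuned measurement should come out close to the station's true amplitude while the off-station measurement stays small. Adding more static makes the estimate worse bit by bit rather than all at once, which is the gradual degradation described above.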

The problem with analog signals, with regard to electronics, is that analog circuits consume more power, as components are rarely completely off. If you're designing or analyzing analog circuits, it also makes for some Mind Screw math such as Fourier series and Laplace transforms. Another problem is that due to the random nature of the universe, it's very hard, if not impossible, to replicate the signal perfectly.

Digital

Digital electronics use a discrete signal; that is, there are hard values with nothing in between them. You couldn't take a pencil and draw a digital signal without lifting it up. Despite what the picture on the right shows, the vertical lines are just representations. A digital signal can be either binary, with just an "on" and an "off" state, or a series of several defined levels.
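To make "hard values with nothing in between" concrete, here is a small sketch that snaps a smooth sine wave onto a fixed set of levels. The function name `quantize`, the -1 to 1 range, and the sample count are all assumptions chosen for illustration.

```python
import math

def quantize(value, levels, low=-1.0, high=1.0):
    """Snap a continuous value in [low, high] to the nearest of `levels` evenly spaced values."""
    step = (high - low) / (levels - 1)
    index = round((value - low) / step)
    index = max(0, min(levels - 1, index))  # clamp anything outside the range
    return low + index * step

# Eight samples across one cycle of a sine wave (the "analog" input).
samples = [math.sin(2 * math.pi * n / 8) for n in range(8)]

binary = [quantize(s, 2) for s in samples]      # only two states allowed
four_level = [quantize(s, 4) for s in samples]  # a series of four defined levels

print(binary)
print(four_level)
```

However the input wiggles, every output value is one of the permitted levels, so the binary list only ever contains -1.0 and 1.0.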

In a binary digital system, 0 volts is typically the off state, while some other voltage (commonly 1.5V, 3.3V, 5V, or 12V, usually based on what batteries can provide) is used as the on state. Well, mostly. It's poor circuit design to set the threshold exactly at the nominal operating voltage, so the threshold sits lower, and most circuits will still run at up to roughly 20% below their rated operating voltage. Too much voltage though, and it explodes.
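The idea of a threshold below the nominal voltage can be sketched as follows. The 5V supply and the 30%/70% cutoffs are illustrative assumptions, not figures from any particular chip, and `read_logic_level` is a made-up name.

```python
def read_logic_level(voltage, supply=5.0, low_frac=0.3, high_frac=0.7):
    """Interpret a voltage as a bit, using assumed cutoffs expressed as fractions of the supply.

    Returns 0 or 1, or None when the voltage falls in the ambiguous zone in between.
    """
    if voltage <= low_frac * supply:
        return 0
    if voltage >= high_frac * supply:
        return 1
    return None  # neither clearly off nor clearly on

print(read_logic_level(5.0))  # nominal "on"
print(read_logic_level(4.0))  # 20% below nominal, still read as "on"
print(read_logic_level(0.2))  # slightly above 0V, still read as "off"
print(read_logic_level(2.5))  # in between: undefined
```

Because the "on" cutoff sits well below the nominal 5V in this sketch, a signal that sags to 4V is still read as on.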

The defining characteristic is that a digital signal can be copied perfectly, in the sense that small bits of noise won't kill the signal. With regard to electronics, digital signals also require less average power, since components can be in a state that's fully off (or mostly off).
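Here is a rough simulation of why copies of a digital signal stay perfect. It assumes each copy adds a little random noise (at most 0.1 on a 0-to-1 scale) and that the digital copier re-thresholds the signal at 0.5 after every generation; the names and numbers are invented for the example.

```python
import random

def noisy_copy(signal, rng, noise=0.1):
    """Copy a signal, adding a small random error to every value."""
    return [v + rng.uniform(-noise, noise) for v in signal]

def regenerate(signal, threshold=0.5):
    """Snap every value back to a clean 0.0 or 1.0."""
    return [1.0 if v > threshold else 0.0 for v in signal]

rng = random.Random(42)  # fixed seed so the run is repeatable
original = [0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0]

analog = original
digital = original
for _ in range(50):  # make a copy of a copy of a copy...
    analog = noisy_copy(analog, rng)                 # errors pile up
    digital = regenerate(noisy_copy(digital, rng))   # errors are wiped each time

analog_error = max(abs(a - o) for a, o in zip(analog, original))
digital_error = max(abs(d - o) for d, o in zip(digital, original))

print(f"analog error after 50 copies:  {analog_error:.3f}")
print(f"digital error after 50 copies: {digital_error:.3f}")
```

Since the noise in this sketch is always smaller than the gap to the threshold, the digital copy comes back identical to the original every generation, while the analog copy drifts further with each pass.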

The problem with digital signals, though, is that if part of the signal is trashed, the entire signal has to be thrown away unless something is available to correct it. This is akin to handwriting, where if someone writes a letter sloppily, forgets a letter, or misspells the word, you may not be able to make out what the word really is. And if you're in a part of the world that has digital TV, you may find it very annoying that poor reception means the channel cuts out completely, rather than just getting static-y like in an analog system.
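One very simple "something to correct it" is to send every bit three times and take a majority vote. This is a toy sketch of that idea, not what digital TV actually uses; `encode` and `decode` are made-up names.

```python
def encode(bits):
    """Repeat every bit three times."""
    return [b for b in bits for _ in range(3)]

def decode(coded):
    """Recover each bit by majority vote over its group of three."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0 for i in range(0, len(coded), 3)]

message = [1, 0, 1, 1]
sent = encode(message)

# Mild interference: one bit flipped in transit.
received = sent.copy()
received[4] ^= 1
one_error_ok = decode(received) == message

# Worse interference: a second flip lands in the same group of three.
received[3] ^= 1
two_errors_ok = decode(received) == message

print(one_error_ok)
print(two_errors_ok)
```

A single flipped bit is outvoted and the message survives untouched, but a second flip in the same group makes the vote go the wrong way and the message comes out wrong. There is no in-between "slightly static-y" result, which is why digital reception tends to go from perfect to gone.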