Test score

A test score is a piece of information, usually a number, that conveys the performance of an examinee on a test. One formal definition is that it is "a summary of the evidence contained in an examinee's responses to the items of a test that are related to the construct or constructs being measured."[1]

Test scores are interpreted with a norm-referenced or criterion-referenced interpretation, or occasionally both. A norm-referenced interpretation means that the score conveys meaning about the examinee with regard to their standing among other examinees. A criterion-referenced interpretation means that the score conveys information about the examinee with regard to a specific subject matter, regardless of other examinees' scores.[2]
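The difference between the two interpretations can be illustrated with a minimal sketch. The group scores, the cut score of 80, and the function names are hypothetical, and percentile rank is only one of several possible norm-referenced summaries.

```python
def percentile_rank(score, group_scores):
    """Norm-referenced view: percentage of the reference group scoring below `score`."""
    below = sum(1 for s in group_scores if s < score)
    return 100.0 * below / len(group_scores)


def meets_criterion(score, cut_score):
    """Criterion-referenced view: compare the score to a fixed standard only."""
    return score >= cut_score


# Hypothetical reference group and examinee (illustrative values only).
group = [52, 61, 67, 70, 74, 78, 81, 85, 90, 94]
examinee = 78

print(percentile_rank(examinee, group))         # 50.0
print(meets_criterion(examinee, cut_score=80))  # False
```

The same score of 78 is average relative to this group, yet falls short of the assumed standard; the first result would change with a different group, while the second would not.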

Types of test scores

There are two types of test scores: raw scores and scaled scores. A raw score is a score without any sort of adjustment or transformation, such as the simple number of questions answered correctly. A scaled score is the result of some transformation(s) applied to the raw score.

The purpose of scaled scores is to report scores for all examinees on a consistent scale. Suppose that a test has two forms, and one is more difficult than the other. It has been determined by equating that a score of 65% on form 1 is equivalent to a score of 68% on form 2. Scores on both forms can be converted to a scale so that these two equivalent scores have the same reported scores. For example, they could both be a score of 350 on a scale of 100 to 500.
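One way to picture such a conversion is a separate lookup for each form that passes through the equated point. The sketch below assumes a piecewise-linear conversion; only the equivalence of 65% and 68% at a scaled score of 350 comes from the example above, while the endpoint anchors (0% to 100, 100% to 500) and all names are hypothetical.

```python
def to_scaled(raw_pct, anchors):
    """Piecewise-linear interpolation through (raw percent, scaled score) anchors."""
    for (x0, y0), (x1, y1) in zip(anchors, anchors[1:]):
        if x0 <= raw_pct <= x1:
            return y0 + (y1 - y0) * (raw_pct - x0) / (x1 - x0)
    raise ValueError("raw score outside the anchored range")


# Assumed conversion tables; the middle anchor is the equated point.
FORM_1 = [(0, 100), (65, 350), (100, 500)]
FORM_2 = [(0, 100), (68, 350), (100, 500)]

print(to_scaled(65, FORM_1))  # 350.0
print(to_scaled(68, FORM_2))  # 350.0
```

Operational testing programs typically derive many more conversion points from the equating study; a single interior anchor is used here only to keep the idea visible.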

Two well-known tests in the United States that have scaled scores are the ACT and the SAT. The ACT's scale ranges from 1 to 36 and the SAT's from 200 to 800 (per section). Ostensibly, these two scales were selected to represent a mean and standard deviation of 18 and 6 (ACT), and 500 and 100 (SAT). The upper and lower bounds were placed about three standard deviations from the mean because that interval contains more than 99% of a normally distributed population. Scores outside that range are difficult to measure and offer little practical value.
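The arithmetic behind a scale defined by a mean and standard deviation can be sketched as follows. This is an illustration of the general idea, not the scoring procedure actually used by either testing program; it assumes a normal distribution and uses the SAT-style values of 500 and 100 quoted above.

```python
import math


def scale_from_z(z, mean, sd, k=3):
    """Map a standardized score z onto a reporting scale, clipped to mean +/- k*sd."""
    z_clipped = max(-k, min(k, z))
    return mean + sd * z_clipped


def normal_coverage(k):
    """Share of a normal population lying within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


print(scale_from_z(1.5, mean=500, sd=100))  # 650.0
print(scale_from_z(-3, mean=500, sd=100))   # 200
print(scale_from_z(5, mean=500, sd=100))    # 800 (clipped at +3 SD)
print(round(normal_coverage(3), 4))         # 0.9973
```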

Note that scaling does not affect the psychometric properties of a test; it is something that occurs after the assessment process (and equating, if present) is completed. Therefore, it is not an issue of psychometrics, per se, but an issue of interpretability.

Scoring information loss

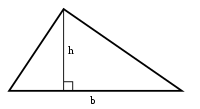

Area = 1/2 (Base × Height) = 1/2 (5 cm × 3 cm) = 7.5 cm²

When tests are scored right-wrong, an important assumption has been made about learning. The number of right answers or the sum of item scores (where partial credit is given) is assumed to be the appropriate and sufficient measure of current performance status. In addition, a secondary assumption is made that there is no meaningful information in the wrong answers.

First, a correct answer can be achieved by memorization, without any profound understanding of the underlying content or conceptual structure of the problem posed. Moreover, when a solution requires more than one step, there are often several approaches that lead to a correct result. The fact that the answer is correct does not indicate which of the possible procedures was used. When the student supplies the answer (or shows the work), this information is readily available from the original documents.

Second, if the wrong answers were blind guesses, there would be no information to be found among these answers. On the other hand, if wrong answers reflect interpretations that depart from the expected one, these answers should show an ordered relationship to whatever the overall test is measuring. This departure should depend on the level of psycholinguistic maturity of the student choosing or giving the answer in the vernacular in which the test is written.

In this second case it should be possible to extract this order from the responses to the test items.[3] Such extraction processes, the Rasch model for instance, are standard practice for item development among professionals. However, because the wrong answers are discarded during the scoring process, analysis of these answers for the information they might contain is seldom undertaken.

Third, although topic-based subtest scores are sometimes provided, the more common practice is to report the total score or a rescaled version of it. This rescaling is intended to compare these scores to a standard of some sort. This further collapse of the test results systematically removes all the information about which particular items were missed.

Thus, scoring a test right-wrong loses 1) how students achieved their correct answers, 2) what led them astray towards unacceptable answers, and 3) where within the body of the test this departure from expectation occurred.
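A small sketch makes the second and third losses concrete: two examinees can receive the same total while missing different items and choosing different wrong options. The answer key, the responses, and the function names below are hypothetical.

```python
KEY = ["B", "D", "A", "C", "B"]  # hypothetical answer key for a five-item test


def raw_score(responses, key=KEY):
    """Right-wrong scoring: count of responses matching the key."""
    return sum(r == k for r, k in zip(responses, key))


def wrong_answers(responses, key=KEY):
    """What the total discards: item number (1-based) mapped to the wrong option chosen."""
    return {i: r for i, (r, k) in enumerate(zip(responses, key), start=1) if r != k}


student_a = ["B", "D", "C", "C", "A"]
student_b = ["A", "D", "A", "B", "B"]

print(raw_score(student_a), raw_score(student_b))  # 3 3
print(wrong_answers(student_a))                    # {3: 'C', 5: 'A'}
print(wrong_answers(student_b))                    # {1: 'A', 4: 'B'}
```

Both examinees would be reported with a score of 3, although the retained response patterns show that they missed different items in different ways.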

This commentary suggests that the current scoring procedure conceals the dynamics of the test-taking process and obscures the capabilities of the students being assessed. Current scoring practice oversimplifies these data in the initial scoring step. The result of this procedural error is to obscure diagnostic information that could help teachers serve their students better. It further prevents those who are diligently preparing these tests from being able to observe the information that would otherwise have alerted them to the presence of this error.

A solution to this problem, known as Response Spectrum Evaluation (RSE),[4] is currently being developed that appears to be capable of recovering all three of these forms of information loss, while still providing a numerical scale to establish current performance status and to track performance change.

This RSE approach provides an interpretation of every answer, whether right or wrong, that indicates the likely thought processes used by the test taker.[5] Among other findings, the cited chapter reports that the recoverable information explains between two and three times more of the test variability than considering only the right answers. This massive loss of information can be explained by the fact that the "wrong" answers are removed from the information being collected during the scoring process and are no longer available to reveal the procedural error inherent in right-wrong scoring. The procedure bypasses the limitations produced by the linear dependencies inherent in test data.

References

- Thissen, D., & Wainer, H. (2001). Test Scoring. Mahwah, NJ: Erlbaum. Page 1, sentence 1.

- Iowa Testing Programs guide for interpreting test scores Archived 2008-02-12 at the Wayback Machine

- Powell, J. C. and Shklov, N. (1992) The Journal of Educational and Psychological Measurement, 52, 847–865

- "Welcome to the Frontpage". Archived from the original on 30 April 2015. Retrieved 2 May 2015.

- Powell, Jay C. (2010). Testing as Feedback to Inform Teaching. Chapter 3 in: Learning and Instruction in the Digital Age, Part 1: Cognitive Approaches to Learning and Instruction (J. Michael Spector, Dirk Ifenthaler, Pedro Isaias, Kinshuk and Demetrios Sampson, Eds.). New York: Springer. ISBN 978-1-4419-1551-1, doi:10.1007/978-1-4419-1551-1