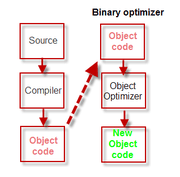

Object code optimizer

An object code optimizer, sometimes also known as a post-pass optimizer or, for small sections of code, a peephole optimizer, takes the output of a source language compile step (the object code or binary file) and tries to replace identifiable sections of it with replacement code that is more efficient, usually meaning faster.

Examples

- "IBM Automatic Binary Optimizer for z/OS[1]" (ABO) was introduced in 2015 to improve the performance of COBOL applications on IBM Z[2] mainframes without recompiling the source. It uses optimization technology shipped in the latest Enterprise COBOL[3]. ABO optimizes compiled binaries without changing program logic, so the application runs faster while its behavior stays the same, which can reduce the testing effort. Clients do not normally recompile all of their code when they upgrade to a new compiler or a new IBM Z hardware level, and code that is not recompiled cannot take advantage of features in the new hardware. ABO gives such clients another way to reduce the CPU utilization and operating costs of their COBOL applications.

- The earliest "COBOL Optimizer" was developed by Capex Corporation in the mid-1970s. This type of optimizer depended on knowledge of 'weaknesses' in the standard IBM COBOL compiler, and replaced (or patched) sections of the object code with more efficient code. The replacement code might, for example, replace a linear table lookup with a binary search, or simply replace a relatively slow instruction with a known faster one that was functionally equivalent within its context. The latter technique is now known as strength reduction. For example, on IBM System/360 hardware the CLI instruction was, depending on the particular model, between two and five times as fast as a CLC instruction for single-byte comparisons.[4][5]
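The instruction replacement described above can be sketched as a small peephole pass. This is only an illustration and not the Capex implementation: the `Instr` tuple, the `LITERALS` pool and the `peephole` function are hypothetical names, and the toy "object code" is a list of symbolic instructions rather than real System/360 machine code. The pass rewrites a one-byte CLC against a known one-byte constant into a CLI with an immediate operand, and leaves everything else alone.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    """Hypothetical symbolic instruction; not a real object-code format."""
    op: str          # mnemonic, e.g. "CLC" or "CLI"
    target: str      # storage operand being tested
    operand: str     # literal-pool label (CLC) or immediate value (CLI)
    length: int = 1  # number of bytes compared or moved


# Hypothetical literal pool: label -> constant bytes (EBCDIC 'A' is X'C1').
LITERALS = {"LIT_A": bytes([0xC1]), "LIT_YES": bytes([0xE8, 0xC5, 0xE2])}


def peephole(code, literals):
    """Replace single-byte CLC comparisons against constants with CLI."""
    out = []
    for ins in code:
        value = literals.get(ins.operand)
        is_single_byte_compare = (
            ins.op == "CLC"
            and ins.length == 1
            and value is not None
            and len(value) == 1
        )
        if is_single_byte_compare:
            # Same comparison, but the constant becomes an immediate operand.
            out.append(Instr("CLI", ins.target, f"X'{value[0]:02X}'", 1))
        else:
            out.append(ins)  # anything not recognized is copied unchanged
    return out


program = [
    Instr("CLC", "FLAG", "LIT_A", 1),     # candidate for replacement
    Instr("CLC", "REPLY", "LIT_YES", 3),  # three bytes: must stay a CLC
    Instr("MVC", "OUT", "IN", 8),         # unrelated instruction
]
optimized = peephole(program, LITERALS)
```

Only the first instruction is rewritten; the pass is deliberately conservative, because a replacement is safe only when it is functionally equivalent within its context.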

Advantages

The main advantage of re-optimizing existing programs was that the stock of already compiled customer programs (object code) could be improved almost instantly with minimal effort, reducing CPU usage for a fixed cost (the price of the proprietary software). A disadvantage was that a new release of the compiler (COBOL, for example) would require (charged) maintenance to the optimizer to cater for possibly changed internal algorithms. However, since new compiler releases frequently coincided with hardware upgrades, the faster hardware would usually more than compensate for application programs reverting to their pre-optimized versions until a supporting optimizer was released.

Other optimizers

Some binary optimizers perform executable compression, which reduces the size of binary files using generic data compression techniques. This reduces storage requirements and transfer and loading times, but does not improve run-time performance. Consolidating duplicate library modules would also reduce memory requirements.
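As a rough illustration of the compression idea, the sketch below applies a generic compressor (Python's standard `zlib` module) to a stand-in byte string. The `image` bytes are invented for the example and are not a real executable, and a real executable compressor would also attach a decompression stub, which is omitted here.

```python
import zlib

# Stand-in for an executable image: repetitive bytes, as padding and
# repeated instruction sequences often are. Not a real binary.
image = (b"\x90" * 4096) + (b"\x47\xF0\xF0\x0C" * 256)

packed = zlib.compress(image, 9)    # generic data compression, highest level
restored = zlib.decompress(packed)  # what a loader stub would do at start-up

print(len(image), "bytes before,", len(packed), "bytes after")
```

The restored bytes are identical to the original, so the program itself is unchanged: the gain is in size, not in execution speed.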

Some binary optimizers utilize run-time metrics (profiling) to introspectively improve performance using techniques similar to JIT compilers.
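A minimal sketch of the profiling idea, assuming a very simple policy: count how often a routine runs and switch to a faster equivalent once it proves to be hot. The `ProfiledRoutine` class, its `threshold` parameter and the two lookup functions are hypothetical and are not taken from any of the optimizers named in this article; the linear and binary lookups echo the table-lookup replacement mentioned under Examples.

```python
import bisect


def linear_lookup(table, key):
    """Baseline: scan the table from the start."""
    for index, value in enumerate(table):
        if value == key:
            return index
    return -1


def binary_lookup(table, key):
    """Faster equivalent; valid only because the table is sorted."""
    index = bisect.bisect_left(table, key)
    if index < len(table) and table[index] == key:
        return index
    return -1


class ProfiledRoutine:
    """Runs the baseline until it has been called `threshold` times."""

    def __init__(self, baseline, optimized, threshold=1000):
        self.baseline = baseline
        self.optimized = optimized
        self.threshold = threshold
        self.calls = 0
        self.active = baseline

    def __call__(self, *args):
        if self.active is self.baseline:
            self.calls += 1  # run-time metric: how hot is this routine?
            if self.calls >= self.threshold:
                self.active = self.optimized  # swap in the faster version
        return self.active(*args)


table = list(range(0, 100, 2))  # sorted, unique keys
lookup = ProfiledRoutine(linear_lookup, binary_lookup, threshold=3)
results = [lookup(table, 42) for _ in range(5)]
```

Callers see the same results before and after the switch; only the code doing the work changes, which is the property any such replacement has to preserve.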

Recent developments

More recently developed 'binary optimizers' for various platforms, some claiming novelty but nevertheless using essentially the same techniques as those described above, or similar ones, include:

- IBM Automatic Binary Optimizer for z/OS (ABO) (2015)[6]

- The Sun Studio Binary Code Optimizer[7] - which requires a profile phase beforehand

- Design and Engineering of a Dynamic Binary Optimizer - from IBM T. J. Watson Res. Center (February 2005)[8][9]

- QuaC: Binary Optimization for Fast Runtime Code Generation in C[10] - (which appears to include some elements of JIT)

- DynamoRIO

- COBRA: An Adaptive Runtime Binary Optimization Framework for Multithreaded Applications[11]

- Spike Executable Optimizer (Unix kernel)[12]

- "SOLAR" Software Optimization at Link-time And Run-time

See also

- Binary recompilation

- Binary translation

- Dynamic dead code elimination

References

- https://www.ibm.com/products/automatic-binary-optimizer-zos

- https://www.ibm.com/it-infrastructure/z

- https://www.ibm.com/us-en/marketplace/ibm-cobol

- http://www.bitsavers.org/pdf/ibm/360/A22_6825-1_360instrTiming.pdf

- http://portal.acm.org/citation.cfm?id=358732&dl=GUIDE&dl=ACM

- https://www.ibm.com/products/automatic-binary-optimizer-zos

- http://developers.sun.com/solaris/articles/binopt.html

- Duesterwald, E. (2005). "Design and Engineering of a Dynamic Binary Optimizer". Proceedings of the IEEE. 93 (2): 436–448. doi:10.1109/JPROC.2004.840302.

- http://portal.acm.org/citation.cfm?id=1254810.1254831

- http://www.eecs.berkeley.edu/Pubs/TechRpts/1994/CSD-94-792.pdf

- Kim, Jinpyo; Hsu, Wei-Chung; Yew, Pen-Chung (2007). "COBRA: An Adaptive Runtime Binary Optimization Framework for Multithreaded Applications". 2007 International Conference on Parallel Processing (ICPP 2007). p. 25. doi:10.1109/ICPP.2007.23. ISBN 978-0-7695-2933-2.

- http://www.cesr.ncsu.edu/fddo4/papers/spike_fddo4.pdf