NASA-TLX

The NASA Task Load Index (NASA-TLX) is a widely used,[1] subjective, multidimensional assessment tool that rates perceived workload in order to assess a task, system, or team's effectiveness or other aspects of performance. It was developed by the Human Performance Group at NASA's Ames Research Center over a three-year development cycle that included more than 40 laboratory simulations.[2][3] It has been cited in over 4,400 studies,[4] reflecting the influence the NASA-TLX has had on human factors research. It has been used in a variety of fields, including aviation, healthcare, and other complex socio-technical domains.[1]

Scales

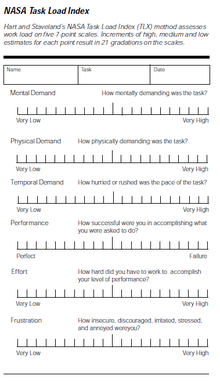

NASA-TLX originally consisted of two parts. In the first part, total workload is divided into six subjective subscales, which are presented together on a single page of the questionnaire:

- Mental Demand

- Physical Demand

- Temporal Demand

- Performance

- Effort

- Frustration

Each subscale has a description that the subject should read before rating. Each subscale is rated for each task on a scale from 0 to 100 in 5-point steps, and these ratings are then combined into the task load index. Providing a description for each measure has been found to help participants answer accurately.[5] The descriptions are as follows:

- Mental Demand
  - How much mental and perceptual activity was required? Was the task easy or demanding, simple or complex?

- Physical Demand
  - How much physical activity was required? Was the task easy or demanding, slack or strenuous?

- Temporal Demand
  - How much time pressure did you feel due to the pace at which the tasks or task elements occurred? Was the pace slow or rapid?

- Overall Performance
  - How successful were you in performing the task? How satisfied were you with your performance?

- Effort
  - How hard did you have to work (mentally and physically) to accomplish your level of performance?

- Frustration Level
  - How irritated, stressed, and annoyed versus content, relaxed, and complacent did you feel during the task?
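The 5-point resolution of the rating scale can be illustrated with a short Python sketch. It assumes that a rating is recorded as the position of the subject's mark along a line, measured from the left end, and that marks falling between tick marks are rounded half-up to the nearest tick. The function name `to_tlx_rating`, the `line_length` parameter, and this rounding rule are illustrative assumptions, not part of the official instrument.

```python
import math


def to_tlx_rating(mark_position: float, line_length: float = 100.0) -> int:
    """Convert a mark on a subscale line into a 0-100 rating in 5-point steps.

    Hypothetical helper: assumes the mark is measured from the left end of the
    line and that marks between ticks round half-up to the nearest tick.
    """
    if line_length <= 0:
        raise ValueError("line_length must be positive")
    if not 0 <= mark_position <= line_length:
        raise ValueError("the mark must lie on the rating line")
    fraction = mark_position / line_length  # 0.0 at the left end, 1.0 at the right
    step = math.floor(fraction * 20 + 0.5)  # 20 intervals of 5 points each
    return step * 5


print(to_tlx_rating(37))                   # 35
print(to_tlx_rating(38))                   # 40
print(to_tlx_rating(60, line_length=120))  # 50
```

An implementation that keeps a continuous response would simply skip the final rounding step.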

Analysis

The second part of the TLX creates an individual weighting of these subscales by having subjects compare them pairwise according to their perceived importance. For each of the 15 possible pairs of the six subscales, the subject chooses the measure that is more relevant to workload. The number of times a subscale is chosen is its weight, from 0 to 5.[6] Each subscale's rating is multiplied by its weight, and the sum of these products is divided by 15 to give a workload score from 0 to 100, the overall task load index. Many researchers, however, omit these pairwise comparisons and refer to the resulting test as "Raw TLX".[7] Some studies have evaluated this shortened version and support it over the full one, since it may increase experimental validity.[8]
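The weighting arithmetic can be sketched in Python as follows: each weight counts how often a subscale wins one of the 15 pairwise comparisons, and the overall index is the sum of rating times weight, divided by 15. The subscale names follow the list above; the function names `tally_weights` and `weighted_tlx`, the dictionary-based input format, and the example ratings and choices are hypothetical and serve only to show the calculation.

```python
from itertools import combinations

SUBSCALES = ["Mental Demand", "Physical Demand", "Temporal Demand",
             "Performance", "Effort", "Frustration"]


def tally_weights(choices):
    """Count how often each subscale was chosen in the 15 pairwise comparisons.

    Hypothetical input format: `choices` maps each unordered pair (a frozenset
    of two subscale names) to the subscale judged more relevant to workload.
    """
    pairs = {frozenset(pair) for pair in combinations(SUBSCALES, 2)}
    if set(choices) != pairs:
        raise ValueError("expected exactly one choice for each of the 15 pairs")
    weights = {name: 0 for name in SUBSCALES}
    for pair, winner in choices.items():
        if winner not in pair:
            raise ValueError(f"{winner!r} is not a member of {sorted(pair)}")
        weights[winner] += 1
    return weights  # each weight is 0-5 and the weights sum to 15


def weighted_tlx(ratings, weights):
    """Overall workload: sum of rating x weight over all subscales, divided by 15."""
    return sum(ratings[name] * weights[name] for name in SUBSCALES) / 15


ratings = {"Mental Demand": 70, "Physical Demand": 10, "Temporal Demand": 55,
           "Performance": 30, "Effort": 60, "Frustration": 40}
# Hypothetical ranking, used only to generate a consistent set of example choices.
preference = ["Mental Demand", "Effort", "Temporal Demand",
              "Frustration", "Performance", "Physical Demand"]
choices = {frozenset(pair): min(pair, key=preference.index)
           for pair in combinations(SUBSCALES, 2)}

weights = tally_weights(choices)
print(weights)
print(round(weighted_tlx(ratings, weights), 1))  # 57.7
```

Because the weights always sum to 15, a subject who rates every subscale at 100 receives an overall index of exactly 100, which keeps the result on the same 0 to 100 range as the individual ratings.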

When using the Raw TLX, individual subscales may be dropped if they are less relevant to the task.[1][7]
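A minimal Python sketch of the Raw TLX, assuming the common practice of averaging the unweighted ratings of the retained subscales (some studies sum them instead). The function `raw_tlx`, its `include` parameter, and the example ratings are hypothetical.

```python
def raw_tlx(ratings, include=None):
    """Raw TLX: unweighted mean of the retained subscale ratings (0-100).

    Hypothetical helper: `ratings` maps subscale names to 0-100 ratings and
    `include` optionally lists the subscales kept for this task.
    """
    names = list(ratings) if include is None else list(include)
    if not names:
        raise ValueError("at least one subscale must be retained")
    missing = [name for name in names if name not in ratings]
    if missing:
        raise KeyError(f"no rating recorded for: {', '.join(missing)}")
    return sum(ratings[name] for name in names) / len(names)


ratings = {"Mental Demand": 70, "Physical Demand": 10, "Temporal Demand": 55,
           "Performance": 30, "Effort": 60, "Frustration": 40}

print(round(raw_tlx(ratings), 1))  # 44.2 (all six subscales)
# Dropping Physical Demand, e.g. for a purely cognitive task:
print(raw_tlx(ratings, include=["Mental Demand", "Temporal Demand",
                                "Performance", "Effort", "Frustration"]))  # 51.0
```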

Administration

The official NASA-TLX can be administered as a paper-and-pencil version or with the Official NASA TLX for Apple iOS App.[9] There are also numerous unofficial computerized implementations of the NASA TLX. These unofficial versions may collect personally identifiable information (PII), which violates the NASA Human Subject Research guidelines for the collection of PII[10] set down by the NASA Institutional Review Board (IRB).[11]

If a participant is required to answer the TLX questions multiple times, they need to answer the 15 pairwise comparisons only once per task type.[3] If a participant's workload needs to be measured for intrinsically different tasks, the pairwise comparisons may need to be repeated. In every case, the subject should rate all six subjective subscales. Scoring these successive ratings with the original pairwise comparisons as weighting factors shows how overall workload changes.[2]
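The reuse of a single set of weights across repeated ratings can be sketched in Python. This example assumes that the weights from one round of 15 pairwise comparisons for a task type are stored and applied to every later rating session of that task type; the function `score_sessions` and the example data are hypothetical.

```python
SUBSCALES = ["Mental Demand", "Physical Demand", "Temporal Demand",
             "Performance", "Effort", "Frustration"]


def score_sessions(weights, sessions):
    """Score successive rating sessions of one task type with fixed weights.

    Hypothetical helper: `weights` comes from one round of pairwise
    comparisons; each session must rate all six subscales. Returns the
    overall workload per session and the change between consecutive sessions.
    """
    if sum(weights[name] for name in SUBSCALES) != 15:
        raise ValueError("weights from 15 pairwise comparisons must sum to 15")
    scores = []
    for ratings in sessions:
        if set(ratings) != set(SUBSCALES):
            raise ValueError("every session must rate all six subscales")
        scores.append(sum(ratings[name] * weights[name] for name in SUBSCALES) / 15)
    changes = [later - earlier for earlier, later in zip(scores, scores[1:])]
    return scores, changes


weights = {"Mental Demand": 5, "Physical Demand": 0, "Temporal Demand": 3,
           "Performance": 1, "Effort": 4, "Frustration": 2}
first = {name: 50 for name in SUBSCALES}
second = {**first, "Mental Demand": 80}  # only mental demand rises

print(score_sessions(weights, [first, second]))  # ([50.0, 60.0], [10.0])
```

Keeping the weights fixed means that any change in the overall score comes from the new ratings alone, which is what makes successive scores comparable within one task type.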

While there are multiple ways to administer the NASA-TLX, some may change the results of the test. One study showed that a paper-and-pencil version led to less cognitive workload than processing the information on a computer screen.[12] To reduce the delay in administering the test, the Official NASA TLX Apple iOS App[9] can be used to capture both the pairwise comparison answers and a subject's subscale ratings, and to calculate the final weighted and unweighted results. The app also introduces a new computer-interface rating scale, termed a Subjective Analogue Equivalent Rating (SAER) scale, which is intended to provide the closest possible user experience to the paper-and-pencil version of the NASA TLX. No other computerized version of the NASA TLX has successfully implemented this element, which is critical for properly capturing a user's subjective input. Many unofficial computerized versions (both web-based and standalone software applications) instead use an anchored or locking scale, which defeats the subjective purpose of the original paper-and-pencil NASA TLX.

References

- Colligan, L; Potts, HWW; Finn, CT; Sinkin, RA (July 2015). "Cognitive workload changes for nurses transitioning from a legacy system with paper documentation to a commercial electronic health record". International Journal of Medical Informatics. 84 (7): 469–476. doi:10.1016/j.ijmedinf.2015.03.003. PMID 25868807. (subscription required)

- NASA (1986). NASA Task Load Index (TLX) v. 1.0 Manual.

- Hart, Sandra G.; Staveland, Lowell E. (1988). "Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research" (PDF). In Hancock, Peter A.; Meshkati, Najmedin (eds.). Human Mental Workload. Advances in Psychology. 52. Amsterdam: North Holland. pp. 139–183. doi:10.1016/S0166-4115(08)62386-9. ISBN 978-0-444-70388-0.

- External link to Google Scholar.

- Schuff, D; Corral, K; Turetken, O (December 2011). "Comparing the understandability of alternative data warehouse schemas: An empirical study". Decision Support Systems. 52 (1): 9–20. doi:10.1016/j.dss.2011.04.003. (subscription required)

- Rubio, S; Diaz, E; Martin, J; Puente, JM (January 2004). "Evaluation of subjective mental workload: A comparison of SWAT, NASA-TLX, and workload profile methods". Applied Psychology. 53 (1): 61–86. doi:10.1111/j.1464-0597.2004.00161.x. (subscription required)

- Hart, Sandra G. (October 2006). "NASA-Task Load Index (NASA-TLX); 20 Years Later" (PDF). Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 50 (9): 904–908. doi:10.1177/154193120605000909.

- Bustamante, EA; Spain, RD (September 2008). "Measurement Invariance of the NASA TLX". Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 52 (19): 1522–1526. doi:10.1177/154193120805201946. (subscription required)

- Human Systems Integration Division. "Human Systems Integration Division @ NASA Ames - Outreach & Publications". hsi.arc.nasa.gov. Retrieved 2018-05-09.

- "NPD 1382.17J - main". nodis3.gsfc.nasa.gov. Retrieved 2018-05-09.

- McMonigal, Kathleen, MD. "IRB - Conducting Research - Working with Other NASA Centers and Other Institutions". irb.nasa.gov. Retrieved 2018-05-09.

- Noyes, JM; Bruneau, DPJ (April 2007). "A self-analysis of the NASA-TLX workload measure". Ergonomics. 50 (4): 514–519. doi:10.1080/00140130701235232. PMID 17575712. (subscription required)