Mutual exclusion

In computer science, mutual exclusion is a property of concurrency control that is instituted to prevent race conditions. It is the requirement that one thread of execution is never in its critical section at the same time as another concurrent thread of execution is in its own critical section. A critical section is an interval of time during which a thread of execution accesses a shared resource, such as shared memory.

The requirement of mutual exclusion was first identified and solved by Edsger W. Dijkstra in his seminal 1965 paper "Solution of a problem in concurrent programming control",[1][2] which is credited as the first topic in the study of concurrent algorithms.[3]

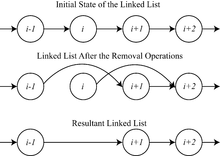

A simple example of why mutual exclusion is important in practice can be visualized using a singly linked list of four items, where the second and third are to be removed. A node that sits between two other nodes is removed by changing the next pointer of the previous node to point to the next node (in other words, if node i is being removed, then the next pointer of node i − 1 is changed to point to node i + 1, thereby removing from the linked list any reference to node i). When such a linked list is shared between multiple threads of execution, two threads may attempt to remove two different nodes simultaneously: one thread changes the next pointer of node i − 1 to point to node i + 1, while another thread changes the next pointer of node i to point to node i + 2. Although both removal operations complete successfully, the desired state of the linked list is not achieved: node i + 1 remains in the list, because the next pointer of node i − 1 points to node i + 1.

This problem (called a race condition) can be avoided by enforcing mutual exclusion, which ensures that simultaneous updates to the same part of the list cannot occur.
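The interleaving described above can be replayed deterministically in a single thread. The following is a minimal sketch, not a definitive implementation: the names `Node`, `build`, `to_list`, `remove`, `racy_removal` and `exclusive_removal` are hypothetical, and the node labels `a` to `d` stand for the four list items. The "racy" version lets both removals read their pointers before either writes; the "exclusive" version runs each removal to completion before the next starts, as a lock would force.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def build(values):
    """Build a singly linked list and return its head."""
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def to_list(head):
    """Collect the values reachable from head."""
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


def remove(head, value):
    """Unlink the first interior node holding value (head is never removed)."""
    prev = head
    while prev.next is not None and prev.next.value != value:
        prev = prev.next
    if prev.next is not None:
        prev.next = prev.next.next


def racy_removal():
    # List a -> b -> c -> d; "thread 1" removes b, "thread 2" removes c.
    a = build(["a", "b", "c", "d"])
    b = a.next
    c = b.next
    # Both threads read the successor they need before either one writes.
    t1_target = b.next   # thread 1 plans: a.next = c
    t2_target = c.next   # thread 2 plans: b.next = d
    a.next = t1_target   # thread 1 unlinks b
    b.next = t2_target   # thread 2 updates b, which is already unreachable
    return to_list(a)


def exclusive_removal():
    # Each removal runs to completion before the next one starts.
    a = build(["a", "b", "c", "d"])
    remove(a, "b")
    remove(a, "c")
    return to_list(a)
```

Under these assumptions, `racy_removal()` returns `['a', 'c', 'd']` (node `c` survives), while `exclusive_removal()` returns `['a', 'd']`.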

The term mutual exclusion is also used in reference to the writing of a memory address by one thread while that same address is being manipulated or read by one or more other threads.

Problem description

The problem that mutual exclusion addresses is one of resource sharing: how can a software system control multiple processes' access to a shared resource, when each process needs exclusive control of that resource while doing its work? The mutual-exclusion solution makes the shared resource available to a process only while that process is in a specific code segment called the critical section. Access to the shared resource is thus controlled by controlling when each process may execute the part of its program in which the resource is used.

A successful solution to this problem must have at least these two properties:

- It must implement mutual exclusion: only one process can be in the critical section at a time.

- It must be free of deadlocks: if processes are trying to enter the critical section, one of them must eventually be able to do so successfully, provided no process stays in the critical section permanently.

Deadlock freedom can be expanded to implement one or both of these properties:

- Lockout-freedom guarantees that any process wishing to enter the critical section will be able to do so eventually. This is distinct from deadlock freedom, which requires that some waiting process be able to get access to the critical section, but does not require that every process gets a turn. If two processes continually trade a resource between them, a third process could be locked out and experience resource starvation, even though the system is not in deadlock. If a system is free of lockouts, it ensures that every process can get a turn at some point in the future.

- A k-bounded waiting property gives a more precise commitment than lockout-freedom. Lockout-freedom ensures every process can access the critical section eventually: it gives no guarantee about how long the wait will be. In practice, a process could be overtaken an arbitrary or unbounded number of times by other higher-priority processes before it gets its turn. Under a k-bounded waiting property, each process has a finite maximum wait time. This works by setting a limit to the number of times other processes can cut in line, so that no process can enter the critical section more than k times while another is waiting.[4]

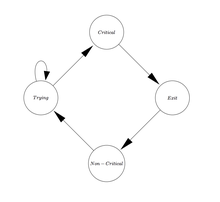

Every process's program can be partitioned into four sections, resulting in four states. Program execution cycles through these four states in order:[5]

- Non-Critical Section: Operation is outside the critical section; the process is not using or requesting the shared resource.

- Trying: The process attempts to enter the critical section.

- Critical Section: The process is allowed to access the shared resource in this section.

- Exit: The process leaves the critical section and makes the shared resource available to other processes.

If a process wishes to enter the critical section, it must first execute the trying section and wait until it acquires access to the critical section. After the process has executed its critical section and is finished with the shared resources, it needs to execute the exit section to release them for other processes' use. The process then returns to its non-critical section.
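The cycle through the four sections can be sketched with a standard library lock. This is an illustrative example only: it uses threads rather than processes, and `worker`, `run` and the shared counter are hypothetical names. Acquiring the lock plays the role of the trying section, and releasing it plays the role of the exit section.

```python
import threading


def run(n_threads=4, rounds=1000):
    lock = threading.Lock()
    shared = {"total": 0}  # the shared resource

    def worker():
        for _ in range(rounds):
            # Non-critical section: purely private work.
            local = 1
            # Trying section: wait until access is granted.
            lock.acquire()
            try:
                # Critical section: exclusive use of the shared resource.
                shared["total"] += local
            finally:
                # Exit section: make the resource available to others.
                lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["total"]
```

Because every update happens inside the critical section, `run(4, 1000)` returns 4000 regardless of how the threads are scheduled.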

Enforcing mutual exclusion

Hardware solutions

On uniprocessor systems, the simplest solution to achieve mutual exclusion is to disable interrupts during a process's critical section. This will prevent any interrupt service routines from running (effectively preventing a process from being preempted). Although this solution is effective, it leads to many problems. If a critical section is long, then the system clock will drift every time a critical section is executed because the timer interrupt is no longer serviced, so tracking time is impossible during the critical section. Also, if a process halts during its critical section, control will never be returned to another process, effectively halting the entire system. A more elegant method for achieving mutual exclusion is the busy-wait.

Busy-waiting is effective for both uniprocessor and multiprocessor systems. The use of shared memory and an atomic test-and-set instruction provides the mutual exclusion. A process can test-and-set on a location in shared memory, and since the operation is atomic, only one process can set the flag at a time. Any process that is unsuccessful in setting the flag can either go on to do other tasks and try again later, release the processor to another process and try again later, or continue to loop while checking the flag until it is successful in acquiring it. Preemption is still possible, so this method allows the system to continue to function even if a process halts while holding the lock.
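The busy-wait loop can be sketched as follows. Python exposes no hardware test-and-set instruction, so this sketch assumes that a non-blocking `threading.Lock.acquire(blocking=False)` is an acceptable stand-in: it atomically checks whether the flag is set and sets it if it was clear. Unlike a real test-and-set, which returns the old value of the flag, the hypothetical `test_and_set` method here returns whether the caller succeeded. The `SpinLock` class and `run` helper are illustrative names.

```python
import threading
import time


class SpinLock:
    def __init__(self):
        # Stand-in for one flag in shared memory.
        self._flag = threading.Lock()

    def test_and_set(self):
        # Atomically set the flag if it was clear; True means we set it.
        return self._flag.acquire(blocking=False)

    def acquire(self):
        # Keep retrying; between attempts, give up the processor so
        # that the current holder can make progress.
        while not self.test_and_set():
            time.sleep(0)

    def release(self):
        # Clear the flag.
        self._flag.release()


def run(n_threads=4, rounds=500):
    lock = SpinLock()
    total = [0]

    def worker():
        for _ in range(rounds):
            lock.acquire()
            total[0] += 1  # critical section
            lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]
```

The `time.sleep(0)` call corresponds to the "release the processor and try again later" option; replacing it with `pass` would give the pure spinning variant.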

Several other atomic operations can be used to provide mutual exclusion of data structures; most notable of these is compare-and-swap (CAS). CAS can be used to achieve wait-free mutual exclusion for any shared data structure by creating a linked list where each node represents the desired operation to be performed. CAS is then used to change the pointers in the linked list[6] during the insertion of a new node. Only one process can be successful in its CAS; all other processes attempting to add a node at the same time will have to try again. Each process can then keep a local copy of the data structure, and upon traversing the linked list, can perform each operation from the list on its local copy.
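The retry pattern can be illustrated with an emulated CAS cell. This is a sketch under stated assumptions, not the cited construction: Python has no user-level CAS instruction, so the hypothetical `AtomicRef` class uses an internal lock purely to model the atomicity that hardware would provide. `OpNode` and `run` are also illustrative names, and the recorded operations are simple increments.

```python
import threading


class AtomicRef:
    """Emulated CAS cell; the guard models the atomicity of the instruction."""

    def __init__(self, value=None):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        with self._guard:
            if self._value is expected:
                self._value = new
                return True
            return False


class OpNode:
    def __init__(self, op, next_node):
        self.op = op          # the operation to perform (here: an increment)
        self.next = next_node


def run(n_threads=4, ops_each=500):
    head = AtomicRef(None)

    def publish(op):
        while True:
            old = head.load()
            node = OpNode(op, old)
            # Only one contender succeeds; the others loop and retry.
            if head.compare_and_swap(old, node):
                return

    def worker():
        for _ in range(ops_each):
            publish(1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Replay every operation from the list on a local copy of the data.
    local_copy = 0
    node = head.load()
    while node is not None:
        local_copy += node.op
        node = node.next
    return local_copy
```

New nodes are prepended, so the list is in newest-first order; that does not matter here because increments commute, but order-sensitive operations would need to be replayed oldest-first.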

Software solutions

In addition to hardware-supported solutions, some software solutions exist that use busy waiting to achieve mutual exclusion. Examples include:

- Dekker's algorithm

- Peterson's algorithm

- Lamport's bakery algorithm[7]

- Szymanski's algorithm

- Taubenfeld's black-white bakery algorithm[2]

- Maekawa's algorithm

These algorithms do not work if out-of-order execution is used on the platform that executes them, unless programmers specify a strict ordering on the memory operations within a thread, for example with memory barriers.[8]
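As an illustration of the software approach, the following is a sketch of Peterson's algorithm for two threads. It assumes the standard CPython interpreter with its global interpreter lock, under which the reads and writes below take effect in program order; on a platform that reorders memory operations, the same logic would need explicit ordering, as noted above. The names `run`, `worker`, `flag` and `state` are hypothetical.

```python
import threading
import time


def run(rounds=500):
    flag = [False, False]            # flag[i]: thread i wants to enter
    state = {"turn": 0, "counter": 0}

    def worker(me):
        other = 1 - me
        for _ in range(rounds):
            # Trying section: announce interest, then give way.
            flag[me] = True
            state["turn"] = other
            while flag[other] and state["turn"] == other:
                time.sleep(0)        # busy-wait, yielding the processor
            # Critical section: a deliberately non-atomic update.
            tmp = state["counter"]
            state["counter"] = tmp + 1
            # Exit section: withdraw interest.
            flag[me] = False

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]
```

If mutual exclusion holds, no increment is lost and `run(500)` returns 1000.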

It is often preferable to use synchronization facilities provided by an operating system's multithreading library, which will take advantage of hardware solutions if possible but will use software solutions if no hardware solutions exist. For example, when the operating system's lock library is used and a thread tries to acquire an already acquired lock, the operating system could suspend the thread using a context switch and swap it out with another thread that is ready to be run, or could put that processor into a low power state if there is no other thread that can be run. Therefore, most modern mutual exclusion methods attempt to reduce latency and busy-waits by using queuing and context switches. However, if the time that is spent suspending a thread and then restoring it can be proven to be always more than the time that must be waited for a thread to become ready to run after being blocked in a particular situation, then spinlocks are an acceptable solution (for that situation only).

Bound on the mutual exclusion problem

One binary test&set register is sufficient to provide a deadlock-free solution to the mutual exclusion problem. However, a solution built with a test&set register can lead to the starvation of some processes, which become caught in the trying section.[4] In fact, Ω(√n) distinct memory states are required to avoid lockout, where n is the number of processes. To avoid unbounded waiting, n distinct memory states are required.[9]

Recoverable mutual exclusion

Most algorithms for mutual exclusion are designed with the assumption that no failure occurs while a process is running inside the critical section. However, in reality such failures may be commonplace. For example, a sudden loss of power or faulty interconnect might cause a process in a critical section to experience an unrecoverable error or otherwise be unable to continue. If such a failure occurs, conventional, non-failure-tolerant mutual exclusion algorithms may deadlock or otherwise fail key liveness properties. To deal with this problem, several solutions using crash-recovery mechanisms have been proposed.[10]

Types of mutual exclusion devices

The solutions explained above can be used to build the synchronization primitives below:

- Locks (mutexes)

- Readers–writer locks

- Recursive locks

- Semaphores

- Monitors

- Message passing

- Tuple space

Many forms of mutual exclusion have side-effects. For example, classic semaphores permit deadlocks, in which one process gets a semaphore, another process gets a second semaphore, and then both wait for the other semaphore to be released. Other common side-effects include starvation, in which a process never gets sufficient resources to run to completion; priority inversion, in which a higher-priority thread waits for a lower-priority thread; and high latency, in which response to interrupts is not prompt.
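The semaphore deadlock described above can be reproduced safely by giving each second acquisition a timeout. This is a minimal sketch with hypothetical names (`circular_wait`, `ordered_acquire`, `attempt`); the barrier is used only to force the problematic interleaving, and the 0.2-second timeout is an arbitrary choice that stands in for waiting forever. The second function shows one common remedy, which is an addition for illustration: every thread acquires the semaphores in the same fixed order.

```python
import threading


def circular_wait(timeout=0.2):
    s1 = threading.Semaphore(1)
    s2 = threading.Semaphore(1)
    barrier = threading.Barrier(2)
    results = [None, None]

    def attempt(first, second, idx):
        first.acquire()
        barrier.wait()               # both threads now hold one semaphore
        got = second.acquire(timeout=timeout)
        results[idx] = got
        barrier.wait()               # hold on until both attempts are over
        if got:
            second.release()
        first.release()

    threads = [
        threading.Thread(target=attempt, args=(s1, s2, 0)),
        threading.Thread(target=attempt, args=(s2, s1, 1)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def ordered_acquire():
    s1 = threading.Semaphore(1)
    s2 = threading.Semaphore(1)
    done = []

    def worker(name):
        # Same acquisition order in every thread: no circular wait.
        with s1:
            with s2:
                done.append(name)

    threads = [threading.Thread(target=worker, args=(n,)) for n in ("A", "B")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(done)
```

In `circular_wait()`, neither thread can obtain its second semaphore, so the result is `[False, False]`; without the timeout both would block indefinitely. `ordered_acquire()` completes and returns `['A', 'B']`.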

Much research is aimed at eliminating the above effects, often with the goal of guaranteeing non-blocking progress. No perfect scheme is known. Blocking system calls used to sleep an entire process. Until such calls became threadsafe, there was no proper mechanism for sleeping a single thread within a process (see polling).

See also

- Atomicity (programming)

- Concurrency control

- Dining philosophers problem

- Exclusive or

- Mutually exclusive events

- Reentrant mutex

- Semaphore

- Spinlock

References

- Dijkstra, E. W. (1965). "Solution of a problem in concurrent programming control". Communications of the ACM. 8 (9): 569. doi:10.1145/365559.365617.

- Taubenfeld, "The Black-White Bakery Algorithm". In Proc. Distributed Computing, 18th international conference, DISC 2004. Vol 18, 56-70, 2004

- "PODC Influential Paper Award: 2002", ACM Symposium on Principles of Distributed Computing, retrieved 24 August 2009

- Attiya, Hagit; Welch, Jennifer (25 March 2004). Distributed computing: fundamentals, simulations, and advanced topics. John Wiley & Sons, Inc. ISBN 978-0-471-45324-6.

- Lamport, Leslie (26 June 2000), The Mutual Exclusion Problem Part II: Statement and Solutions (PDF)

- Harris, Timothy L. (2001). "A Pragmatic Implementation of Non-Blocking Linked-Lists" (PDF). https://timharris.uk/papers/2001-disc.pdf

- Lamport, Leslie (August 1974). "A new solution of Dijkstra's concurrent programming problem". Communications of the ACM. 17 (8): 453–455. doi:10.1145/361082.361093.

- Holzmann, Gerard J.; Bosnacki, Dragan (1 October 2007). "The Design of a Multicore Extension of the SPIN Model Checker" (PDF). IEEE Transactions on Software Engineering. 33 (10): 659–674. doi:10.1109/TSE.2007.70724.

- Burns, James E.; Jackson, Paul; Lynch, Nancy A. (January 1982), Data Requirements for Implementation of N-Process Mutual Exclusion Using a Single Shared Variable (PDF)

- Golab, Wojciech; Ramaraju, Aditya (July 2016), Recoverable Mutual Exclusion

Further reading

- Michel Raynal: Algorithms for Mutual Exclusion, MIT Press, ISBN 0-262-18119-3

- Sunil R. Das, Pradip K. Srimani: Distributed Mutual Exclusion Algorithms, IEEE Computer Society, ISBN 0-8186-3380-8

- Thomas W. Christopher, George K. Thiruvathukal: High-Performance Java Platform Computing, Prentice Hall, ISBN 0-13-016164-0

- Gadi Taubenfeld, Synchronization Algorithms and Concurrent Programming, Pearson/Prentice Hall, ISBN 0-13-197259-6

External links

- "Common threads: POSIX threads explained – The little things called mutexes" by Daniel Robbins

- Mutual Exclusion Petri Net at the Wayback Machine (archived 2016-06-02)

- Mutual Exclusion with Locks – an Introduction

- Mutual exclusion variants in OpenMP

- The Black-White Bakery Algorithm