Multiple buffering

In computer science, multiple buffering is the use of more than one buffer to hold a block of data, so that a "reader" will see a complete (though perhaps old) version of the data, rather than a partially updated version of the data being created by a "writer". It is also used to avoid the need to use dual-ported RAM (DPRAM) when the readers and writers are different devices.

Description

An easy way to explain how multiple buffering works is to take a real-world example. It is a nice sunny day and you have decided to get the paddling pool out, only you can not find your garden hose. You'll have to fill the pool with buckets. So you fill one bucket (or buffer) from the tap, turn the tap off, walk over to the pool, pour the water in, walk back to the tap to repeat the exercise. This is analogous to single buffering. The tap has to be turned off while you "process" the bucket of water.

Now consider how you would do it if you had two buckets. You would fill the first bucket and then swap the second in under the running tap. You then have the length of time it takes for the second bucket to fill in order to empty the first into the paddling pool. When you return you can simply swap the buckets so that the first is now filling again, during which time you can empty the second into the pool. This can be repeated until the pool is full. It is clear to see that this technique will fill the pool far faster as there is much less time spent waiting, doing nothing, while buckets fill. This is analogous to double buffering. The tap can be on all the time and does not have to wait while the processing is done.

If you employed another person to carry a bucket to the pool while one is being filled and another emptied, then this would be analogous to triple buffering. If this step took long enough you could employ even more buckets, so that the tap is continuously running filling buckets.

In computer science the situation of having a running tap that cannot be, or should not be, turned off is common (such as a stream of audio). Also, computers typically prefer to deal with chunks of data rather than streams. In such situations, double buffering is often employed.

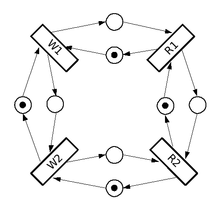

Double buffering Petri net

The Petri net in the illustration shows how double buffering works. Transitions W1 and W2 represent writing to buffer 1 and 2 respectively while R1 and R2 represent reading from buffer 1 and 2 respectively. At the beginning only the transition W1 is enabled. After W1 fires, R1 and W2 are both enabled and can proceed in parallel. When they finish, R2 and W1 proceed in parallel and so on.

So after the initial transient where W1 fires alone, this system is periodic and the transitions are enabled – always in pairs (R1 with W2 and R2 with W1 respectively).

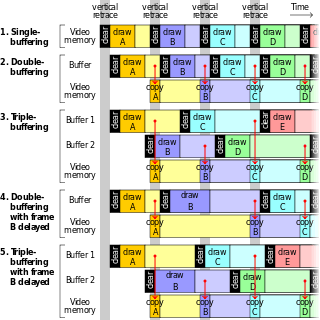

Double buffering in computer graphics

In computer graphics, double buffering is a technique for drawing graphics that shows no (or less) stutter, tearing, and other artifacts.

It is difficult for a program to draw a display so that pixels do not change more than once. For instance, when updating a page of text, it is much easier to clear the entire page and then draw the letters than to somehow erase only the pixels that are used in old letters but not in new ones. However, this intermediate image is seen by the user as flickering. In addition, computer monitors constantly redraw the visible video page (traditionally at around 60 times a second), so even a perfect update may be visible momentarily as a horizontal divider between the "new" image and the un-redrawn "old" image, known as tearing.

Software double buffering

A software implementation of double buffering has all drawing operations store their results in some region of system RAM; any such region is often called a "back buffer". When all drawing operations are considered complete, the whole region (or only the changed portion) is copied into the video RAM (the "front buffer"); this copying is usually synchronized with the monitor's raster beam in order to avoid tearing. Software implementations of double buffering necessarily requires more memory and CPU time than single buffering because of the system memory allocated for the back buffer, the time for the copy operation, and the time waiting for synchronization.

Compositing window managers often combine the "copying" operation with "compositing" used to position windows, transform them with scale or warping effects, and make portions transparent. Thus the "front buffer" may contain only the composite image seen on the screen, while there is a different "back buffer" for every window containing the non-composited image of the entire window contents.

Page flipping

In the page-flip method, instead of copying the data, both buffers are capable of being displayed (both are in Video RAM). At any one time, one buffer is actively being displayed by the monitor, while the other, background buffer is being drawn. When the background buffer is complete, the roles of the two are switched. The page-flip is typically accomplished by modifying a hardware register in the video display controller—the value of a pointer to the beginning of the display data in the video memory.

The page-flip is much faster than copying the data and can guarantee that tearing will not be seen as long as the pages are switched over during the monitor's vertical blanking interval—the blank period when no video data is being drawn. The currently active and visible buffer is called the front buffer, while the background page is called the back buffer.

Triple buffering

In computer graphics, triple buffering is similar to double buffering but can provide improved performance. In double buffering, the program must wait until the finished drawing is copied or swapped before starting the next drawing. This waiting period could be several milliseconds during which neither buffer can be touched.

In triple buffering the program has two back buffers and can immediately start drawing in the one that is not involved in such copying. The third buffer, the front buffer, is read by the graphics card to display the image on the monitor. Once the image has been sent to the monitor, the front buffer is flipped with (or copied from) the back buffer holding the most recent complete image. Since one of the back buffers is always complete, the graphics card never has to wait for the software to complete. Consequently, the software and the graphics card are completely independent and can run at their own pace. Finally, the displayed image was started without waiting for synchronization and thus with minimum lag.[1]

Due to the software algorithm not polling the graphics hardware for monitor refresh events, the algorithm may continuously draw additional frames as fast as the hardware can render them. For frames that are completed much faster than interval between refreshes, it is possible to replace a back buffers' frames with newer iterations multiple times before copying. This means frames may be written to the back buffer that are never used at all before being overwritten by successive frames. Nvidia has implemented this method under the name "Fast Sync".[2]

An alternative method sometimes referred to as triple buffering is a swap chain three buffers long. After the program has drawn both back buffers, it waits until the first one is placed on the screen, before drawing another back buffer (i.e. it is a 3-long first in, first out queue). Most Windows games seem to refer to this method when enabling triple buffering.

Quad buffering

The term quad buffering means the use of double buffering for each of the left and right eye images in stereoscopic implementations, thus four buffers total (if triple buffering was used then there would be six buffers). The command to swap or copy the buffer typically applies to both pairs at once, so at no time does one eye see an older image than the other eye.

Quad buffering requires special support in the graphics card drivers which is disabled for most consumer cards. AMD's Radeon HD 6000 Series and newer support it .

3D standards like OpenGL[3] and Direct3D support quad buffering.

Double buffering for DMA

The term double buffering is used for copying data between two buffers for direct memory access (DMA) transfers, not for enhancing performance, but to meet specific addressing requirements of a device (esp. 32-bit devices on systems with wider addressing provided via Physical Address Extension).[4] DOS and Windows device drivers are a place where the term "double buffering" is likely to be used. Linux and BSD source code calls these "bounce buffers".[5]

Some programmers try to avoid this kind of double buffering with zero-copy techniques.

Other uses

Double buffering is also used as a technique to facilitate interlacing or deinterlacing of video signals.

See also

- Swap Chain

- Vertical synchronization

- Stereoscopy

- LC shutter glasses

- Nvidia 3D Vision

- HD3D

- Virtual DMA Services (VDS)

References

- "Triple Buffering: Why We Love It". AnandTech. June 26, 2009. Retrieved 2009-07-16.

- Smith, Ryan. "The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation". Retrieved 2017-08-01.

- OpenGL 3.0 Specification, Chapter 4

- "Physical Address Extension - PAE Memory and Windows". Microsoft Windows Hardware Development Central. 2005. Retrieved 2008-04-07.

- Gorman, Mel. "Understanding The Linux Virtual Memory Manager, 10.4 Bounce Buffers".

External links

- Triple buffering: improve your PC gaming performance for free by Mike Doolittle (2007-05-24)

- http://www.tweakguides.com/Graphics_10.html