Flynn's taxonomy

Flynn's taxonomy is a classification of computer architectures, proposed by Michael J. Flynn in 1966.[1][2] The classification has endured and is used as a tool in the design of modern processors and their functionalities. Since the rise of multiprocessing central processing units (CPUs), a multiprogramming context has evolved as an extension of the classification system.

Classifications

The four classifications defined by Flynn are based upon the number of concurrent instruction (or control) streams and data streams available in the architecture.[3]

| Flynn's taxonomy | Single instruction stream | Multiple instruction streams |
|---|---|---|
| Single data stream | SISD | MISD |
| Multiple data streams | SIMD | MIMD |
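
Because the four classes depend only on whether each kind of stream is single or multiple, the mapping can be sketched in a few lines. The function name `flynn_class` and its parameters are hypothetical and chosen purely for illustration; they are not part of Flynn's papers.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn class for the given numbers of concurrent streams.

    Hypothetical helper: only "one" versus "more than one" matters.
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    instr = "S" if instruction_streams == 1 else "M"
    data = "S" if data_streams == 1 else "M"
    return f"{instr}I{data}D"


# One instruction stream driving eight data streams falls in the SIMD class.
simd_label = flynn_class(1, 8)
```

The exact stream counts above 1 are ignored, which reflects that the taxonomy distinguishes only single from multiple.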

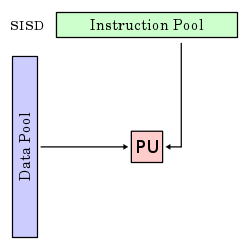

Single instruction stream, single data stream (SISD)

A sequential computer that exploits no parallelism in either the instruction or data streams. A single control unit (CU) fetches a single instruction stream (IS) from memory. The CU then generates the appropriate control signals to direct a single processing element (PE) to operate on a single data stream (DS), i.e., one operation at a time.

Examples of SISD architectures are traditional uniprocessor machines such as older personal computers (PCs; by 2010, many PCs had multiple cores) and mainframe computers.

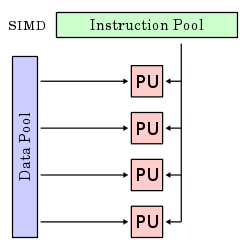

Single instruction stream, multiple data streams (SIMD)

A single instruction operates on multiple different data streams. Instructions can be executed sequentially, such as by pipelining, or in parallel by multiple functional units.
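
The programming view of SIMD can be modelled roughly as follows. This is a pure-Python sketch, not real vector hardware: the hypothetical helper `simd_execute` applies one instruction to every lane, and the interpreter actually visits the lanes one after another.

```python
import operator


def simd_execute(instruction, lanes_a, lanes_b):
    """Apply ONE instruction to every pair of lanes.

    Hypothetical model: real SIMD hardware would process the lanes with a
    single vector instruction; here the lanes are visited sequentially.
    """
    if len(lanes_a) != len(lanes_b):
        raise ValueError("operand vectors must have the same number of lanes")
    return [instruction(a, b) for a, b in zip(lanes_a, lanes_b)]


a = [1, 2, 3, 4]
b = [10, 20, 30, 40]

sums = simd_execute(operator.add, a, b)      # one "add" over four lanes
products = simd_execute(operator.mul, a, b)  # one "mul" over four lanes
```

The point of the sketch is that the caller issues a single instruction per call while the data arrives as several lanes; how the lanes are scheduled is left to the implementation.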

Single instruction, multiple threads (SIMT) is an execution model used in parallel computing where single instruction, multiple data (SIMD) is combined with multithreading. This is not a distinct classification in Flynn's taxonomy, where it would be a subset of SIMD. Nvidia commonly uses the term in its marketing materials and technical documents, where it argues for the novelty of its architecture.[4]

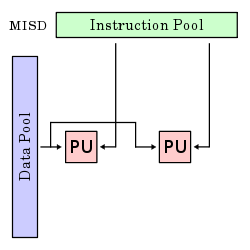

Multiple instruction streams, single data stream (MISD)

Multiple instructions operate on one data stream. This is an uncommon architecture which is generally used for fault tolerance. Heterogeneous systems operate on the same data stream and must agree on the result. Examples include the Space Shuttle flight control computer.[5]
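
The idea of heterogeneous units agreeing on a result can be illustrated with a simple majority vote. The sketch below is an assumption-laden toy: the units, the deliberately faulty unit, and the `vote` function are all hypothetical, and it does not model how the Space Shuttle computers actually voted.

```python
from collections import Counter
from functools import reduce


# Three different instruction streams that should compute the same value
# (the maximum) from the same data stream.
def unit_a(stream):
    return max(stream)


def unit_b(stream):
    return sorted(stream)[-1]


def unit_c(stream):
    return reduce(lambda best, x: x if x > best else best, stream)


def faulty_unit(stream):
    # Hypothetical faulty unit: returns the minimum instead of the maximum.
    return min(stream)


def vote(units, stream):
    """Feed the same data to every unit and return the strict-majority result."""
    data = list(stream)
    results = [unit(data) for unit in units]
    winner, count = Counter(results).most_common(1)[0]
    if count <= len(units) // 2:
        raise RuntimeError(f"no majority among results {results}")
    return winner


agreed = vote([unit_a, unit_b, faulty_unit], [3, 9, 4])
```

With two correct units and one faulty one, the faulty result is outvoted; if no result reaches a strict majority, the sketch raises an error rather than guessing.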

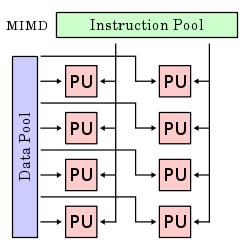

Multiple instruction streams, multiple data streams (MIMD)

Multiple autonomous processors simultaneously executing different instructions on different data. MIMD architectures include multi-core superscalar processors and distributed systems, using either one shared memory space or a distributed memory space.

Diagram comparing classifications

These four architectures are shown below visually. Each processing unit (PU) is shown for a uni-core or multi-core computer:

Further divisions

As of 2006, all of the top 10 and most of the TOP500 supercomputers were based on a MIMD architecture.

Some further divide the MIMD category into the two categories below,[6][7][8][9][10] and even further subdivisions are sometimes considered.[11]

Single program, multiple data streams (SPMD)

Multiple autonomous processors simultaneously executing the same program (but at independent points, rather than in the lockstep that SIMD imposes) on different data. Also termed single process, multiple data;[10] this terminology is technically incorrect for SPMD, as SPMD is a parallel execution model that assumes multiple cooperating processors executing a program. SPMD is the most common style of parallel programming.[12] The SPMD model and the term were proposed by Frederica Darema.[13] Gregory F. Pfister was a manager of the RP3 project, and Darema was part of the RP3 team.
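
A rough sketch of the SPMD style follows, using Python threads as stand-ins for processors. The names `program`, `run_spmd`, `rank`, and `size` are assumptions for illustration (the last two borrow a common message-passing convention), and CPython threads do not necessarily run in parallel, so this models the structure rather than the speedup.

```python
import threading


def program(rank, size, data, partial_sums):
    """The single program every worker runs; only `rank` differs.

    Each worker picks its own slice of the data and proceeds at its own
    pace, with no lockstep between workers.
    """
    chunk = data[rank::size]
    partial_sums[rank] = sum(x * x for x in chunk)


def run_spmd(data, size=4):
    """Launch `size` copies of the same program and combine their results."""
    partial_sums = [0] * size
    workers = [
        threading.Thread(target=program, args=(rank, size, data, partial_sums))
        for rank in range(size)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return sum(partial_sums)


total = run_spmd(list(range(10)))  # sum of squares of 0..9
```

Every worker executes identical code; the division of labour comes entirely from the rank each copy is given.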

Multiple programs, multiple data streams (MPMD)

Multiple autonomous processors simultaneously running at least two independent programs. Typically such systems pick one node to be the "host" (the "explicit host/node programming model") or "manager" (the "Manager/Worker" strategy), which runs one program that farms out data to all the other nodes, which all run a second program. Those other nodes then return their results directly to the manager. An example of this would be the Sony PlayStation 3 game console, with its SPU/PPU processor.
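
The Manager/Worker strategy described above can be sketched with two distinct programs communicating through queues. Threads stand in for nodes here, and the names `manager_program` and `worker_program`, as well as the squaring workload, are hypothetical choices made for the example.

```python
import queue
import threading


def worker_program(tasks, results):
    """Second program: runs on every worker node."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel from the manager: no more work
            break
        results.put((item, item * item))


def manager_program(data, n_workers=3):
    """First program: runs on the single manager node."""
    items = list(data)
    tasks, results = queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=worker_program, args=(tasks, results))
        for _ in range(n_workers)
    ]
    for worker in workers:
        worker.start()
    for item in items:        # farm the data out to the workers
        tasks.put(item)
    for _ in workers:         # one stop sentinel per worker
        tasks.put(None)
    for worker in workers:
        worker.join()
    collected = {}
    for _ in items:           # results come straight back to the manager
        key, value = results.get()
        collected[key] = value
    return collected


squares = manager_program([1, 2, 3, 4])
```

Unlike the SPMD style, the manager and the workers run different code: one program distributes and collects, the other only computes.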

See also

- Feng's classification

- Händler's Erlangen Classification System (ECS)

- SWAR

References

- Flynn, Michael J. (September 1972). "Some Computer Organizations and Their Effectiveness". IEEE Transactions on Computers. C-21 (9): 948–960. doi:10.1109/TC.1972.5009071.

- Duncan, Ralph (February 1990). "A Survey of Parallel Computer Architectures" (PDF). Computer. 23 (2): 5–16. doi:10.1109/2.44900. Archived (PDF) from the original on 2018-07-18. Retrieved 2018-07-18.

- http://www.cse.msu.edu/~cse820/lectures/CAQA5e_ch4.pdf

- http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf

- Spector, A.; Gifford, D. (September 1984). "The space shuttle primary computer system". Communications of the ACM. 27 (9): 872–900. doi:10.1145/358234.358246.

- "Single Program Multiple Data stream (SPMD)". Llnl.gov. Retrieved 2013-12-09.

- Archived September 1, 2006, at the Wayback Machine

- "CTC Virtual Workshop". Web0.tc.cornell.edu. Retrieved 2013-12-09.

- "NIST SP2 Primer: Distributed-memory programming". Math.nist.gov. Archived from the original on 2013-12-13. Retrieved 2013-12-09.

- Archived February 3, 2007, at the Wayback Machine

- Archived September 10, 2006, at the Wayback Machine

- "Single program multiple data". Nist.gov. 2004-12-17. Retrieved 2013-12-09.

- Darema, Frederica; George, David A.; Norton, V. Alan; Pfister, Gregory F. (1988). "A single-program-multiple-data computational model for EPEX/FORTRAN". Parallel Computing. 7 (1): 11–24. doi:10.1016/0167-8191(88)90094-4.

This article is based on material taken from the Free On-line Dictionary of Computing prior to 1 November 2008 and incorporated under the "relicensing" terms of the GFDL, version 1.3 or later.