Coverage error

Coverage error is a type of non-sampling error[1] that occurs when there is not a one-to-one correspondence between the target population and the sampling frame from which a sample is drawn.[2] This can bias estimates calculated using survey data.[3] For example, a researcher may wish to study the opinions of registered voters (target population) by calling residences listed in a telephone directory (sampling frame). Undercoverage may occur if not all voters are listed in the phone directory. Overcoverage could occur if some voters have more than one listed phone number. Bias could also occur if some phone numbers listed in the directory do not belong to registered voters.[4] In this example, undercoverage, overcoverage, and bias due to inclusion of unregistered voters in the sampling frame are examples of coverage error.

Discussion

Coverage error is one component of total survey error that can occur in survey sampling. In survey sampling, a sampling frame is the list of sampling units from which samples of a target population are drawn.[3] Coverage error results when the target population and the sampling frame differ.[5]

For example, suppose a researcher is using Twitter to determine the opinion of U.S. voters on a recent action taken by the U.S. President. Although the researcher's target population is U.S. voters, she is using a list of Twitter users as her sampling frame. Not all voters are Twitter users, and not all Twitter users are voters, so the target population and the sampling frame are misaligned. This misalignment could bias the survey results, because the demographics and opinions of Twitter-using voters might not be representative of the target population of voters.[4]
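The size of such a bias can be illustrated with a small numeric sketch. For a mean computed only from covered units, the bias relative to the full target population equals the uncovered share of the population times the difference between the covered and uncovered means. The function names and the figures used below (600 covered voters at 70% approval, 400 uncovered voters at 40% approval) are invented for illustration and do not come from any survey. The sketch also ignores ineligible and duplicated frame entries.

```python
def target_mean(n_covered, mean_covered, n_uncovered, mean_uncovered):
    """Mean over the whole target population (covered plus uncovered units)."""
    n_total = n_covered + n_uncovered
    if n_total == 0:
        raise ValueError("target population is empty")
    return (n_covered * mean_covered + n_uncovered * mean_uncovered) / n_total


def coverage_bias(n_covered, mean_covered, n_uncovered, mean_uncovered):
    """Bias of the covered-only mean: uncovered share times the mean difference."""
    n_total = n_covered + n_uncovered
    if n_total == 0:
        raise ValueError("target population is empty")
    uncovered_share = n_uncovered / n_total
    return uncovered_share * (mean_covered - mean_uncovered)


# Hypothetical figures: approval rate among covered and uncovered voters.
bias = coverage_bias(600, 0.70, 400, 0.40)
true_mean = target_mean(600, 0.70, 400, 0.40)
print(f"covered-only mean: 0.70, target mean: {true_mean:.2f}, bias: {bias:.2f}")
```

The bias vanishes when either everyone is covered or the covered and uncovered groups hold the same average opinion, which is why undercoverage alone does not guarantee a biased estimate.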

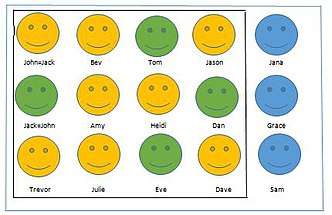

Undercoverage occurs when the sampling frame does not include all members of the target population. In the previous example, voters are undercovered because not all voters are Twitter users. On the other hand, overcoverage results when some members of the target population are overrepresented in the sampling frame. In the previous example, it is possible that some users have more than one Twitter account, and are more likely to be included in the poll than Twitter users with only one account.[4]
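The distinctions above can be sketched by comparing a target population with a list of frame entries. This is a minimal illustration, assuming every unit has a unique identifier. The function name `coverage_summary` and the example names are hypothetical. Following the definitions used in this article, the sketch reports target members missing from the frame (undercoverage), target members listed more than once (overcoverage), and frame entries that fall outside the target population.

```python
from collections import Counter


def coverage_summary(target_population, frame_entries):
    """Compare a target population with frame entries (duplicates allowed)."""
    target = set(target_population)
    if not target:
        raise ValueError("target population is empty")
    counts = Counter(frame_entries)
    listed = set(counts)
    undercovered = target - listed            # in the target, missing from the frame
    ineligible = listed - target              # in the frame, not in the target
    duplicated = {u for u, c in counts.items() if c > 1 and u in target}
    return {
        "undercovered": undercovered,
        "duplicated": duplicated,
        "ineligible": ineligible,
        "undercoverage_rate": len(undercovered) / len(target),
    }


# Hypothetical data: voters are the target, accounts are the frame entries.
voters = ["ann", "bob", "cat", "dan"]
accounts = ["bob", "bob", "cat", "eve"]   # bob has two accounts, eve is not a voter
summary = coverage_summary(voters, accounts)
print(summary)
```

In practice, matching frame entries to population members is itself error-prone, so real coverage studies rarely have the clean identifiers assumed here.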

Longitudinal studies are particularly susceptible to undercoverage, since the population being studied in a longitudinal survey can change over time.[6] For example, a researcher might want to study the relationship between the letter grades received by third graders in a particular school district and the wages that these same children earn when they become adults. In this case, the target population is all third graders in the district who grow up to be adults, and the sampling frame might be a list of third graders in the school district. Over time, the researcher will likely lose track of some of the children in the original study, so that her sampling frame of adults no longer matches the sampling frame of children used in the study.

Ways to Quantify Coverage Error

Many different methods have been used to quantify and correct for coverage error. Often, the methods employed are unique to specific agencies and organizations. For example, the United States Census Bureau has used the U.S. Postal Service's Delivery Sequence File, IRS 1040 address data, commercially available foreclosure counts, and other data to develop models capable of predicting undercount by census block. The Census Bureau has reported some success fitting such models with zero-inflated negative binomial or zero-inflated Poisson (ZIP) distributions.[7]
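For readers unfamiliar with zero-inflated count distributions, the textbook ZIP probability mass function can be sketched as follows. It mixes a point mass at zero (with probability `pi`) with an ordinary Poisson distribution with rate `lam`, which suits count data with many zeros, such as blocks with no missed addresses. This is only the standard definition of the distribution, not the Census Bureau's model; the function name and parameter values are assumptions made for illustration.

```python
import math


def zip_pmf(k, lam, pi):
    """Zero-inflated Poisson probability of observing the count k.

    lam: rate of the Poisson component (lam >= 0)
    pi:  probability of a structural zero (0 <= pi <= 1)
    """
    if k < 0 or lam < 0 or not 0 <= pi <= 1:
        raise ValueError("require k >= 0, lam >= 0 and 0 <= pi <= 1")
    poisson = math.exp(-lam) * lam ** k / math.factorial(k)
    if k == 0:
        # A zero can come from the point mass or from the Poisson component.
        return pi + (1 - pi) * poisson
    return (1 - pi) * poisson


# Hypothetical parameters: 30% structural zeros, Poisson rate of 2.
for k in range(4):
    print(k, round(zip_pmf(k, lam=2.0, pi=0.3), 4))
```

Compared with a plain Poisson distribution of the same rate, the ZIP distribution places more probability on zero and proportionally less on every positive count.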

Another method for quantifying coverage error employs mark-and-recapture methodology.[8] In mark-and-recapture methodology, a sample is taken directly from the population, marked, and reintroduced into the population. At a later date, another sample is taken from the population (recapture), and the proportion of previously marked individuals in it is used to estimate the actual population size. This method can be extended to assess the validity of a sampling frame by taking a sample directly from the target population and then taking another sample from the sampling frame in order to estimate undercoverage.[9] For example, suppose a census were conducted. After the completion of the census, random samples from the frame could be drawn to be counted again.[8]
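The arithmetic behind a two-sample mark-and-recapture estimate can be sketched with the classic Lincoln-Petersen estimator, which estimates population size as the product of the two sample sizes divided by the number of units found in both. The source does not state which estimator any particular agency uses, so this is an illustrative assumption, as are the function names and the figures below.

```python
def lincoln_petersen(n_first, n_second, n_matched):
    """Estimate population size from two samples and their overlap."""
    if n_matched <= 0:
        raise ValueError("at least one matched unit is needed")
    if n_matched > min(n_first, n_second):
        raise ValueError("matches cannot exceed either sample size")
    return n_first * n_second / n_matched


def estimated_undercoverage(n_first, n_second, n_matched):
    """Share of the estimated population missing from the first count."""
    n_hat = lincoln_petersen(n_first, n_second, n_matched)
    return 1 - n_first / n_hat


# Hypothetical figures: a first count lists 900 units; an independent
# follow-up sample finds 200 units, 180 of which were on the first list.
n_hat = lincoln_petersen(900, 200, 180)
print(f"estimated population size: {n_hat:.0f}")
print(f"estimated undercoverage:   {estimated_undercoverage(900, 200, 180):.1%}")
```

The estimate relies on the two samples being independent and on units being matched correctly between them; when either assumption fails, the estimated undercoverage can be misleading.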

Ways to Reduce Coverage Error

One way to reduce coverage error is to rely on multiple sources to either build a sampling frame or to solicit information. This is called a mixed-mode approach. For example, Washington State University students conducted Student Survey Experience Surveys by building a sampling frame using both street addresses and email addresses.[5]

In another example of a mixed-mode approach, the 2010 U.S. Census relied primarily on residential mail responses and then deployed field interviewers to interview non-responders. That way, field interviewers could determine whether a particular address still existed and whether it was still occupied. This approach had the added benefit of reducing costs, as the majority of people responded by mail and did not require a field visit.[8][5]

Example: 2010 Census

The U.S. Census Bureau prepares and maintains a Master Address File of some 144.9 million addresses that it uses as a sampling frame for the U.S. decennial census and other surveys. Despite the efforts of some 111,105 field representatives and an expenditure of nearly half a billion dollars, the Census Bureau still found a significant number of addresses that had not found their way into the Master Address File.[7]

Coverage Follow-Up (CFU) and Field Verification (FV) were Census Bureau operations conducted to improve the 2010 census using 2000 census data as a base. These operations were intended to address the following types of coverage error: not counting someone who should have been counted; counting someone who should not have been counted; and counting someone who should have been counted, but whose identified location was in error. Coverage errors in the U.S. Census can leave groups of people underrepresented by the government. Of particular concern are "differential undercounts", which are underestimates of targeted demographic groups. Although the efforts of the CFU and FV improved the accuracy of the 2010 Census, more study was recommended to address the question of differential undercounts.[10]

References

- Salant, Priscilla, and Don A. Dillman. "How to Conduct Your Own Survey: Leading professionals give you proven techniques for getting reliable results." (1995)

- NOAA Fisheries (2019-02-21). "Survey Statistics Overview | NOAA Fisheries". www.fisheries.noaa.gov. Retrieved 2019-02-24.

- Scheaffer, Richard L. 1996. Section 5 of Teaching Survey Sampling, by Ronald S. Fecso, William D. Kalsbeek, Sharon L. Lohr, Richard L. Scheaffer, Fritz J. Scheuren, Elizabeth A. Stasny. The American Statistician 50:4 (Nov., 1996), pp 335–337. (on jstor)

- Scheaffer, Richard L. (2012). Elementary survey sampling (7th, student ed.). Boston, MA: Brooks/Cole. ISBN 0840053614. OCLC 732960076.

- Dillman, Don A.; Smyth, Jolene D.; Christian, Leah Melani. Internet, phone, mail, and mixed-mode surveys : the tailored design method (Fourth ed.). Hoboken. ISBN 9781118921302. OCLC 878301194.

- Lynn, Peter (2009). Methodology of longitudinal surveys. Chichester, UK: John Wiley & Sons. ISBN 9780470743911. OCLC 317116422.

- U.S. Census Bureau. "Selection of Predictors to Model Coverage Errors". www.census.gov. Retrieved 2019-02-24.

- Biemer, Paul P.; de Leeuw, Edith Desirée; Eckman, Stephanie; Edwards, Brad; Kreuter, Frauke; Lyberg, Lars (eds.). Total survey error in practice. Hoboken, New Jersey. ISBN 9781119041689. OCLC 971891428.

- U.S. Census Bureau. "Coverage Error Models for Census and Survey Data". www.census.gov. Retrieved 2019-02-24.

- 2010 census : follow-up should reduce coverage errors, but effects on demographic groups need to be determined : report to congressional requesters. U.S. Govt. Accountability Office. 2010. OCLC 721261877.