Context-adaptive binary arithmetic coding

Context-adaptive binary arithmetic coding (CABAC) is a form of entropy encoding used in the H.264/MPEG-4 AVC[1][2] and High Efficiency Video Coding (HEVC) standards. It is a lossless compression technique, although the video coding standards in which it is used are typically for lossy compression applications. CABAC is notable for providing much better compression than most other entropy encoding algorithms used in video encoding, and it is one of the key elements that provides the H.264/AVC encoding scheme with better compression capability than its predecessors.

In H.264/MPEG-4 AVC, CABAC is supported only in the Main and higher profiles (but not the Extended profile) of the standard, because it requires more processing to decode than the simpler scheme, context-adaptive variable-length coding (CAVLC), that is used in the standard's Baseline profile. CABAC is also difficult to parallelize and vectorize, so other forms of parallelism (such as spatial region parallelism) may be coupled with its use. In HEVC, CABAC is used in all profiles of the standard.

Algorithm

CABAC is based on arithmetic coding, with a few innovations and changes to adapt it to the needs of video encoding standards:[3]

- It encodes binary symbols, which keeps the complexity low and allows probability modelling for more frequently used bits of any symbol.

- The probability models are selected adaptively based on local context, allowing better modelling of probabilities, because coding modes are usually locally well correlated.

- It subdivides the coding range without multiplication, by using quantized range values and a finite set of probability states.

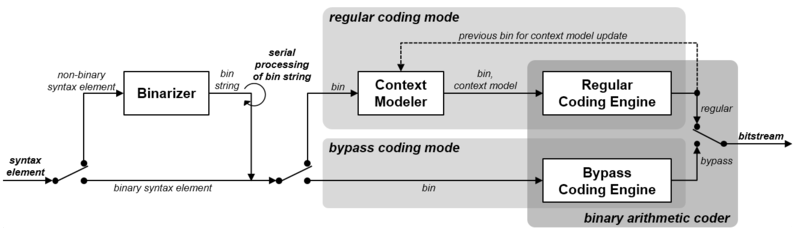

CABAC has multiple probability models for different contexts. It first converts all non-binary symbols to binary. Then, for each bit, the coder selects which probability model to use and uses information from nearby elements to optimize the probability estimate. Finally, arithmetic coding is applied to compress the data.

The context modeling provides estimates of conditional probabilities of the coding symbols. Utilizing suitable context models, a given inter-symbol redundancy can be exploited by switching between different probability models according to already-coded symbols in the neighborhood of the current symbol to encode. The context modeling is responsible for most of CABAC's roughly 10% savings in bit rate over the CAVLC entropy coding method.
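To illustrate why switching models by context helps, the following sketch compares the empirical entropy of a short bin sequence coded with one shared model against the entropy when each context keeps its own model. The sample data and the context labels `A` and `B` are made up for illustration and do not come from any real bitstream.

```python
import math
from collections import Counter, defaultdict


def entropy_bits(bins):
    """Empirical entropy of a bin sequence, in bits per bin."""
    total = len(bins)
    return -sum(c / total * math.log2(c / total) for c in Counter(bins).values())


def conditional_entropy_bits(pairs):
    """Average entropy per bin when each context keeps its own model."""
    by_context = defaultdict(list)
    for context, bin_value in pairs:
        by_context[context].append(bin_value)
    total = len(pairs)
    return sum(len(b) / total * entropy_bits(b) for b in by_context.values())


# Hypothetical data: context "A" mostly sees 0, context "B" mostly sees 1.
SAMPLE = [("A", 0)] * 7 + [("A", 1)] + [("B", 1)] * 7 + [("B", 0)]

shared = entropy_bits([b for _, b in SAMPLE])
split = conditional_entropy_bits(SAMPLE)
print(f"one shared model:      {shared:.3f} bits/bin")
print(f"one model per context: {split:.3f} bits/bin")
```

With a single model the zeros and ones look equally likely, so nothing can be saved; once the bins are separated by context, each model sees a skewed distribution that an arithmetic coder can exploit.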

Coding a data symbol involves the following stages.

- Binarization: CABAC uses binary arithmetic coding, which means that only binary decisions (1 or 0) are encoded. A non-binary-valued symbol (e.g. a transform coefficient or motion vector) is "binarized", or converted into a binary code, prior to arithmetic coding. This process is similar to converting a data symbol into a variable-length code, but the binary code is further encoded (by the arithmetic coder) prior to transmission.

- The following three stages are repeated for each bit (or "bin") of the binarized symbol.

- Context model selection: A "context model" is a probability model for one or more bins of the binarized symbol. This model may be chosen from a selection of available models depending on the statistics of recently coded data symbols. The context model stores the probability of each bin being "1" or "0".

- Arithmetic encoding: An arithmetic coder encodes each bin according to the selected probability model. Note that there are just two sub-ranges for each bin (corresponding to "0" and "1").

- Probability update: The selected context model is updated based on the actual coded value (e.g. if the bin value was "1", the frequency count of "1"s is increased).

Example

1. Binarize the value MVDx, the motion vector difference in the x direction.

| MVDx | Binarization |
|---|---|
| 0 | 0 |
| 1 | 10 |
| 2 | 110 |
| 3 | 1110 |
| 4 | 11110 |
| 5 | 111110 |
| 6 | 1111110 |
| 7 | 11111110 |
| 8 | 111111110 |

The first bit of the binarized codeword is bin 1; the second bit is bin 2; and so on.
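The table follows a unary pattern: a value n is written as n ones followed by a terminating zero. A minimal sketch that reproduces only the rows shown above is given here; the function names are illustrative, and the sketch makes no attempt to cover signs or the handling of larger values in the standard.

```python
def binarize_unary(value: int) -> str:
    """Return `value` ones followed by a single terminating zero."""
    if value < 0:
        raise ValueError("this sketch handles only non-negative values")
    return "1" * value + "0"


def debinarize_unary(bins: str) -> int:
    """Invert binarize_unary by counting the ones before the first zero."""
    return bins.index("0")


# Reproduce the rows of the table above.
for mvdx in range(9):
    print(mvdx, binarize_unary(mvdx))
```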

2. Choose a context model for each bin. One of 3 models is selected for bin 1, based on previous coded MVD values. The L1 norm of two previously-coded values, ek, is calculated:

| ek | Context model for bin 1 |
|---|---|
| 0 ≤ ek < 3 | Model 0 |
| 3 ≤ ek < 33 | Model 1 |
| 33 ≤ ek | Model 2 |

If ek is small, then there is a high probability that the current MVD will have a small magnitude; conversely, if ek is large then it is more likely that the current MVD will have a large magnitude. We select a probability table (context model) accordingly. The remaining bins are coded using one of 4 further context models:

| Bin | Context model |
|---|---|
| 1 | 0, 1 or 2 depending on ek |
| 2 | 3 |
| 3 | 4 |
| 4 | 5 |
| 5 | 6 |
| 6 and higher | 6 |
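The two tables in this step can be combined into a single lookup. The sketch below assumes that ek is the sum of the absolute values of two previously coded MVD values (the L1 norm mentioned above) and that bins are numbered from 1; the function names are hypothetical.

```python
def ek_from_neighbours(mvd_a: int, mvd_b: int) -> int:
    """L1 norm of two previously coded MVD values."""
    return abs(mvd_a) + abs(mvd_b)


def context_for_bin(bin_index: int, ek: int) -> int:
    """Return the context model index for a 1-based bin position."""
    if bin_index < 1 or ek < 0:
        raise ValueError("bin_index must be >= 1 and ek must be >= 0")
    if bin_index == 1:
        # Bin 1 picks model 0, 1 or 2 from the magnitude of ek.
        if ek < 3:
            return 0
        if ek < 33:
            return 1
        return 2
    # Bins 2, 3 and 4 use models 3, 4 and 5; bins 5 and higher share model 6.
    return min(bin_index + 1, 6)


ek = ek_from_neighbours(-2, 1)
print([context_for_bin(i, ek) for i in range(1, 8)])
```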

3. Encode each bin. The selected context model supplies two probability estimates: the probability that the bin contains “1” and the probability that the bin contains “0”. These estimates determine the two sub-ranges that the arithmetic coder uses to encode the bin.
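How the two estimates split the coder's interval can be sketched with exact fractions. This is an idealized arithmetic coder with unlimited precision, not the finite-precision engine defined in the standard, and the probabilities below are made-up values standing in for whatever the selected context models would supply.

```python
from fractions import Fraction


def narrow_interval(low, width, bin_value, p_zero):
    """Shrink [low, low + width) to the sub-range assigned to bin_value.

    The "0" sub-range takes the lower p_zero share of the interval and
    the "1" sub-range takes the rest.
    """
    zero_width = width * p_zero
    if bin_value == 0:
        return low, zero_width
    return low + zero_width, width - zero_width


# Bins of MVDx = 2 ("110"), each paired with a hypothetical P(bin = 0).
bins_with_p_zero = [(1, Fraction(1, 4)), (1, Fraction(1, 4)), (0, Fraction(1, 2))]

low, width = Fraction(0), Fraction(1)
for bin_value, p_zero in bins_with_p_zero:
    low, width = narrow_interval(low, width, bin_value, p_zero)

print(low, width)
```

The final width equals the product of the probabilities of the coded bins, so likely bin values shrink the interval only slightly and cost few bits, while unlikely ones shrink it sharply.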

4. Update the context models. For example, if context model 2 was selected for bin 1 and the value of bin 1 was “0”, the frequency count of “0”s is incremented. This means that the next time this model is selected, the probability of a “0” will be slightly higher. When the total number of occurrences of a model exceeds a threshold value, the frequency counts for “0” and “1” will be scaled down, which in effect gives higher priority to recent observations.
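The count-based update described in this step might look like the sketch below. The class name, the initial counts of 1 and the threshold of 32 are assumptions chosen for illustration; the arithmetic coding engine in the standard tracks probability with a state machine rather than explicit counts.

```python
class CountingContext:
    """Context model that estimates P(0) and P(1) from frequency counts."""

    def __init__(self, limit: int = 32):
        self.counts = [1, 1]   # counts of "0" and "1"; start at 1 to avoid zero probability
        self.limit = limit     # hypothetical rescaling threshold

    def probability(self, bin_value: int) -> float:
        return self.counts[bin_value] / sum(self.counts)

    def update(self, bin_value: int) -> None:
        self.counts[bin_value] += 1
        if sum(self.counts) > self.limit:
            # Halving both counts keeps their ratio roughly the same while
            # letting new observations move the estimate more quickly.
            self.counts = [max(1, c // 2) for c in self.counts]


model = CountingContext()
for bin_value in (0, 0, 0, 1, 0):
    model.update(bin_value)
print(model.counts, round(model.probability(0), 3))
```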

The arithmetic decoding engine

The arithmetic decoder is described in some detail in the Standard. It has three distinct properties:

- Probability estimation is performed by a transition process between 64 separate probability states for the "Least Probable Symbol" (LPS, the less probable of the two binary decisions "0" or "1").

- The range R representing the current state of the arithmetic coder is quantized to a small range of pre-set values before calculating the new range at each step, making it possible to calculate the new range using a look-up table (i.e. multiplication-free).

- A simplified encoding and decoding process is defined for data symbols with a near uniform probability distribution.
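The first two properties can be sketched together as a table-driven range split. The sketch assumes a 9-bit range between 256 and 511 quantized into four cells by its two bits below the leading one, and 64 LPS probabilities that decay geometrically from 0.5 to 0.01875, as described by Marpe et al.[3] The table built here is an approximation computed from the lower bound of each cell; it is not the normative table from the standard, and all names are illustrative.

```python
NUM_STATES = 64
ALPHA = (0.01875 / 0.5) ** (1 / 63)                   # decay factor between states
P_LPS = [0.5 * ALPHA ** s for s in range(NUM_STATES)]

# Assumed representative range for each of the four quantization cells.
CELL_RANGES = [256, 320, 384, 448]

# Precomputed LPS sub-range for every (state, cell) pair. All multiplications
# happen here, once, so the coding loop never needs one.
RANGE_LPS = [[round(p * r) for r in CELL_RANGES] for p in P_LPS]


def split_range(rng: int, state: int):
    """Return (mps_range, lps_range) using a shift, a mask, a lookup and a subtraction."""
    if not 256 <= rng < 512:
        raise ValueError("range must be a normalized 9-bit value")
    cell = (rng >> 6) & 3
    lps_range = RANGE_LPS[state][cell]
    return rng - lps_range, lps_range


print(split_range(256, 0))
print(split_range(511, 0))
```

Because each cell stands in for 64 different range values, the LPS sub-range is only approximately proportional to the true range; that small loss of precision is the price of avoiding a multiplication per bin.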

The definition of the decoding process is designed to facilitate low-complexity implementations of arithmetic encoding and decoding. Overall, CABAC provides improved coding efficiency compared with CAVLC-based coding, at the expense of greater computational complexity.

History

In 1986, IBM researchers Kottappuram M. A. Mohiuddin and Jorma Johannes Rissanen filed a patent for a multiplication-free binary arithmetic coding algorithm.[4][5] In 1988, an IBM research team including R. B. Arps, T. K. Truong, D. J. Lu, W. B. Pennebaker, J. L. Mitchell and G. G. Langdon presented an adaptive binary arithmetic coding (ABAC) algorithm called the Q-Coder.[6][7]

The above patents and research papers, along with several others from IBM and Mitsubishi Electric, were later cited by the CCITT and the Joint Photographic Experts Group as the basis for the JPEG image compression format's adaptive binary arithmetic coding algorithm in 1992.[4] The JPEG file format has options for both Huffman encoding and arithmetic coding, but its encoders and decoders typically support only the Huffman option. This was originally because of patent concerns, although JPEG's arithmetic coding patents[8] have since expired due to the age of the JPEG standard.[9]

In 1999, Youngjun Yoo (Texas Instruments), Young Gap Kwon and Antonio Ortega (University of Southern California) presented a context-adaptive form of binary arithmetic coding.[10] The modern context-adaptive binary arithmetic coding (CABAC) algorithm was commercially introduced with the H.264/MPEG-4 AVC format in 2003.[11] The majority of patents for the AVC format are held by Panasonic, Godo Kaisha IP Bridge and LG Electronics.[12]

References

1. Richardson, Iain E. G. (17 October 2002). H.264 / MPEG-4 Part 10 White Paper.

2. Richardson, Iain E. G. (2003). H.264 and MPEG-4 Video Compression: Video Coding for Next-generation Multimedia. Chichester: John Wiley & Sons Ltd.

3. Marpe, D.; Schwarz, H.; Wiegand, T. (July 2003). "Context-Based Adaptive Binary Arithmetic Coding in the H.264/AVC Video Compression Standard". IEEE Transactions on Circuits and Systems for Video Technology. 13 (7): 620–636.

4. "T.81 – Digital Compression and Coding of Continuous-Tone Still Images – Requirements and Guidelines" (PDF). CCITT. September 1992. Retrieved 12 July 2019.

5. U.S. Patent 4,652,856

6. Arps, R. B.; Truong, T. K.; Lu, D. J.; Pasco, R. C.; Friedman, T. D. (November 1988). "A multi-purpose VLSI chip for adaptive data compression of bilevel images". IBM Journal of Research and Development. 32 (6): 775–795. doi:10.1147/rd.326.0775. ISSN 0018-8646.

7. Pennebaker, W. B.; Mitchell, J. L.; Langdon, G. G.; Arps, R. B. (November 1988). "An overview of the basic principles of the Q-Coder adaptive binary arithmetic coder". IBM Journal of Research and Development. 32 (6): 717–726. doi:10.1147/rd.326.0717. ISSN 0018-8646.

8. "Recommendation T.81 (1992) Corrigendum 1 (01/04)". Recommendation T.81 (1992). International Telecommunication Union. 9 November 2004. Retrieved 3 February 2011.

9. Pennebaker, W. B.; Mitchell, J. L. (1992). JPEG Still Image Data Compression Standard. Kluwer Academic Press. ISBN 0-442-01272-1.

10. Ortega, A. (October 1999). "Embedded image-domain compression using context models". Proceedings 1999 International Conference on Image Processing (Cat. 99CH36348). 1: 477–481. doi:10.1109/ICIP.1999.821655.

11. "Context-Based Adaptive Binary Arithmetic Coding (CABAC)". Fraunhofer Heinrich Hertz Institute. Retrieved 13 July 2019.

12. "AVC/H.264 – Patent List" (PDF). MPEG LA. Retrieved 6 July 2019.